Autopilot is becoming a top priority for Tesla. On September 26th, Tesla officially released software Version 10.0. At the same time, the Autopilot department is going through its first acquisition and restructuring since it was established.

By 2019, autonomous driving is no longer a hot topic for investors. As “commercialization” becomes the keyword, leading autonomous driving companies such as Waymo and GM Cruise have actively lowered their expectations and revised their commercialization timetables.

Tesla is the exception. On one hand, at the Autonomous Driving Investor Day on April 23rd, Tesla reiterated its plan to release autonomous driving functions by the end of 2019. On the other hand, after development of the technically demanding “Smart Summon” feature ran into difficulties, Elon Musk dismissed dozens of engineers from the Autopilot department and took personal charge of the project.

On October 1st, 2019, CNBC was the first to report that Tesla had acquired DeepScale, a start-up specializing in autonomous driving perception. With the autonomous driving industry in the middle of a cold winter, what kind of storm will this acquisition stir up?

Front Perception Fusion + Edge Computing = DeepScale

Asked about DeepScale’s advantages, CEO Forrest Iandola pointed to front fusion of perception data: “What we do is early fusion of the raw data, that is, fusion before object detection.” In fact, fusing raw data rather than object-level data for perception is DeepScale’s most distinctive advantage.

What is front fusion and what is back fusion?

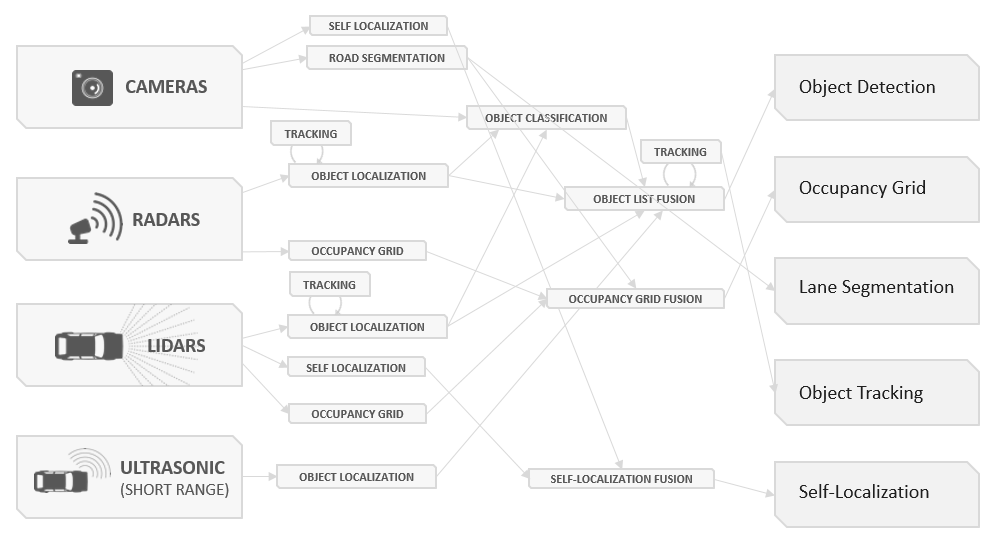

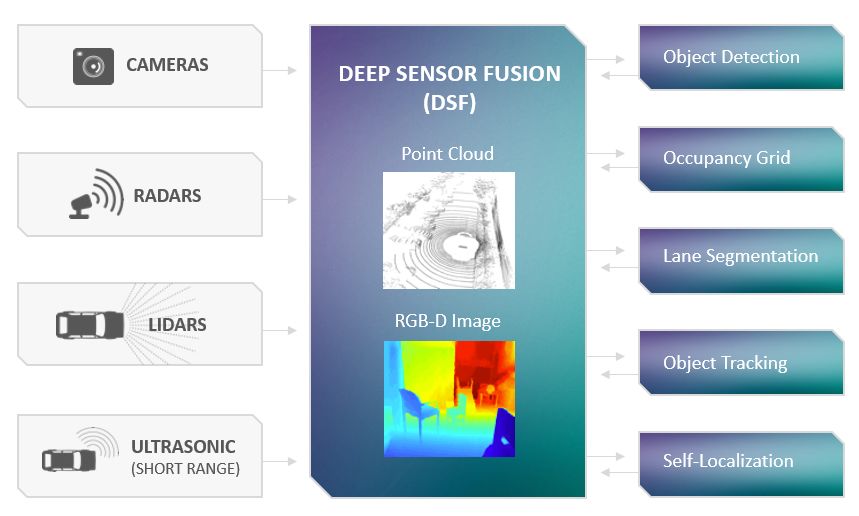

In back fusion, each sensor does its own job: the ultrasonic sensors, cameras, and millimeter-wave radar each perform perception independently and, after recognition, each produce their own information and object list (Objectlist). These object lists are then cross-checked and compared, and while the final object list is being generated, invalid or useless information is filtered out and duplicate objects are merged, completing the perception process.

Front fusion, by contrast, runs a single algorithm over all sensors, unifying the raw data from the ultrasonic sensors, cameras, and millimeter-wave radar. It is equivalent to one super sensor surrounding the entire car through 360 degrees, with one complex, precise super algorithm completing the whole perception process.

Note that LIDAR appears in neither sensor list: Tesla does not use LIDAR at all.
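To make the back fusion process more concrete, here is a minimal Python sketch of merging per-sensor object lists. It is an illustration under simplifying assumptions, not Tesla’s or DeepScale’s code: the `Detection` fields, the 0.5 confidence cutoff, and the 1 m association gate are all hypothetical, and the greedy distance gate stands in for the more elaborate association methods real systems use.

```python
from dataclasses import dataclass
import math

@dataclass
class Detection:
    """One entry in a sensor's object list (hypothetical fields)."""
    sensor: str
    x: float           # metres ahead of the car
    y: float           # metres to the left of the car
    confidence: float  # the sensor's own confidence in [0, 1]

def back_fusion_object_lists(object_lists, min_conf=0.5, gate_m=1.0):
    """Late (back) fusion over per-sensor object lists.

    Low-confidence entries are discarded, then each remaining detection is
    greedily attached to the first fused object within gate_m metres, or
    starts a new fused object if none is close enough.
    """
    groups = []  # each group holds the detections judged to be one object
    for detections in object_lists:
        for det in detections:
            if det.confidence < min_conf:
                continue  # filtered out as "invalid" before fusion
            for group in groups:
                anchor = group[0]
                if math.hypot(det.x - anchor.x, det.y - anchor.y) <= gate_m:
                    group.append(det)  # same object seen by another sensor
                    break
            else:
                groups.append([det])  # no match: a new object
    return groups

def summarize(group):
    """Average position of a fused object plus the sensors that saw it."""
    n = len(group)
    return (sum(d.x for d in group) / n,
            sum(d.y for d in group) / n,
            sorted(d.sensor for d in group))

# Hypothetical object lists from three sensors.
camera = [Detection("camera", 20.0, 0.5, 0.9), Detection("camera", 35.0, -2.0, 0.3)]
radar = [Detection("radar", 20.4, 0.3, 0.8)]
ultrasonic = []  # nothing within ultrasonic range
fused = [summarize(g) for g in back_fusion_object_lists([camera, radar, ultrasonic])]
print(fused)
```

Here the camera and radar detections near 20 m are merged into one object, while the low-confidence camera detection at 35 m never reaches the fusion stage. That discarded information is exactly what front fusion tries to keep.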

So what are the benefits of front fusion compared to back fusion?

First, processing all raw sensor data with one unified algorithm reduces the complexity and latency of the whole perception architecture. This matters greatly in driving, where computing power and power consumption are tightly constrained and real-time performance is critical.

Second, much of the information that back fusion filters out as invalid or useless can still be recognized on the front fusion route when it is fused with data from other sensors, producing more comprehensive and complete environment perception.

Forrest gave an example in an interview with EE Times: if the camera is facing direct sunlight, or the radar’s performance is badly degraded by heavy snow, the raw data from the two sensors will produce perception results that are very different or even conflicting. At that point, the system has to make a judgment.

Much of the information from individual sensors that counts as “ineffective” in back fusion becomes “effective” in front fusion: combined with data from other sensors, it helps “piece together” a complete object recognition, greatly improving the robustness of the perception system.
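The “piece together” effect can be illustrated with a toy example. In the sketch below, hypothetical raw evidence scores from two sensors sit on a shared 1-D grid of range cells that is assumed to be already aligned in time and space. A faint object is seen only weakly by both the glare-affected camera and the snow-degraded radar. Back fusion lets each sensor decide on its own, so the object is dropped; front fusion adds the raw evidence first and then detects once. Simple summation is an assumed stand-in for the learned fusion a real system would use.

```python
def detect(scores, threshold):
    """Indices of grid cells whose evidence score reaches the threshold."""
    return [i for i, s in enumerate(scores) if s >= threshold]

def back_fusion_cells(raw_by_sensor, threshold):
    """Back fusion: every sensor detects on its own, then the results are merged."""
    cells = set()
    for scores in raw_by_sensor.values():
        cells.update(detect(scores, threshold))
    return sorted(cells)

def front_fusion_cells(raw_by_sensor, threshold):
    """Front fusion: raw evidence is combined per cell first, then detected once."""
    n_cells = len(next(iter(raw_by_sensor.values())))
    combined = [sum(scores[i] for scores in raw_by_sensor.values())
                for i in range(n_cells)]
    return detect(combined, threshold)

# Hypothetical raw evidence over six range cells, assumed already aligned.
# Cell 1 holds an obvious object; cell 4 holds a faint one that the
# glare-affected camera and the snow-degraded radar each see only weakly.
raw = {
    "camera": [0.0, 0.9, 0.1, 0.0, 0.4, 0.0],
    "radar":  [0.0, 0.8, 0.0, 0.1, 0.4, 0.0],
}
print("back fusion: ", back_fusion_cells(raw, threshold=0.6))
print("front fusion:", front_fusion_cells(raw, threshold=0.6))
```

With these numbers, back fusion reports only cell 1, while front fusion also finds the faint object in cell 4. Reusing the same threshold for combined evidence is a simplification: noise also adds up across sensors, so a real system would need to tune it separately.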

How much does robustness improve? According to tests by one autonomous driving company under identical complex road conditions, if the back fusion model runs into extreme scenarios it cannot handle (corner cases) 1% of the time, the front fusion model’s rate can be as low as 0.01%. In other words, perception performance improves by two orders of magnitude.

Most people do not have a clear idea of how hard front fusion is. First, front fusion means developing all sensing technologies in-house and obtaining the raw data from every sensor, which rules out 99% of car companies. Most car companies simply buy complete solutions from suppliers (such as the well-known Mobileye) rather than developing from scratch.

Second, from a technical standpoint, synchronizing data from sensors with different resolutions in both time and space is very difficult. For example, the main sensor may sample only 30 times per second while another samples 40 times per second. Synchronizing the two with ultra-high precision in time and space takes a great deal of work: autonomous driving requires timing precision on the order of 10⁻⁶ seconds and angular precision of 0.015 degrees, which corresponds to an error of about 3 cm at a distance of 100 meters. According to Forrest’s earlier descriptions, DeepScale has largely solved this problem with its in-house time-series neural network, TSNN (Time Series Neural Network), used in conjunction with an RNN.
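The sketch below illustrates two pieces of the synchronization problem under simple assumptions. It resamples a hypothetical 40 Hz radar stream onto the timestamps of a 30 Hz camera using linear interpolation (a swapped-in textbook technique, not DeepScale’s TSNN approach), and it checks the spatial figure: an angular error of 0.015° at 100 m works out to about 2.6 cm, consistent with the roughly 3 cm quoted above. The function names and sample data are illustrative only.

```python
import bisect
import math

def interpolate_at(times, values, t):
    """Linearly interpolate a sampled signal at time t (times sorted ascending)."""
    i = bisect.bisect_left(times, t)
    if i == 0:
        return values[0]       # before the first sample: hold the first value
    if i == len(times):
        return values[-1]      # after the last sample: hold the last value
    t0, t1 = times[i - 1], times[i]
    v0, v1 = values[i - 1], values[i]
    w = (t - t0) / (t1 - t0)   # fractional position between the two samples
    return v0 + w * (v1 - v0)

def align_to_reference(ref_times, times, values):
    """Resample a secondary sensor stream onto the reference sensor's timestamps."""
    return [interpolate_at(times, values, t) for t in ref_times]

def lateral_error_m(range_m, angle_deg):
    """Lateral offset caused by an angular error at a given range (arc length)."""
    return range_m * math.radians(angle_deg)

# Hypothetical one-second streams: a 30 Hz camera and a 40 Hz radar.
camera_t = [k / 30 for k in range(30)]
radar_t = [k / 40 for k in range(40)]
# Hypothetical radar ranges for an object approaching at 10 m/s from 50 m.
radar_range = [50.0 - 10.0 * t for t in radar_t]

aligned = align_to_reference(camera_t, radar_t, radar_range)
print(aligned[:3])
print(f"{lateral_error_m(100.0, 0.015) * 100:.1f} cm at 100 m")
```

Linear interpolation is exact here only because the hypothetical object moves at constant speed; real motion and sensor latency are what make the problem hard enough to justify learned approaches.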

Because the front fusion route simplifies the structure of the perception algorithm, DeepScale’s software needs less computing power. According to the company, it achieves very high computational efficiency on a Qualcomm Snapdragon 820A while processing the raw data from 4 cameras and 1 millimeter-wave radar.

DeepScale claimed that its autonomous driving perception software, Carver 21, supports perception for automated driving and parking in highway scenarios, running three deep neural networks in parallel while consuming only about 0.6 TOPS of computing power. That is only 0.8% of the computing power of a single Tesla AP 3.0 chip (72 TOPS per chip).
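As a quick sanity check of the 0.8% figure, the ratio can be computed directly from the two numbers quoted above (0.6 TOPS for Carver 21 and 72 TOPS per AP 3.0 chip). The helper name is hypothetical.

```python
def share_of_budget(workload_tops, budget_tops):
    """Percentage of a compute budget used by a workload (both in TOPS)."""
    return 100.0 * workload_tops / budget_tops

carver_tops = 0.6      # figure quoted for Carver 21 (three networks in parallel)
ap3_chip_tops = 72.0   # per-chip figure quoted for the AP 3.0 chip
share = share_of_budget(carver_tops, ap3_chip_tops)
print(f"{share:.2f}% of one AP 3.0 chip")
```

The result, about 0.83%, rounds to the 0.8% cited in the text.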

Clearly, front fusion alone is far from enough to reach this level of efficiency with so little computing power and power consumption. DeepScale’s other secret weapon is redesigning its neural networks so that the perception system runs more efficiently without sacrificing performance.

Before founding DeepScale, Forrest and his advisor, Professor Kurt Keutzer, found that changing the underlying implementation, increasing parallelism, and changing the design of the neural network itself could speed up computer vision models and neural networks while reducing their memory and computing requirements.

The two went on to create SqueezeNet and SqueezeDet, both well known in the AI field. SqueezeNet targets image classification but can later be repurposed for other tasks. SqueezeDet, on the other hand, is designed specifically for object detection and performs impressively at understanding image content, recognizing objects, and locating them.
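Much of SqueezeNet’s saving comes from its “Fire module”, which first squeezes the input through a small number of 1x1 filters and then expands it with a mix of 1x1 and 3x3 filters. The sketch below counts convolution weights (biases ignored) for such a module versus a plain 3x3 convolution with the same input and output channels. The channel sizes (128 in, squeeze to 16, expand to 64 + 64) are assumed for illustration, chosen to resemble an early Fire module in the SqueezeNet paper.

```python
def conv_weights(in_ch, out_ch, k):
    """Weight count of a k x k convolution layer (biases ignored)."""
    return in_ch * out_ch * k * k

def fire_weights(in_ch, squeeze, expand1x1, expand3x3):
    """Weight count of a SqueezeNet-style Fire module: a 1x1 "squeeze" layer
    feeding parallel 1x1 and 3x3 "expand" layers whose outputs are concatenated."""
    return (conv_weights(in_ch, squeeze, 1)
            + conv_weights(squeeze, expand1x1, 1)
            + conv_weights(squeeze, expand3x3, 3))

# Assumed sizes: 128 input channels, 128 output channels (64 + 64 after concat).
plain = conv_weights(128, 128, 3)      # ordinary 3x3 convolution
fire = fire_weights(128, 16, 64, 64)   # squeeze to 16, expand to 64 + 64
ratio = plain / fire
print(plain, fire, ratio)
```

With these sizes the Fire module needs 12,288 weights against 147,456 for the plain convolution, 12 times fewer. Most of the saving comes from feeding the 3x3 filters only 16 channels instead of 128.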

This research also led to the establishment of DeepScale.

If you recall, Tesla CEO Elon Musk once stated that Autopilot’s L4-level functions for low-speed open scenarios, such as Smart Summon and automated parking, would be pushed to all AP 2+ models. Notably, the central computer in these models is a custom version of the Nvidia Drive PX2, which has only six video inputs and 8 to 10 TOPS of computing power, not enough to run all eight of Tesla’s cameras at full capacity.

Over the past few months, as the Smart Summon rollout was delayed several times, Tesla has no doubt been working hard internally to slim down its deep neural networks and improve perception performance. This is where DeepScale’s accumulated technology comes in handy, and from this perspective the acquisition is a perfect match.

In the AP 3.0 era, with abundant computing power and massive amounts of full-resolution image data from a fleet of hundreds of thousands of vehicles, DeepScale and the Autopilot team will have far more room to explore on the road to computer vision and AI-driven autonomous driving.

Why DeepScale?

In early 2017, Tesla mocked the bubble in the autonomous driving field in a lawsuit:

A small team of programmers can sell a (self-driving) demo of their software at a high price.

Frankly speaking, the autonomous driving bubble forced Tesla to raise the Autopilot team’s labor costs and inevitably hurt the team’s stability. Seen this way, it is no surprise that Elon Musk has long been wary of startups. Yet Tesla has now acquired one. So what exactly is DeepScale?

With US$18 million in funding and a valuation of over US$100 million, DeepScale hardly looks like a bubble in a field where billions of dollars are routinely spent. Considering that its team has fewer than 40 people, however, this small company was not cheap.

DeepScale’s team size after its US$18 million funding round.

This makes me cautiously suspect that Tesla’s purchase of DeepScale may be less a true acquisition than an acqui-hire, a common move in fiercely competitive technology fields.

An acqui-hire is when a large technology company buys a small company mainly to obtain its talent and intellectual property, which makes the whole process more cost-effective in highly competitive technical fields. Common examples include Waymo hiring 13 robotics experts from the shuttered robotics startup Anki, and Apple’s acquisition of Drive.ai, a self-driving startup valued at US$200 million, for less than US$77 million.

A LinkedIn search shows that while DeepScale’s software engineering team, led by CEO Forrest Iandola, joined Tesla as a group, members of DeepScale’s original marketing and business development (BD) teams have left one after another. Given Tesla’s penny-pinching financial habits and its refusal to disclose the terms of the deal, the acquisition is more likely an acqui-hire.

Looking at the acquisition again, then: besides the strong fit between Tesla and DeepScale in front fusion perception, fully in-house perception R&D, end-to-end neural networks, and high efficiency at low power consumption, the cost-effectiveness of an acqui-hire was also a key factor.

Finally, let’s talk about Autopilot. At the Autonomous Driving Investor Day on April 23, Elon Musk stated clearly that Tesla’s two most important strategic priorities are its autonomous driving timeline and expanding battery production capacity while reducing the cost per kilowatt-hour.

This means that Autopilot will keep making frequent moves for the foreseeable future. With the entire industry stagnating, Tesla Autopilot has become the most noteworthy player in autonomous driving.

* Tesla’s Lonely Journey of Linux: Version 10.0 Update

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.