Author: Michelin

Whether it’s adding animations to WHUD or showing the left and right eyes different images to create a stereoscopic effect, these are stopgaps for an AR HUD technology that is not yet mature, more like “meal substitutes” before AR HUD comes of age…

When it comes to in-car HUDs, opinions are always divided. Fans say it brings fighter-jet-like driving immersion, while detractors call it a waste of money. Some like the high-tech feel it creates, while others think the mass of information in front of the windshield obstructs the driver’s view. Some complain it is not clear enough in daylight, while others dislike its glaring colors at night…

Frankly, these “criticisms” are somewhat unfair to HUD.

Although this technology, which originated in fighter jets, was introduced into cars as early as 1988, it is only in the past few years, as intelligent cars have hit the road, that HUD has truly evolved: from the CHUD (combiner HUD), which requires a separate transparent resin plate, to the WHUD (windshield HUD), which uses the windshield as its screen, and now to the AR HUD with better imaging.

Despite the controversy, HUD keeps gaining ground in intelligent cars: once an option reserved for high-end models, it is gradually moving into mid- and low-end ones. In the recently released Ideal L9, the HUD even replaces the instrument cluster outright and serves as the primary information display. As HUD makes its way into the so-called “sinking market” of lower-tier cities, its effects are also improving, with larger images, better imaging, and more driver-assistance information on display… But for the top players in this field, AR HUD is clearly the common goal.

CES has long been a barometer of advanced technology and a place to learn about the latest advances in AR HUD. With auto shows being postponed, however, it has become harder to keep up with the latest developments among HUD players, and most of us can only catch a glimpse through mass-produced cars already on the market.

To this end, we reached out to an old friend of GeekCar, Beijing-based FUTURUS Future Black Technology. One of the pioneers of HUD in China, FUTURUS officially partnered with BMW in 2018 and is also the core HUD supplier for a domestic new energy vehicle manufacturer. Over the course of our conversation with FUTURUS, the “AR HUD” we had experienced countless times over the past year gradually began to seem unfamiliar, and we came away with new insights.

## Is your AR HUD true “AR”?

Many people have called 2021 the “year of AR HUD”.

What is AR HUD? Its predecessor, WHUD, uses the windshield as a screen and projects the HUD virtual image to a fixed position in front of the windshield. While driving, the driver can see instrument information such as speed, fuel consumption, navigation, and driver assistance directly, without repeatedly lowering or turning their head to check the instrument cluster and center screen. AR HUD builds on WHUD and “AR-izes” this information by fusing the HUD virtual image with the real road scene.

For the HUD image to blend with the real scene, it needs depth: a marker on a vehicle 5 meters ahead should appear to be 5 meters away, and a marker on a lane line 10 meters ahead should appear to be 10 meters away. Presenting a three-dimensional virtual image that keeps pace with the eye’s continuous refocusing, and so fuses the virtual with the real, can be called a prerequisite for an AR HUD.

In 2021, many models launched an “AR HUD” feature, including the Mercedes-Benz S-Class sedan, the Volkswagen ID. series, the Hongqi E-HS9, and the Great Wall WEY Mocha, and they appear to have been quite popular. These products marketed as “AR HUD” have indeed improved the visuals, with larger and clearer images, longer imaging distances, and in some cases a sense of motion, but the actual experience is still unsatisfactory.

It is not that users are too picky, but that human eyes are too sharp.

These “AR HUD” products use single-layer or dual-layer 2D displays at short imaging distances. To users, whether the image is 2D or 3D may seem unimportant as long as it works well. The problem is that it doesn’t work well.

It is like the naked-eye 3D pictures we used to play with as students. Today’s “AR HUD” mainly shows the left and right eyes different images to trick the brain into perceiving a stereoscopic effect.

However, not everyone can easily see naked-eye 3D images, and because people’s eyes differ, not everyone can be “tricked” into seeing the stereo effect. Add the shaking of the vehicle while driving, and such an “AR HUD” can deliver a poor experience, even leaving people dizzy and nauseous.
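To make the left/right-image trick concrete, here is a minimal geometric sketch. It assumes a simple model with pinhole eyes, a flat virtual image plane straight ahead, an interpupillary distance of 63 mm, and a virtual image distance of 7.5 m; these numbers and the function names are illustrative assumptions, not parameters of any product mentioned in this article. It computes how far apart the two eyes’ images must be drawn on the image plane for a marker to appear at a chosen depth, and the gap between where the eyes converge and where they must focus, one commonly cited explanation for the eye strain and dizziness described above.

```python
"""Binocular-disparity sketch for a stereoscopic HUD (illustrative assumptions only)."""

IPD_M = 0.063          # assumed interpupillary distance (~63 mm adult average)
IMAGE_PLANE_M = 7.5    # hypothetical virtual image distance of the HUD


def screen_disparity_m(ipd_m: float, image_dist_m: float, target_dist_m: float) -> float:
    """Horizontal separation (right-eye image minus left-eye image), measured on the
    virtual image plane, that makes a point appear at target_dist_m.

    By similar triangles, each eye's ray to a target straight ahead crosses the
    image plane at -/+ ipd/2 * (1 - image_dist / target_dist) for the left/right eye.
    Positive = uncrossed disparity (target behind the plane),
    negative = crossed disparity (target in front of the plane).
    """
    if min(ipd_m, image_dist_m, target_dist_m) <= 0:
        raise ValueError("all distances must be positive")
    return ipd_m * (1.0 - image_dist_m / target_dist_m)


def focus_mismatch_diopters(image_dist_m: float, target_dist_m: float) -> float:
    """Gap between where the eyes converge (the target) and where they must
    focus (the fixed image plane), in diopters (1/m)."""
    if min(image_dist_m, target_dist_m) <= 0:
        raise ValueError("all distances must be positive")
    return abs(1.0 / image_dist_m - 1.0 / target_dist_m)


if __name__ == "__main__":
    for target in (5.0, 7.5, 10.0, 50.0):
        d_mm = screen_disparity_m(IPD_M, IMAGE_PLANE_M, target) * 1000.0
        mismatch = focus_mismatch_diopters(IMAGE_PLANE_M, target)
        print(f"target {target:5.1f} m: disparity {d_mm:+7.2f} mm, "
              f"focus mismatch {mismatch:.3f} D")
```

Under these assumptions, a marker meant to sit on a car 5 m ahead needs a crossed disparity of about 31.5 mm on the 7.5 m image plane, while the eyes still have to focus at 7.5 m. Only a target exactly on the image plane is free of this conflict; the farther the target depth is from the plane, the more the two depth cues disagree.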

Even if the dizziness problem is solved, perfectly integrating 2D images with the 3D world remains an insurmountable problem.

For example, when the AR HUD works with ACC (adaptive cruise control), it needs to track the position of the vehicle ahead. As that vehicle moves farther away, the marker indicating its position must be pushed farther away accordingly. To create this “simulated tracking” effect, some “AR HUDs” resort to animation.

The AR HUD of the Mercedes-Benz S-Class uses 2D animation to give the guidance arrow a sense of motion, making the image look highly stereoscopic. However, while animation can simulate motion, it cannot achieve integration with the environment. The S-Class therefore simply gave up on fusing the virtual image with its surroundings and lets the AR HUD’s virtual information “drift” on its own in front of the windshield.

Another drawback of current “AR HUDs” is size: to enlarge the field of view and the image on existing flat displays, the unit has to be very large. The Mercedes-Benz S-Class, which currently delivers the best effect among them, carries an “AR HUD” unit with a volume of 27 L. That size, and the cost that comes with it, makes many affordable models hesitate.

So whether it is adding animation to a WHUD or showing the left and right eyes different images to trick the brain into seeing depth, these are all stopgaps while AR HUD technology is still immature, more like “meal substitutes” before AR HUD matures.

On the one hand, these technologies familiarize consumers with the concept of AR HUD and help them accept it; on the other hand, the industry is accelerating R&D on the “real AR HUD”, so that virtual images become three-dimensional and merge with reality. The images would no longer “drift” in front of the vehicle but “grow” on the objects ahead that need to be tracked and recognized, which would naturally avoid visual fatigue, dizziness, and interference with driving.

## What is slowing AR HUD down?

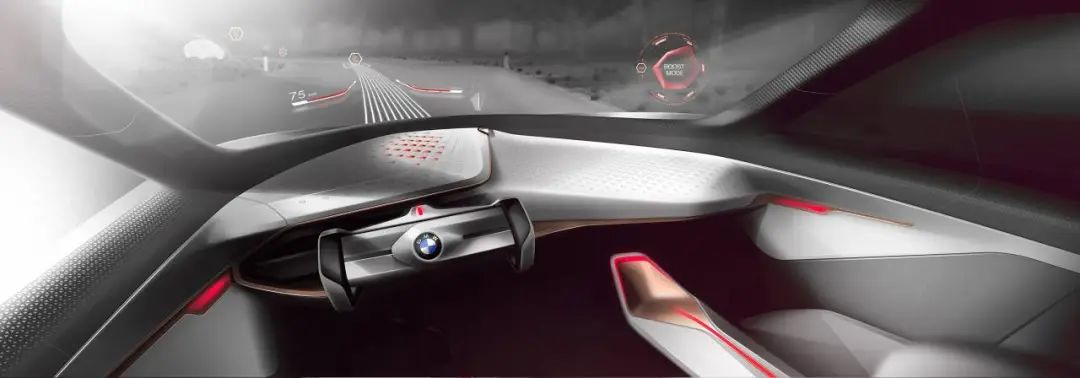

If HUDs have been around for more than 30 years, the AR HUD concept is not new either. As early as 2016, the BMW VISION NEXT 100 concept car showed an early form of it.

A few years ago, the industry optimistically expected AR HUD to reach production cars by 2022. Yet although many so-called “AR HUD” products have launched, mass-produced cars that truly deliver AR effects are clearly still a long way off. Otherwise, suppliers would not be rushing “meal substitutes” to market.

Wei Hongxuan, co-founder of FUTURUS Future Black Technology, put it this way: “HUD technology is already mature, but AR HUD is still far from mature.”

So what makes progress on AR HUD so difficult?

### Technical threshold: virtual imaging and the fusion of virtual and real scenes

According to Xu Junfeng, founder and CEO of FUTURUS Future Black Technology, the hardest parts of AR HUD are presenting the virtual world through 3D light field display technology and fusing the virtual and real scenes in real time.

Unlike today’s 2D “AR HUDs”, a true AR HUD must create a three-dimensional display in front of the driver’s line of sight and present the virtual image stereoscopically, like a hologram. This has become the focus of the leading players in the HUD field.

For example, Continental, a traditional HUD supplier, invested in the start-up DigiLens, which focuses on holographic waveguide technology and creates a three-dimensional image on a principle similar to that of a holographic sight. Panasonic and the HUD start-up WayRay both chose laser holographic projection to achieve stereoscopic virtual images with continuously variable focus.

In AR, Apple, which has long been working in the field, has shown in published patents that it intends to use light field HUD technology to produce 3D images. Like Apple, FUTURUS, a Chinese company, also takes the light field AR HUD route, and it filed relevant patents as early as 2016, ahead of Apple. The light field AR HUD that FUTURUS plans to show at the Beijing Auto Show may be the closest thing to a “true AR” HUD that we can see up close.

In short, the race to build a true AR HUD is fierce, with everyone trying to gain an edge.

Beyond creating a three-dimensional virtual image, there are further challenges, such as latency and jitter when fusing the real and the virtual. This fusion has to happen not only in space but also in time.

For spatial fusion, FUTURUS uses its in-house image correction, data compensation, and 3D rendering technologies to present HUD virtual images and information about the surroundings in 3D and place them at the corresponding positions in space. When the virtual image fuses perfectly with the real scene, the HUD graphics appear to “grow” out of the environment, free of any sense of disharmony or unreality.

In terms of time, the AR HUD must display information in real time. This matters especially when it is connected to navigation and ADAS, which adds route guidance, lane-keeping warnings, forward obstacle warnings, and more. The HUD’s virtual images must stay synchronized in real time with the vehicle’s sensors, processors, vehicle data, and surroundings, keeping latency within a millisecond-level range that the human eye cannot perceive.
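A rough worked example shows why the latency budget matters. The sketch below assumes a straight road, a stationary target such as a lane marking, constant speed, and no motion compensation, and it ignores angular effects; the speeds and latencies are illustrative values, not measurements from FUTURUS or any other supplier. It computes how far the car travels during the display latency, that is, roughly how far an uncompensated marker would be offset from its real-world target.

```python
"""Worked example: how far an uncompensated AR marker is offset at a given latency.

Simplifying assumptions (illustrative only): straight road, stationary target,
constant speed, and no prediction or motion compensation in the HUD pipeline.
"""


def travel_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance the car covers between sensing the scene and showing the marker."""
    if speed_kmh < 0 or latency_ms < 0:
        raise ValueError("speed and latency must be non-negative")
    speed_m_per_s = speed_kmh / 3.6              # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000.0   # ms -> s, then distance in m


if __name__ == "__main__":
    for speed in (60.0, 120.0):
        for latency in (10.0, 50.0, 100.0):
            offset = travel_during_latency_m(speed, latency)
            print(f"{speed:5.0f} km/h, {latency:5.0f} ms -> marker offset {offset:.2f} m")
```

At 120 km/h, 100 ms of latency already shifts a marker by about 3.3 m, while 10 ms keeps the offset to about 0.33 m. This is why latency has to be kept very low, or compensated by predicting the vehicle’s motion, before the graphics can appear to sit on real objects.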

Of course, beyond the core hurdles of processing virtual images and fusing the real and virtual worlds, AR HUD must also deal with many problems that earlier HUDs faced. One is the choice among PGU (picture generation unit) technology routes, which determines imaging quality and direct cost but depends heavily on upstream suppliers. The core component, the curved optical mirror, demands extremely high precision, which tests the upstream suppliers of AR HUD makers.

For HUD suppliers and start-up companies, what is the milestone moment?

“Going into mass production and getting onto cars,” Wei Hongxuan replied. In his view, bringing a HUD into a production car is a process of searching for a “suboptimal” solution, the best compromise under real-world constraints. As a product built around optical design, a HUD is at least as precise and complex as a camera, and even slight structural adjustments affect the final result. This makes getting a HUD into production no less demanding than the early R&D.

Nippon Seiki, which holds about half of the HUD market, once revealed that it began building the HUD for the Mercedes-Benz S-Class in 2018. It took nearly three years from the start of the project to the S-Class’s mass production at the end of 2020, and that was with Nippon Seiki’s years of mass-production experience in traditional WHUD behind it.

Similarly, the HUD of one Chinese “new force” carmaker took as long as four years from planning to mass production. During that time, every minor change to the car’s design and every change in the windshield angle required a matching adjustment to the HUD’s optical path design, especially the core curved mirror, and every change in the layout of the vehicle’s sensors required a matching adjustment to the HUD’s data interfaces. As a result, neither HUD nor AR HUD can be standardized or modularized.

This need for customization holds back the final step of getting AR HUD into cars.

Chinese and foreign companies give similar estimates for when a real AR HUD will arrive. According to FUTURUS, it will take about three years from this year’s unveiling of its light field AR HUD to its use in production vehicles, that is, 2025. Likewise, Panasonic, which showcased its laser holographic projection AR HUD at CES 2022, also expects the product to reach cars in 2025.

So although many people regard 2021 as the first year of AR HUD, it will still be several years before we can use a real one.

## Where does AR HUD go once humans no longer need to drive?

Whether it is the HUD in the cockpit of a fighter or the HUD on an intelligent car today, its greatest role is to present key information in front of one’s eyes and reduce the risk of accidents caused by brief gaze deviation when looking down at the dashboard.

The development of AR HUD and that of autonomous driving may seem to be at odds: if fully autonomous driving is achieved someday and people no longer need to drive, will we still need AR HUD? What would it be for?

The “first principles” thinking Musk often mentions also applies to HUD. From CHUD to WHUD and on to the future AR HUD, the form and effect differ, but the mission stays the same: to serve as a display carrier in the cabin, make things easier for people, and meet their interaction needs.

So as autonomous driving gradually frees the driver and people’s needs change, the role of HUD will naturally change too.

### Information simulator stage: driving safety comes first

At present, humans have absolute control over driving, and the role of intelligent driving systems is to provide warnings and assistive interventions in special situations. The mission of HUD at this stage is to display necessary driving information in an intuitive and non-interfering way, making driving safer.

Today’s HUD is therefore more like an information simulator: it shows instrument information such as speed and the speed limit, along with navigation and some ADAS information to assist driving.

For example, an AR HUD combined with an LDW (lane departure warning) system and ACC (adaptive cruise control) can mark the lane ahead and warn the driver when the car drifts out of it, and mark the vehicle ahead while ACC adjusts the following distance according to how close it is. In the Ideal L9, the HUD is no longer an optional backup for instrument information; it replaces the physical instrument panel outright.

### Intelligent system visualization stage: building trust between people and systems

As vehicle intelligence improves, more and more scenarios can be handled independently by intelligent systems, which hand control back to people only when certain conditions are triggered. This is the so-called L3 stage of autonomous driving. Although today’s navigation-assisted driving features cannot strictly be called L3 autonomous driving, they face the same problem: when should the car be under the system’s control, and when under the human’s? When the system runs into trouble, how can we as drivers know in time that we need to take over?

This requires a visual medium between people and cars that displays the system’s status in real time. Has the system detected the red light ahead? Has it spotted a bicycle that suddenly cut in? Is it unable to handle a sharp turn and needs a human to take over? With AR HUD visualization, the system’s status is clear at a glance, and trust between people and systems is built through this process of mutual understanding.

### The era of self-driving cars: a “window” between the cabin and the outside world

When fully automated driving arrives, instrument information, assistance warnings, and system status may matter less, because we will fully trust the vehicle’s decisions. Entertainment functions and screens in the cabin will multiply, and the car will become a truly mobile “third space”.

However many screens the cabin has, the windows remain the natural channel between the people inside the car and the outside world, just as a large LCD TV in the living room cannot replace our longing for the scenery outside the window. It is then only logical to use the car windows as natural screens that project information and point out nearby cafes, restaurants, and shopping malls.

Once car windows become the window between the inside and outside of the car, people’s interaction with the world will no longer be confined to flat screens. The depth of AR HUD will create an immersive experience, so that people inside are no longer trapped by the car and its screens.

## Finally

If the HUD market was dominated by foreign companies in the WHUD era, the AR HUD, with the richer capabilities that vehicle intelligence brings, puts everyone back at the same starting line, with Chinese start-ups and traditional suppliers advancing side by side.

Today’s underwhelming AR HUDs seem to be an inevitable, awkward stage in the evolution of the HUD industry. We cannot write off AR HUD itself because of them, but we do hope true AR HUDs arrive soon. Picture Tom Cruise driving the BMW Vision EfficientDynamics concept car in “Mission: Impossible – Ghost Protocol”, loading a navigation map onto the windshield and switching screens at will.

Do you look forward to this future of intelligent cars?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.