Since last year, HoloMatic has held a brand day every quarter to share the company’s progress in the field of technology. However, starting from the fourth quarter, HoloMatic officially renamed its brand day as AI Day, and this name will be used going forward.

It’s important to note that, apart from HoloMatic, the only other company globally to host a sharing event named AI Day is Tesla, on the other side of the Pacific Ocean. So, let’s take a look at the content released during the event to see if HoloMatic has any real technology to showcase.

As usual, the company opened the event by reviewing its achievements to date and outlining its plans for the future.

Since the HoloMatic HWA high-speed intelligent driving system launched on the Weipai Mocha in May 2021, HoloMatic’s intelligent driving products have been installed on five vehicle models, including the Tank 300 City Version and the Haval Shenshou.

In November last year, HoloMatic officially launched the high-speed NOH system, and the Weipai Mocha equipped with it became the world’s first fuel-powered car with navigation-assisted intelligent driving.

As of April this year, users of the HoloMatic HWA high-speed intelligent driving system have driven a total of more than 7 million kilometers. HoloMatic’s long-term goal is to have the system pre-installed on one million vehicles within the next three years.

Three major campaigns

Data intelligence technology campaign

At today’s AI Day, HoloMatic Chairman Zhang Kai stated that in 2022 the company will fight three major campaigns: the technology campaign for data intelligence, the scenario campaign for intelligent driving, and the scale campaign for autonomous last-mile logistics delivery vehicles.

In terms of data, HoloMatic officially released the MANA data intelligence system at last year’s AI Day. MANA is a set of data processing tools based on massive data, including algorithm models, test verification systems, simulation tools, and computing hardware. In short, this system can transform data into knowledge and ultimately optimize product performance.

Here, let’s briefly review how HoloMatic introduced the MANA data intelligence system at last year’s AI Day.

The origin of MANA

Good autonomous driving requires accumulating large amounts of data. For HoloMatic, collecting data is not the issue; the problem is how to make the best use of it.

MANA consists of four major components: the BASE underlying system, the TARS data prototype system, the LUCAS data generalization system, and the VENUS data visualization platform.

The BASE underlying system covers data storage, transmission, computation, analysis, and data services. TARS holds the core algorithm prototypes, including perception, cognition, on-board mapping, and verification. LUCAS covers the practical application of algorithms in real scenarios, including high-performance computing, diagnosis, validation, and core capability transformation. The VENUS visualization platform provides scenario reconstruction and data insight capabilities.

These terms are admittedly obscure and take some effort to understand. To sum up in the words of Gu Weihao, the “ideological steel stamp” of data intelligence is to iterate products at lower cost and higher speed, and to provide customers with safe, easy-to-use products.

Now, three months later, MANA’s perception ability has improved significantly, thanks to the exponential growth of chip computing power, the emergence of cross-modal Transformer models, and the rapid increase in camera resolution. Improvements in hardware are driving improvements in software capability.

All of this technology is developed to solve the scenario problems encountered in real driving.

Evolution of Perception

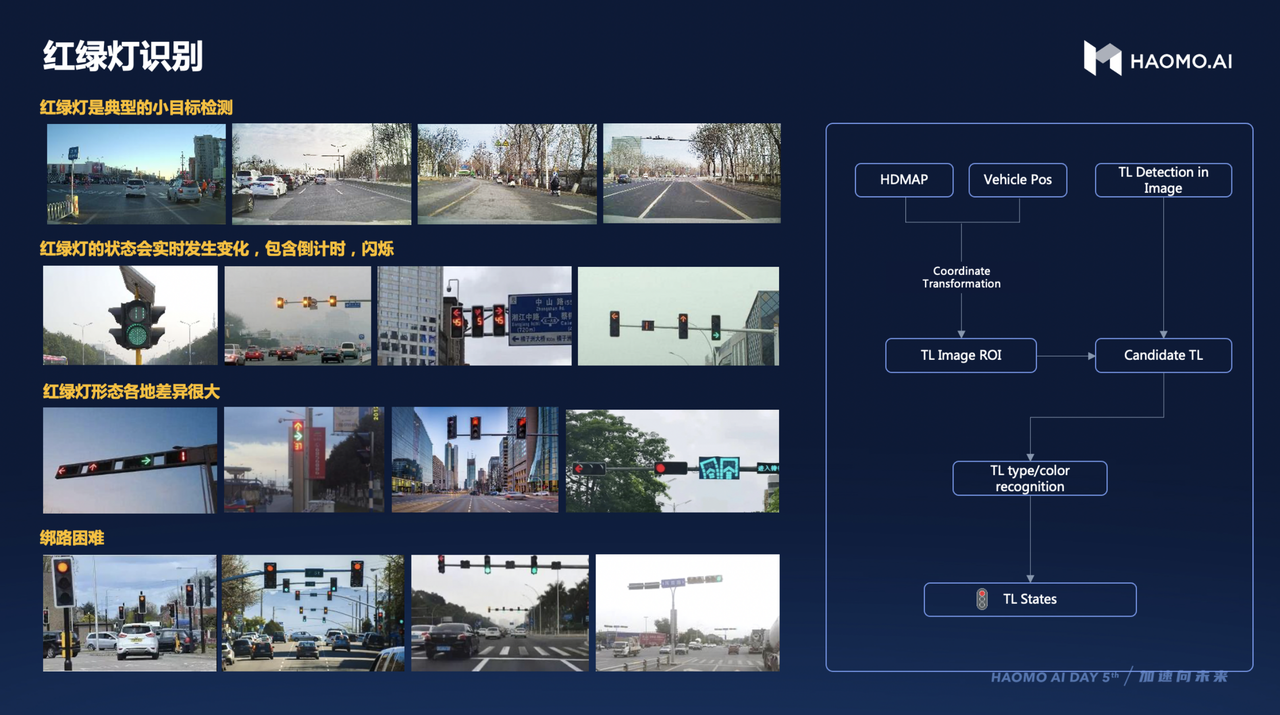

On urban roads, the most common scenario is the intersection with traffic lights. Traffic lights are difficult because the detection targets are small, their states change (flickering, for example), and their shapes differ from region to region. The most complex part is the “lane binding” problem: different traffic lights correspond to different lanes. Even human drivers sometimes hesitate in unusual situations, let alone machines.

Therefore, for this scenario, HoloMatic trains on a large amount of data and speeds up iteration through image synthesis and transfer learning.

To expand the sample size, HoloMatic uses a large number of simulation environments to make up for the shortage of real-world samples.

There are two problems with simulated synthetic images. The first is that their features are not realistic: outdoor traffic lights, for example, are constantly exposed to rain and sun, so damage and dust are common. The second is that even if a synthetic image reproduces the real scene perfectly, its probability distribution may still differ from that of real data. HoloMatic’s researchers therefore need to reduce the gap between synthetic and real data as far as possible in order to get the most out of synthetic data.

Hybrid transfer learning is HoloMatic’s approach here: use synthetic data to make up for the lack of real data, and continuously adjust the training strategy to reduce the difference in probability distribution between the two types of data.
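To make the idea of mixing the two data sources more concrete, here is a minimal Python sketch. It is not HoloMatic’s method: the function names, the feature-mean gap used as a crude stand-in for a distribution difference, and the tolerance and step values are all assumptions made for illustration.

```python
import random
from statistics import mean


def mixed_batch(real, synthetic, synthetic_ratio, batch_size, rng):
    """Draw a training batch in which about `synthetic_ratio` of samples are synthetic."""
    n_syn = round(batch_size * synthetic_ratio)
    n_real = batch_size - n_syn
    batch = rng.choices(synthetic, k=n_syn) + rng.choices(real, k=n_real)
    rng.shuffle(batch)
    return batch


def feature_mean_gap(real, synthetic):
    """Hypothetical gap metric: mean absolute difference of per-dimension feature means."""
    dims = len(real[0])
    gaps = [
        abs(mean(x[d] for x in real) - mean(x[d] for x in synthetic))
        for d in range(dims)
    ]
    return mean(gaps)


def next_ratio(ratio, gap, tolerance=0.1, step=0.1):
    """Assumed schedule: use less synthetic data while the gap is large, more once it shrinks."""
    if gap > tolerance:
        return max(0.0, ratio - step)
    return min(1.0, ratio + step)
```

In a training loop, one might recompute the gap on features from the current model after each epoch and feed it to `next_ratio` to decide how much synthetic data the next epoch should see.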

With a sufficient data foundation in place, HoloMatic began trying to recognize real-world traffic lights.

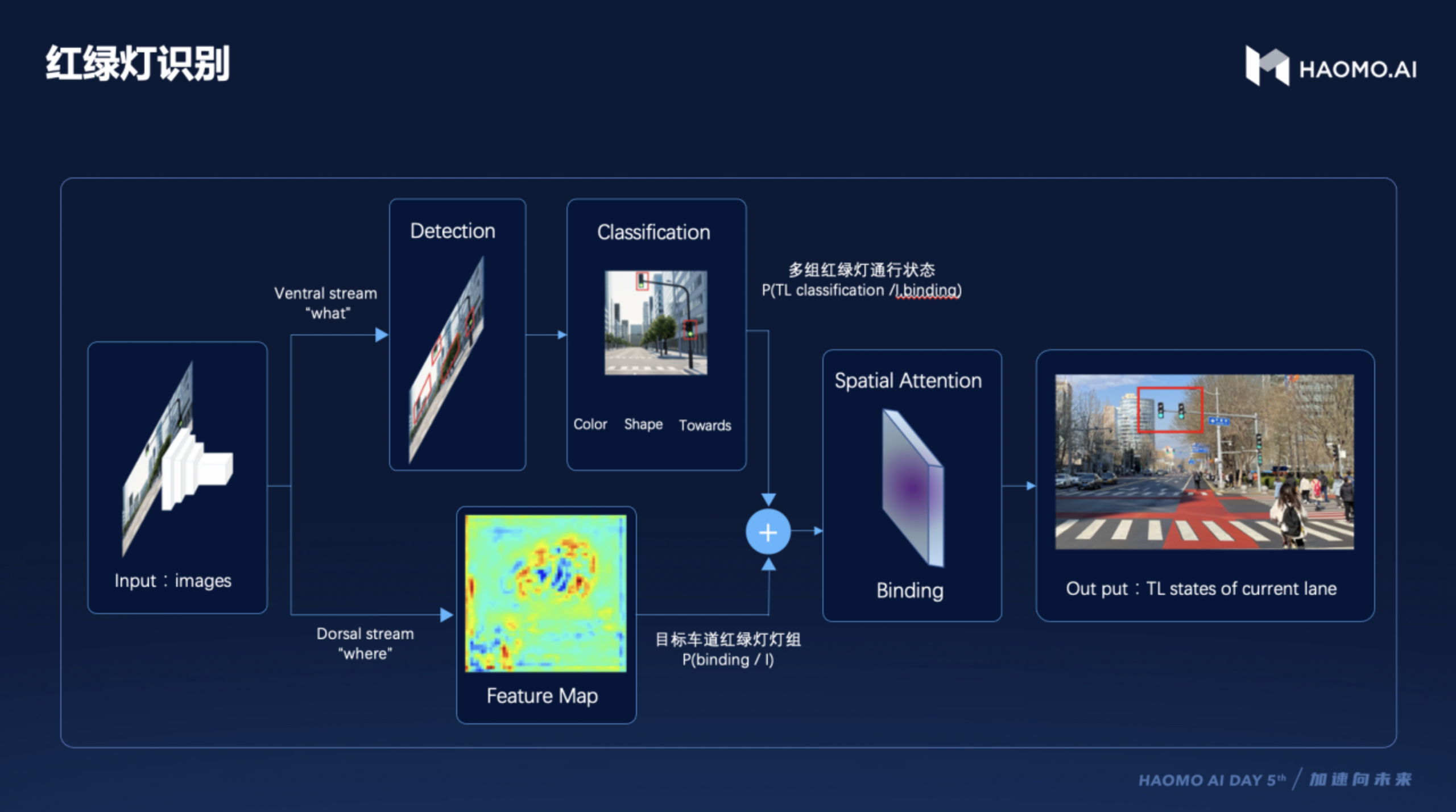

To address traffic light recognition and lane binding, HoloMatic has developed a Dual-Stream Perception Model, which splits traffic light detection and lane binding into two channels: one for object detection (“what”), and the other for understanding spatial and positional relationships (“where”).

The “what” channel is mainly responsible for recognizing traffic lights, including detecting them and classifying them by color, shape, and orientation. The “where” channel is mainly responsible for binding traffic lights to the target lane.
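The division of labor between the two channels can be sketched in a few lines of Python. This is only an illustration of the “what” and “where” split: the class, the function names, and the idea of a per-lane binding score table are assumptions, and both channels are stubbed with ready-made outputs rather than real neural networks.

```python
from dataclasses import dataclass


@dataclass
class LightDetection:
    light_id: int
    color: str  # e.g. "red", "green", "yellow"
    shape: str  # e.g. "round", "left_arrow"


def what_stream(raw_detections):
    """'What' channel (stub): turn (color, shape) pairs into classified detections."""
    return [
        LightDetection(i, color, shape)
        for i, (color, shape) in enumerate(raw_detections)
    ]


def where_stream(binding_scores, target_lane):
    """'Where' channel (stub): index of the light with the highest binding score for the lane."""
    scores = binding_scores[target_lane]
    return max(range(len(scores)), key=lambda i: scores[i])


def light_for_lane(raw_detections, binding_scores, target_lane):
    """Combine both channels: which light governs the lane, and what state it is in."""
    lights = what_stream(raw_detections)
    return lights[where_stream(binding_scores, target_lane)]
```

For example, with two detected lights and a score table such as `{"left_turn": [0.9, 0.1], "straight": [0.2, 0.8]}`, the left-turn lane is bound to the first light and the straight lane to the second, regardless of how each light was classified.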

Currently, most advanced driver assistance systems rely on lane tracking. HoloMatic uses a BEV Transformer to identify lane markings. After images are acquired from the cameras around the vehicle, they first go through 2D image processing with ResNet + FPN and are then mapped into the bird’s-eye view (BEV mapping). This step uses cross-attention to dynamically determine where the content of each camera frame falls in the bird’s-eye-view space. By applying multiple cross-attention operations, a complete BEV space is finally formed.
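For readers unfamiliar with cross-attention, the following pure-Python sketch shows the basic operation: each BEV grid cell issues a query, and the answer is a weighted mix of image features. It is a generic single-head scaled dot-product attention, not HoloMatic’s implementation; the learned projection matrices of a real Transformer are omitted, and all names are illustrative.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention.

    queries: one vector per BEV grid cell.
    keys, values: one vector per image feature location.
    Returns one fused feature vector per BEV grid cell.
    """
    scale = math.sqrt(len(keys[0]))
    fused = []
    for q in queries:
        weights = softmax([dot(q, k) / scale for k in keys])
        fused.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return fused
```

A query that matches one key much more strongly than the others pulls most of its output from the corresponding value, which is how a BEV cell can “look at” the image region that actually covers it.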

However, at this stage the perceived information still lacks “contextual association”. Historical bird’s-eye views and other time-related features therefore need to be added so that the system can correlate earlier and later frames, which further improves the accuracy of perception.
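One simple way to picture adding historical bird’s-eye views is a short buffer of past BEV frames whose per-cell features are concatenated with the current frame. The sketch below assumes exactly that; the `BevHistory` class is hypothetical, and it ignores steps a real system would likely need, such as aligning past frames to the vehicle’s current position.

```python
from collections import deque


class BevHistory:
    """Keeps the most recent BEV frames and fuses them cell by cell (illustrative only)."""

    def __init__(self, max_frames):
        # Oldest frames are dropped automatically once max_frames is reached.
        self.frames = deque(maxlen=max_frames)

    def push(self, bev):
        """Store one BEV frame: a list of per-cell feature vectors."""
        self.frames.append(bev)

    def fused(self):
        """Concatenate each cell's features across stored frames, oldest first."""
        if not self.frames:
            return []
        n_cells = len(self.frames[0])
        return [
            [f for frame in self.frames for f in frame[c]]
            for c in range(n_cells)
        ]
```

With a two-frame buffer, each cell’s fused vector holds its previous features followed by its current ones, giving a downstream model some basic temporal context.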

Cognitive Evolution of MANA

Before discussing cognitive evolution, I will first explain what “cognition” means in simpler terms.

Perception is easy to understand: it is what a vehicle sees, and this depends on the quality of its hardware. However, “cognition” depends on the code written by engineers.

Cognition is not an objective fact, but rather a social convention.

Here, Gu Weihao used a scenario to explain the importance of cognitive evolution.

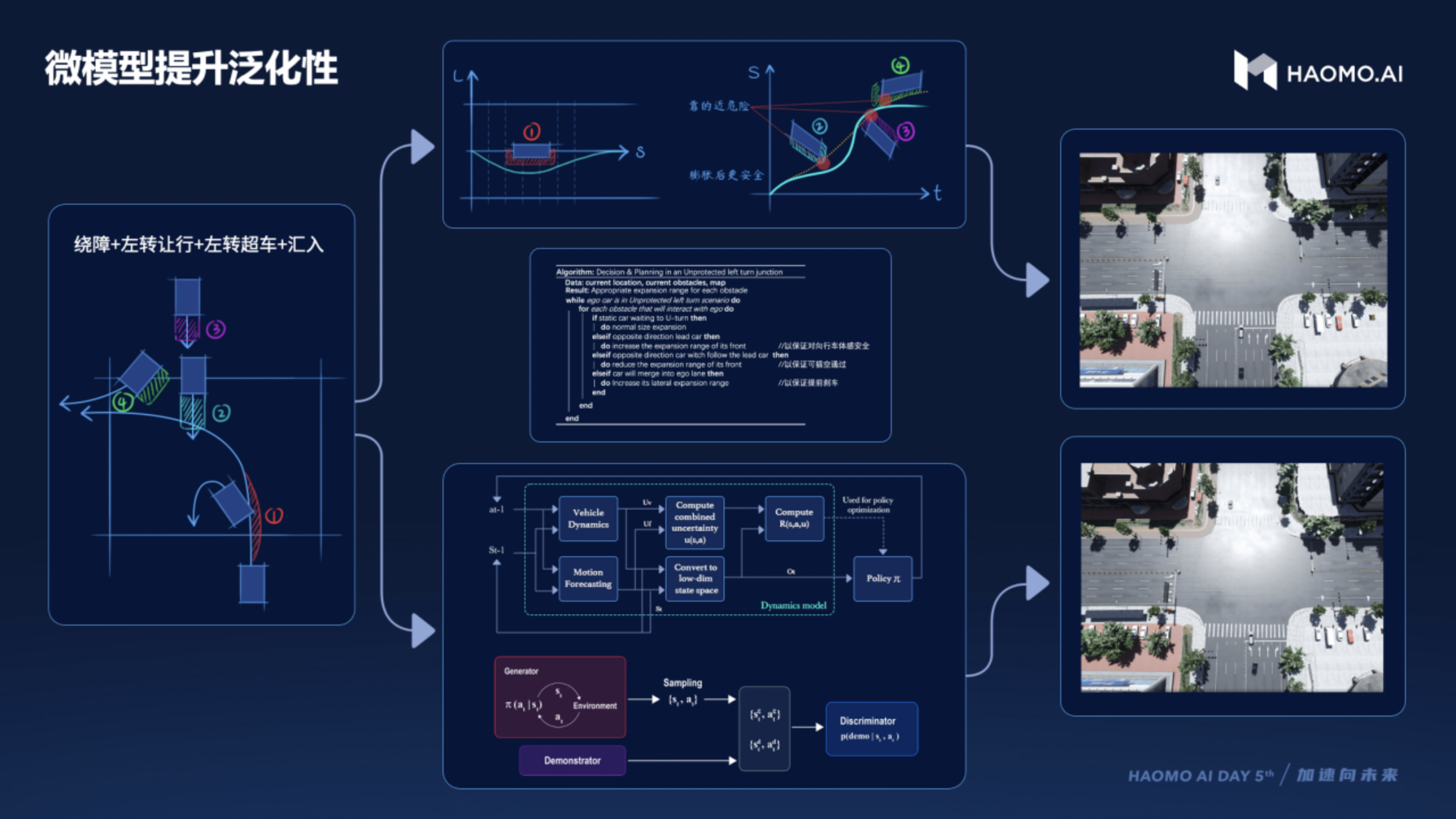

When we want to turn left at an intersection, we not only need to wait for the vehicle in front of us to complete its U-turn, but also have to watch for vehicles coming from the opposite direction. In the past, engineers had to write a large number of rule-based scenario judgments and parameter settings to handle this, resulting in very bloated code.

Now, however, HoloMatic learns from more data on similar scenarios and uses machine learning models to replace handwritten rules and parameters. These models are also more broadly applicable.

In addition, cognition still faces issues with the consistency and interpretability of driving strategies. To address them, HoloMatic is working with Alibaba and Horizon Robotics to bring Alibaba’s M6 large model, originally developed for natural language processing, text generation, text classification, and image classification, into the autonomous driving field.

Competition in Scene Implementation

Autonomous driving is a product of competition and scene implementation.

Zhang Kai, the chairman of HoloMatic, said that the company has completed development of all urban NOH functions. In urban environments, it can handle automatic lane changes and overtaking, traffic light recognition and control, complex intersections, unprotected left and right turns, and other major functions. In recently released test videos, HoloMatic’s urban NOH completed an entire drive without intervention on a road section with heavy traffic.

HoloMatic says that the NOH system is being further polished in Beijing, Baoding, and other cities, and that vehicles equipped with it will soon enter mass production.

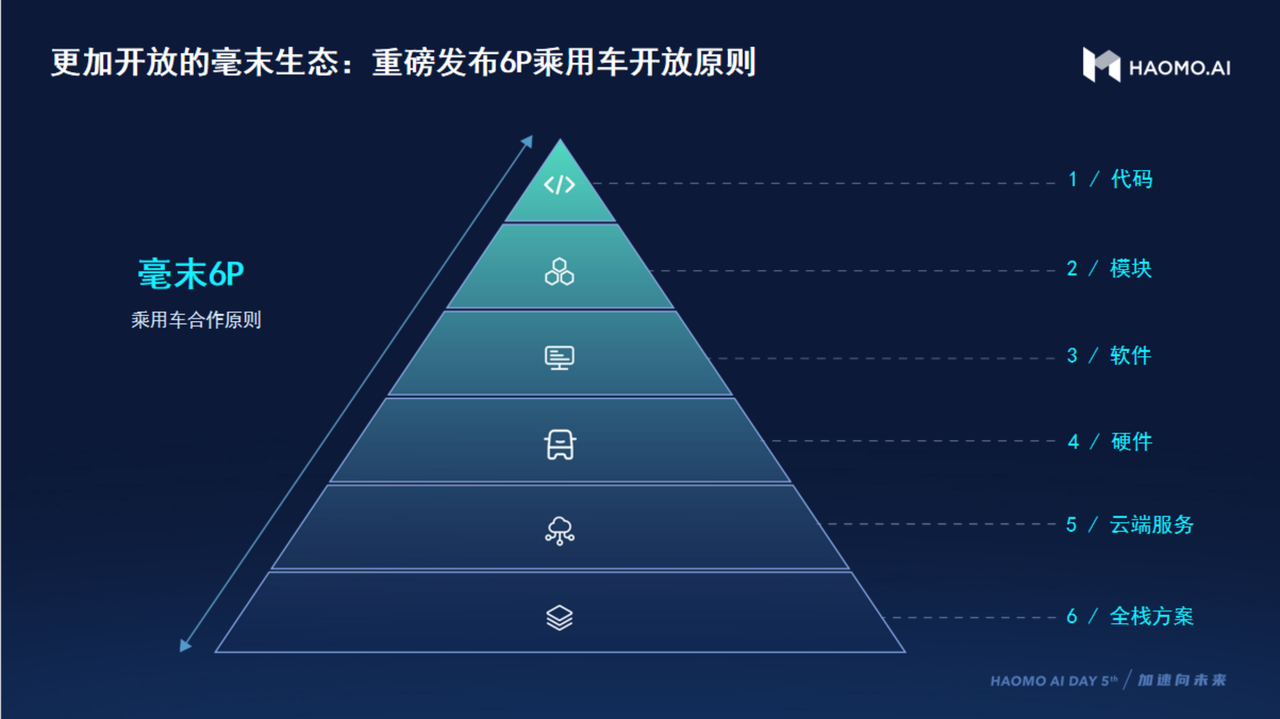

In addition, HoloMatic announced an extremely open cooperation model: the 6P Open Cooperation Model.

Autonomous driving is an extremely complex systems engineering effort that requires integrating multiple disciplines across different fields and industries. Today, most players choose to cooperate, “huddling together for warmth” and “creating together.”

As a technology company, HoloMatic can act as a supplier, providing solutions to companies that lack in-house development capabilities, and can also co-develop with other companies. Partners can choose to use HoloMatic’s full-stack technology solution, cooperate at the level of data intelligence cloud services, or cooperate at the software or hardware level.

This approach is very similar to that of Horizon Robotics; both companies have hardware and software development capabilities.

Automatic Delivery in Last Mile Logistics

In last-mile logistics delivery, HoloMatic has fully upgraded the production base for its autonomous delivery vehicles this year. The upgraded workshop covers 10,000 square meters and has an annual production capacity of 10,000 unmanned delivery vehicles.

At today’s AI Day, HoloMatic officially released its second-generation autonomous delivery vehicle, Little Camel 2.0, priced at CNY 128,800.

Little Camel 2.0 carries the ICU 3.0 high-computing-power platform, and its 600 L cargo box can be customized. It also supports intelligent voice and touch interaction.

Urban NOH

At last year’s AI Day, HoloMatic showed a demo video of urban NOH. Just three months later, the feature is on the doorstep of mass production.

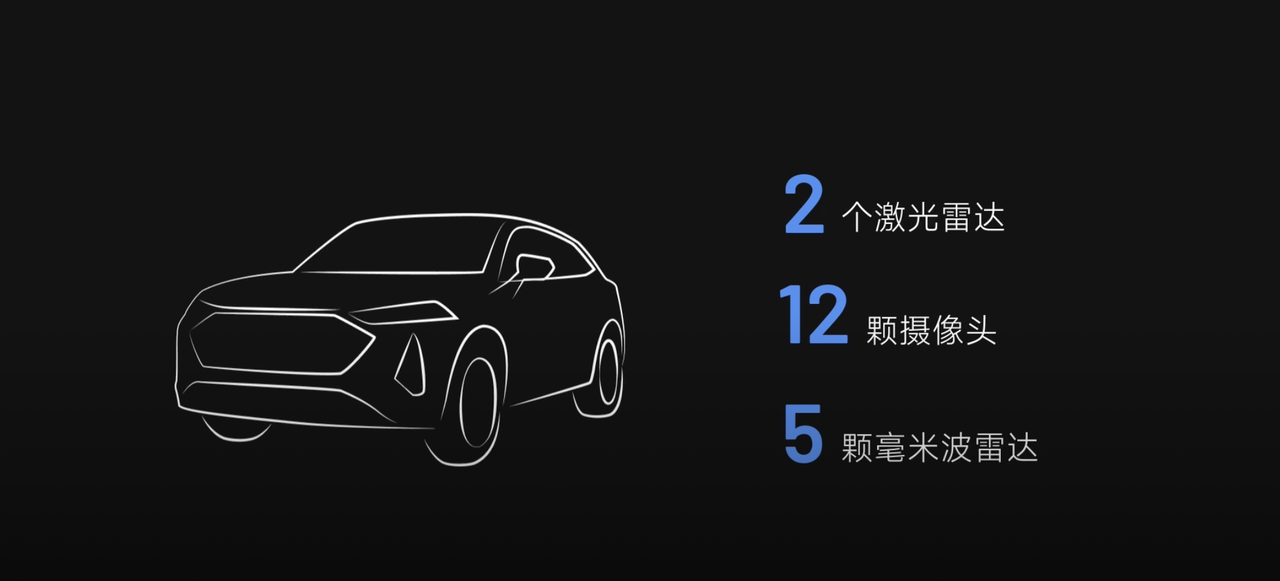

At the hardware level, the HPilot 3.0 system is equipped with Qualcomm 8540 + 9000 chips, with a computing power of 360 TOPS. It also comes with two lidars, 12 cameras, and 5 millimeter-wave radars.

HoloMatic has set itself a goal: to have its systems installed on more than one million vehicles and to land in more than 100 cities within the next three years, fully covering China’s first-tier and second-tier cities.

According to HoloMatic’s plan, urban NOH will officially enter production in June. Interestingly, XPeng Motors also stated in a recent earnings call that it will officially launch its city NGP at the end of the second quarter, once it obtains regulatory approval. This gives us a chance to see who will be first to mass-produce urban navigation-assisted driving: XPeng Motors, the new energy vehicle company, or HoloMatic, the intelligent driving technology company.

HoloMatic’s To-Do List

HoloMatic’s goals for 2022 are very clear, but also full of challenges.

HoloMatic plans to deliver 34 car models and 78 projects in 2022. This tests not only its R&D capabilities but also the organization’s ability to deploy resources and recruit talent.

In addition, HoloMatic will also cooperate with others to become a “qualified” Tier-1 supplier. Its cooperation with Great Wall Motors is clearly mutually beneficial: HoloMatic provides Great Wall Motors with the most competitive assisted-driving capabilities, while the large pre-installed volume promotes the development of MANA.

For HoloMatic, being the first to mass-produce urban NOH seems to be just the step from 0 to 1. After that, the company plans to expand its network, deepen its research efforts, and build a more robust talent system.

HoloMatic has started 2022 with good momentum, but it still has a lot of work to do.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.