Jia Haonan posted from the Copilot Temple.

Reference for AI Auto | Official Account – AI4Auto

The most significant development in the field of intelligent driving today:

A new version of Tesla FSD is live.

Why is it important?

Elon Musk confirmed that FSD Beta 10.11 contains significant architectural improvements, making it a major update.

Furthermore, Musk said that because the new capabilities in 10.11 are excellent, Tesla may open up more FSD testing slots in the future.

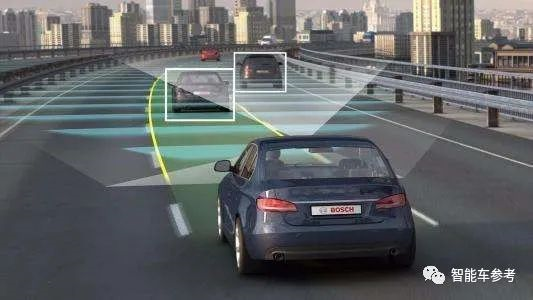

From a broader perspective, Tesla’s AI stands for the fastest progress, the pure-vision approach, and a complete data pipeline. It is a benchmark that both consumers and the driverless car industry watch closely.

Now let’s take a closer look at how far pure-vision autonomous driving, as represented by FSD, has progressed.

Which of the 11 updates does Musk care about most?

The 11 updates are:

- Upgraded the modeling of lane geometry from dense rasters (“bag of points”) to an autoregressive decoder.
- Improved the system’s understanding of right-of-way (road types, drivable areas) when the map is inaccurate or navigation fails. In particular, the modeling of intersections now relies entirely on neural network predictions rather than map information.
- Improved the accuracy of VRU (vulnerable road user) detection by 44.9%, significantly reducing false positives for motorcycles, skateboards, wheelchairs, and pedestrians in rain or on bumpy road surfaces. This was achieved by increasing the data volume of the next-generation recognizer, training previously frozen network parameters, and modifying the network loss function.
- Reduced the speed prediction error for VRUs at very close distance by 63.6% by introducing a new dataset of simulated adversarial high-speed VRUs. This update improves control around VRUs that move and cut in quickly.
- Improved the vehicle’s climbing profile, with stronger acceleration or braking at the beginning and end of a climb.
- Improved the static obstacle perception network, enhancing the perception and recognition of obstacles around the vehicle.
- Reduced the recognition error rate for the “parked” attribute (applied to other vehicles on the road) by 17% by increasing the dataset size by 14%; brake-light accuracy also improved.
- Improved driving in difficult scenarios by adjusting the loss function. The speed error for the “passable state” improved by 5%, and the speed error in high-speed scenarios improved by 10%.
- Improved the detection of, and control around, roadside parked vehicles that may open their doors.
- Optimized the vehicle body control algorithm for situations with both lateral and longitudinal acceleration and for bumps, giving smoother turns.
- Optimized Ethernet data transmission, improving the stability of the FSD UI visualization.
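The percentages in the list are relative reductions, so they only say how much of the previous error remains, not what the absolute error is. A minimal sketch of that arithmetic follows; the baseline value is a made-up illustration, not a figure from Tesla.

```python
def error_after_reduction(baseline_error: float, reduction_pct: float) -> float:
    """Return the error that remains after a relative reduction of reduction_pct percent."""
    return baseline_error * (1.0 - reduction_pct / 100.0)


# Hypothetical baseline: an average close-range VRU speed error of 1.0 (arbitrary units).
baseline = 1.0
remaining = error_after_reduction(baseline, 63.6)
print(f"{remaining:.3f}")  # 0.364
```

In other words, a 63.6% reduction leaves roughly a third of the original error, whatever that original error was.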

All updates can be roughly divided into two categories. The first category is improvements to the passenger riding experience, such as smooth cornering, UI visualization, and climbing posture.

The second category is closely related to the vehicle’s perception and decision-making, such as using neural networks to predict intersections and reducing reliance on high-precision maps.

In addition, the “phantom braking” issue that users have frequently reported since the end of last year is caused by camera recognition errors under interference.

This update improves the recognition accuracy for different targets and states by adding recognizers, enriching datasets, and adjusting loss functions in the backend neural networks.

All of this progress, whether in perception, decision-making, or modeling, depends on the most basic capabilities: lane and target prediction.

This is also the “architectural-level” change Musk cared most about in this release:

The modeling of lane geometry has been upgraded from dense rasters to an autoregressive decoder.

What does that mean?

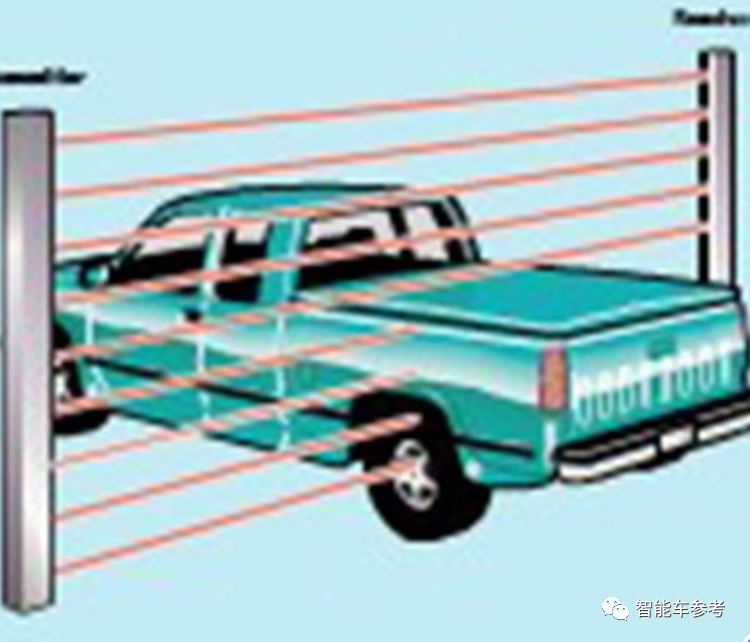

Lane rasters were originally a technology commonly used in ETC toll stations: they extract contour features from the way an object blocks light and use them to tell different vehicles apart.

The dense raster modeling mentioned by Tesla similarly uses a virtual dense raster to extract a large number of feature points from image data and reconstruct a digital model from them.
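As a rough illustration of what a dense raster representation looks like, the sketch below marks every grid cell that contains a lane point. This is one plausible reading of “dense raster”, not Tesla’s implementation; the function name, grid size, and sample points are all hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def rasterize(points: List[Point], size: int, cell: float) -> List[List[int]]:
    """Mark every cell of a size x size grid that contains at least one point."""
    grid = [[0] * size for _ in range(size)]
    for x, y in points:
        col, row = int(x // cell), int(y // cell)
        # Points that fall outside the grid are simply dropped.
        if 0 <= row < size and 0 <= col < size:
            grid[row][col] = 1
    return grid


# Hypothetical lane points along a diagonal, in meters.
lane_points = [(0.5, 0.5), (1.5, 1.5), (2.5, 2.5), (3.5, 3.5)]
for row in rasterize(lane_points, size=4, cell=1.0):
    print(row)
```

Note that the grid stores 16 cells to describe 4 points and carries no information about the order of the points or which ones belong to the same lane; a later step has to recover that.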

The autoregressive decoder, by contrast, uses a transformer to directly predict and connect the lanes in “vector space”.
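“Autoregressive” means that each new output is predicted from the outputs produced so far. The toy loop below shows only that control flow: `predict_next` is a hypothetical stand-in for the transformer, and `straight_ahead` is a hand-written rule, not a trained model.

```python
from typing import Callable, List, Optional, Tuple

Point = Tuple[float, float]


def decode_lane(
    start: Point,
    predict_next: Callable[[List[Point]], Optional[Point]],
    max_points: int = 50,
) -> List[Point]:
    """Build a lane point by point; each step sees all points decoded so far."""
    lane = [start]
    while len(lane) < max_points:
        nxt = predict_next(lane)
        if nxt is None:  # the predictor signals "end of lane"
            break
        lane.append(nxt)
    return lane


def straight_ahead(prefix: List[Point]) -> Optional[Point]:
    """Hypothetical predictor: step 1 m forward, stop once the lane has 5 points."""
    if len(prefix) >= 5:
        return None
    x, y = prefix[-1]
    return (x, y + 1.0)


print(decode_lane((0.0, 0.0), straight_ahead))
# [(0.0, 0.0), (0.0, 1.0), (0.0, 2.0), (0.0, 3.0), (0.0, 4.0)]
```

The output is already an ordered, connected polyline, so no separate step is needed to link the points into a lane.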

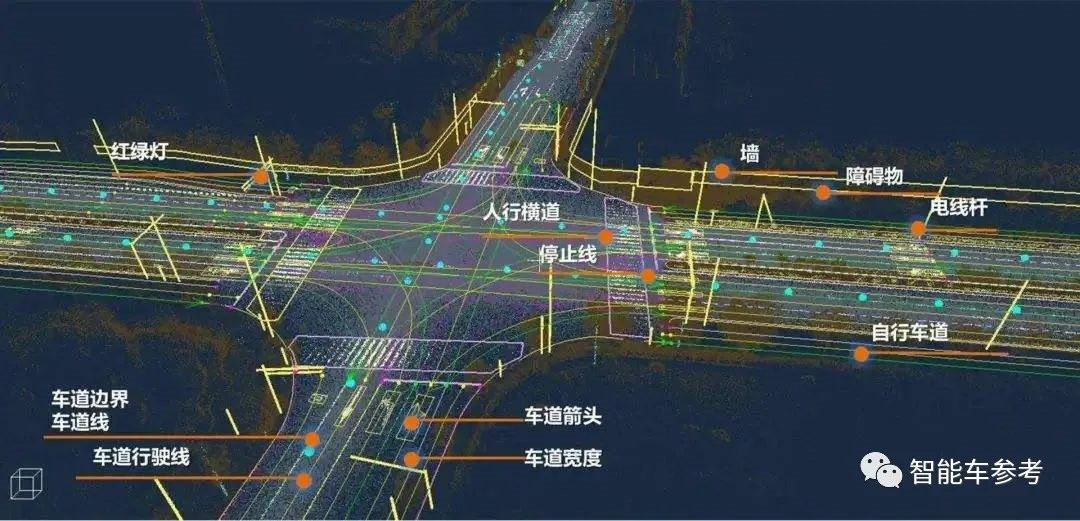

A so-called “vector space” lane breaks the overall state of a lane down into a few key parameters, such as width, material, color, and lane line type.

Why do this? Because humans can grasp the basic situation at a glance.

A neural network, however, needs key parameters it can “understand” to be extracted first. If each key parameter is regarded as one dimension of a vector, then all the information contained in a lane becomes a point in a multidimensional space.
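To make the idea of “a lane as a vector” concrete, here is a small sketch that packs a few lane parameters into a list of numbers. The chosen fields, the category list, and the one-hot encoding are illustrative assumptions, not the features FSD actually uses.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical set of lane line categories.
LINE_TYPES = ["solid", "dashed", "double"]


@dataclass
class LaneDescription:
    width_m: float
    line_type: str
    is_drivable: bool

    def to_vector(self) -> List[float]:
        """Encode the lane as numbers: width, one-hot line type, drivable flag."""
        one_hot = [1.0 if self.line_type == t else 0.0 for t in LINE_TYPES]
        return [self.width_m, *one_hot, 1.0 if self.is_drivable else 0.0]


lane = LaneDescription(width_m=3.5, line_type="dashed", is_drivable=True)
print(lane.to_vector())  # [3.5, 0.0, 1.0, 0.0, 1.0]
```

Each position in the list is one dimension, so this lane is a single point in a five-dimensional space.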

With this concept in mind, Musk’s architectural update can be understood simply: it eliminates the intermediate step of extracting a large number of points from images and directly generates parameters that the AI can understand.

The advantage of this approach is that the system becomes more efficient at predicting roads, predicting the behavior of other targets, and fusing information from multiple sensors.

With fewer intermediate steps, the computational cost and error rate naturally go down.

How do we evaluate this update?

Essentially, the previous modeling method relied on features extracted from image data that serves “human eyes”, reflecting a human-first way of thinking.

The ultimate goal of autonomous driving is to replace humans with AI. Isn’t it redundant to extract parameters from data that is shaped to serve humans?

It also increases the computational cost and the error rate.

This update therefore reflects Musk’s deep understanding of the essence of AI and the “first principles” thinking he has always emphasized.

Cold and rational, Musk keeps moving further away from inherently human ways of thinking.

As a product, Tesla FSD is becoming more thoroughly “machine”. It may currently lag behind some competitors in MPI (miles per intervention) and smoothness, but in terms of underlying architecture it is already a purer AI.

What does a more AI-focused Tesla mean?

Musk believes that an FSD that is more AI-focused and closer to the essence of the problem means stronger autonomous driving capabilities.

Currently, FSD 10.11 is being pushed internally to Tesla employees, but Musk says that if it “performs” well, it will be rolled out for testing to a wider range of users.

This “performance” includes both the algorithm’s abilities and the reliability of the car owner.

To experience Tesla’s FSD, you must be an “excellent student”. Tesla rates every user who applies for FSD testing to determine whether they are a qualified and responsible driver, based on five dimensions: forward collision warnings per 1,000 miles, emergency braking, sharp turns, unsafe following, and forced Autopilot disengagements. In other words, distracted or aggressive driving, as well as mistrust of autonomous driving, can result in a low rating.

At present, only drivers with a rating of 98 or above are eligible to test FSD, and only about 60,000 people meet this requirement. Elon Musk’s plan to scale up involves lowering the admission score to 95. Overseas users who have heard the news are already excited. Rather than simply selling the service, Musk has created something like a prestige admission system.

As a Tesla owner, or a user of other smart cars, what features have left the deepest impression on you during use?

<video controls class="w-full" preload="metadata" poster="https://upload.42how.com/article/image_20220316193532.png">

<source src="https://upload.42how.com/v/%E8%A7%86%E9%A2%91%E7%B4%A0%E6%9D%90/shices%E5%AE%9E%E6%B5%8B%E7%89%B9%E6%96%AF%E6%8B%89.mp4">

</video>

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.