Introduction

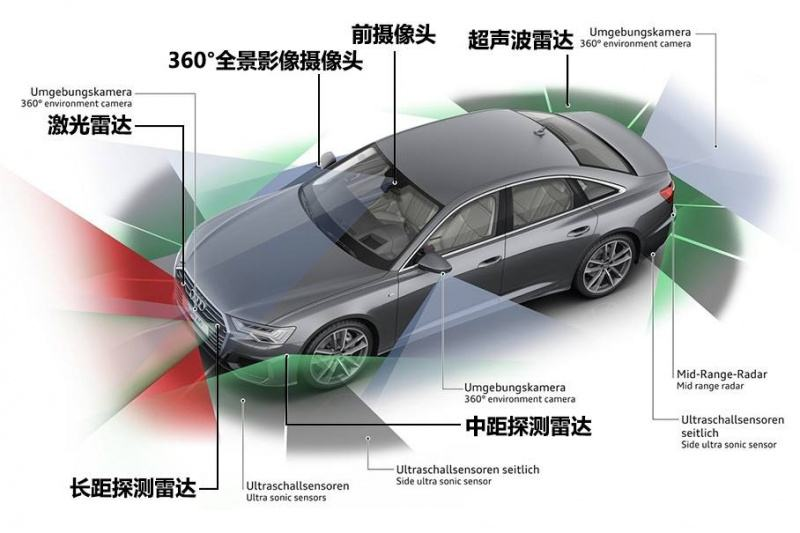

Sensors are the hardware foundation that lets a car perceive its surroundings, and they are indispensable at every stage of autonomous driving. Autonomous driving depends on coordination among the perception layer, the control layer, and the execution layer. Sensors such as cameras, mmWave radars, and LiDARs acquire information such as images, distance, and speed, playing the role of eyes and ears. The decision-making and planning module analyzes and processes that information, makes judgments, and issues commands, playing the role of the brain. The control module and vehicle structure execute the commands, playing the role of hands and feet. Because everything else builds on environmental perception, sensors are indispensable for autonomous driving.

Multi-sensor fusion is now becoming mainstream in autonomous driving, displacing single-sensor perception. More data sources generally make the final perception result more stable and reliable, because the strengths of each sensor can be used while its weaknesses are compensated for by the others. This article introduces the sensors most widely used in autonomous vehicles today and the multi-sensor fusion perception solutions built on them.

Camera: The Eye of Autonomous Driving

The vehicle-mounted camera is the basis for many warning and recognition-related ADAS functions. Among ADAS functions, visual image processing is relatively basic and the most intuitive for drivers, and it depends on the camera. The vehicle-mounted camera is therefore essential for autonomous driving. The ADAS functions that can currently be achieved with cameras are shown in the table below:

Many of these functions can be achieved with cameras, and some can only be implemented with cameras. Cameras are relatively inexpensive, and their price has continued to decline, from over 300 yuan per camera in 2010 to around 200 yuan in 2014, which makes them easy to deploy widely. Integrating multiple cameras into a single vehicle is therefore expected to become a trend. The installation position of a camera varies with the requirements of the ADAS function it serves, and falls into four categories: front view, side view, rear view, and built-in. To achieve a full set of ADAS functions in the future, a single vehicle will need at least 5 cameras.

The front view camera is used most often, and a single camera can serve multiple functions, such as drive recording, lane departure warning, forward collision warning, and pedestrian recognition. It is usually equipped with a wide-angle lens and mounted on the inside of the rearview mirror or at a higher position on the windshield to achieve a longer effective distance.

Replacing the rearview mirror with side view cameras is expected to become a trend. Because the rearview mirror covers a limited range, a vehicle located outside that range, diagonally behind the car, is “invisible”. These blind spots greatly increase the probability of traffic accidents. Side view cameras installed on both sides of the vehicle can cover the blind spots, and when another vehicle enters a blind spot, the system automatically alerts the driver.

In-vehicle cameras are currently widely used and relatively inexpensive compared to other sensors. Compared with mobile phone cameras, however, they face harsher working conditions and must meet requirements such as shock resistance, magnetic resistance, waterproofing, and high-temperature resistance. Reliability must be especially high for front view cameras used for ADAS functions, because they involve driving safety. As a result, the manufacturing process for in-vehicle cameras is complicated and technically demanding.

Millimeter Wave Radar: Core Sensor of ADAS

Millimeter waves have a wavelength between centimeter waves and optical waves, so millimeter wave guidance has the advantages of both microwave guidance and optoelectronic guidance:

- Compared with centimeter wave guidance, millimeter wave guidance is smaller in volume, lighter in weight, and higher in spatial resolution.
- Compared with infrared, laser, and other optical guidance, millimeter wave guidance can penetrate fog, smoke, and dust, has a long transmission distance, and works in all weather.
- Its performance is stable and is not affected by the shape, color, or other properties of the target object.

Millimeter wave radar covers usage scenarios in vehicle applications that other sensors, such as infrared, laser, ultrasound, and cameras, cannot.

The detection distance of millimeter wave radar is generally between 150 m and 250 m, and some high-performance units can reach 300 m, which is enough to detect vehicles over a wide range when the car is traveling at high speed. Millimeter wave radar also has high detection accuracy. Together, these characteristics let it monitor vehicles over a wide range and accurately measure the speed, acceleration, distance, and other information of vehicles in front. It is therefore the preferred sensor for adaptive cruise control (ACC) and automatic emergency braking (AEB).
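To illustrate why range and speed measurements matter for AEB, here is a minimal sketch of a time-to-collision (TTC) check. The function names and the 2.0 s braking threshold are assumptions chosen for illustration, not values from the source or from any production system.

```python
def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Seconds until impact, or infinity if the gap is not shrinking.

    range_m: radar-measured distance to the vehicle in front, in meters.
    closing_speed_mps: rate at which the gap shrinks, in meters per second
        (positive means the two vehicles are getting closer).
    """
    if closing_speed_mps <= 0.0:
        return float("inf")
    return range_m / closing_speed_mps


def aeb_should_brake(range_m: float, closing_speed_mps: float,
                     ttc_threshold_s: float = 2.0) -> bool:
    """Hypothetical AEB trigger: brake when TTC drops below the threshold."""
    return time_to_collision(range_m, closing_speed_mps) < ttc_threshold_s
```

With a target 100 m ahead and a closing speed of 20 m/s, the TTC is 5 s and the sketch does not brake. At 30 m with the same closing speed, the TTC is 1.5 s and it does.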

However, millimeter wave radar is currently expensive, which limits its use in mass-produced vehicles. For example, a 77 GHz millimeter wave radar system costs about 250 euros per unit.

LiDAR: Trade-offs Between Cost and Functionality

The excellent performance of lidar makes it the best technology route for unmanned driving. Lidar has superior performance compared to other autonomous driving sensors:

- High resolution. Lidar can obtain extremely high angular, distance, and speed resolutions, which means that it can use Doppler imaging technology to obtain very clear images.
- High accuracy. Lidar has a straight propagation path, good directionality, very narrow beams, and very low diffusion, so it has high accuracy.
- Strong anti-active interference ability. Unlike microwave and millimeter wave radars, which are easily affected by electromagnetic waves widely existing in nature, there are not many signal sources in nature that can interfere with lidar, so lidar has strong anti-active interference ability.

A 3D lidar is usually installed on the roof of the car and rotates at high speed to collect point cloud data of the surrounding space, from which a real-time 3D map of the vehicle's surroundings is drawn. Lidar can also measure the distance, speed, acceleration, angular velocity, and other information of other vehicles in three directions around it. Combined with GPS map calculation, this yields the location of the vehicle. This large volume of rich data is transmitted to the ECU for analysis and processing, so that the vehicle can make quick judgments.
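As a rough illustration of how a rotating lidar's readings become a point cloud, the sketch below converts each return (a range plus the beam's azimuth and elevation angles) into Cartesian coordinates. The input layout, the axis convention (x forward, y left, z up), and the function names are assumptions for this example. Real sensors each define their own data format.

```python
import math


def polar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one lidar return to (x, y, z) in the sensor frame, in meters."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    x = horizontal * math.cos(az)
    y = horizontal * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


def sweep_to_point_cloud(returns):
    """Convert (range_m, azimuth_deg, elevation_deg) tuples to 3D points."""
    return [polar_to_xyz(r, az, el) for r, az, el in returns]
```

For example, a return at 10 m with zero azimuth and zero elevation maps to a point 10 m directly ahead of the sensor.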

Although lidar has many advantages, the biggest obstacle to its use in mass-produced car models is cost. A single lidar unit costs in the tens of thousands, and this high price makes it difficult to bring to market.

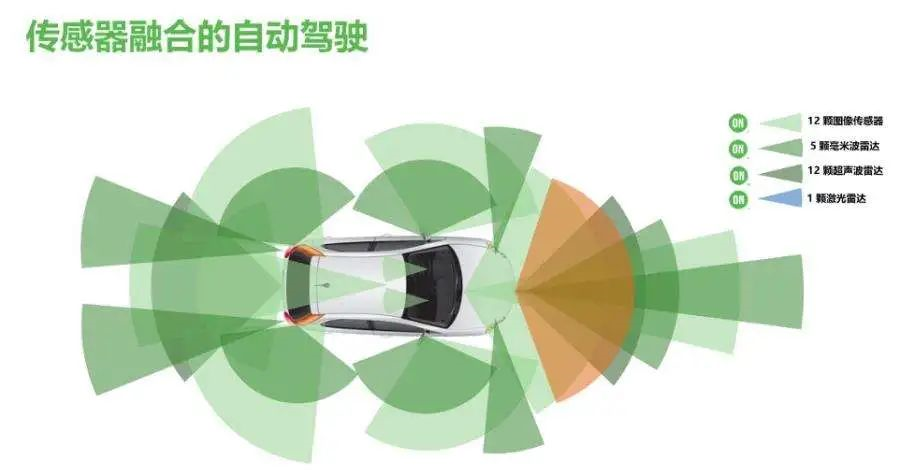

The Future of Autonomous Driving: Multi-Sensor Fusion

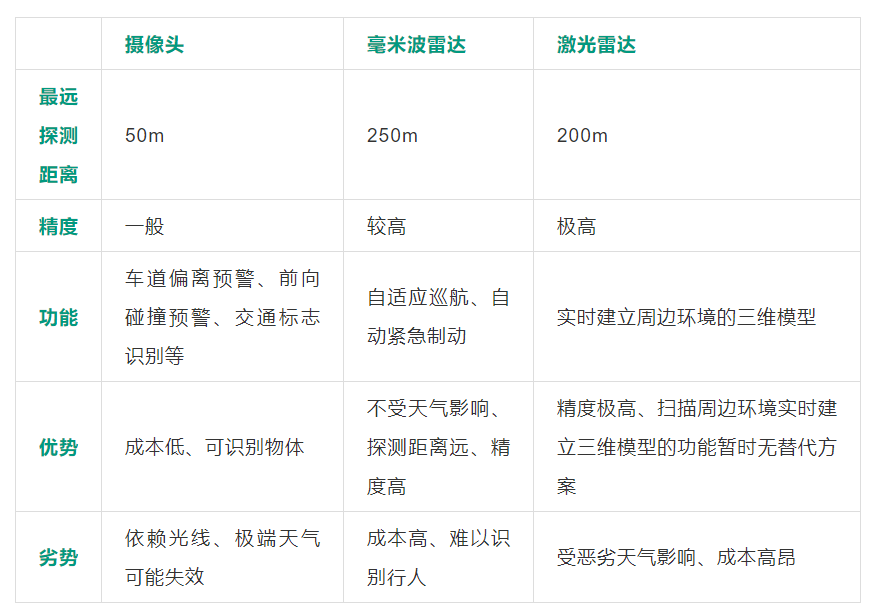

The sections above describe the three most commonly used categories of perception sensors for autonomous driving. Their advantages and disadvantages are summarized in the table below:

Each sensor has its own advantages and disadvantages, and none can fully replace another. Because their principles and functions differ, each performs best in different usage scenarios. Achieving autonomous driving will therefore require several sensors working together to form the car's perception system. For example, in a previous Tesla autonomous driving collision, both the millimeter wave radar and the front camera made errors in an extreme situation. This suggests that the camera + millimeter wave radar scheme lacks redundancy, has poor fault tolerance, and struggles to meet the demands of autonomous driving. Information from multiple sensors needs to be fused and judged comprehensively.

Multiple sensors of the same or different types can obtain information about different local areas and categories. This information may be complementary, redundant, or contradictory. The control center must issue a single, correct command, which requires it to integrate and comprehensively evaluate the information from all sensors. Imagine that one sensor requires the car to brake immediately while another shows that it is safe to continue driving, or that one sensor requires the car to turn left while another requires it to turn right. Without integrating the sensor information, the car will “feel lost”, which may ultimately lead to accidents.
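The conflicting-sensor situation can be made concrete with Bayes' rule, one of the fusion methods this article mentions. The sketch below combines a "detected" reading and a "not detected" reading into a single obstacle probability. It assumes the sensors err independently and that each sensor's hit rate and false-alarm rate are known and strictly between 0 and 1. All names and numbers are hypothetical and chosen only for illustration.

```python
def fuse_detections(prior: float, readings) -> float:
    """Return P(obstacle) after applying Bayes' rule to each sensor reading.

    prior: probability of an obstacle before looking at any sensor.
    readings: iterable of (detected, hit_rate, false_alarm_rate), where
        hit_rate = P(sensor reports obstacle | obstacle present) and
        false_alarm_rate = P(sensor reports obstacle | road is clear).
    """
    p_obstacle = prior
    for detected, hit_rate, false_alarm_rate in readings:
        if detected:
            like_obstacle, like_clear = hit_rate, false_alarm_rate
        else:
            like_obstacle, like_clear = 1.0 - hit_rate, 1.0 - false_alarm_rate
        numerator = like_obstacle * p_obstacle
        p_obstacle = numerator / (numerator + like_clear * (1.0 - p_obstacle))
    return p_obstacle


# Hypothetical conflict: the radar reports an obstacle, the camera does not.
radar = (True, 0.95, 0.05)
camera = (False, 0.70, 0.20)
p = fuse_detections(0.5, [radar, camera])
```

Under these assumed rates the radar is the more trustworthy sensor, so the fused probability stays well above 0.5 and a single "brake" decision can be issued instead of two contradictory ones.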

Therefore, to ensure safety when using multiple sensors, it is necessary to integrate their information. Sensor fusion can significantly improve the system’s redundancy and fault tolerance, thereby ensuring the speed and accuracy of decision-making. It is an inevitable trend in autonomous driving.

At the same time, using multiple sensors increases the amount of information to process, including contradictory information. The system must process data quickly and filter out useless or incorrect information so that it makes timely and correct decisions. Current theoretical methods for sensor fusion include Bayes’ rule, the Kalman filter, D-S evidence theory, fuzzy set theory, and artificial neural networks. Sensor fusion is not difficult to implement in hardware. Although the software and hardware are hard to separate, the algorithms are the core and the main difficulty, with a high technical barrier. Algorithms are therefore expected to occupy the main part of the value chain in the autonomous driving industry.
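As a small illustration of the Kalman filter approach, the sketch below shows only the scalar measurement-update step, used here to fuse two distance readings of the same target. A full Kalman filter would also include a prediction step driven by a motion model, which is omitted. The noise variances and the function name are assumptions made for this example.

```python
def kalman_update(estimate: float, variance: float,
                  measurement: float, measurement_variance: float):
    """One scalar Kalman measurement update.

    Blends the current estimate with a new measurement, weighting each by
    how certain it is, and returns the new (estimate, variance).
    """
    gain = variance / (variance + measurement_variance)
    new_estimate = estimate + gain * (measurement - estimate)
    new_variance = (1.0 - gain) * variance
    return new_estimate, new_variance


# Hypothetical readings: radar says 50.0 m (variance 0.25 m^2),
# camera says 52.0 m (variance 4.0 m^2).
fused_distance, fused_variance = kalman_update(50.0, 0.25, 52.0, 4.0)
```

The fused distance lands much closer to the radar reading because its assumed variance is smaller, and the fused variance is lower than that of either sensor alone, which reflects the benefit of combining sources.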

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.