Recently, at the 2025 Global Developer Pioneer Conference (GDC), SenseTime unveiled “R-UniAD,” which it describes as the industry’s first end-to-end autonomous driving technical route built on collaborative interaction with a world model. The approach uses the world model to construct a simulation environment for online interaction, which makes it possible to train the end-to-end model with reinforcement learning.

This is notably similar to the DeepSeek technology that gained widespread attention during the Spring Festival this year. SenseTime JuYing believes that by evolving from imitation learning to reinforcement learning, end-to-end autonomous driving has the potential to surpass human driving performance.

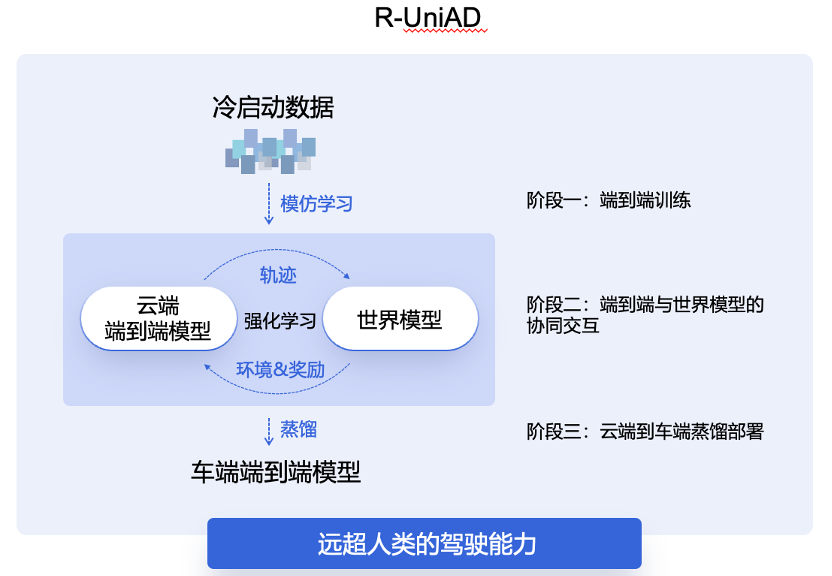

In his speech, Wang Xiaogang, CEO of SenseTime JuYing and co-founder and Chief Scientist of SenseTime, explained that training R-UniAD involves three stages. First, a large end-to-end autonomous driving model is trained in the cloud through imitation learning on cold-start data. Then, using reinforcement learning, the cloud-based large model interacts with the world model and continually improves its performance. Finally, the large cloud model is efficiently distilled into a small, high-performance end-to-end autonomous driving model that can be deployed on the vehicle.

According to the plan, at the Shanghai Auto Show in April, SenseTime JuYing will demonstrate the real vehicle deployment of the “end-to-end autonomous driving solution with world model coordination.”

In SenseTime JuYing’s words, this is a “DeepSeek moment” for China’s intelligent driving development.

After the speech, Garage 42 and several other media outlets interviewed Wang Xiaogang for an in-depth discussion of the technical core of R-UniAD, the simulation capability of the “Awakening” world model, and his responses to trending topics such as DeepSeek and official FSD deployments. Through this dialogue, we aim to uncover SenseTime’s forward-looking strategy in intelligent driving and the cutting edge of its R&D.

A tenfold reduction in data demand

Today, the industry broadly accepts the end-to-end technical route for intelligent driving, which essentially uses vast quantities of high-quality human driving data to “imitate” human driving as closely as possible. However, a paradigm based on imitation learning can approach human capability but struggles to surpass it. The scarcity of high-quality data, the inconsistent quality of driving data, and the requirement for millions of high-quality clips together form a significant barrier to reaching even the upper limit of human driving capability with end-to-end intelligent driving solutions.

To some extent, this has turned competition in end-to-end intelligent driving into a contest of computing power.

During the Spring Festival, DeepSeek-R1’s innovation using pure reinforcement learning garnered global attention. Starting from a small amount of high-quality cold-start data, the model undergoes multi-stage reinforcement learning training, which lowers the data-scale barrier for training large models. Importantly, reinforcement learning enables the model to spontaneously develop long-chain reasoning capabilities, improves inference outcomes, and may even have the potential to surpass human cognitive abilities.

The reinforcement learning approach used for large models can be transferred to the training and development of end-to-end autonomous driving algorithms. This is also the technical approach of HYCAN R-UniAD.

According to SenseTime’s estimates, the technical route of multi-stage learning with small samples can reduce the data demand for end-to-end autonomous driving by an order of magnitude. How is this achieved?

Wang Xiaogang gave a practical example: a challenging traffic scenario in which only 10% of high-quality human driving data passes, which makes data collection and training quite difficult. Reinforcement learning, by contrast, can attempt the scenario repeatedly in simulation, ultimately not only passing it smoothly but also generating different poses and paths for solving complex scenarios.

“In this scenario, its data utilization could be increased by tenfold to a hundredfold,” said Wang Xiaogang.
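The idea of repeated simulated attempts can be illustrated with a deliberately tiny sketch. It is not SenseTime’s method: it swaps in a greedy multi-armed bandit with optimistic initial values, and the ten candidate maneuvers, the single passing maneuver, and the `simulate` function are all hypothetical stand-ins for a world-model rollout. A policy picking uniformly among the ten maneuvers would pass only 10% of the time, loosely mirroring the 10% figure above.

```python
N_MANEUVERS = 10        # hypothetical: discretised candidate maneuvers
PASSING_MANEUVER = 7    # hypothetical: the only maneuver that clears the scenario


def simulate(maneuver: int) -> float:
    """Stand-in for a world-model rollout: reward 1.0 if the scenario is passed."""
    return 1.0 if maneuver == PASSING_MANEUVER else 0.0


def train(episodes: int = 50):
    """Greedy bandit with optimistic initial values (not the article's algorithm)."""
    # Starting every estimate at 1.0 makes the greedy policy try each
    # untested maneuver once before settling on one that actually passes.
    values = [1.0] * N_MANEUVERS
    counts = [0] * N_MANEUVERS
    history = []
    for _ in range(episodes):
        action = max(range(N_MANEUVERS), key=lambda a: values[a])
        reward = simulate(action)
        counts[action] += 1
        # Incremental mean of the rewards observed for this maneuver.
        values[action] += (reward - values[action]) / counts[action]
        history.append(action)
    return values, history


values, history = train(episodes=50)
passes = sum(simulate(a) for a in history)
print(f"pass rate over {len(history)} simulated attempts: {passes / len(history):.2f}")
```

Failed attempts cost nothing but simulation time here, which is the point of the example: once the passing maneuver is found, every further attempt yields a usable successful trajectory.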

The key to the next stage of intelligent driving training is not about finding quality human driving data but about finding complex scenarios to enter the next round of evolution and iteration.

However, multi-stage reinforcement learning does not mean data is unnecessary; rather, the definition of high-quality data has fundamentally changed. Previously, the entire process required skilled driver data, but now only a scene image or a very short video clip is necessary, and reinforcement learning can complete the rest of the work.

It can be said that data remains crucial, but the acquisition method has been optimized.

However, Wang Xiaogang did not give an exact figure for the reduction in data demand, as he believes more practical validation is needed.

“Awakening” World Model: The Simulation-driven Foundation of Intelligent Driving

After cloud-based end-to-end autonomous driving large model training, R-UniAD enters the second stage. Based on reinforcement learning, it allows the cloud’s end-to-end large model to interact with the world model, continuously enhancing the end-to-end model’s performance. How are the technical challenges during this process resolved?

Wang Xiaogang believes the world model’s more realistic simulation, diversity of predictive generation, and evaluation of driving behavior through the reward function are key.

He said, “We have long-term accumulation in world model simulation. During this process, the resulting models should comply quite well with physical laws and traffic regulations. Moreover, the generated videos need to be not only visually appealing but also accurate, which are areas where we have done a lot of work previously.”

The diversity of predictive generation is the second challenge. When driving in a particular traffic scenario, there are actually different ways to navigate, and surrounding vehicles and people may change at different times. Therefore, these generated simulation trajectories and video sequences need more diversity.

The third challenge is using a reward function to evaluate good driving behavior, comparing outcomes during interaction to dynamically judge whether simulation results are good or bad.

After end-to-end model training in the cloud and collaborative interaction with the world model, the large cloud model is efficiently distilled into a small, high-performance end-to-end autonomous driving model that is deployed on the vehicle.
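As a rough illustration of the third challenge, reward-based evaluation, the sketch below scores several simulated drives and ranks them. Everything in it is an assumption made for illustration: the `Rollout` fields, the penalty weights, and the example values are hypothetical and are not taken from SenseTime’s reward design.

```python
from dataclasses import dataclass


@dataclass
class Rollout:
    """Summary of one simulated drive (all fields are hypothetical)."""
    name: str
    collided: bool
    rule_violations: int
    progress_m: float   # distance covered along the route, in metres
    max_jerk: float     # m/s^3, used here as a crude comfort proxy


def reward(r: Rollout) -> float:
    """Hypothetical reward: progress is good; collisions, violations, jerk are bad."""
    score = r.progress_m / 100.0
    if r.collided:
        score -= 10.0                   # safety dominates every other term
    score -= 2.0 * r.rule_violations    # traffic-rule compliance
    score -= 0.1 * r.max_jerk           # comfort
    return score


def rank(rollouts):
    """Order simulated outcomes from best to worst by reward."""
    return sorted(rollouts, key=reward, reverse=True)


candidates = [
    Rollout("crash", collided=True, rule_violations=0, progress_m=40.0, max_jerk=9.0),
    Rollout("aggressive", collided=False, rule_violations=1, progress_m=100.0, max_jerk=6.0),
    Rollout("safe", collided=False, rule_violations=0, progress_m=80.0, max_jerk=2.0),
]
for r in rank(candidates):
    print(r.name, round(reward(r), 2))
```

The weights encode a priority ordering (safety, then rules, then comfort); in practice choosing them is itself a hard design problem.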

In this process, high-quality data comes almost exclusively from simulation. Currently, SenseTime’s SimPro simulation data accounts for approximately 20%, and the company hopes to raise this share to 50-80% in the future.

However, when the cloud model is distilled and deployed to the vehicle, how can the capabilities of the on-vehicle inference model be preserved?

Wang Xiaogang candidly admits that the model’s capabilities will inevitably diminish, but says that on the edge, where computing power is limited, the crucial thing is to ensure basic functionality.

He believes two aspects are critical: “One is to generate high-quality data for distillation from the cloud model. Additionally, it is essential to design a Mixture of Experts (MoE) model architecture tailored to the architecture characteristics of edge chips.”
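The first aspect, generating distillation data from the cloud model, can be sketched in miniature. This is a toy regression, not a driving model: the `teacher` function, its gap-to-speed mapping, and the linear student are all hypothetical, and the Mixture of Experts architecture mentioned in the quote is not modeled. The teacher labels a set of inputs, and a much smaller student is fitted to those labels by ordinary least squares.

```python
import math


def teacher(gap_m: float) -> float:
    """Hypothetical 'cloud' model: target speed (m/s) given the gap to the lead car."""
    return 0.5 * gap_m + 2.0 + 0.3 * math.sin(gap_m)


def distill_linear(xs, ys):
    """Fit a two-parameter student y = a*x + b to teacher labels by least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


# Step 1: the teacher generates the training labels (the distillation data).
gaps = [float(g) for g in range(60)]
labels = [teacher(g) for g in gaps]

# Step 2: the small student is trained only on teacher-generated data.
a, b = distill_linear(gaps, labels)

# Step 3: measure how much of the teacher's behaviour the student lost.
rmse = math.sqrt(sum((a * g + b - y) ** 2 for g, y in zip(gaps, labels)) / len(gaps))
print(f"student: speed = {a:.3f} * gap + {b:.3f}, RMSE vs teacher = {rmse:.3f}")
```

The student recovers the teacher’s overall trend but cannot represent its fine detail, so its error against the teacher is small but not zero, a miniature version of the capability loss Wang describes.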

Currently, in the smart cockpit, SenseTime has already run a series of such models on the edge.

Changing the Cost Structure of Intelligent Driving

According to SenseTime’s mass-production rollout plans, SenseTime SimPro showcased UniAD’s on-road results at the 2024 Beijing Auto Show, and mass-produced end-to-end intelligent driving solutions are expected to be delivered by the end of this year. The newly released R-UniAD will be shown as a real-vehicle deployment at the Shanghai Auto Show this April.

In terms of its mass-production timeline, SenseTime still lags somewhat behind other industry leaders.

Wang Xiaogang states, “So-called end-to-end mass production actually conceals significant technical differences. For instance, some two-stage end-to-end approaches mix in rules to varying degrees; these are not the same thing.”

Introducing a new technical route signifies a fundamental change in its entire R&D system. The capacities for data simulation, as well as cloud capabilities, will be greatly enhanced. For the intelligent driving industry, this presents an opportunity.

In fact, R-UniAD is quietly altering the cost structure of intelligent driving.

SenseTime’s intelligent driving solution does not use LiDAR for perception and relies primarily on vision. Wang Xiaogang argues that this choice of sensing hardware is partly about cost and partly because the upper limit of vision-based solutions rises as training data scales up, which can compensate for the absence of other sensors.

He noted, “When visual capabilities are relatively weak, LiDAR has to be used as a supplement.”

Today, the price of vehicles equipped with intelligent driving has already dropped below 100,000 RMB, and the trend towards universal intelligent driving is unstoppable. Wang Xiaogang believes this further demands reduced hardware costs and increased robustness.

In Conclusion

The R-UniAD technical route released by SenseTime at the 2025 GDC marks another significant breakthrough in the autonomous driving field. Through collaborative interaction with the “Awakening” world model, this end-to-end autonomous driving route not only aims to reduce data requirements by an order of magnitude but also leverages advances in reinforcement learning and simulation, paving the way for intelligent driving to potentially surpass human driving levels.

From Wang Xiaogang’s presentation, we see how R-UniAD is quietly transforming the cost structure of the intelligent driving industry through few-shot learning and diversified simulation. The debut of R-UniAD may be a sign that the industry is reaching a “DeepSeek moment.” We believe that now that many car companies have adopted the slogan of democratizing intelligent driving, SenseAuto can also secure its place in urban intelligent driving.

This article is a translation by AI of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.