Commercialization: the pressing issue for all self-driving companies, even for giants like Baidu

On November 29, Baidu held its Apollo Day technology open day online. The core members of Baidu’s self-driving team all took part, giving detailed introductions to the core technologies behind self-driving, including high-precision maps, large models, the closed data loop, and AI chips, as well as to the company’s plan to build the world’s largest fully driverless service area by 2023.

It was not until the final speech that Wang Liang, Chairman of the Technology Committee of Baidu’s Intelligent Driving Division, revealed the core topic: in 2023, Baidu will launch ANP3.0, its flagship L2+ product for navigation-assisted driving. “Today we are bringing L4 technology down to an L2+ product, and being able to quickly build a leading product like ANP3.0 from 0 to 1 is a good demonstration,” he said.

However, implementing the technology is just the first step in Baidu’s long journey towards commercializing L2+ intelligent driving.

ANP3.0: Baidu Scales Down to L2+

Based on the current disclosed information, ANP3.0’s overall plan and technical route are in line with the industry standard.

ANP3.0 will be the first in China to support complex urban road scenarios as well as seamless connectivity between highways and parking scenes.

Currently, the main models in China with similar high-level intelligent driving capabilities in a limited range of scenarios are the ARCFOX Alpha S HI version, the XPeng P5, and the upcoming LiDAR version of the WEY (Weipai) Mocha DHT-PHEV.

Regarding the hardware configuration, ANP3.0 is equipped with an 8-megapixel high-definition camera with a sensing range of up to 400 meters. It is accompanied by semi-solid-state LiDAR that generates more than one million points per second.

An 8-megapixel camera has become a standard configuration for high-level intelligent driving systems.

As for LiDAR, the Alpha S HI version, the P5, and the Mocha DHT-PHEV LiDAR version are equipped, respectively, with three 96-line Huawei hybrid solid-state LiDARs, two Livox HAP (144-line) semi-solid-state LiDARs, and two RoboSense M1P (125-line) semi-solid-state LiDARs. Considering that the Jidu ROBO-01 is equipped with the Hesai AT128 (128-line) semi-solid-state LiDAR, it is possible that Baidu’s ANP3.0 uses the same product.

For the computing platform, ANP3.0 is equipped with two NVIDIA Orin-X chips, with a combined AI computing power of 500 TOPS, higher than its “competitors”.

The three models above use the Huawei MDC810, NVIDIA Xavier, and a Qualcomm platform (Snapdragon 8540 plus the 7nm Snapdragon 9000), with computing power of 400 TOPS, 30 TOPS, and 360 TOPS, respectively.

Interestingly, although the Kunlun chip has already been fully adapted to Baidu’s RoboTaxi system and is in use on real roads, ANP3.0 still uses NVIDIA Orin rather than adopting the Kunlun chip to strengthen synergy across Baidu’s own products.

Regarding the algorithm model, ANP3.0 is primarily vision-based, with LiDAR added for safety redundancy.

Apollo Lite++, the second-generation pure-vision perception system carried by ANP3.0, uses a Transformer to convert front-view features into a bird’s-eye view (BEV). It fuses the cameras’ observations at the feature level and directly outputs three-dimensional perception results, and it learns motion estimation through temporal feature fusion.
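The view transformation described here is commonly built on Transformer cross-attention, in which each BEV grid cell issues a query and gathers information from image feature tokens. The following is only a toy sketch of that general mechanism, not Baidu’s Apollo Lite++ implementation: the function names, the single attention head, and the tiny hand-picked feature vectors are all assumptions for illustration, and the learned projections and camera-geometry encodings that a real model would use are omitted.

```python
from math import exp, sqrt


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(bev_queries, image_keys, image_values):
    """For each BEV cell query, return a weighted mix of image feature values."""
    dim = len(image_keys[0])
    n_out = len(image_values[0])
    bev_features = []
    for query in bev_queries:
        # Scaled dot-product similarity between this BEV cell and every image token.
        scores = [
            sum(q * k for q, k in zip(query, key)) / sqrt(dim)
            for key in image_keys
        ]
        weights = softmax(scores)
        # The BEV feature is the attention-weighted sum of the image values.
        bev_features.append([
            sum(w * value[j] for w, value in zip(weights, image_values))
            for j in range(n_out)
        ])
    return bev_features


# Toy example: two BEV cells attend over three image-feature tokens.
bev_queries = [[10.0, 0.0], [0.0, 10.0]]
image_keys = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
image_values = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
bev_features = cross_attention(bev_queries, image_keys, image_values)
```

In this toy setup, each BEV cell ends up dominated by the image token whose key best matches its query, which is the sense in which features are “moved” from the image view into the BEV grid.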

“ANP3.0’s vision and LiDAR systems operate independently and are loosely coupled. It is probably the only environment perception solution in China that can truly achieve redundancy,” said Wang Liang in his speech.

It is worth noting that as high-end intelligent driving systems advance toward city navigation, perception redundancy has become a common choice for most players. There are many fusion schemes, including mid-level (feature-level) fusion and even early fusion. The late-fusion scheme adopted by Baidu seems to go “against the trend”, but it may also indicate the company’s confidence in its own visual perception capabilities.
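To make the distinction concrete: in late (object-level) fusion, each sensor pipeline produces its own object list, and the lists are merged only at the end. The sketch below illustrates that general idea under simple assumptions. It is not Baidu’s method; the `Detection` fields, the 2-meter matching gate, and the greedy nearest-neighbor matching are hypothetical choices made for brevity.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    x: float      # longitudinal position in meters (vehicle frame)
    y: float      # lateral position in meters
    score: float  # detector confidence in [0, 1]
    source: str   # "camera", "lidar", or "fused"


def late_fuse(camera, lidar, gate_m=2.0):
    """Merge two independently produced object lists (greedy matching)."""
    fused = []
    unmatched_lidar = list(lidar)
    for cam in camera:
        # Closest remaining lidar object to this camera object, if any.
        best = min(
            unmatched_lidar,
            key=lambda det: hypot(cam.x - det.x, cam.y - det.y),
            default=None,
        )
        if best is not None and hypot(cam.x - best.x, cam.y - best.y) <= gate_m:
            unmatched_lidar.remove(best)
            total = cam.score + best.score
            w_cam = cam.score / total if total > 0 else 0.5
            fused.append(Detection(
                x=w_cam * cam.x + (1 - w_cam) * best.x,
                y=w_cam * cam.y + (1 - w_cam) * best.y,
                score=max(cam.score, best.score),
                source="fused",
            ))
        else:
            # Camera-only object: kept, since the other sensor may have missed it.
            fused.append(cam)
    # Lidar-only objects are kept for the same reason.
    fused.extend(unmatched_lidar)
    return fused


camera_objects = [Detection(10.0, 0.0, 0.8, "camera"), Detection(30.0, 2.0, 0.6, "camera")]
lidar_objects = [Detection(10.4, 0.0, 0.8, "lidar"), Detection(50.0, -1.0, 0.7, "lidar")]
result = late_fuse(camera_objects, lidar_objects)
```

Because neither pipeline depends on the other’s intermediate features, an object seen by only one sensor still reaches the output, which is the redundancy property the late-fusion design trades against the tighter integration of feature-level fusion.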

Not long ago, another L4 player, QCraft, launched a mass-production L2+ product. It was the first in the industry to deploy, on a mass-production platform, a large model (OmniNet) that fuses temporal multimodal features, using a single neural network to output multi-task results from vision, LiDAR, and millimeter-wave radar in both BEV space and image space.

The Confidence of ANP3.0

ANP3.0 is also very characteristic of Baidu.

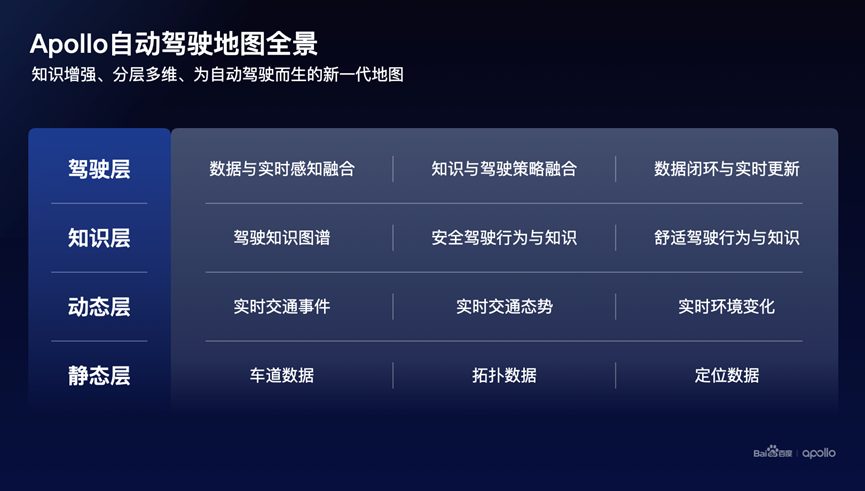

“Despite the many ‘perception-heavy, map-light’ solutions being promoted in the industry, high-precision maps are still necessary today for city-level advanced driver-assistance products to deliver a safe and pleasant driving experience,” said Wang Liang.

This view rests, of course, on Baidu’s own strength in high-precision maps. To address the generalization and freshness problems of high-precision maps, Baidu substantially reduces the cost of map production by making its on-board algorithms more fault tolerant.

For example, by optimizing the planning and control algorithms, upgrading the online point cloud stitching algorithm, and raising the tolerance for map accuracy errors, each road can be mapped from a single collection pass, which greatly reduces the field-work cost of map production.

Localization algorithms reduce their dependence on point clouds and feature points, relying only on the landmark positioning layer to support ANP3.0’s high-precision self-localization on city roads.

In terms of the core data loop, Wang Liang said that, drawing on Baidu’s accumulation in AI, cloud computing, and autonomous driving, the company has built a software-as-a-service (SaaS) data loop product for internal and external users. It manages the full lifecycle of data mining, labeling, model training, and simulation verification, so that intelligent driving capabilities are driven by data.

At this technical conference, Baidu proposed a data loop design approach centered on high refinement and high digestion. High refinement is achieved through efficient data mining and automated annotation with small-scale and large-scale vehicle-to-cloud collaboration. High digestion is achieved through centralized, end-to-end integration of data, models, and metrics.
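The four lifecycle stages named above (data mining, labeling, model training, simulation verification) can be pictured as a small pipeline. The sketch below is purely illustrative and says nothing about how Baidu’s SaaS product is built: every function, field name, and threshold is a hypothetical stand-in, with “training” reduced to recording which scenarios a model has seen.

```python
def mine(frames, threshold=0.5):
    """Data mining: keep frames where the on-board model was unsure."""
    return [f for f in frames if f["confidence"] < threshold]


def label(frames):
    """Labeling: attach a label (stand-in for automated annotation)."""
    return [{**f, "label": f["truth"]} for f in frames]


def train(model, labeled):
    """Training: stand-in that records which scenarios the model has seen."""
    return {
        "version": model["version"] + 1,
        "seen": model["seen"] | {f["scenario"] for f in labeled},
    }


def verify(model, required):
    """Simulation verification: pass only if all required scenarios are covered."""
    return required <= model["seen"]


def run_loop(model, frames, required):
    """One pass of the loop; the new model is released only if verification passes."""
    candidate = train(model, label(mine(frames)))
    return candidate if verify(candidate, required) else model


base_model = {"version": 1, "seen": set()}
frames = [
    {"scenario": "unprotected_left", "confidence": 0.3, "truth": "car"},
    {"scenario": "highway", "confidence": 0.9, "truth": "truck"},
]
released = run_loop(base_model, frames, {"unprotected_left"})
held_back = run_loop(base_model, frames, {"highway"})
```

In the second call the candidate is rejected: the highway frame was high-confidence, so it was never mined, and the required scenario stays uncovered. The verification stage is what closes the loop, since only verified models go back out to produce the next round of data.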

Furthermore, Baidu hopes that ANP3.0 data can feed back to L4 after 2023.

In the past two years, Baidu has unified the overall technical architecture of L4 and L2+, unified the vision BEV scheme, and unified the lightweight high-precision map. It has also connected the data on both sides, fully sharing the data loop, simulation infrastructure, and supporting tool chains.

Commercialization Path

At the technology day event, Baidu fully flexed its “muscles” in autonomous driving technology and systems, but stepping down from L4 to L2+ takes more than “good technology”.

Currently, aside from Jidu and WM Motor, in which it has invested, Baidu’s only autonomous driving commercialization project is the previously rumored one with BYD.

Earlier this year, media reports stated that BYD had chosen Baidu as its intelligent driving supplier. According to those reports, Baidu would provide ANP intelligent driving products and human-machine collaborative driving maps to BYD, its intelligent driving team had already joined BYD for joint development, and the jointly developed models would reach mass production soon.

However, as 2022 comes to an end, Baidu and BYD have yet to officially announce the collaboration.

According to the “Electric Vehicle Observer,” Baidu did indeed have relatively in-depth contact with BYD on their intelligent driving system collaboration, but it is unknown how the project has progressed. “BYD’s SOP requirements are tight, and supplier selection is also cautious.”

“Bring over L4 technology and build a new, professional, local Tier 1 business,” Wang Liang said in a media interview.

It is undeniable that although Baidu has a complete autonomous driving technology system, it is still a “novice” in the mass production market and lacks experience there.

For a supplier (the “Party B” in a contract), mass production experience is sometimes more important than technology.

“We have encountered competitors with very strong algorithmic capabilities, but they did not make the final head-to-head round simply because they didn’t do the basics well,” said a source at a Tier 1 intelligent driving supplier. “Automotive-grade capabilities such as the overall system, platform, functional safety, information security, and real-time performance are the thresholds that automakers value more. Only after clearing these thresholds can a supplier start to focus on differentiation and customization and meet whatever demands arise.”

In 2023, Jidu’s first product will be delivered. The car-making skills Baidu learned from Jidu may become the deciding factor for whether its L2+ technology capabilities can truly penetrate the market.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.