At 8 p.m. last night (October 24th), XPeng Motors (XPeng) held its 1024 Tech Day as scheduled. The event covered four core themes:

- Intelligent driving

- Intelligent interaction

- Intelligent robotics

- Flying cars

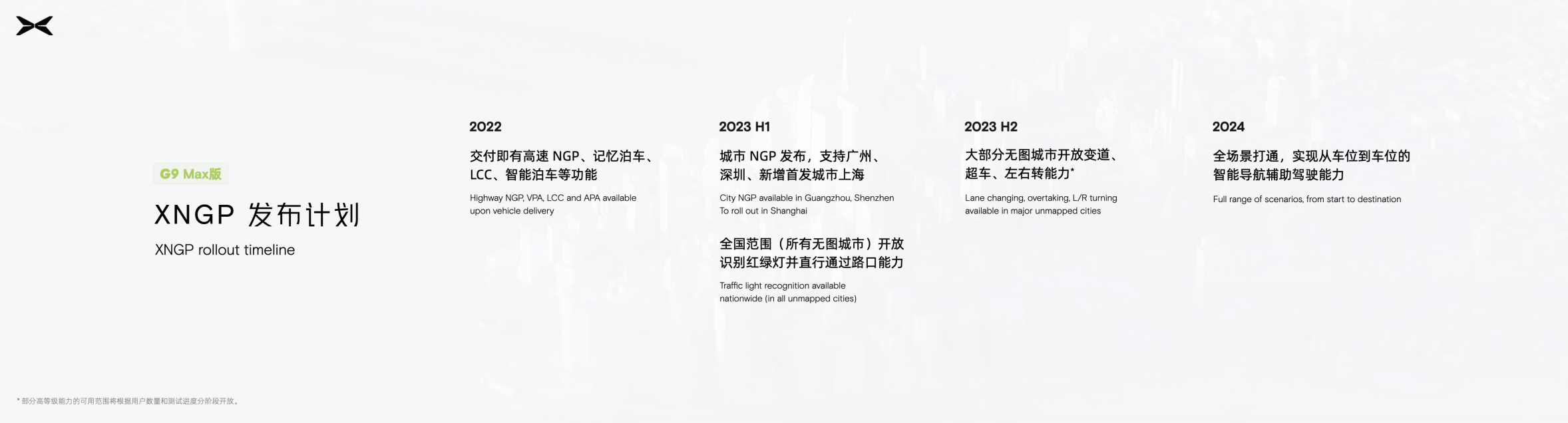

What we care about most is “intelligent driving”, and Dr. Wu Xinzhou laid out the XNGP release timeline directly at the event.

G9 Max version XNGP release plan:

- In 2022, high-speed NGP, memory parking, and other functions will be available at delivery.

- In the first half of 2023, city NGP will be released, adding Shanghai to the supported cities of Guangzhou and Shenzhen, and the system will be able to recognize traffic lights and drive straight through intersections nationwide.

- In the second half of 2023, the system will be able to change lanes, overtake, and turn left and right in most cities without high-precision maps.

- In 2024, full-scenario coverage will be achieved, providing intelligent navigation assistance from parking space to parking space.

P5 E version & P7 E version XPILOT release plan:

- Between 2022 and the first half of 2023, traffic light recognition and lane-level navigation will be enabled.

- In the second half of 2023, the high-speed NGP strategy will be optimized (speed limit adjustment, hands-off detection, etc.).

The biggest highlight is that in the first half of 2023, city NGP will be pushed to the G9 Max and will support Shanghai. Compared with fellow EV startups NIO and Li Auto, XPeng is at least a year ahead on this timeline. After watching the event, I really felt like ordering a G9.

With the next iteration plan clear, let’s go back to the launch event and look at what XPeng will rely on to deliver these updates.

It’s Hard, But We Still Need to Do It

At this year’s launch event, Dr. Wu Xinzhou opened with a question: why take on urban scenarios that are more than 100 times harder to build for?

Coincidentally, in September we asked our community whether XPeng should keep improving the freeway navigation system or introduce the urban navigation system earlier.

Of the 72 people who voted, 65% favored continuing to improve the freeway navigation system and 35% supported introducing the urban navigation system earlier.

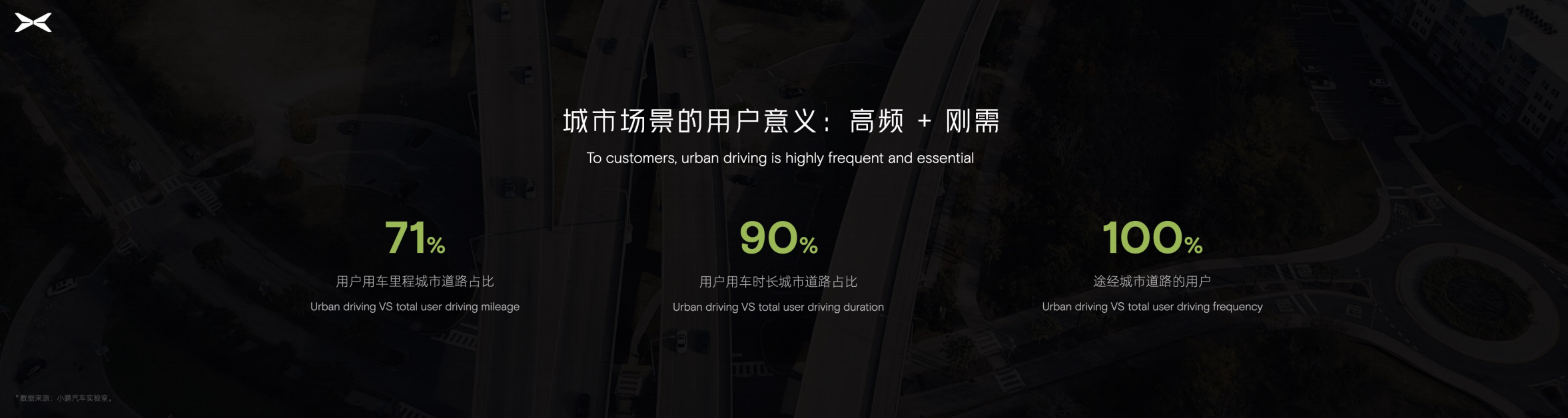

Dr. Wu Xinzhou presented some statistics during the event:

- City roads account for 71% of users’ total driving mileage;

- City roads account for 90% of users’ total driving time;

- Every user drives on city roads.

These are the statistics for the user driving scene in general.

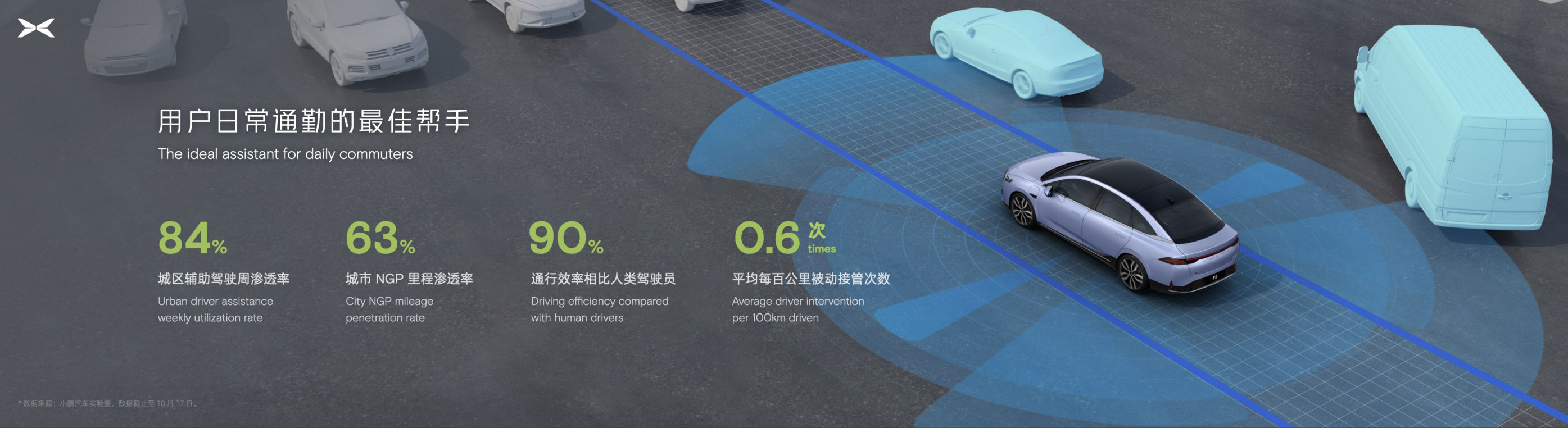

- Urban NGP’s penetration rate among users in supported urban areas is 84%;

- Urban NGP’s mileage penetration rate is 63%;

- Its traffic efficiency is 90% of a human driver’s;

- It averages 0.6 passive takeovers per 100 kilometers.

These figures come from the XPeng P5 after its Urban NGP rollout. The mileage figure means that out of every 100 kilometers of road where City NGP can be used, the system is engaged for 63 kilometers.
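To make the two penetration figures concrete, here is a minimal Python sketch of how such fleet metrics could be computed. It assumes, following the article’s reading, that mileage penetration is NGP-engaged kilometers divided by kilometers where City NGP was available, and that passive takeovers are normalized per 100 km of NGP-engaged driving. XPeng has not published its exact definitions, and the `Trip` records are made-up numbers chosen only to reproduce the 63% and 0.6 figures.

```python
from dataclasses import dataclass


@dataclass
class Trip:
    ngp_available_km: float  # km of road on which City NGP could have been used
    ngp_engaged_km: float    # km actually driven with City NGP engaged
    passive_takeovers: int   # takeovers forced by the system, not chosen by the driver


def mileage_penetration(trips):
    """Share of NGP-eligible kilometers actually driven with NGP engaged."""
    available = sum(t.ngp_available_km for t in trips)
    engaged = sum(t.ngp_engaged_km for t in trips)
    return engaged / available


def takeovers_per_100km(trips):
    """Passive takeovers normalized to 100 km of NGP-engaged driving (assumed basis)."""
    engaged = sum(t.ngp_engaged_km for t in trips)
    takeovers = sum(t.passive_takeovers for t in trips)
    return takeovers / engaged * 100


# Hypothetical fleet aggregates, not real XPeng data.
trips = [Trip(30_000.0, 18_900.0, 113), Trip(20_000.0, 12_600.0, 76)]
print(f"{mileage_penetration(trips):.0%}")   # 63%
print(f"{takeovers_per_100km(trips):.1f}")   # 0.6
```

Normalizing takeovers by engaged distance rather than total distance is a design choice: it measures how often the system fails while it is actually driving, independent of how often owners switch it on.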

Clearly, the urban scenario is a high-frequency, essential one for users. So why did most of us want to keep refining the high-speed navigation function instead?

Judging from most users’ comments, the core reason is a preconceived stance: the urban scenario is so complex that city navigation is bound to be hard to use with current technology, so it is better to refine high-speed navigation.

Under this logic, refining high-speed navigation seems reasonable. But the data above shows that the issue is not a lack of need; it is the belief that urban navigation cannot be done.

Whether it is Tesla or XPeng Motors, when a company decides to tackle the urban scenario, its goal is undoubtedly a “user-friendly urban navigation” function.

It is precisely this logic of working backward from the end goal that lets them face the difficulties squarely and work hard to overcome them.

Given a choice between an easy road and a difficult but correct one, Tesla and XPeng have chosen the difficult but correct road.

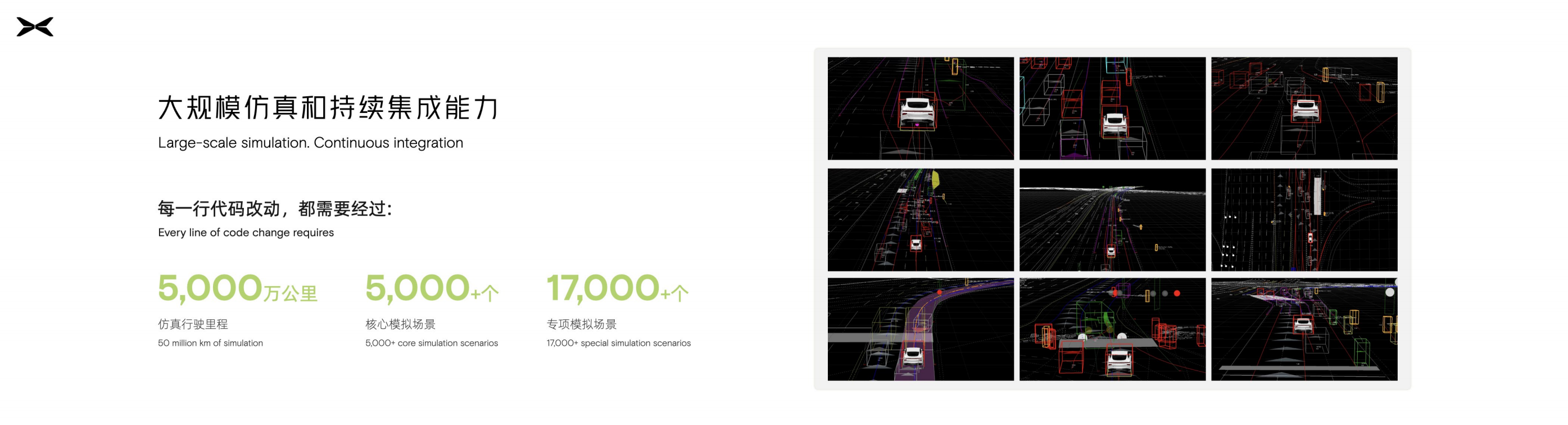

As for how much harder it is, Dr. Wu Xinzhou gave a set of relative figures:

- Urban NGP has six times the code volume of High-speed NGP;

- Urban NGP uses four times as many perception models as High-speed NGP;

- Urban NGP has 88 times as much prediction/planning/control-related code as High-speed NGP.

And these comparisons still assume high-precision map support. XPeng has also stated clearly that it aims to deliver lane changing, overtaking, and left and right turns in cities without maps in the second half of next year.

To overcome the many tricky urban scenarios, XPeng starts at the source: “perception”.

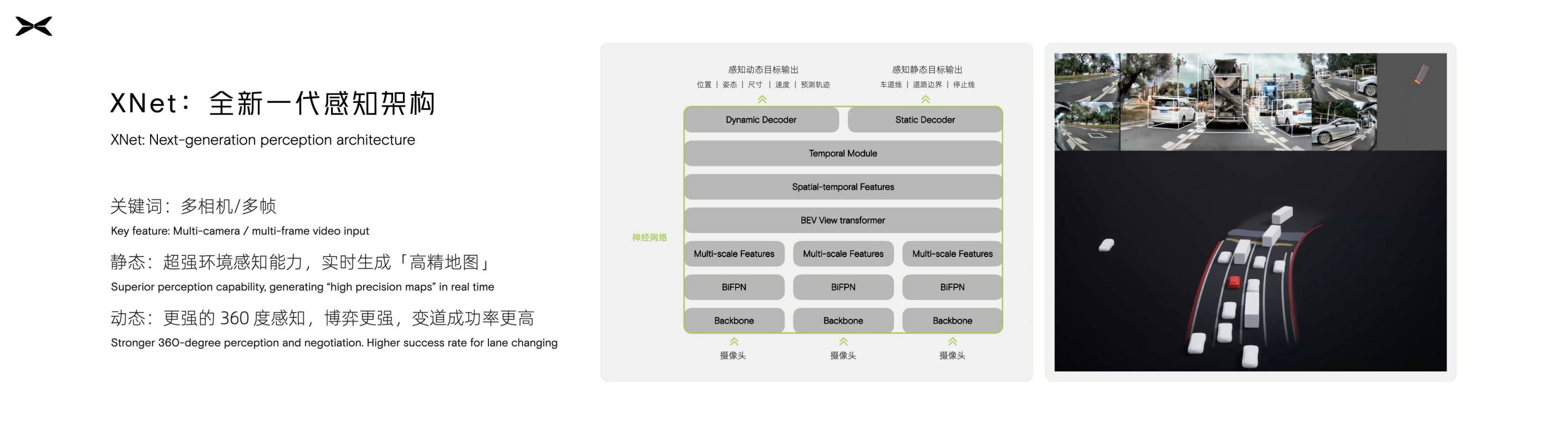

At the event, Wu Xinzhou revealed for the first time that XPeng will adopt XNet, its new-generation perception architecture, on the G9.

Similar to Tesla’s approach, XNet feeds data from the surround-view cameras into a Transformer neural network, performs multi-frame temporal pre-fusion, and outputs 4D information (3D plus time) for dynamic objects and 3D information for static objects in a BEV (bird’s-eye view) perspective.

Put simply, the old framework was like asking you to perceive the world through several comic-strip-style pictures taken from different viewpoints, with only a fuzzy sense of how their positions relate, and then to judge the speed of surrounding vehicles and predict their trajectories from them.

Even an experienced driver would find it very difficult to make judgments from perception results like these.

Under the new framework, the system directly outputs a god’s-eye view that includes dynamic information about surrounding vehicles.

One benefit is a large improvement in the accuracy of 3D information about static objects. In Wu Xinzhou’s words: “This perception architecture has the ability to generate high-precision maps in real time.”

More accurate 4D information about dynamic objects also means the system can change lanes more capably and negotiate better with other road users.
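XPeng has not published XNet’s internals, so the following is only a toy Python illustration of the general technique the description points to: a BEV query attending, via scaled dot-product cross-attention, over feature tokens from several cameras and several frames, producing one fused feature per BEV cell. The 2-D feature vectors, camera names, and `bev_query` are all hypothetical; real systems use learned projections, position encodings, and hundreds of dimensions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross_attention(query, keys, values):
    """Scaled dot-product attention: one BEV query attends over all camera tokens."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    fused = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return fused, weights


# Hypothetical (key, value) tokens from 2 cameras x 2 frames (t-1 and t).
# Real keys would carry learned camera and time encodings; these are hand-picked.
tokens = {
    ("front", "t-1"): ([1.0, 0.0], [0.2, 0.1]),
    ("front", "t"): ([1.0, 0.2], [0.4, 0.1]),
    ("left", "t-1"): ([0.0, 1.0], [0.9, 0.9]),
    ("left", "t"): ([0.1, 1.0], [0.8, 0.9]),
}
bev_query = [2.0, 0.0]  # hypothetical query for a BEV cell ahead of the car

keys = [k for k, _ in tokens.values()]
values = [v for _, v in tokens.values()]
fused, weights = cross_attention(bev_query, keys, values)
for name, w in zip(tokens, weights):
    print(name, round(w, 3))
```

For this cell, the front-camera tokens from both frames receive most of the weight, which is the intuition behind letting the network, rather than hand-written geometry rules, decide which camera and which moment in time to trust.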

Of course, such a powerful large-scale neural network model does not come for free.

Dr. Wu Xinzhou revealed at the event that XNet is built on the latest Transformer network architecture. Training and optimizing this large model for smooth deployment requires 500,000 to 1 million short videos, and deploying it directly without optimization would require more computing power than an Orin-X chip provides.

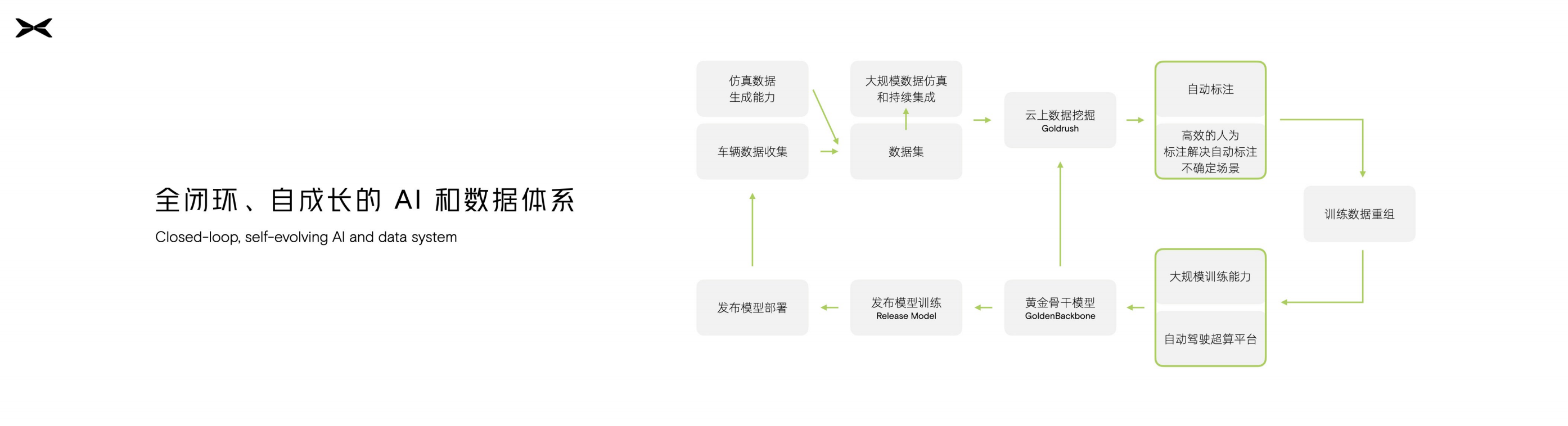

However, these videos contain billions of dynamic objects. Annotating them manually would take at least 2,000 people a full year.

To solve this problem, XPeng’s autonomous driving team developed an “automatic annotation system” that compresses the annotation time to 16.7 days.
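The two figures quoted above imply a large speedup, but the size depends on what is being compared. This short Python calculation assumes “2,000 people for a year” means 2,000 × 365 person-days of effort and compares it with 16.7 days of automatic annotation; both the effort ratio and the plain wall-clock ratio are shown.

```python
# Figures quoted at the event; reading "2,000 people a year" as
# 2,000 x 365 person-days is our assumption.
MANUAL_PEOPLE = 2_000
MANUAL_DAYS = 365
AUTO_DAYS = 16.7

manual_person_days = MANUAL_PEOPLE * MANUAL_DAYS
# Effort ratio: human person-days replaced per day of automatic annotation.
effort_ratio = manual_person_days / AUTO_DAYS
# Wall-clock ratio: how much sooner the job finishes, ignoring team size.
wall_clock_ratio = MANUAL_DAYS / AUTO_DAYS
print(f"{effort_ratio:,.0f}x effort, {wall_clock_ratio:.1f}x wall-clock")
# 43,713x effort, 21.9x wall-clock
```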

Then comes the training.

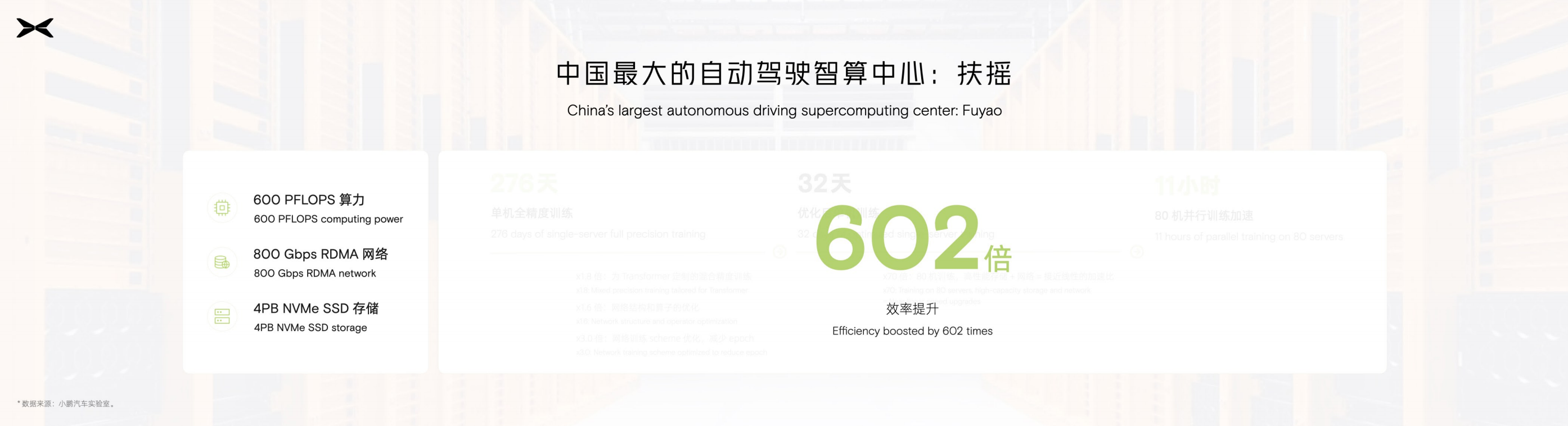

In August of this year, XPeng announced the completion of “Fuyao,” China’s largest autonomous driving intelligent computing center, in Ulanqab. Used for training autonomous driving models, it reaches 600 PFLOPS of computing power and, according to XPeng, speeds up training by 602 times compared with before.

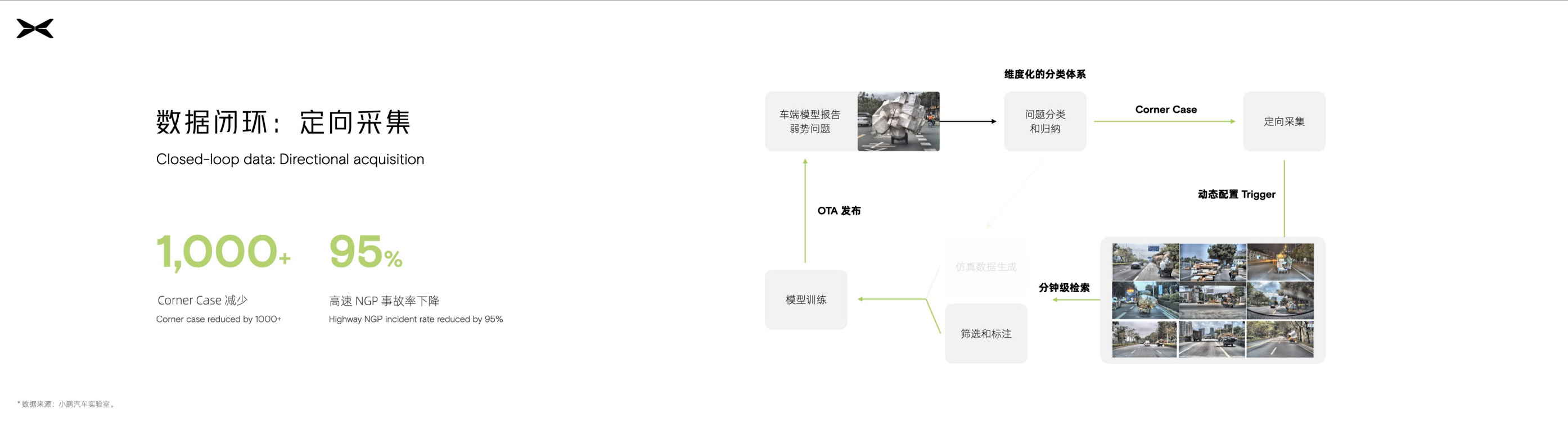

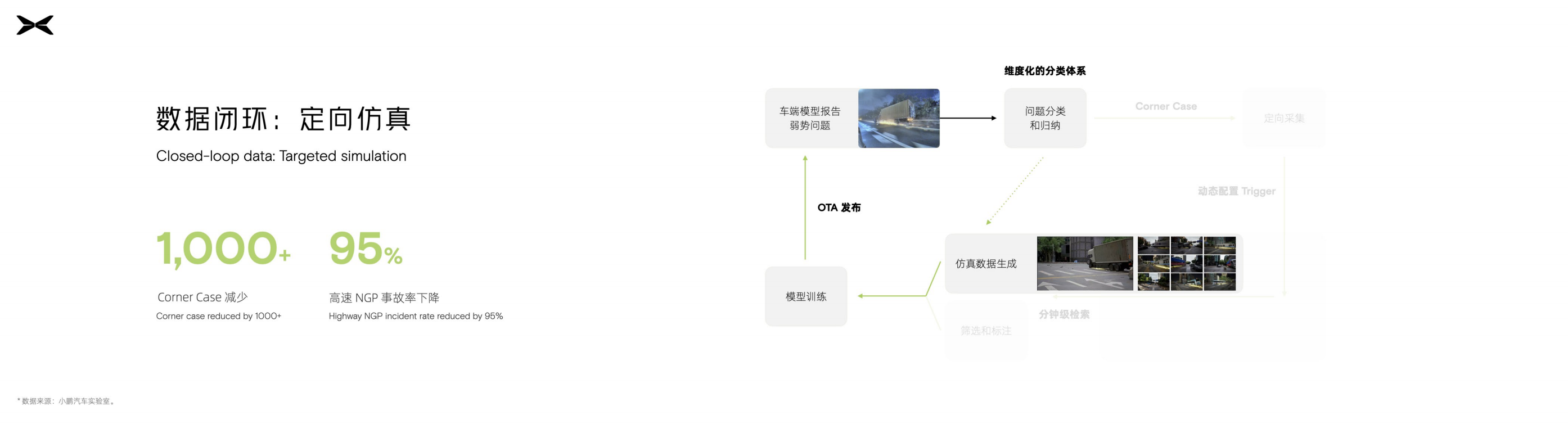

Next, Xpeng will continue to “collect,” “annotate,” “train,” and “deploy” to improve this model.

According to Dr. Wu Xinzhou, nearly 100,000 XPeng customer cars are currently capable of data collection, and more than 300 trigger signals have been set on the vehicle side. The system uses them to decide which corner cases are useful and uploads only those. The collected data covers dynamic and static objects outside the car as well as full-stack data.
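The trigger mechanism can be pictured as a set of predicates evaluated on the car, with a clip uploaded only when at least one fires. The Python sketch below shows that pattern under stated assumptions: the three triggers, the `Frame` fields, and the 0.5 threshold are hypothetical stand-ins, since XPeng has not published its 300+ trigger definitions.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Frame:
    driver_takeover: bool           # driver overrode the assistance system
    hard_brake: bool                # sudden deceleration event
    perception_disagreement: float  # hypothetical mismatch score between sensors/models


Trigger = Callable[[Frame], bool]

# Hypothetical triggers; the real ones are not public.
TRIGGERS: dict[str, Trigger] = {
    "driver_takeover": lambda f: f.driver_takeover,
    "hard_brake": lambda f: f.hard_brake,
    "perception_mismatch": lambda f: f.perception_disagreement > 0.5,
}


def fired_triggers(frame: Frame) -> list[str]:
    """Names of all triggers that fire on this frame."""
    return [name for name, check in TRIGGERS.items() if check(frame)]


def select_for_upload(clip: list[Frame]) -> dict[int, list[str]]:
    """Map frame index -> fired trigger names; frames with no hits are not uploaded."""
    hits = {}
    for i, frame in enumerate(clip):
        names = fired_triggers(frame)
        if names:
            hits[i] = names
    return hits


clip = [
    Frame(False, False, 0.1),
    Frame(False, True, 0.2),
    Frame(True, False, 0.7),
]
print(select_for_upload(clip))
# {1: ['hard_brake'], 2: ['driver_takeover', 'perception_mismatch']}
```

Filtering on the vehicle keeps upload bandwidth and storage focused on rare, informative situations instead of hours of uneventful driving.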

Lastly, XPeng introduced a new concept, “XNGP: Full-scenario Assisted Driving,” which is more capable and covers more scenarios than the current XPilot. XPeng’s goal is to bring the NGP experience in cities without maps as close as possible to that in areas with maps.

Returning to the question of “whether to keep refining high-speed navigation or launch city navigation as soon as possible,” the answer is now obvious. High-speed navigation assistance can be refined to a fairly good state on the current technical route, but that route is destined never to deliver city navigation assistance.

To use building a house as an analogy: if the goal is a 100-story skyscraper, XPeng is rebuilding a foundation that can support 100 floors.

Meanwhile, car companies that are still refining high-speed navigation assistance are building on a foundation that can support only three floors. They have decorated those three floors luxuriously, but however good the decoration is, they will still have to rebuild from the foundation.

“Do What You Say”

The second focus of this year’s 1024 event is “Full-scenario Voice Control.”

XPeng first proposed Full-scenario Voice Control in 2020, with the goal of making voice an interaction method as effective as physical buttons. That year, the system achieved “what you see, you can say,” “continuous dialogue,” “multi-zone dialogue,” and other functions. These features took in-car voice commands from unusable to usable, and after release the system was imitated by many car companies.

This year, XPeng wants to take voice commands from “usable” to “easy to use.”

We tested this system extensively during earlier test drives, and our conclusion is clear: it is currently the most capable in-car voice system and will undoubtedly become the industry benchmark.

Here are the specific highlights of this system:

Wake-up Free

Whether the voice assistant is in a car or a smart home, the biggest barrier for people with social anxiety is saying the wake word. With a car full of people, or friends over at your house, calling out to the voice assistant always feels awkward.

But can’t a voice assistant respond without being woken up first?

It is possible, but difficult.

In the case of a wake-up word, the system can clearly know that the next thing you say is intended for it.

Without a wake word, however, the system must work out which of the ten things you say are meant for the other people in the car and which are meant for the assistant. This requires not only excellent speech recognition but also human-like semantic judgment.
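To show what “deciding whether a sentence is meant for the assistant” involves, here is a deliberately simple rule-based Python sketch. It is not how XPeng does it: production systems use trained models that also weigh acoustic cues such as which seat the speaker is in. The vocabularies, weights, and `threshold` are hypothetical.

```python
import re

# Hypothetical vocabularies; a real system would learn these signals from data.
ACTIONS = {"open", "close", "turn", "set", "play", "navigate", "raise", "lower"}
TARGETS = {"window", "sunroof", "ac", "temperature", "music", "seat", "volume"}
SOCIAL_CUES = {"you", "we", "did", "think", "remember"}


def directed_at_assistant(utterance: str, threshold: int = 2) -> bool:
    """Toy intent check: does this sentence look like a command for the car?"""
    words = re.findall(r"[a-z]+", utterance.lower())
    score = 0
    if words and words[0] in ACTIONS:
        score += 2                                    # imperative opening, like a command
    score += sum(w in TARGETS for w in words)         # mentions a controllable device
    score -= sum(w in SOCIAL_CUES for w in words)     # sounds like conversation
    return score >= threshold


for s in ["Open the window", "Did you remember to close the window?"]:
    print(s, "->", directed_at_assistant(s))
```

Even this toy version shows why the problem is hard: “close the window” appears in both sentences, and only the surrounding context separates a command from small talk.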

On the XPeng G9, wake-up-free voice commands work from all four seats.

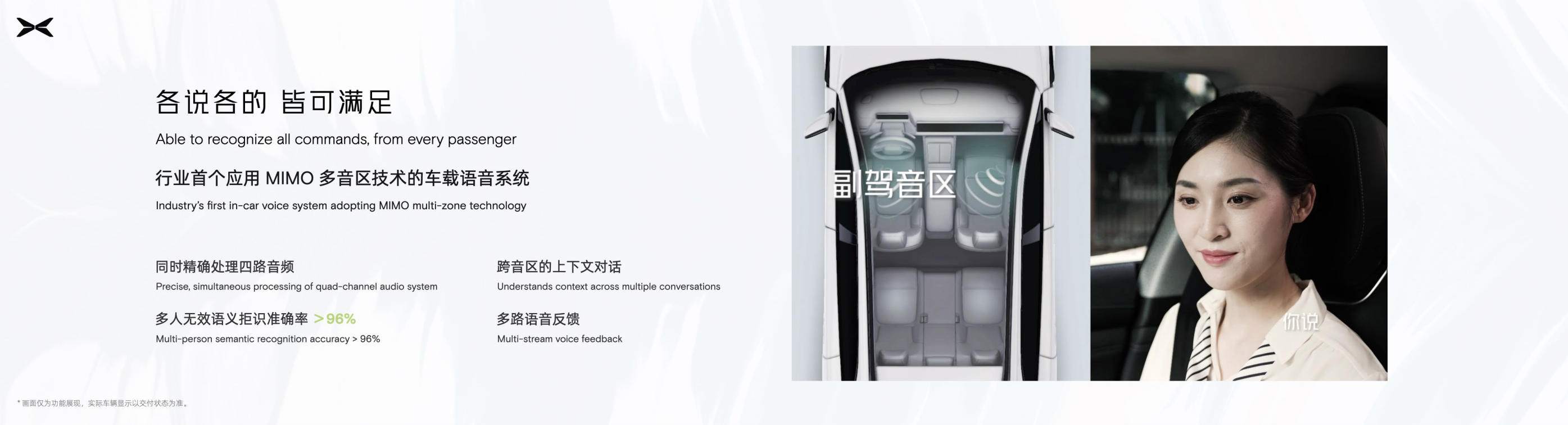

Simultaneous Multi-Zone Dialogue

In the 2020 Li Auto ONE, we experienced multi-zone voice recognition for the first time. However, due to limits in computing power and technology, its multi-zone system could only achieve 4-way recognition with 1-way execution, meaning that only one person in the car could use voice commands at a time.

In the G9, by contrast, XPeng has achieved 4-way recognition with 4-way execution. Four people in the car can issue commands at the same time, and the system executes all four simultaneously, as if the assistant had four avatars.
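The difference between 1-way and 4-way execution is essentially serial versus concurrent handling. This Python `asyncio` sketch contrasts the two; the `execute` coroutine is a hypothetical stand-in for a real vehicle-service call, and its 10 ms delay is arbitrary.

```python
import asyncio
import time


async def execute(zone: str, command: str) -> str:
    # Hypothetical stand-in for a real vehicle-service call.
    await asyncio.sleep(0.01)
    return f"{zone}: {command} done"


async def handle_one_way(commands: dict) -> list:
    """1-way execution: commands are processed strictly one after another."""
    results = []
    for zone, command in commands.items():
        results.append(await execute(zone, command))
    return results


async def handle_four_way(commands: dict) -> list:
    """4-way execution: every seat zone's command runs concurrently."""
    return list(await asyncio.gather(*(execute(z, c) for z, c in commands.items())))


commands = {
    "driver": "navigate home",
    "front passenger": "open window",
    "rear left": "play music",
    "rear right": "seat heating on",
}
for handler in (handle_one_way, handle_four_way):
    start = time.perf_counter()
    results = asyncio.run(handler(commands))
    print(handler.__name__, f"{time.perf_counter() - start:.3f}s", results)
```

`asyncio.gather` returns results in the order the coroutines were passed, so each answer can still be routed back to the seat that asked.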

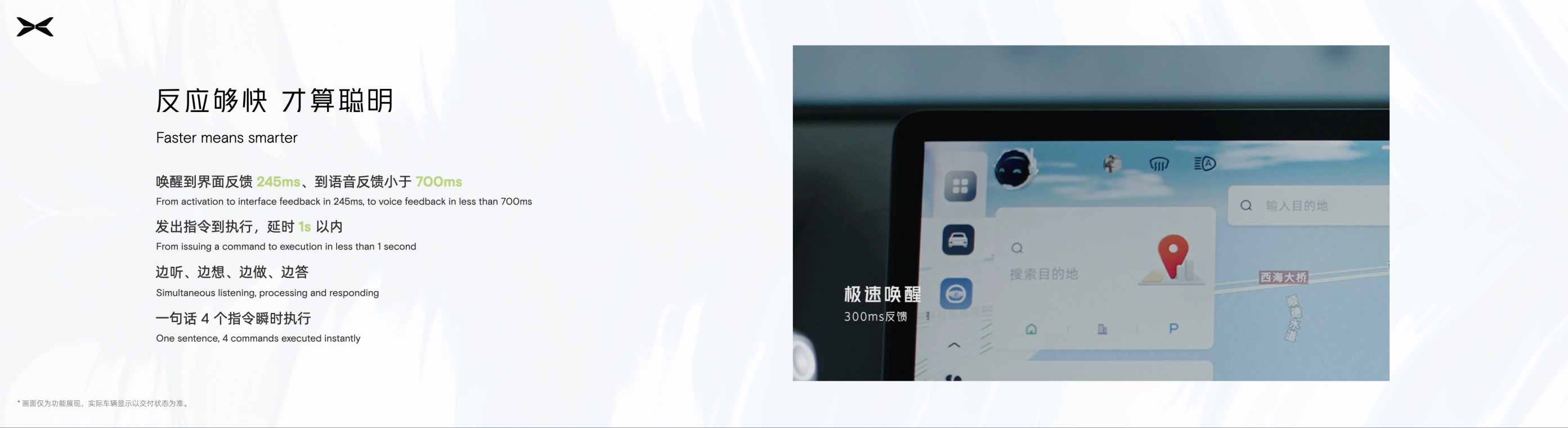

Fast

According to the official data provided by XPeng, it takes 245ms from wake-up to interface feedback and 700ms from wake-up to voice feedback.

With traditional voice assistants, passengers must finish speaking before the system can recognize, understand, and then execute the command.

XPeng instead takes an approach closer to how humans listen: the system recognizes and understands the text and queries data in real time while the passenger is still speaking, so understanding is already complete by the time they finish. XPeng calls this ‘streaming comprehension’.

This makes the interaction naturally much more efficient than the previous method.
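A minimal Python sketch of the streaming idea, assuming speech recognition delivers words in chunks: intent and slots are updated on every partial result, and a data lookup is started as soon as a slot word arrives, so nothing is left to do at end of speech. The keyword table and the “prefetch” list are hypothetical simplifications of a real NLU model and POI search.

```python
from __future__ import annotations

from typing import Iterable, Optional

# Hypothetical keyword -> intent table; a real system would use a neural NLU model.
INTENT_KEYWORDS = {"navigate": "navigation", "play": "media", "temperature": "climate"}
STOPWORDS = {"to", "the", "a", "please"}


class StreamingUnderstanding:
    def __init__(self) -> None:
        self.intent: Optional[str] = None
        self.slot_words: list[str] = []
        self.prefetched: list[str] = []  # lookups issued while the user is still talking

    def on_partial(self, words: Iterable[str]) -> None:
        """Called for each new ASR chunk, before the utterance has ended."""
        for w in words:
            w = w.lower()
            if self.intent is None and w in INTENT_KEYWORDS:
                self.intent = INTENT_KEYWORDS[w]
            elif self.intent is not None and w not in STOPWORDS:
                self.slot_words.append(w)
                # Start a (hypothetical) data query on the partial slot right away.
                self.prefetched.append(" ".join(self.slot_words))

    def on_end_of_speech(self) -> tuple[Optional[str], str]:
        # Nothing left to parse: the result was built incrementally.
        return self.intent, " ".join(self.slot_words)


su = StreamingUnderstanding()
for chunk in (["Navigate", "to"], ["the", "Canton"], ["Tower"]):
    su.on_partial(chunk)
print(su.prefetched)          # ['canton', 'canton tower']
print(su.on_end_of_speech())  # ('navigation', 'canton tower')
```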

Usable without internet

Existing voice assistants send all speech recognition to the cloud, so in places with poor network reception, such as highways, tunnels, and underground car parks, voice control becomes completely unusable.

Using the greater computing power of the Qualcomm 8155 chip, XPeng has implemented on-device (edge) computing on the G9, so user instructions can be recognized and processed by the car’s own hardware.
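The routing logic behind offline voice control can be sketched as follows. The policy here is our assumption, not XPeng’s documented design: recognition always runs on the in-car chip, vehicle-control commands execute locally, and only open-domain requests need the cloud. `VEHICLE_COMMANDS` and `fake_local_asr` are hypothetical.

```python
from typing import Callable

# Hypothetical split: vehicle-control commands never need the network.
VEHICLE_COMMANDS = ("open", "close", "turn on", "turn off", "set")


def is_vehicle_command(text: str) -> bool:
    return text.lower().startswith(VEHICLE_COMMANDS)


def handle(audio: bytes, local_asr: Callable[[bytes], str], cloud_available: bool) -> str:
    text = local_asr(audio)  # recognition always runs on the in-car chip
    if is_vehicle_command(text):
        return f"executed on-device: {text}"
    if cloud_available:
        return f"forwarded to cloud: {text}"  # open-domain requests, e.g. search
    return f"offline, cannot answer: {text}"


def fake_local_asr(audio: bytes) -> str:
    # Stand-in for an on-device speech recognizer (hypothetical).
    return audio.decode("utf-8")


print(handle(b"Open the sunroof", fake_local_asr, cloud_available=False))
print(handle(b"What is the weather tomorrow", fake_local_asr, cloud_available=False))
```

In a tunnel, the sunroof command still works, while the weather question degrades gracefully instead of the whole assistant going silent.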

In summary, all four improvements serve one goal: lowering the barrier to using voice control.

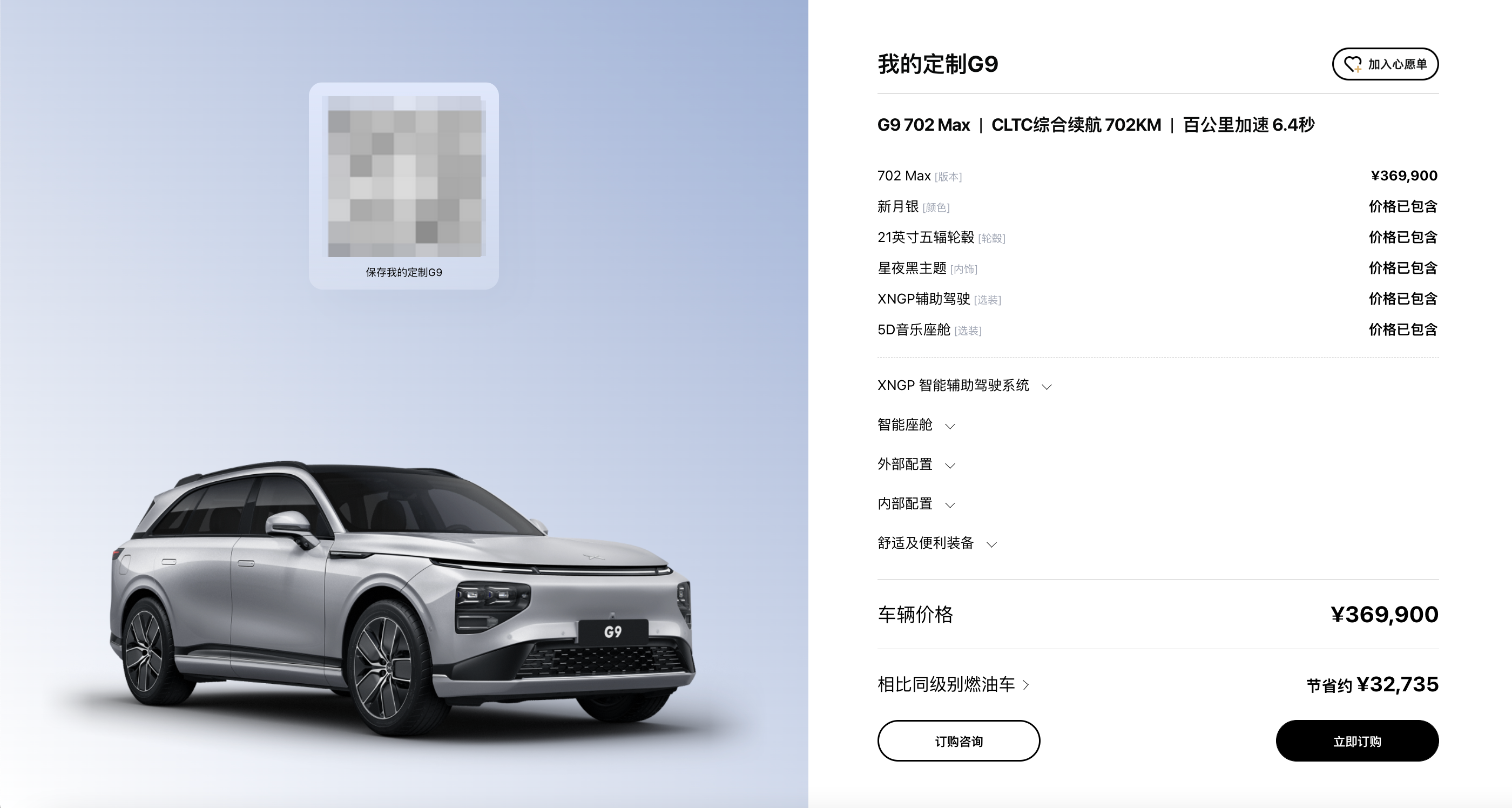

Conclusion

When I saw XPeng Motors’ XNGP plan at the 1024 event, I tweeted: “The G9 Max will get City NGP in the first half of 2023, with Shanghai among the supported cities, and I’m seriously considering buying one because of this.”

Then a colleague replied in the comments: “The G9’s intelligence is very attractive, but the G9 itself isn’t.”

On reflection, the G9 is not unattractive, but its intelligence is more attractive than the car itself.

This year’s 1024 event was undoubtedly the most hardcore in XPeng’s history, and XPeng’s technical capabilities have reached a new high.

But compared with previous years, this has also been XPeng’s most complicated year: the G9 launch was delayed, SKUs were adjusted after launch, and the company restructured its organization. Each of these events shows XPeng working through one problem after another.

From a pessimistic point of view, XPeng does have many problems right now; from an optimistic point of view, XPeng is getting better as it solves them one by one.

At this stage, the hardware cost and R&D spending behind assisted driving inevitably require an expensive car to carry them.

Judging by its configuration, the G9 fully justifies its price and can even be considered good value. But once the price exceeds 300,000 yuan, brand positioning is something consumers cannot ignore.

Speaking as a former P7 owner, I think XPeng must elevate its brand image through service and design in the future.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.