Introduction

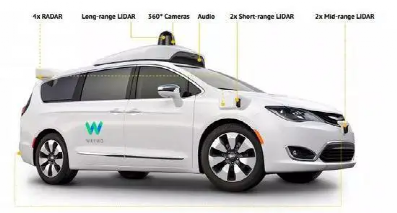

Autonomous vehicles look noticeably different from ordinary vehicles. Sensors of various sizes sit on the roof and cameras cover the body, so a single glance shows how many different sensors it takes to achieve autonomous driving. What role does each of these sensors play, and what are their characteristics? Beyond the visible sensors, how else do autonomous vehicles differ from ordinary ones? This article takes readers behind the scenes of the hardware stack currently used in autonomous vehicles.

The Backbone of Autonomous Vehicles – Line-Control Chassis Technology

Even the chassis, the most basic part of a vehicle, is fundamentally different in an autonomous vehicle from that of a traditional car. Because the chassis is the platform that carries out intelligent driving, autonomous vehicles use line-control chassis technology, and future high-level autonomous driving will be built on it. In other words, the traditional, cumbersome "metallic beasts" of mechanical linkages will be replaced by sensors, control units, and electromagnetic actuators driven by electrical signals.

Line-control technology, also called drive-by-wire or x-by-wire, replaces the "hard" mechanical connections of traditional vehicles with electric wires that deliver control commands as electrical signals. A line-controlled chassis consists of five systems: steering, braking, shifting, throttle, and suspension. By eliminating some of the cumbersome and less precise pneumatic, hydraulic, and mechanical connections and replacing them with sensors, control units, and electromagnetic actuators driven by electrical signals, the line-control system offers a compact structure, good controllability, and fast response.
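To make the signal path concrete, here is a minimal Python sketch of a by-wire steering loop: a sensor reports the road-wheel angle, a control unit compares it with the commanded angle, and an electric actuator moves the wheels, with no steering column in between. The class name `SteerByWire`, the proportional gain, and the actuator rate limit are hypothetical values chosen for illustration, not parameters of any real system; production by-wire controllers add redundancy and fault handling that this toy omits.

```python
from dataclasses import dataclass


@dataclass
class SteerByWire:
    """Toy steer-by-wire loop: angle sensor -> control unit -> electric actuator.

    All numbers are illustrative assumptions, not values from a real vehicle.
    """
    kp: float = 4.0               # proportional gain of the control unit (assumed)
    max_rate_deg_s: float = 30.0  # slew-rate limit of the electric actuator (assumed)
    angle_deg: float = 0.0        # road-wheel angle as reported by the angle sensor

    def step(self, target_deg: float, dt: float) -> float:
        # Control unit: compare the commanded angle (an electrical signal) with the sensor reading.
        error = target_deg - self.angle_deg
        # Proportional rate command, clamped to what the actuator can deliver.
        rate = max(-self.max_rate_deg_s, min(self.max_rate_deg_s, self.kp * error))
        # Electric actuator: move the wheels; no mechanical steering column is involved.
        self.angle_deg += rate * dt
        return self.angle_deg


# Command a 10-degree turn and run the loop at 20 Hz for 5 seconds.
sbw = SteerByWire()
for _ in range(100):
    sbw.step(target_deg=10.0, dt=0.05)
print(f"wheel angle after 5 s: {sbw.angle_deg:.3f} deg")
```

The rate clamp stands in for the physical limits of the actuator; because every step is computed in software, such limits and the gain can be tuned electronically, which is one reason by-wire systems are described as highly controllable.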

In the future, as the "three-electric" technologies of electric vehicles (battery, motor, and electronic control) mature, charging becomes significantly more convenient, and safety and reliability issues are largely resolved, line-control chassis technology will also mature and lead a revolution in the automotive industry.

The Eyes of Autonomous Vehicles – Sensors

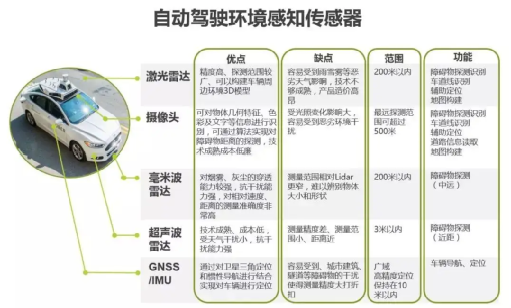

When it comes to the hardware of autonomous driving, the most important part is the set of sensors that act as the vehicle's eyes, perceiving the outside world. The information these sensors collect lets algorithms understand the surrounding environment and situation, which directly determines the stability and safety of the autonomous driving system.

Autonomous vehicles currently carry several types of sensors, including LiDAR, mmWave radar, cameras, and ultrasonic radar, each with distinct characteristics and suitable scenarios. Cameras are the most widely used perception hardware because their technology is mature and their cost is low; vehicle cameras serve as the main visual sensor of ADAS (advanced driver-assistance systems) and are among the most mature onboard sensors. However, like human eyes, cameras passively receive visible light, so they perform poorly in backlit or complex lighting and are vulnerable to adverse weather.

mmWave radar is the least affected by weather and has the best all-weather performance. Like LiDAR, mmWave radar detects objects with electromagnetic waves; the frequency bands commonly used in vehicles are 24 GHz, 77 GHz, and 79 GHz, corresponding to short-, long-, and medium-range radar, respectively. Its long wavelength gives it good obstacle detection with little sensitivity to weather, but that same long wavelength significantly reduces its detection accuracy.
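The trade-off comes from the free-space relation λ = c / f between frequency and wavelength. The short Python sketch computes the wavelength of each radar band named above and compares it with a 905 nm laser; 905 nm is assumed here only as a common LiDAR wavelength for comparison, not a figure from the report.

```python
# Free-space wavelength: lambda = c / f
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_mm(freq_hz: float) -> float:
    """Return the free-space wavelength in millimetres for a frequency in hertz."""
    return SPEED_OF_LIGHT_M_S / freq_hz * 1e3


radar_bands_ghz = [24, 77, 79]  # automotive radar bands named in the text
lidar_wavelength_nm = 905       # a common LiDAR wavelength, assumed for comparison

for f_ghz in radar_bands_ghz:
    lam_mm = wavelength_mm(f_ghz * 1e9)
    ratio = lam_mm * 1e6 / lidar_wavelength_nm  # convert mm to nm, then compare
    print(f"{f_ghz} GHz radar: {lam_mm:.2f} mm, about {ratio:,.0f}x a {lidar_wavelength_nm} nm laser")
```

Radar wavelengths of a few millimetres are thousands of times longer than a near-infrared laser's, which is consistent with the text: the longer waves are less disturbed by weather but resolve fine detail less precisely.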

LiDAR offers the best accuracy and meets the requirements of L3-L5 autonomous driving. It uses a laser as its carrier wave, whose wavelength is far shorter than that of mmWave radar, giving it higher detection accuracy and resolution. By collecting the returns of laser beams fired in different directions, LiDAR can also build a 3D "point cloud" image of obstacles. Because of its technical difficulty and high cost, LiDAR has not yet been deployed at scale, but the LiDAR industry may take off as the supply chain matures and costs fall.
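How individual laser returns become a point cloud can be sketched with time-of-flight ranging: the distance is r = c·t / 2 (the pulse travels out and back), and the beam's azimuth and elevation place that distance in 3D. The Python sketch assumes a simple sensor frame (x forward, y left, z up) and uses hypothetical sample returns; real LiDAR drivers also apply calibration, motion compensation, and intensity data that are omitted here.

```python
import math
from typing import List, Tuple

SPEED_OF_LIGHT_M_S = 299_792_458.0

Point = Tuple[float, float, float]


def return_to_point(tof_s: float, azimuth_deg: float, elevation_deg: float) -> Point:
    """Convert one laser return (round-trip time, beam direction) to (x, y, z) in metres."""
    r = SPEED_OF_LIGHT_M_S * tof_s / 2.0  # round trip, so halve the path length
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = r * math.cos(el) * math.cos(az)   # forward (assumed frame)
    y = r * math.cos(el) * math.sin(az)   # left (assumed frame)
    z = r * math.sin(el)                  # up (assumed frame)
    return (x, y, z)


def build_point_cloud(returns: List[Tuple[float, float, float]]) -> List[Point]:
    """Turn (tof_s, azimuth_deg, elevation_deg) returns into a list of 3D points."""
    return [return_to_point(t, az, el) for t, az, el in returns]


# Hypothetical returns from three beams pointing in different directions.
sample_returns = [
    (200e-9, 0.0, 0.0),     # straight ahead, about 30 m away
    (100e-9, 45.0, 0.0),    # front-left, about 15 m away
    (50e-9, -30.0, -10.0),  # front-right and slightly downward
]
for x, y, z in build_point_cloud(sample_returns):
    print(f"x={x:6.2f} m  y={y:6.2f} m  z={z:6.2f} m")
```

A spinning or scanning LiDAR repeats this conversion for many beams per frame, which is how the dense 3D image of obstacles described above is assembled.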

Because of the differing characteristics and costs of these sensors, most mass-produced models available today are equipped only with onboard cameras. How autonomous vehicles should select and lay out their sensors is still being settled over time. Nevertheless, demand for sensors will rise, and as sensor accuracy improves and costs fall, autonomous driving technology will undergo a qualitative change.

The Brain of Autonomous Driving – Computing Platforms and Chips

The Development Trend of Automotive Architecture and Chips in the Era of Autonomous Driving

In the era of autonomous driving, both automotive architecture and control chips will trend strongly toward centralization. Specifically, vehicle architecture will shift from a distributed E/E (electrical/electronic) architecture to a central computing architecture, and control chips will move from single CPUs to SoCs (systems on chip) that include AI modules.

Controllers must analyze and process a large number of complex signals, and intelligent vehicle systems must handle vast amounts of unstructured data such as images and video. The original distributed architecture, in which each function is handled by its own ECU (electronic control unit), and architectures that assign a single domain controller to each functional module cannot meet these future needs. Central computing architecture will therefore become the main direction of development.

Autonomous driving SoCs need high computing power and low latency, and they have significant growth potential. A central computing architecture can control the entire vehicle with a single computer, which makes OTA (over-the-air) software upgrades more convenient.

Automotive data processing chips fall into two main types: the MCU (microcontroller unit, a chip-level device) and the SoC (system on chip, a system-level device). An MCU has a simple structure with a single CPU processing unit (CPU + memory + interfaces): the CPU's frequency and specifications are scaled down, and the memory, interfaces, and other components are integrated onto one chip. MCUs are mainly used in ECUs to compute control instructions.

An SoC integrates multiple processing units (CPU + GPU + DSP + NPU + memory + interfaces) on one chip. Because future vehicle intelligence demands powerful algorithms and computing power, SoCs are set for substantial growth, and this demand is driving automotive chips rapidly toward more powerful SoCs.

At present, the autonomous driving chip market is dominated by foreign companies. Intel's Mobileye was the first company to mass-produce autonomous driving chips for use in vehicles. Nvidia later launched higher-performance autonomous driving chips. Tesla, as a vehicle manufacturer, also quickly developed its own autonomous driving chips for its electric cars. In China, Horizon Robotics' chips are currently the only domestic products in mass production and use on cars, and Huawei, Black Sesame, and other companies are in the domestic first tier thanks to their outstanding chip performance. In addition, companies such as Deep Glint and Xilinx have also joined the competition in China's autonomous driving chip industry.

In the future, progress in autonomous driving chips will directly determine the size of the market and the success or failure of the companies competing in it.

The analysis above has covered the main hardware components currently found on autonomous vehicles. These components are crucial to the future development of autonomous driving technology, and their functionality, safety, and stability will directly affect the size of the autonomous driving market and its public acceptance. As hardware technology matures and costs fall, it will also enable autonomous driving software to perform more complex and safer driving maneuvers, and it will set the upper limit of future autonomous driving development.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.