| Author | Zhu Shiyun |
|---|---|
| Editor | Wang Lingfang |

Only by comparing on the same dimensions can we see what makes Tesla so “formidable.”

At this AI Day, Tesla announced that 160,000 vehicles in the United States are now taking part in the FSD Beta test. With a human driver supervising, the Beta can drive “fully automatically” from one parking lot to another by following navigation.

This is similar to the highway and urban navigation-assisted driving functions recently offered by manufacturers such as XPeng, Maodou, and Huawei.

However, it is worth noting that FSD Beta delivers these functions on 144 TOPS of computing power, and that Tesla recently announced it is removing ultrasonic sensors from new vehicles, committing fully to the pure-vision route at the hardware level.

This means that hardware costs will be further reduced, and data structures will be further unified.

Previously, some investors estimated that the overall price of FSD was around 1400 US dollars, and the cost would surely decrease after the cancellation of radar.

In comparison, an Nvidia Orin chip with 254 TOPS costs about 300 US dollars per unit, and a RoboSense M1 LiDAR costs about 500 US dollars per unit.

Even excluding cameras, two Orin chips and two LiDARs alone already cost more than the entire FSD HW3.0 solution.
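The arithmetic behind this comparison can be checked with a short sketch. The prices are the estimates quoted above, not official list prices, and the names `compute_and_lidar_cost`, `ORIN_UNIT`, and `LIDAR_UNIT` are illustrative.

```python
# Figures quoted in the article (estimates, in US dollars).
FSD_HW3_TOTAL = 1400   # investor estimate for the overall FSD solution
ORIN_UNIT = 300        # one Nvidia Orin chip (254 TOPS)
LIDAR_UNIT = 500       # one M1 LiDAR


def compute_and_lidar_cost(n_orin: int, n_lidar: int) -> int:
    """Cost of compute chips plus LiDARs only; cameras are excluded."""
    return n_orin * ORIN_UNIT + n_lidar * LIDAR_UNIT


rival_cost = compute_and_lidar_cost(n_orin=2, n_lidar=2)
print(rival_cost, rival_cost - FSD_HW3_TOTAL)  # 1600 200
```

On these figures the two-Orin, two-LiDAR configuration comes out about 200 US dollars above the estimated FSD total before a single camera is added.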

Different roads lead to the same goal. Without taking the route of large computing power and heavy perception hardware, how does Tesla achieve “fully automatic driving capability” in urban scenes with only 144 TOPS?

When will autonomous driving be realized?

“FSD has entered the deep waters of autonomous driving. This AI Day showed solutions to some very niche and tedious problems. In my opinion, FSD has taken another big step forward,” said Luo Heng. He named three quantitative measures for the deployment of advanced autonomous driving functions: the area the function covers, the number of vehicles equipped with it, and the share of each journey driven by the machine.

Luo Heng said that if the number of vehicles in Tesla’s FSD Beta test in the United States and Canada reaches 500,000 in the future, and people use it frequently, Tesla will be very close to delivering advanced autonomous driving functions.

As for China, Guo Jishun said that advanced intelligent driving can be considered close to deployment when two conditions hold: L2+ vehicles (capable of automatic lane changes, overtaking, and entering and exiting ramps on highways and urban roads) account for more than 10% of personal driving time, and L4 autonomous taxis operate commercially across districts of first- and second-tier cities and become an everyday means of transportation.

From a timeline perspective, Luo Heng believes Tesla may reach a scale of 500,000 vehicles in the FSD test in North America next year; Guo Jishun believes that in China, advanced intelligent driving functions may enter mass production by 2024 or 2025.

“In the deep waters of autonomous driving, it is necessary to find a balance point in terms of safety, stability, advancement, and cost, and achieve the maximization of technology and cost benefits. In this respect, Tesla is still the best autonomous driving company in the world in terms of technology implementation and functional feasibility,” said Guo Jishun.

Is huge computing power necessary?

Beyond the headline figures on deployed vehicles and regions, Tesla used this AI Day to show how it keeps its computing power requirements in check.

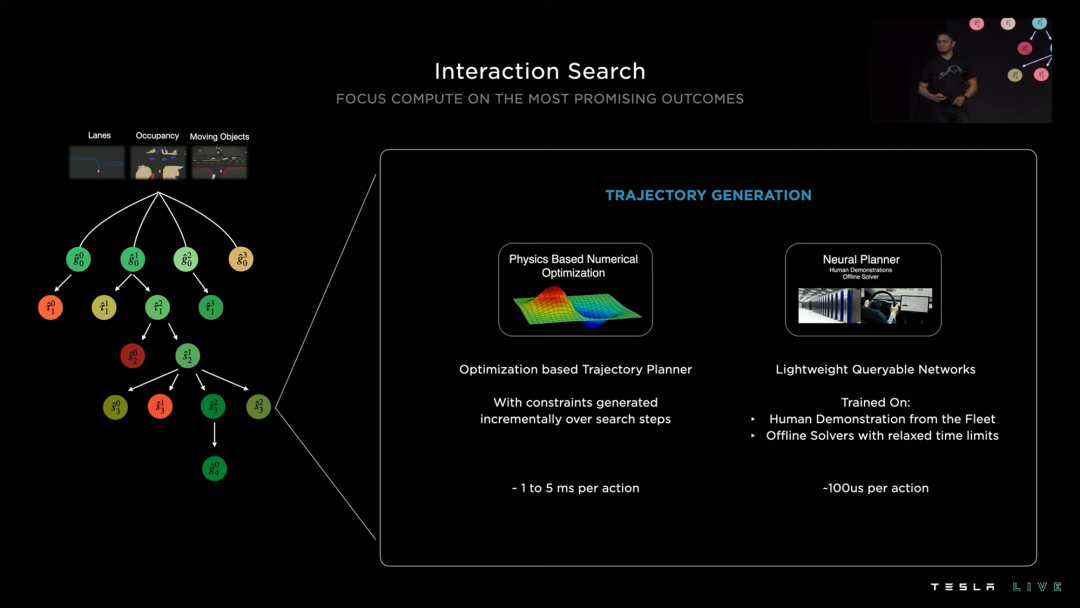

At AI Day, Tesla presented three possible decisions in an unprotected left-turn scenario involving more than 20 interacting objects and more than 100 possible interaction combinations.

Using traditional search methods, evaluating each possibility takes about 10 milliseconds, and the final decision takes about 50 milliseconds.

Tesla shortened the decision time from the usual 1-5 milliseconds to within 100 microseconds by introducing neural networks at the planning level.

“The traditional method in the planning field is iterative optimization with repeated solving. Its problems include non-deterministic results and either heavy computing power demands per unit of time or long calculation times when computing power is limited, as it is in a vehicle. That makes it hard to handle highly complex urban intersections that demand fast decisions,” said Tesla.

“On the other hand, neural networks will give a highly deterministic result. By offline training of the full model in the cloud, the trained lightweight network can be deployed to the vehicle for a very efficient planning result,” added Tesla.

If we compare Tesla’s FSD Beta with Waymo abroad, we find that FSD’s decision-making is very aggressive and decisive, while Waymo is much more conservative: it prefers right turns to left turns and would rather take a detour.

“It is precisely because FSD’s planning is more efficient that it can search a larger space and make more global decisions quickly,” said Tesla.

“Overall, Tesla moves a large amount of complex iterative calculation offline and trains fast, deterministic, low-latency neural networks to do the processing online. This combination enables Tesla to achieve very high efficiency,” said Luo Heng. “According to Tesla’s figures, a traditional action takes about 1-5 milliseconds, while for neural networks it is a fixed 0.1 milliseconds, a 10- to 50-fold increase in performance.”
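The speed-up quoted here follows directly from the per-action timings. The sketch below works it through, assuming exactly 100 candidate actions evaluated one after another (the article says “over 100”); the function names are illustrative.

```python
# Per-action planning latency in microseconds, as quoted in the article.
TRADITIONAL_US = (1_000, 5_000)  # 1-5 ms for iterative optimization
NEURAL_US = 100                  # 100 microseconds for the lightweight network
N_CANDIDATES = 100               # assumption: "over 100 possibilities"


def speedup(traditional_us: int, neural_us: int = NEURAL_US) -> float:
    """How many times faster the neural planner is per action."""
    return traditional_us / neural_us


def total_ms(n_candidates: int, per_action_us: int) -> float:
    """Total time in milliseconds to score every candidate sequentially."""
    return n_candidates * per_action_us / 1_000


speedups = [speedup(t) for t in TRADITIONAL_US]
traditional_total = [total_ms(N_CANDIDATES, t) for t in TRADITIONAL_US]
neural_total = total_ms(N_CANDIDATES, NEURAL_US)

print(speedups)           # [10.0, 50.0]
print(traditional_total)  # [100.0, 500.0]
print(neural_total)       # 10.0
```

Under these assumptions, scoring 100 candidates drops from 100-500 milliseconds to about 10 milliseconds, which is what leaves room to search a larger space within the same time budget.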

One of the reasons Tesla can achieve city-level autonomous driving capabilities with only 144 TOPS of computing power is that it deploys efficient, lightweight neural networks for planning.

Luo Heng mentioned that when discussing the FSD chip earlier, Tesla emphasized FPS (frame rate – the speed of recognizing images in a unit of time) performance over computational power. Moreover, based on FSD beta version update information, Tesla continues to add datasets and improve performance, “indicating that computational power is still sufficient for Tesla.”

In fact, achieving a balance between algorithm and computational power with regard to effectiveness and cost will be one of the core challenges for major companies to implement urban autonomous driving capabilities.

Guo Jishun said that “using large computational power domestically is primarily for safety assurance. We first use large computational power to ensure safety and then continue to optimize to reduce costs to ensure that the system can be extensively used.”

Under this premise, the computing power required for L2+ autonomous driving in the industry keeps rising, even approaching 1000 TOPS, because perception hardware is growing in both number and performance and because more scenarios need to be handled.

Guo Jishun stated that “the most difficult part of moving from highways to city roads lies in perception and prediction: more objects have to be classified, complexity rises, and the number of models and the degree of parallelism must increase accordingly. This is why computing power requirements are increasing, but it doesn’t mean that infinite computing power is needed.”

“The reason Tesla can achieve city navigation capabilities with only 144 TOPS of computing power is the high efficiency of its software and hardware coordination. It also shows that algorithm engineers should use computing power in a leaner, more intensive way to bring advanced intelligent driving into deployment as soon as possible,” said Guo Jishun.

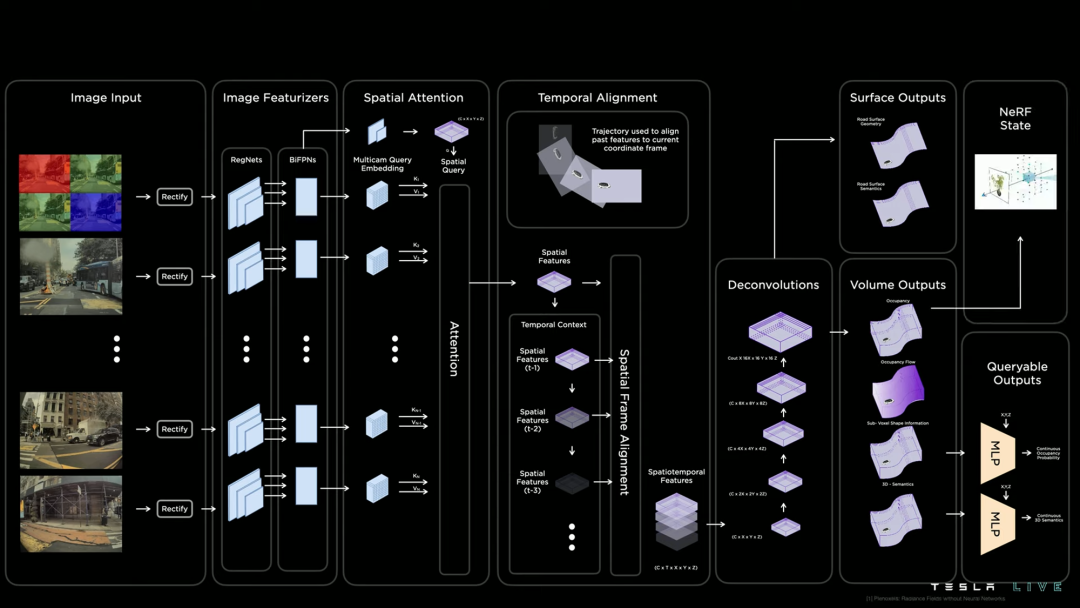

In addition to saving computing power by applying neural networks in planning, Tesla has also introduced new neural network models in perception to achieve an effect similar to LiDAR.

One highlight of this year’s AI DAY was the Occupancy Network, which describes the three-dimensional world through vector data.

“Tesla hopes to use the Occupancy Network to determine objects’ position and their speed in three-dimensional space without defining what they exactly are,” Luo Heng said, describing the role of the Occupancy Network.

In terms of effect, this use of neural networks is very similar to using LiDAR, which can directly provide three-dimensional obstacle and motion change information.

However, it is worth noting that Tesla’s use of neural networks is based on visual signals, which are very rich in content and can achieve high precision. In contrast, lidar point clouds are always sparse and discontinuous compared to visual signals, which makes it impossible to accurately describe the edges of objects.

The sensitivity of neural networks to three-dimensional information and speed changes has also become the basis for Tesla’s complete elimination of lidar.

In fact, Luo Heng believes that Tesla is gradually replacing its BEV architecture, which was officially announced last year, with neural networks.

“Will the dynamic object network in their current architecture be replaced by neural networks in the future? I am not sure, but I feel that this trend may also exist.”

BEV produces a top-down view, divides the plane into a grid of cells, and marks the height and object attributes within each cell, which yields the drivable area.

The BEV model solves the problem of fusing multiple cameras at an early stage, without having to run confidence voting across cameras at different angles in a late-fusion stage.

At present, multi-sensor solutions in China also use BEV models in their perception architectures to perform mid- or late-stage fusion of LiDAR data.

“In deep learning, we have been expanding the cognitive boundaries of the neural network by annotating more data, training more models, and enabling the vehicle to recognize and track more events and classify them. This is how BEV expands our cognitive boundaries.” Guo Jishun said.

On the other hand, because the model must first understand what an object is before judging whether it is an obstacle, the BEV model cannot handle every road condition: the real world cannot be exhaustively annotated.

For example, how can it identify shredded tire debris on the highway?

It is difficult to annotate every piece of tire debris and train the model on it through traditional annotation methods.

In fact, cases where obstacles are misrecognized or not recognized at all are behind traffic accidents involving multiple companies, including Tesla.

In addition, BEV uses two sets of networks, static and dynamic, to distinguish static objects from dynamic ones. However, in the real world, any static object may become a dynamic object, such as a roadside barrier or stone pillar that has been hit. When that happens, it is difficult to reconcile the static and dynamic networks.

Guo Jishun said: “The occupancy grid has solved another problem with BEV, narrowing the boundaries of what we know we don’t know.”

The occupancy grid allows a vehicle to judge whether an obstacle affects traffic without knowing what is in front of it. “In my opinion, this is a huge step. The occupancy grid will definitely be one of the most closely watched algorithms in the field in the coming year.”
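A minimal sketch of this idea, assuming a hypothetical sparse voxel grid keyed by integer cell indices: the `Voxel` type, the `path_blocked` helper, and the grid layout are illustrative and are not Tesla’s implementation. The point is that the planner asks only whether cells along its path are occupied, never what occupies them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Voxel:
    """One grid cell: occupancy and velocity only, with no class label."""
    occupied: bool
    velocity: tuple[float, float, float] = (0.0, 0.0, 0.0)  # m/s


# Hypothetical sparse grid: (x, y, z) cell index -> Voxel.
Grid = dict[tuple[int, int, int], Voxel]


def path_blocked(grid: Grid, path_xy: list[tuple[int, int]], max_z: int = 3) -> bool:
    """Return True if any cell along the path is occupied below max_z."""
    for x, y in path_xy:
        for z in range(max_z):
            voxel = grid.get((x, y, z))
            if voxel is not None and voxel.occupied:
                return True
    return False


# An unlabeled object (for example, tire debris) sits in cell (5, 0, 0).
grid: Grid = {(5, 0, 0): Voxel(occupied=True)}
straight = [(x, 0) for x in range(10)]  # path through the debris
shifted = [(x, 1) for x in range(10)]   # path one cell to the side

print(path_blocked(grid, straight), path_blocked(grid, shifted))  # True False
```

Because no class label is consulted, an object that was never annotated still blocks the path, which is the gap in BEV that the article describes.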

So, can multi-sensor fusion solutions also drop LiDAR by using the occupancy grid?

Guo Jishun said that, if one is to be responsible for the system and the product, LiDAR still offers higher confidence and provides more usable information in all conditions, including insufficient light. “Rather than waiting for the machine vision algorithm to continuously improve, it’s better to rely on the Moore’s law of LiDAR. But we also hope that Tesla can really expand the technological boundaries of humans in the end.”

Interestingly, Li Xiang, the founder of Li Auto, mentioned in a reply on social media that LiDAR is an occupancy grid.

However, from a technical point of view, the current mainstream LiDAR output frequency is 10 Hz, while the camera runs at 36 Hz. This means that at highway speeds, even before fusion and alignment are taken into account, a LiDAR “sees” only about once every 3 meters, which increases the chance of a missed detection compared with a camera-based occupancy grid.
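The “3 meters” figure is the distance the vehicle covers between two consecutive sensor frames. A quick check, assuming a speed of 108 km/h (30 m/s), which is an illustrative value chosen here and not stated in the article:

```python
def metres_per_frame(speed_kmh: float, rate_hz: float) -> float:
    """Distance travelled between two consecutive sensor frames."""
    speed_ms = speed_kmh * 1000 / 3600  # km/h -> m/s
    return speed_ms / rate_hz


SPEED_KMH = 108  # assumed highway speed (30 m/s)

lidar_gap = metres_per_frame(SPEED_KMH, 10)   # 10 Hz LiDAR
camera_gap = metres_per_frame(SPEED_KMH, 36)  # 36 Hz camera

print(round(lidar_gap, 2), round(camera_gap, 2))  # 3.0 0.83
```

At that speed the LiDAR samples the scene every 3 meters, while the camera samples it roughly every 0.83 meters.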

However, there is still a real problem of larger errors at longer distances with cameras. Although it can be improved in the future with the continuous improvement of camera accuracy, LiDAR still has considerable advantages in scenes beyond the visual range.

Is a high-precision map necessary?

Although the occupancy grid cannot completely replace LiDAR at present, the lane network lets FSD do without high-precision maps entirely.

The release notes of FSD Beta v10.69.2.3 state: “Added a new ‘Deep Lane Guidance’ module to the vector lane neural network that fuses features extracted from the video stream with rough map data (i.e., number of lanes and connectivity) together. Compared with the previous model, this architecture reduces the error rate on lane topology by 44%, achieving smoother control before the lanes and their connections become obvious.”

In short, after this version, the likelihood of FSD Beta choosing the wrong lane on complex road sections fell by 44%.

The data is consistent with test results. Last year, many Beta users complained about incorrect lane changes. With the introduction of the lane network, such complaints have dropped significantly, and the lane change experience has clearly improved.

Deciding which lane to take on complex roads is difficult, even for human drivers. With high-precision maps, autonomous vehicles can position themselves and the road environment to centimeter-level accuracy, easily answering the question “where am I and how do I go,” which is why such maps often serve as the main information source for highway navigation assistance.

However, due to operational costs and map freshness, high-precision maps have difficulty meeting commercial production needs when entering complex and changing urban scenes.

Due to coverage area, freshness, and cost issues, FSD has never included high-precision maps in its system solution. Previously, it used a simple pixel annotation (depiction) of lane lines to solve the problem.

However, in the city it becomes very difficult to depict complex, intertwined roads with pixels. This led to the lane network, which borrows concepts from natural language models.

Luo Heng said: Tesla has introduced a new data annotation method, which annotates lane lines as a series of points. Each point has its own clear semantics, such as “start, merge, fork, end”, etc. Thus, the lane lines are labeled as a sentence (“start turn, merge and continue, then end”), instead of just semantic segmentation on the image. This forms a complete connectivity graph of lane lines.

As a result, the vehicle has a clear understanding of its own lane and the relationship with the “visible” road network, which is convenient for changing lanes.
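The annotation scheme Luo Heng describes can be sketched as follows. This is an illustration under stated assumptions: the token format `(point_id, semantic, parent_ids)` and the helpers `build_graph` and `reachable` are hypothetical, chosen only to show how a “sentence” of semantically tagged points becomes a connectivity graph.

```python
from collections import defaultdict

# Hypothetical lane "sentence": each token is (point_id, semantic, parent_ids).
lane_sentence = [
    ("p0", "start", []),
    ("p1", "fork", ["p0"]),
    ("p2", "continue", ["p1"]),   # left branch
    ("p3", "continue", ["p1"]),   # right branch
    ("p4", "merge", ["p2", "p3"]),
    ("p5", "end", ["p4"]),
]


def build_graph(tokens):
    """Turn the token sequence into an adjacency list of lane points."""
    graph = defaultdict(list)
    for point_id, _semantic, parents in tokens:
        graph[point_id]  # make sure every point appears as a node
        for parent in parents:
            graph[parent].append(point_id)
    return dict(graph)


def reachable(graph, src, dst):
    """Depth-first search: can the vehicle get from src to dst?"""
    stack, seen = [src], set()
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return False


graph = build_graph(lane_sentence)
print(graph["p1"])                     # ['p2', 'p3']
print(reachable(graph, "p0", "p5"))    # True
print(reachable(graph, "p2", "p3"))    # False
```

Pixel-level segmentation alone cannot answer a question like “does this lane lead to that exit,” whereas a graph of this kind answers it with a simple traversal.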

It is worth noting that with the addition of the lane network, FSD can form a five-dimensional vector space: three spatial dimensions plus time plus semantics. Through its cloud-based data training system, the large volume of rich vector space data generated by vehicles can be collected into a crowd-sourced ADAS map.

From Tesla’s presentation, the freshness and detail of the map are both very high.

Let silicon handle the data training

“The core of deep learning lies in the data, and how the data is annotated defines the type of algorithm model,” Luo Heng said. Tesla’s progress in automatic annotation has exceeded expectations.

In fact, in the first half of 2022, Musk also stated that he would further expand the labeling team, but began to cut staff a few months later. “I think that laying off people is also simple. I found that we don’t need to draw so many boxes.”

When training neural networks based on two-dimensional image data, manual annotation of image regions, including 3D data, attributes, and even temporal alignment, was previously required. “It is a huge contribution of our carbon-based life, but it is very difficult to use when mapping it to the 3D space,” Luo Heng said.

In 2018, Tesla, along with a considerable share of data annotation work in China, used this method.

By 2019, Tesla began tagging in 3D space, and some domestic enterprises have also introduced automated computational annotation for 3D reconstruction and mapping. In 2020, by using the BEV framework, a bird’s-eye view can be automatically output, and humans primarily perform some alignment work. By 2022, automatic data annotation systems can output completely reconstructed mapped scenes, significantly reducing the need for manpower.

In terms of efficiency, Tesla’s automatic annotation system can now complete the workload of 5 million hours of human annotation in 12 hours.
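To put that claim in perspective, the ratio of the two figures can be worked out directly. The numbers are the ones quoted above; the variable names are illustrative.

```python
HUMAN_HOURS = 5_000_000  # manual annotation workload quoted in the article
MACHINE_HOURS = 12       # wall-clock time claimed for the automatic system

# Hours of human labeling replaced per hour of machine time, which is also
# the number of annotators needed to finish the same work in 12 hours.
speedup = HUMAN_HOURS / MACHINE_HOURS

print(round(speedup))  # 416667
```

In other words, matching the system by hand within the same 12 hours would take roughly 417,000 annotators working in parallel.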

Moreover, building on efficient automatic annotation of the real world, Tesla can construct highly realistic, video-game-like simulation environments that help its visual perception system keep converging on rare long-tail scenes. Through the data engine, data can also be re-annotated for “corrective training” of the model without rewriting the model.

“In past practice, I was pessimistic about automatic annotation, virtual simulation, and other automation methods, because their accuracy and precision could not meet expectations and hardware-in-the-loop methods were still needed to complete the annotation,” Guo Jishun stated.

“For the industry, the more vehicles equipped with autonomous driving systems are deployed, the more useful structured (annotated) data becomes available. The more accurate that structured data is, the greater the advantage for algorithm evolution.” “However, this rests on two premises: the first is the accuracy of automatic labeling, and the second is the efficiency of automatic labeling. Based on Tesla’s claims, its automatic labeling accuracy and efficiency are very high, which is well worth learning from,” said Guo Jishun.

How to catch up with Tesla?

“(There is still some gap between China and Tesla in the field of autonomous driving), but it is not necessarily impossible to catch up,” said Guo Jishun.

First, from a technical perspective, LiDAR should still be treated as a very important supplementary sensor, since it reduces the amount of data that has to be accumulated on the vision side. Relying on LiDAR also makes it possible to scale up deployment.

Second, the scale advantage of the Chinese market can be used to accelerate the scaling of vehicles on a common (data training) infrastructure, and with it the scaling of data.

Third, we should also learn from Tesla’s approach to technical breakthroughs. In pushing autonomous driving to an unprecedented scale, Tesla has discovered many new, real problems and achieved technological breakthroughs in the process of solving them.

While promoting scale, we should also learn from Tesla’s cautious promotion plans, such as selecting drivers for testing.

“Learn from the wise. Autonomous driving is still in the early stages of the industry, and everyone is working together to achieve this grand goal. If someone runs faster, we can accelerate the process of practical implementation by learning from them,” said Luo Heng.

“Now it’s just that the mass production of autonomous driving has reached a critical juncture, but the industry is still in its early stages, and we have many opportunities,” said Guo Jishun.

–END–

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.