The puzzling EVA co-branded edition of the mech dragon, and the high-profile marketing slogan “don’t talk if less than four”.

Salon Automotive has been questioned ever since its debut at the 2021 Guangzhou Auto Show: some say it is “blindly confident” in its intelligent driving capabilities, while others say its design “complains without reason”.

At this year’s Chengdu Auto Show, I tried once again to understand Salon Automotive and its mech dragon, and this time it went well. After talking with Salon Automotive CEO Wen Fei and Yang Jifeng, Senior Director of the Intelligent Center, many of my doubts were resolved.

What is the purpose of the perception hardware stack?

To be honest, Salon Automotive packs a lot of intelligent driving information into the mech dragon, along with many obscure terms. To make it easier to follow, I will break down Salon’s core technology and layout along the three core stages of intelligent driving: perception, decision-making, and execution.

On the perception level, Salon Automotive was the first in the industry to propose the concept of 5-fold 360-degree perception. The five levels are:

- Level 1: 4 LiDAR sensors
- Level 2: 7 8M HD cameras
- Level 3: 5 millimeter-wave radars
- Level 4: 4 HD surround-view cameras
- Level 5: 12 ultrasonic sensors

In terms of total sensor count, Salon Automotive is indeed among the leaders in the industry. At the same time, this relatively aggressive approach has drawn questions from outside: “Is it really necessary to use LiDAR to cover the full 360-degree perception area?” The higher-end perception hardware solutions we commonly see generally carry one or two LiDAR sensors, usually to enhance forward sensing.
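To make the sensor count concrete, the five levels listed above can be tallied in a few lines. This is only an illustrative sketch: the counts come from the list above, while the `SensorLayer` type, the `modality` grouping, and the function names are assumptions introduced here, not anything Salon has published.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorLayer:
    """One level of the 5-fold perception stack (hypothetical structure)."""
    level: int
    kind: str
    modality: str  # coarse grouping chosen for this example only
    count: int


# Counts as stated in the article's five-level list.
LAYERS = [
    SensorLayer(1, "LiDAR", "lidar", 4),
    SensorLayer(2, "8M HD camera", "camera", 7),
    SensorLayer(3, "millimeter-wave radar", "radar", 5),
    SensorLayer(4, "HD surround-view camera", "camera", 4),
    SensorLayer(5, "ultrasonic sensor", "ultrasonic", 12),
]


def total_sensors(layers):
    """Sum the sensor counts across all levels."""
    return sum(layer.count for layer in layers)


def count_by_modality(layers):
    """Group sensor counts by modality (LiDAR, camera, radar, ultrasonic)."""
    totals = Counter()
    for layer in layers:
        totals[layer.modality] += layer.count
    return dict(totals)


if __name__ == "__main__":
    print(total_sensors(LAYERS))
    print(count_by_modality(LAYERS))
```

Grouped this way, the four LiDARs are a small part of the overall count, which is why the debate centers on their cost and coverage rather than on the raw number of sensors.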

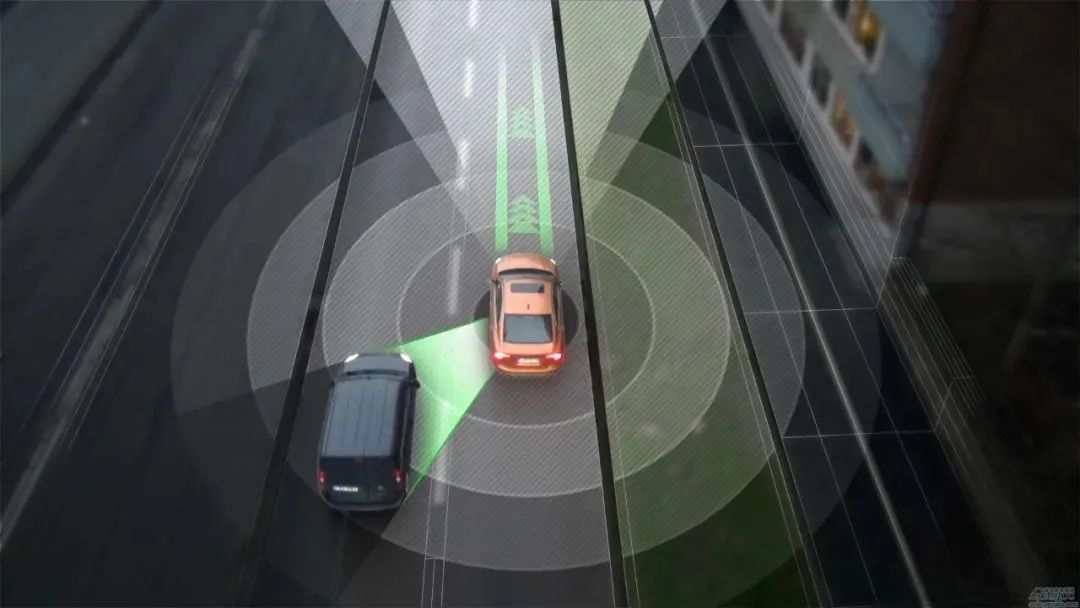

On the surface, Salon Automotive’s perception hardware differs from other carmakers’ in its perception range. In fact, the biggest difference is that Salon uses 96-line LiDAR to build an evenly distributed 360-degree point cloud, while single- or dual-LiDAR schemes mainly use LiDAR to enhance detection within an ROI (a region of interest bounded by a polygon) covering key areas.

We can think of Salon’s approach as “borderless detection” within the covered area, whereas other schemes focus only on key areas in the sensitive forward zone. Compared with the latter, 360-degree “borderless detection” avoids a point cloud that is too dense in the center and sparse at the edges, and it avoids the degradation in point cloud quality caused by stitching fields of view together.

Of course, Salon faces a more pointed question: “Is the rear LiDAR necessary?” At the communication meeting before the Chengdu Auto Show, Yang Jifeng presented two scenarios that use the rear LiDAR. First, when a user reverses with the path-tracking function, the LiDAR-based scheme detects objects better than the traditional scheme based on cameras and millimeter-wave radar. Second, in normal driving, the rear LiDAR gives stronger predictive perception of vehicles approaching from behind.

In addition, Yang Jifeng also put forward another point:

“In the past era of functional cars, we implemented fixed functions by integrating fixed hardware with fixed software, and delivered them as software packages or functional solutions. At this stage, however, an intelligent car is essentially a platform, a technology stack. Designing this technology stack means considering not only long-term perception hardware capabilities, computing platform performance and architecture capabilities, and the EEA’s system architecture capabilities, but also the capability for continuous R&D iteration and continuous OTA delivery on this platform. This is a fundamental difference.”

What we can clearly see is that, before LiDAR was introduced, the path from early L2 ADAS functions to today’s L2+ improved product strength through “function stacking”: for example, adding turn-signal lane changes and highway navigation assistance on top of ACC plus lane keeping. Judging from the current situation, introducing LiDAR does not bring substantial functional improvements.

Take NIO as an example: we thought the introduction of LiDAR would speed up the launch of NOP navigation assistance on city roads. In fact, this function has been delayed again and again, and NIO has simply fed the LiDAR data into the perception system behind its existing functions.

LiDAR cannot make intelligent driving assistance more intelligent. Its job is to serve as a high-precision perception unit that detects unpredictable road conditions more sensitively.

In the era of advanced intelligent driving, the ODD (operational design domain) appears more as a geofence than as a functional boundary. I think this is the core reason Salon has deployed four LiDARs: the stronger hardware gives a higher ceiling at the functional level, aimed not at piling up functions but at L4 intelligent driving.

In other words, on complex urban roads, more high-precision sensors also help guarantee the safety of the intelligent driving system. Of course, the best solution is to reduce the sensor count while still achieving L4, but when the first step has not yet been taken successfully, how can we talk about the best solution?

However, Salon’s solution does involve a tradeoff between time and cost for the end user. More high-precision sensors undeniably raise costs. But can relying on LiDAR bring a significant breakthrough in the driving experience within a normal vehicle ownership cycle? This remains a big question mark.

The cost-performance ratio of perception hardware such as LiDAR is also a concern for users.

“Collaboration with Soul”

Next, let’s talk about the decision-making level, which involves two key elements: the intelligent driving computing platform and the intelligent driving algorithms.

This time, Salon Automotive uses two Huawei MDC610 computing platforms on the mech dragon, providing a total of 400 TOPS of computing power.

When asked whether Salon Automotive has developed its own intelligent driving algorithms, Yang Jifeng replied:

“People tend to oversimplify the complex issue of ‘self-development’. For example, does self-development stand for more flexibility, more advanced capability, and coolness, or is it better to have a mature, reliable partner proven across multiple products? As a new brand defined by intelligence, we need to decide which know-how, and which capabilities and responsibilities, we should keep in our own hands.”

We can analyze whether car companies should develop their own intelligent driving algorithms from two perspectives.

First, readers who have followed the development of intelligent vehicles will notice that most new brands use mature supplier solutions for their first-generation products. What they ultimately deliver to the end user is the complete vehicle, so they hand detailed functions and configurations to specialist companies as much as possible.

Second, current intelligent driving software and hardware suppliers fall into tiers. For example, Bosch, a veteran player, has strong expertise in millimeter-wave radar and basic L2 functions, whereas players like Huawei and Momenta take L2 as the foundation and are committed to breaking through to L4. Of course, there are also many new players on the same path that choose to develop their own algorithms, such as the well-known WeRide.

As a newcomer, Salon has, like many of its predecessors, chosen to use a large number of supplier solutions. However, Yang Jifeng believes that what sets Salon apart is that its collaboration with suppliers has more “soul”.

In Salon Automotive’s intelligent driving solution, we find a term we have never seen before: “triple heterogeneity”. It corresponds to three sets of systems:

- At the bottom layer is the Intelligent Forward Safety System, built on the IFC forward-looking camera and a mature functional platform, which implements functions such as forward collision warning, lane departure warning, and lane keeping assistance.
- In the middle layer is the L2 assisted driving system, based on Bosch’s millimeter-wave radar solution, which implements classic L2 functions such as ACC, lane change assistance, and rear-side door-opening warning.
- At the highest level is the advanced artificial intelligence autonomous driving system, built on Huawei’s hardware and Momenta’s software. The system’s vision is ambitious, aiming for end-to-end intelligent driving in all scenarios.

Rather than completely “selling its soul” on the intelligent driving side, Salon’s triple heterogeneity combines the most stable systems on the market with cutting-edge ones. It offers both the flexibility and high ceiling of self-developed algorithms and the maturity and stability of supplier solutions, and it addresses the weak intelligent driving performance typical of new brands’ first-generation products.

So, does Salon simply stack the three systems together? Not exactly: the three systems also complement and coordinate with one another.

To give an easy example, suppose we are driving north through an intersection and a right-turning vehicle enters from the west. After the perception hardware recognizes the vehicle, it sends signals to both the middle-layer assisted driving system and the top-layer autonomous driving system. The assisted driving system triggers a front cross-traffic warning and informs the autonomous driving system. With both the raw perception signal and the lower layer’s warning in hand, the autonomous driving system makes the final arbitration and sends the final command to the execution layer.

Thanks to its stronger computing power and algorithms, the high-level autonomous driving system has higher decision-making authority. It can process the raw signals quickly, and it also has the lower-layer systems’ judgments as redundancy to ensure safety.
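The intersection example can be sketched as a small arbitration flow. This is a minimal illustration under stated assumptions, not Salon’s actual logic: the type names, the function names, the time-to-conflict input, and both thresholds are hypothetical and chosen only to show how a lower-layer warning can act as a redundant input to the top-layer decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PerceptionSignal:
    """Raw perception output sent to both layers (hypothetical fields)."""
    object_kind: str           # e.g. "vehicle"
    approach_from: str         # e.g. "west"
    time_to_conflict_s: float  # estimated seconds until paths cross


@dataclass(frozen=True)
class LayerWarning:
    """Warning raised by the middle-layer L2 assist system."""
    name: str
    source: str


def l2_assist_layer(signal: PerceptionSignal,
                    warn_below_s: float = 3.0) -> Optional[LayerWarning]:
    """Middle layer: raise a front cross-traffic warning (threshold is assumed)."""
    if signal.time_to_conflict_s < warn_below_s:
        return LayerWarning("front_cross_traffic", "L2 assist")
    return None


def autonomous_layer(signal: PerceptionSignal,
                     warning: Optional[LayerWarning],
                     brake_below_s: float = 1.5) -> str:
    """Top layer: final arbitration over the raw signal plus the lower-layer warning."""
    if signal.time_to_conflict_s < brake_below_s:
        return "brake"       # the top layer's own judgment on the raw signal
    if warning is not None:
        return "slow_down"   # the lower-layer warning serves as a redundant cue
    return "proceed"


def arbitrate(signal: PerceptionSignal) -> str:
    """Fan the signal out to both layers, then return the command for execution."""
    warning = l2_assist_layer(signal)
    return autonomous_layer(signal, warning)


if __name__ == "__main__":
    car = PerceptionSignal("vehicle", "west", time_to_conflict_s=2.0)
    print(arbitrate(car))
```

The point of the sketch is the data flow: the top layer always has the final say, but it never decides without also seeing what the simpler, more mature layer concluded.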

Six Redundancies to Ensure Execution Layer Safety

Finally, let’s talk about the execution layer. When an intelligent driving system is first put into use, the most likely problem at the execution layer is a system failure that prevents it from executing the decision layer’s instructions, resulting in an accident. With system safety in mind, Salon Automotive has designed redundancy at six critical points to keep the system operating normally.

- Communication redundancy: a communication hot swap can be completed under any communication failure, and the drive-by-wire communication links for braking, steering, and power are dual-redundant.
- Perception redundancy: LiDAR, high-definition cameras, millimeter-wave radars, ultrasonic sensors, high-precision maps, and RTK high-precision positioning provide redundant perception.
- Power supply redundancy: supports independent safe control of the intelligent driving system under power failure.
- Steering redundancy: the EPS hardware adopts dual CPUs, dual bridge drives, and a dual-coil motor. If any single circuit fails, it can still provide at least 50% steering assistance.
- Control redundancy: the dual high-performance intelligent driving computing platforms support a hot-swap safety mechanism after a single domain controller fails.
- Brake redundancy: the first domestic IBC+RBC dual-redundant braking system provides two fail-safe modes, mechanical redundancy plus electronic redundancy, and can support advanced autonomous driving.
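As a rough illustration of the control redundancy item, the hot-swap idea for two domain controllers can be sketched as follows. The `Controller` type, the health flag, and `select_active` are hypothetical names used only for this example; how Salon actually detects faults and hands over control is not described in the article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Controller:
    """A domain controller with a simple health flag (hypothetical model)."""
    name: str
    healthy: bool = True


def select_active(primary: Controller, standby: Controller) -> Optional[Controller]:
    """Return the controller that should drive the vehicle.

    The primary is preferred. If it has failed, control is handed to the
    standby. None means both have failed and some other fallback is needed.
    """
    if primary.healthy:
        return primary
    if standby.healthy:
        return standby
    return None


if __name__ == "__main__":
    a = Controller("platform_a")
    b = Controller("platform_b")
    print(select_active(a, b).name)  # primary while healthy
    a.healthy = False                # simulate a single controller failure
    print(select_active(a, b).name)  # standby takes over
```

The same pattern (prefer the primary, fall back to an independent second channel) underlies the other dual-redundant items in the list, such as the dual-circuit steering that keeps at least 50% assistance after a single failure.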

Thoughts on Mech Style

Before the Chengdu Auto Show, a friend visited the Salon experience store at Intime in Chengdu. Afterwards he commented: “Once you enter the showroom, an extremely weird purple-green mech dragon sits in the center, surrounded by large-scale models and anime figures. It’s like walking into a middle-aged man’s youth.”

Indeed, after talking with many people, I found that most do not accept the mech dragon’s appearance, and many are not fond of the EVA co-branding either.

But public opinion does not seem that important to Salon CEO Wen Fei. He told us plainly that the mech dragon is a product designed for a niche group. Its goal is to “carve out a small market that is only 10% of the mass market, and take a 90% share of that small market.”

Salon uses a direct-sales model, and its sales channels match its high-end, niche positioning: they are concentrated in first-tier and quasi-first-tier cities with strong purchasing power.

At present, Salon has signed contracts with 8 stores in 7 cities including Beijing, Hangzhou, and Chengdu. In 2022, Salon plans to build 42 outlets in 14 cities, including 28 exhibition halls and 14 delivery centers. By 2023, it plans to lay out a total of 160 outlets.

From another perspective, the style and design are the core competitiveness of a product, but not the core competitiveness of a car company. The core competitiveness of an enterprise lies more in its technology and operational capabilities.

### Mechadragon: Salon’s bold attempt

Mechadragon is a very bold attempt by Salon Automotive. It may challenge mainstream aesthetics, but we cannot make a hasty judgment before the sales figures are out; after all, niche aesthetics are always hard to pin down. With mature intelligent technology as its core competitiveness, the brand can afford more than one attempt at innovation in style and design.

In conclusion

In this article, we have analyzed the intentions behind Salon’s “astonishing” moves, hoping to help everyone better understand this company.

In the future, we will continue to pay attention to this company, and hope to see them solve the problem of excessive perception hardware costs, and bring about qualitative breakthroughs for users in the field of intelligent driving.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.