At this year’s Chengdu Auto Show, the first national auto show of the year, many new cars still debuted as scheduled on the opening day, even though the show was cut short.

Compared with previous years, one of the biggest changes at this show was that more automakers talked up the intelligence of the whole vehicle on their stands, for example which smart driving and smart cabin functions their new cars can deliver. The prerequisite for all of these functions is chips.

Among the models shown at this year’s Chengdu Auto Show, those with more than 100 TOPS of chip computing power include the NIO ET5, Li Auto L9, XPeng G9, Avatr 11, Salon Mecha Dragon, Feifan R7, HiPhi Z, and WEY Mocha DHT-PHEV.

More than half of these models use NVIDIA Orin chips, while the rest use chips from Huawei and Qualcomm. Mobileye, which once held half of the ADAS market, is clearly no longer the star supplier.

What is behind this shift? And what can we learn from these cars equipped with big chips?

Mobileye is Cold, Orin is Hot

First, we have to acknowledge that a few years ago there were not many assisted driving chips or solutions on the market. As the first assisted driving supplier to enter the field of visual perception, Mobileye won a large number of customers worldwide with its bundled chip + visual perception algorithm solution.

However, as the intelligent driving industry has accelerated, domestic EV startups and other automakers have come to demand features that are new and rich. Mobileye has neither the intention nor the capability to iterate on functions together with these automakers, and its “black box” delivery keeps them from touching the core algorithms, so the partnerships naturally became unsustainable.

A more telling example is Tesla. Before the Mid-Autumn Festival in 2016, Tesla and Mobileye suddenly announced their split, and the two companies even traded public barbs. Mobileye CEO Amnon Shashua said: “Tesla’s autopilot feature crossed the safety line, so both parties terminated the partnership.”

Tesla, however, said that the main reason for the termination of the partnership was that Mobileye prevented Tesla from developing its computer vision perception technology, not safety concerns as stated by Mobileye.

In fact, Tesla’s complaint that Mobileye prevented it from developing its own computer vision perception technology stems from Mobileye’s closed system, which prevents any vendor from developing its own features on top of the Mobileye EyeQ chip. The trigger for this series of events was that Tesla cars using the EyeQ3 were involved in several serious accidents, which severely damaged user confidence in Tesla’s software.

What about NVIDIA’s previous-generation chip, Xavier, which arrived before the EyeQ4 reached start of production (SOP)?

Xavier offers up to 30 TOPS of computing power, 12 times the 2.5 TOPS of EyeQ4, and it is also programmable. So why was it used only in the XPeng P7?
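The 12× figure is simply a ratio of peak compute. As a rough sketch, the snippet below reproduces it from the two TOPS numbers quoted above. Peak TOPS is an upper bound, and, as the next paragraphs argue, it says little about how well a chip runs a given perception workload. The dictionary and function names are illustrative only, not from any vendor SDK.

```python
# Peak compute figures quoted in this article, in TOPS (tera operations per second).
CHIP_TOPS = {
    "Mobileye EyeQ4": 2.5,
    "NVIDIA Xavier": 30.0,
}


def compute_ratio(chip_a: str, chip_b: str) -> float:
    """Return how many times more peak compute chip_a has than chip_b."""
    return CHIP_TOPS[chip_a] / CHIP_TOPS[chip_b]


print(compute_ratio("NVIDIA Xavier", "Mobileye EyeQ4"))  # 12.0
```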

First, although both are systems-on-chip (SoCs), they target different use cases, and SoCs themselves can be built around GPUs or around ASICs. Xavier’s architecture combines a CPU, a GPU, and ASIC accelerators, making it essentially a general-purpose chip, while EyeQ4 is a dedicated visual perception chip.

In short, Xavier has ample computing power, but as a general-purpose chip it could not fully turn that power into the visual perception performance that intelligent driving urgently needed.

On the other hand, Nvidia Xavier only provides the basic chip, and car companies need to figure out how to handle domain control integration by themselves.

For example, XPeng developed its own computer vision perception algorithms, but building domain controllers is not its strength; Tier 1 suppliers have more experience there. So XPeng ported its algorithms onto Xavier, and Desay SV integrated the entire computing platform.

Besides Xavier, the IPU03 computing platform built by Desay SV also includes an Infineon chip that serves as a lower-level processor.

The advantage of multi-party cooperation like XPeng’s is that each company contributes its strengths to create a satisfying product. The downside is integration. Industry insiders say that intelligent driving solutions require long-term cooperation among multiple parties, and schedules often cannot be guaranteed.

Perhaps the difficulty of multi-party cooperation and in-house algorithm development is why domestic automakers were reluctant to adopt Xavier. Mobileye, by contrast, though closed, offered a stable, ready-to-use system with less hassle and a solid reputation.

As Features Pile Up, Chips Must Keep Up

But as driver assistance functions multiply, sensor counts rise, and high-performance chips arrive, automakers seem to be suffering from “computing anxiety” and are desperately stacking chips.

There is nothing wrong with this. As long as the budget allows, adding a few more chips to meet demand and provide redundancy is perfectly reasonable. In this trend, however, Mobileye is the hardest hit.

Meanwhile, the surge in demand for high-performance chips has set off a boom: NVIDIA is flooded with orders, while Huawei, Horizon Robotics, and Black Sesame are also entering the market.

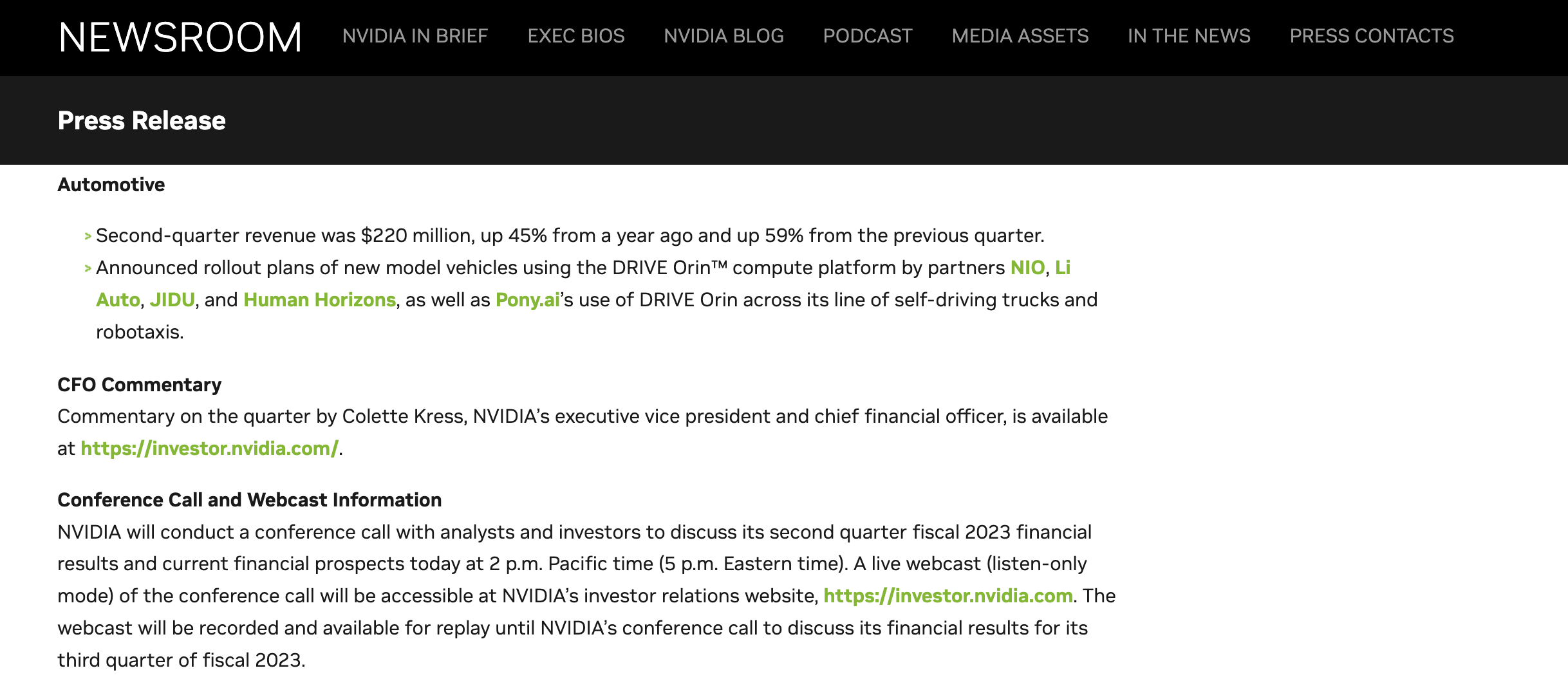

According to NVIDIA’s second-quarter report, the company’s revenue for the quarter rose 3% year over year to $6.7 billion.

The main reason it missed its $8.1 billion outlook was a 33% decline in gaming revenue. At the same time, NVIDIA’s automotive business posted its best quarter to date, with revenue of $220 million, up 59% from the first quarter. Apart from data centers, automotive was NVIDIA’s only growing segment this quarter.
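Because the report gives growth rates rather than the earlier base figures, a short back-calculation shows what those rates imply. This is a minimal sketch that uses only the rounded numbers quoted above, so the implied values are approximate; the helper name `implied_prior` is hypothetical.

```python
def implied_prior(current: float, pct_change: float) -> float:
    """Invert a percentage change: current = prior * (1 + pct_change / 100)."""
    return current / (1 + pct_change / 100)


# Figures as reported in this article (rounded).
auto_revenue_q2 = 220.0  # automotive revenue this quarter, $ millions
total_revenue_q2 = 6.7   # total revenue this quarter, $ billions
outlook = 8.1            # revenue outlook, $ billions

auto_revenue_q1 = implied_prior(auto_revenue_q2, 59)  # +59% quarter over quarter
total_year_ago = implied_prior(total_revenue_q2, 3)   # +3% year over year
shortfall = outlook - total_revenue_q2                # gap to the outlook

print(f"Implied previous-quarter automotive revenue: ${auto_revenue_q1:.0f}M")
print(f"Implied year-ago total revenue: ${total_year_ago:.2f}B")
print(f"Shortfall versus outlook: ${shortfall:.1f}B")
```

Run as is, it prints roughly $138M, $6.50B, and $1.4B; the last line shows how large the gap left by the gaming decline was.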

NVIDIA CFO Colette Kress said on the earnings call:

“The automotive artificial intelligence solutions have driven our strong growth, particularly in revenue related to intelligent cockpit and autonomous driving. We view Q2 as a turning point for our automotive revenue as NVIDIA Orin momentum accelerates.”

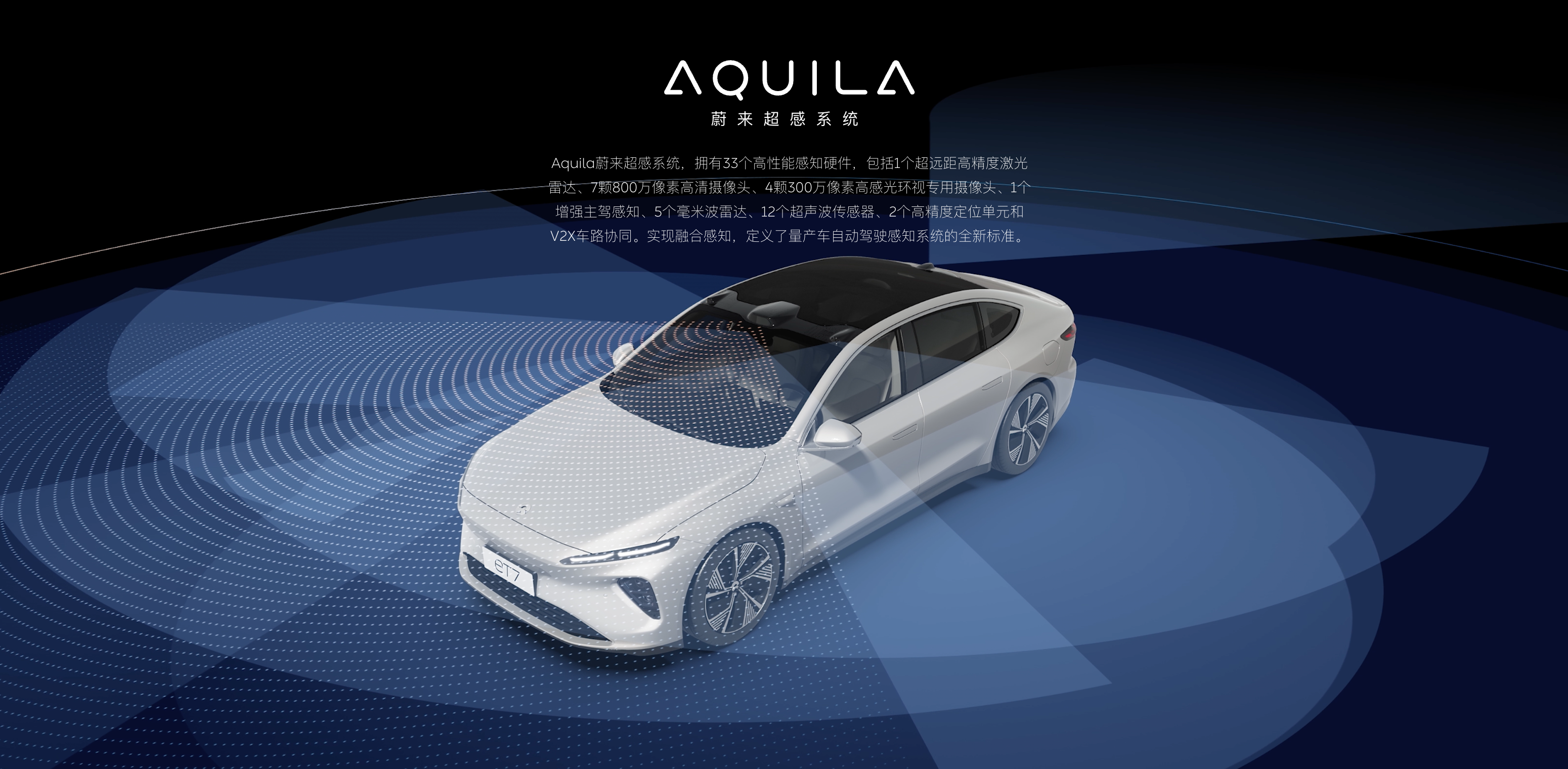

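NVIDIA has now received orders from more than 20 OEMs, including NIO, AIWAYS, XPENG, JETTA, Enovate, ZhiJi, Lotus, and Jaguar Land Rover, plus several from the robotaxi field, including Momenta and Pony.ai.

Orin not only delivers high computing power but, like its predecessor Xavier, is also programmable and extensible, which makes it highly open. NVIDIA founder Jensen Huang even blew his own trumpet, calling it “an indispensable chip for high-level autonomous vehicles.”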

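And since most domestic automakers care a great deal about having a “say” in R&D and want to keep the “soul” of the car in their own hands, turning to NVIDIA seems only natural.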

Another company that has been winning orders frequently over the past two years is the cross-industry giant Huawei.

Huawei’s MDC product lineup includes:

- MDC 810: 400 TOPS
- MDC 610: 200 TOPS
- MDC 310F: 64 TOPS
- MDC 210: 48 TOPS

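Huawei MDC’s current customers include the BAIC ARCFOX Alpha S HI Edition, Neta S, Avatr 11, and Salon Mecha Dragon. Huawei supplies them with a complete computing platform: the SoC hardware, an autonomous driving operating system, and AUTOSAR middleware.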

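Strictly speaking, MDC is not a very open intelligent driving computing platform. However, Huawei’s software cooperation model provides open interfaces that make it easy for automakers to integrate their own algorithms.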

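Huawei MDC’s toughest task right now is mass production. Several new cars equipped with Orin will launch in 2022, but so far ARCFOX is the only brand that can deliver the MDC computing platform in volume.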

New Players Enter the Field

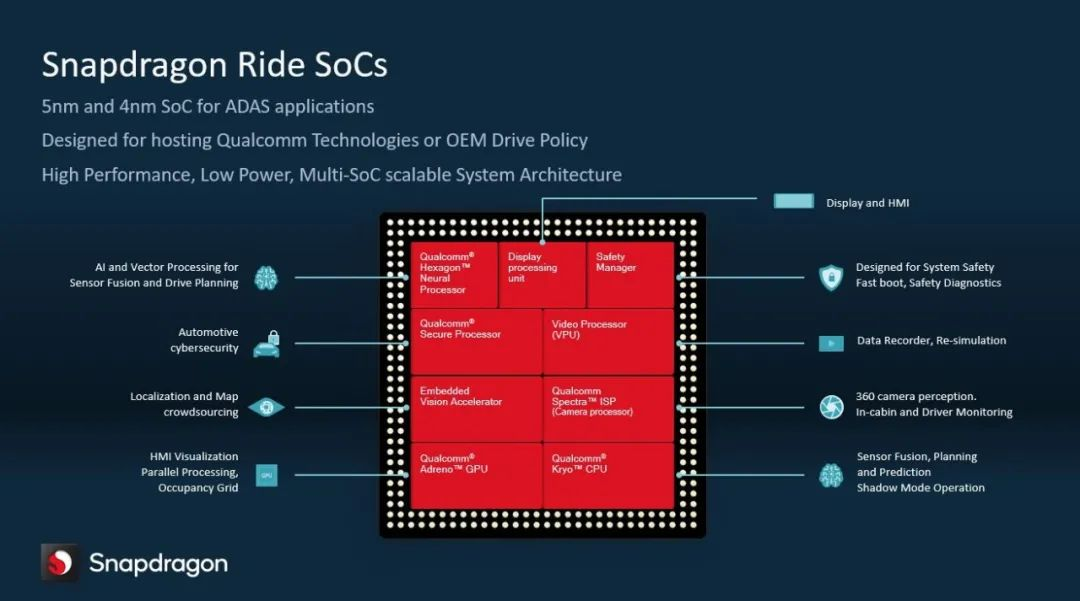

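As automotive electrical/electronic (E/E) architectures evolve, the shift from multiple domain controllers to a central domain controller is becoming an industry consensus. Qualcomm, which holds an absolute lead in cockpit chips, naturally wants to move into autonomous driving as well to keep itself irreplaceable.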

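At this year’s Chengdu Auto Show, WEY officially unveiled the lidar version of the Mocha DHT-PHEV, which is equipped with Qualcomm’s Snapdragon Ride intelligent driving computing platform.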

Unlike Orin’s single-SoC design, the Qualcomm Snapdragon Ride platform combines an SA8540P SoC with an SA9000, with an Infineon TC397 serving as the safety redundancy chip. It accepts input from 6 Gigabit Ethernet channels, 12 channels of 8-megapixel cameras, 5 millimeter-wave radars, and 3 lidars.
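These interface counts amount to a hardware budget that any sensor layout on the platform must fit within. As a hedged illustration, the sketch below encodes the four limits listed above as a plain dictionary and checks a proposed sensor suite against them. The key names, the function, and the example suite are all hypothetical; they do not come from Qualcomm documentation and do not describe the Mocha’s actual configuration.

```python
# Maximum sensor inputs for the Snapdragon Ride configuration described above.
RIDE_INPUT_LIMITS = {
    "gigabit_ethernet": 6,
    "camera_8mp": 12,
    "mmwave_radar": 5,
    "lidar": 3,
}


def check_sensor_suite(suite: dict[str, int],
                       limits: dict[str, int] = RIDE_INPUT_LIMITS) -> list[str]:
    """Return a list of problems with a proposed sensor suite; empty means it fits."""
    problems = []
    for kind, count in suite.items():
        if kind not in limits:
            problems.append(f"unsupported input type: {kind}")
        elif count > limits[kind]:
            problems.append(f"{kind}: {count} requested, {limits[kind]} supported")
    return problems


# A hypothetical sensor suite that asks for one lidar too many.
proposed = {"camera_8mp": 11, "mmwave_radar": 5, "lidar": 4}
print(check_sensor_suite(proposed))  # ['lidar: 4 requested, 3 supported']
```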

Besides its cooperation agreement with Great Wall, Qualcomm has also won BMW and Volkswagen, two major German legacy automakers, away from Mobileye.

If Huawei MDC and NVIDIA Orin power each automaker’s flagship models, then the mid-range market and legacy automakers are Horizon Robotics’ main battlefield.

Independent Suppliers, Holding the Banner of “Open”

Horizon Robotics and Black Sesame, two domestic suppliers, have been very active over the past two years, especially Horizon, which has announced a new OEM partner almost every month.

This has put tremendous pressure on Mobileye and Bosch.

Last summer, Horizon officially released the Journey 5, a domestic chip that offers not only 128 TOPS of computing power but also a frame rate of up to 1,283 FPS. It is built on the Bayes architecture of Horizon’s in-house BPU (Brain Processing Unit), which relies on large-scale heterogeneous computing.

At the time, Horizon was not yet a well-known supplier, so it often kept a low profile and approached partners with an open attitude.

The best illustration of Horizon’s open, collaborative approach is its 2021 cooperation with Li Auto on the Li ONE, which carries the Journey 3. However, as its design wins multiply, Horizon clearly cannot provide “nanny-style” support to every OEM.

So the Horizon team proposed three open cooperation models. The first resembles NVIDIA’s: Horizon provides the SoC and the operating system, and customers develop on top of them.

In the second, Horizon opens its underlying DSP software, SoC, operating system, and so on to partners, who can do secondary development on this foundation to work more efficiently. A typical case is QCraft, which developed its NOA function on the Journey 5 and greatly shortened its development cycle.

The third goes further: teaching automakers to make their own SoCs. Horizon licenses its BPU to partners and supports those with the means and the will to develop their own chips, so they can differentiate themselves.

When Horizon launched the Journey 5 last year, founder Yu Kai emphasized expanding its “circle of friends.” This domestic high-compute chip has since reached cooperation intentions with many automakers, including SAIC, Great Wall, JAC Motors, Changan, BYD, NIO, Voyah, Hongqi, and Niuchuang Ziyoujia.

The Chips Are in Place; Now Deployment Is What Matters

This year can be seen as the first year NVIDIA Orin reaches production cars. Models already equipped, or soon to be equipped, with Orin include the NIO ET7, Li Auto L9, NIO ES7, Feifan R7, XPeng G9, NIO ET5, and IM L7.

Li Auto L9

Among these models, some already offer mature highway navigation assistance built on Orin, and the Li Auto L9 has progressed fastest. Deliveries began on August 30, and owners can switch on Li Auto NOA, developed on Orin, as soon as they receive their cars.

The Li Auto L9’s intelligent driving domain controller, called the IPU04, is supplied by Desay SV. Yes, it is an upgraded version of the IPU03 that XPeng uses. Because it is built on high-compute chips, this generation of domain controller spans 110 to 1,016 TOPS. Much like the cooperation between XPeng and Desay SV, the Li Auto team ported its algorithms to Orin and left integration to the supplier.

As sensor counts rise, fusing data from multiple sensors also becomes a challenge for intelligent driving teams. As L9 deliveries scale up, we look forward to more feedback.

Feifan R7

We had expected NIO’s NT 2.0 models to be the next to offer navigation assistance after the Li Auto L9. Unexpectedly, Feifan got there first.

This automaker, which has had almost no voice in intelligent driving, surprised us. Because the R7 has not launched yet, its navigation assistance cannot be delivered at scale, but in our exclusive internal test, the Orin-based highway navigation was already highly polished.

How did Feifan do it? From internal conversations with Feifan, we learned that it has built an intelligent driving R&D team of more than 500 people and insists on full-stack in-house development. It was also the first automaker to obtain NVIDIA Orin samples.

So in September, Feifan is expected to deliver not only a flagship model with fully loaded hardware but also a navigation assistance system that exceeds expectations.

XPeng G9

Among this year’s models, the G9 is probably the one most people are waiting for. First, the car was unveiled last year, along with details of its new-generation XEEA architecture and XPILOT 4.0 assisted driving hardware, which whetted the public’s appetite. Second, XPeng has always been one of the new automakers pushing “intelligence” hardest, leading development over the past two years and rolling out many new use cases and functions.

So the G9, due for delivery in September, is expected to ship with NGP, as anticipated. Given XPeng’s investment and in-house capabilities, it would not be surprising if it also offered City NGP (CNGP) in a few cities.

We look forward to seeing what surprises the G9 and its high-compute chip bring to users, and how it pushes the whole industry forward.

ARCFOX Alpha S HI Edition

The ARCFOX Alpha S HI Edition is, without question, the car users have been waiting for most.

Last year, Huawei raised users’ expectations sharply by claiming near-L4 capability, but the ARCFOX with Huawei HI did not reach users until July this year. ARCFOX recently pushed NCA, its navigation assisted driving for closed roads, to users, but according to user feedback the feature still has much room for improvement.

Before this, Huawei said it would roll out NCA navigation driving on city roads in ten cities nationwide by the end of this year, so it remains open who will be first to deliver it in urban scenarios.

WEY Mocha DHT-PHEV LIDAR Version

Finally, the most noteworthy car is the WEY Mocha DHT-PHEV LIDAR version. It is the only model launched this year that uses the high-performance Snapdragon Ride intelligent driving computing platform.

The car also brings together the strengths of three leading companies: Great Wall, Qualcomm, and Horizon Robotics.

According to WEY’s publicity, it is eager to claim the title of the first to offer city navigation, but only mass-production delivery can substantiate intelligent driving claims.

“Take Small Steps Quickly” Will Become the Main Theme

Having reviewed the functions that various automakers can deliver, or hope to deliver, over the next two years, you may notice that many of them are using L4/L5-grade hardware to perform functions similar to what is already available. When will a Chinese version of FSD Beta arrive?

Preparing hardware first and iterating software afterward is common practice in the industry. In this process, whoever delivers more advanced functions and an experience that reshapes users’ expectations faster than others will gain an edge in this marathon.

The hardware arms race is only the 1.0 stage, and software capabilities of the 2.0 stage are more important.

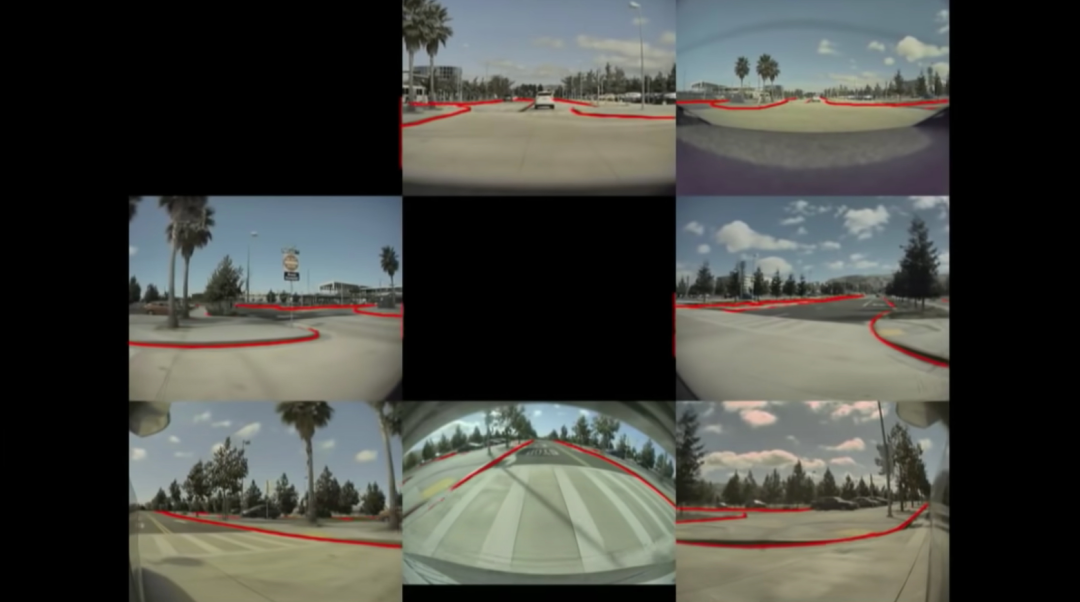

In February 2020, Andrej Karpathy, Tesla’s Senior Director of AI, introduced bird’s-eye-view (BEV) networks at the ScaledML 2020 conference.

Tesla fuses the 2D images from its 8 cameras into an Occupancy Tracker. The result is then projected from above onto the Z+ plane, just like a bird’s-eye view.

Previously, Tesla used the vehicle’s cameras to capture 2D images of the road environment and processed each image separately, performing feature extraction, recognition, and prediction in 2D space. However, large objects captured by several cameras at once were perceived unstably and were sometimes even misrecognized.

The BEV approach is meant to fix this fragmented, discontinuous perception space.
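To make the multi-camera problem concrete, here is a deliberately simplified geometric sketch, not Tesla’s learned network (which learns the image-to-BEV transform rather than computing it from fixed geometry). It assumes each camera already reports an object’s range and bearing, transforms those detections into a shared vehicle frame using hypothetical camera mounting poses, and marks cells in one bird’s-eye-view grid. An object seen by two cameras then lands in a single cell instead of appearing as two separate 2D detections. The poses, cell size, and grid extent are all assumptions.

```python
import math

# Hypothetical camera mounting poses in the vehicle frame: (x m, y m, yaw rad).
# x points forward, y points left; yaw 0 means the camera looks straight ahead.
CAMERA_POSES = {
    "front": (2.0, 0.0, 0.0),
    "left": (1.0, 1.0, math.pi / 2),
    "right": (1.0, -1.0, -math.pi / 2),
}

CELL_SIZE = 1.0  # metres per BEV grid cell (assumed)
GRID_HALF = 20   # grid spans -20..19 cells on each axis (assumed)


def to_vehicle_frame(camera: str, rng: float, bearing: float) -> tuple[float, float]:
    """Convert a detection at (range, bearing) in a camera's frame to vehicle x, y."""
    cx, cy, yaw = CAMERA_POSES[camera]
    angle = yaw + bearing
    return cx + rng * math.cos(angle), cy + rng * math.sin(angle)


def build_bev(detections: list[tuple[str, float, float]]) -> set[tuple[int, int]]:
    """Rasterise detections from all cameras into one shared set of occupied cells."""
    occupied = set()
    for camera, rng, bearing in detections:
        x, y = to_vehicle_frame(camera, rng, bearing)
        i, j = math.floor(x / CELL_SIZE), math.floor(y / CELL_SIZE)
        if -GRID_HALF <= i < GRID_HALF and -GRID_HALF <= j < GRID_HALF:
            occupied.add((i, j))
    return occupied


# A truck at vehicle position (10.5, 5.5) m, seen by both the front and left cameras.
# Each tuple is that same point expressed as range and bearing in one camera's frame.
front_view = ("front", math.hypot(8.5, 5.5), math.atan2(5.5, 8.5))
left_view = ("left", math.hypot(4.5, -9.5), math.atan2(-9.5, 4.5))
print(build_bev([front_view, left_view]))  # {(10, 5)}
```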

Using a vehicle’s multiple cameras facing different directions to build a BEV has gradually become an industry consensus, and XPeng, Hozon Auto, and Li Auto have followed suit.

Compared with Tesla, the flagship models of leading domestic automakers carry richer sensor suites, so their software must fuse those sensors by stitching their perception results together.

XPeng, for example, has adopted a “full-fusion” strategy. Before this, the industry generally used either front (early) fusion or rear (late) fusion. In front fusion, the system combines the data from all sensors and outputs a single perception result, which is then used for decision-making. In rear fusion, each sensor outputs its own perception result, and the system makes decisions from all of them. Full fusion means the system obtains the front-fusion perception result and compares it with the results obtained from each individual sensor.
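The three strategies differ mainly in where the combining happens. The toy sketch below is a hypothetical illustration, not XPeng’s implementation: it treats “perception” as averaging range readings to a single object. Early fusion pools every sensor’s raw readings before perceiving once, late fusion perceives per sensor and then combines the results, and the full-fusion variant takes the early-fusion result and cross-checks it against each sensor’s own result, flagging sensors that disagree by more than an assumed tolerance. The readings are made up.

```python
from statistics import mean

Raw = dict[str, list[float]]  # sensor name -> raw range readings (m) for one object


def perceive(readings: list[float]) -> float:
    """Toy perception step: estimate the object's range as the mean reading."""
    return mean(readings)


def early_fusion(raw: Raw) -> float:
    """Front (early) fusion: pool raw data from all sensors, then perceive once."""
    pooled = [r for readings in raw.values() for r in readings]
    return perceive(pooled)


def late_fusion(raw: Raw) -> float:
    """Rear (late) fusion: each sensor perceives on its own; combine the results."""
    return mean(perceive(readings) for readings in raw.values())


def full_fusion(raw: Raw, tolerance: float = 1.0) -> tuple[float, list[str]]:
    """Take the early-fusion result and cross-check it against each sensor's result.

    Returns the fused estimate plus the sensors whose own estimate differs from it
    by more than `tolerance` metres.
    """
    fused = early_fusion(raw)
    disagreeing = [name for name, readings in raw.items()
                   if abs(perceive(readings) - fused) > tolerance]
    return fused, disagreeing


# Hypothetical readings: camera and radar agree, lidar is off (e.g. a bad return).
raw = {"camera": [30.0, 32.0], "radar": [31.0, 31.0], "lidar": [35.0]}
print(early_fusion(raw))  # 31.8
print(late_fusion(raw))   # 32.333...
print(full_fusion(raw))   # (31.8, ['lidar'])
```

The two plain strategies disagree here because early fusion weights each reading equally, so a sensor with more readings dominates, while late fusion weights each sensor equally; the cross-check in the full-fusion variant is one simple way to surface the outlier instead of averaging it in.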

More complex sensor architectures and larger volumes of perception results will test automakers’ in-house R&D capabilities.

In the next stage, the development capacity of software algorithms will be the focus of competition.

Final Thoughts

This year’s theme in intelligent driving is advanced driver assistance built on powerful chips. Judging by the momentum, the fast-paced in-house approach led by companies such as Li Auto and XPeng may lead the industry, although Li Auto’s city NOA development for the L9 is clearly behind XPeng’s.

Most legacy automakers are still working to close the gap with the new players, and more highway navigation assistance is expected to appear this year, which is good news for spreading these functions and raising user awareness.

As sensor counts grow and onboard perception improves, some automakers will try to move away from high-definition maps and keep expanding their capabilities by relying on the vehicle’s own perception.

The industry is still in a key transition: from low to high computing power, from front-facing to omnidirectional perception, and from vision plus millimeter-wave radar to multi-sensor fusion. In terms of functions, we believe navigation assisted driving will become widespread in the short term, and urban navigation will arrive in the not-too-distant future, even if it is not easy to use at first. But nothing happens overnight; improving capabilities and raising user awareness go hand in hand.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.