Translation

This analysis is based on the 8-minute autonomous driving demo video released by Xiaomi on August 11th, 2022.

I was surprised to see that Xiaomi unveiled its interim progress in autonomous driving today, and the progress is faster than I expected. It deserves careful analysis. (For best results, please watch the original 8-minute video before reading this article.)

First, the conclusions:

- In terms of autonomous driving technology, Xiaomi’s current demonstration does not show an industry-leading breakthrough.
- Overall, its level is comparable to that of a modified ROBO Taxi vehicle.
- However, considering how little time there was to prepare the test car, the progress is still quite impressive.

Modified Car Analysis

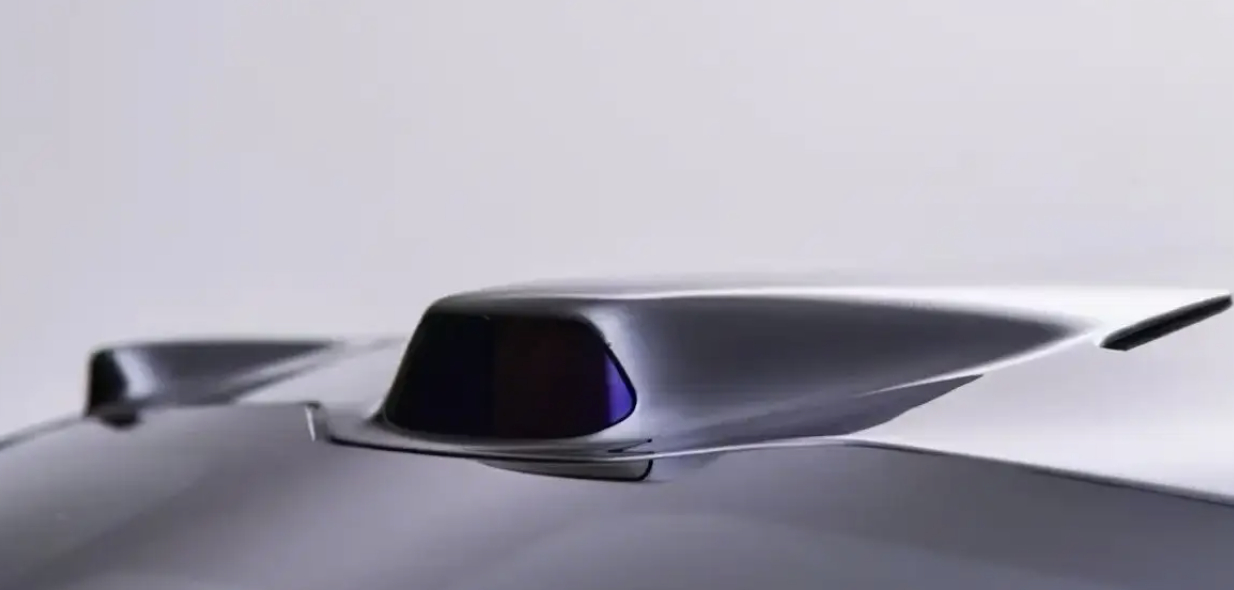

The video showed two types of Xiaomi autonomous driving test cars with relatively primitive sensor layouts: a “big hat” rack mounted on the roof to carry the LiDAR and cameras.

This is a common modification approach among ROBO Taxi-type “autonomous driving companies,” and it also suits Xiaomi, which does not yet have a prototype car.

The advantage is that it gives the sensors the widest possible field of view; the disadvantage is that consumer cars cannot be built this way. Sensors must be integrated into the vehicle’s overall industrial design. Therefore, a long process of engineering integration and tedious testing still lies ahead before this setup can reach a production car.

To put it plainly: we cannot currently infer the industrial design and sensor layout of Xiaomi’s 2024 production car from the state of its autonomous driving test car.

CNOA Status Analysis

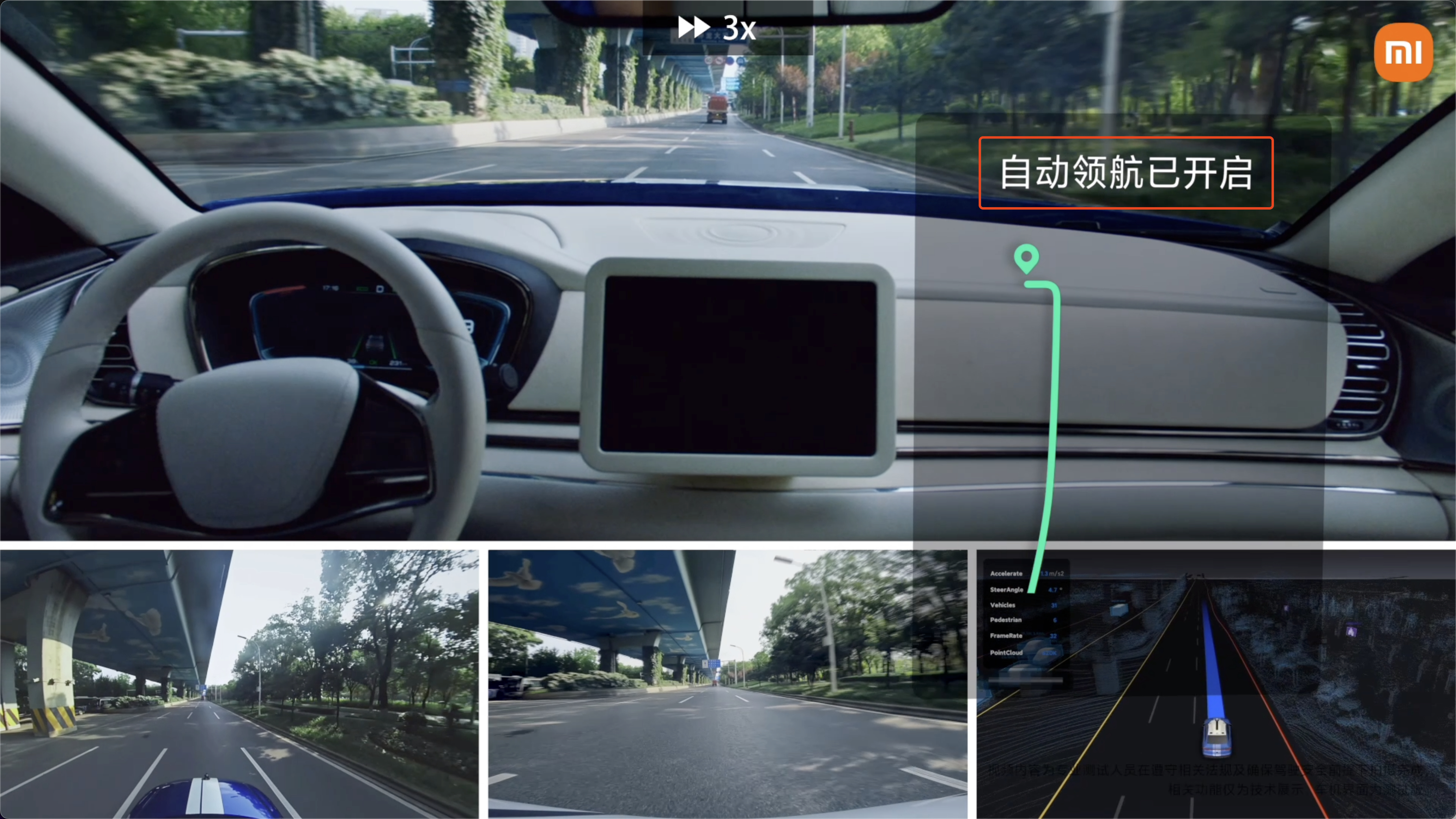

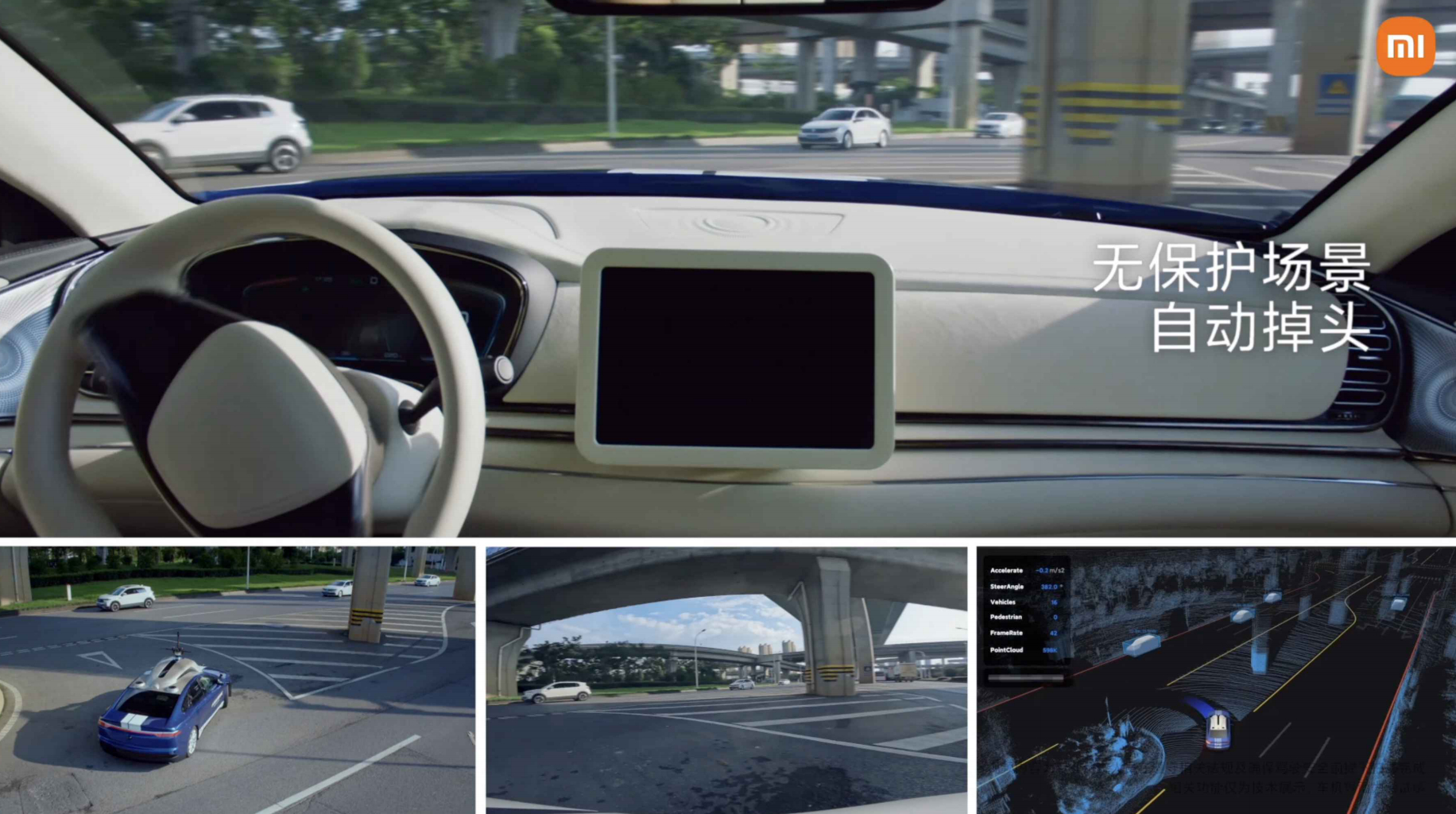

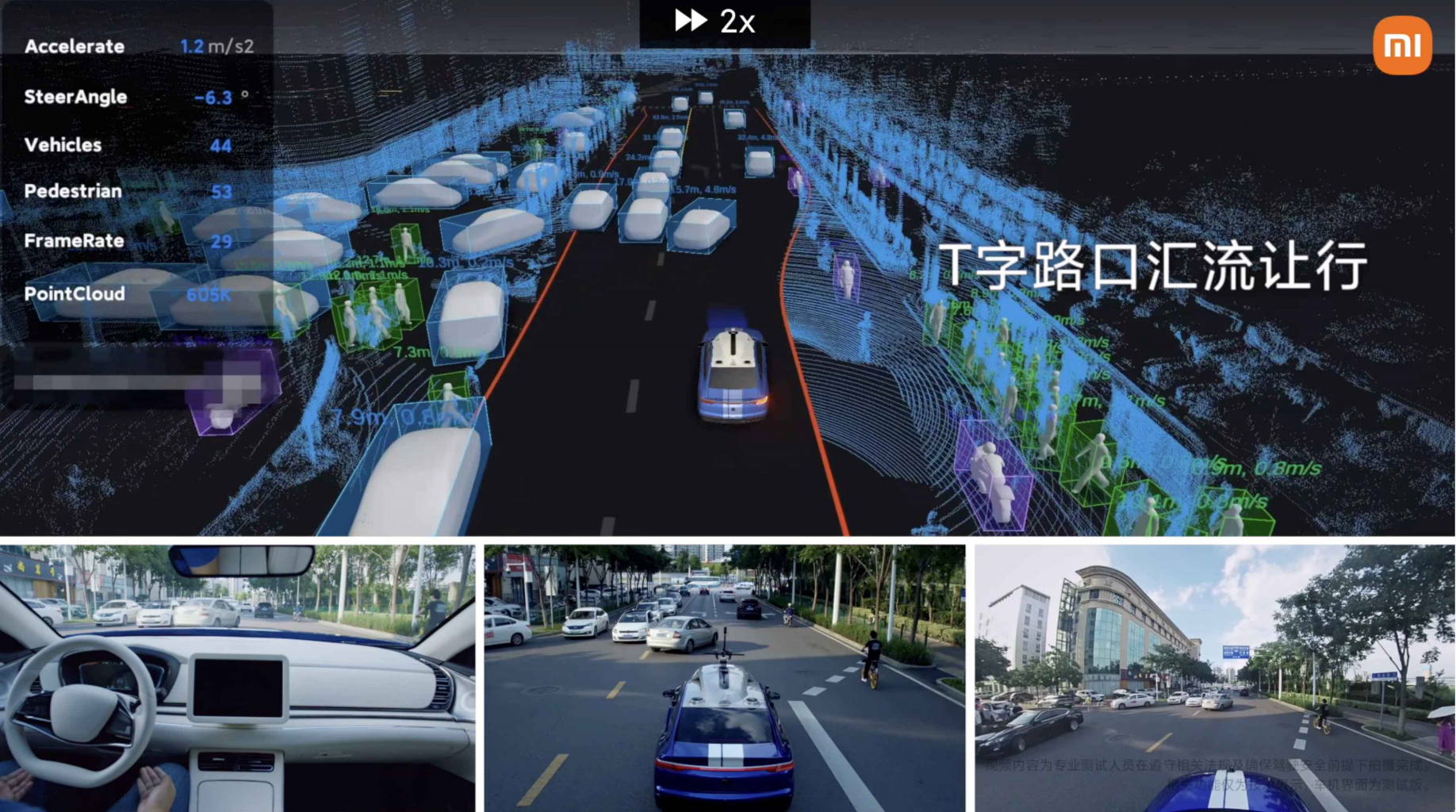

The first part of the video shows urban navigation assistance, also known as CNOA (City Navigate on Autopilot). This is the feature everyone wants: open the in-car map navigation, select a destination, and the car drives there automatically.

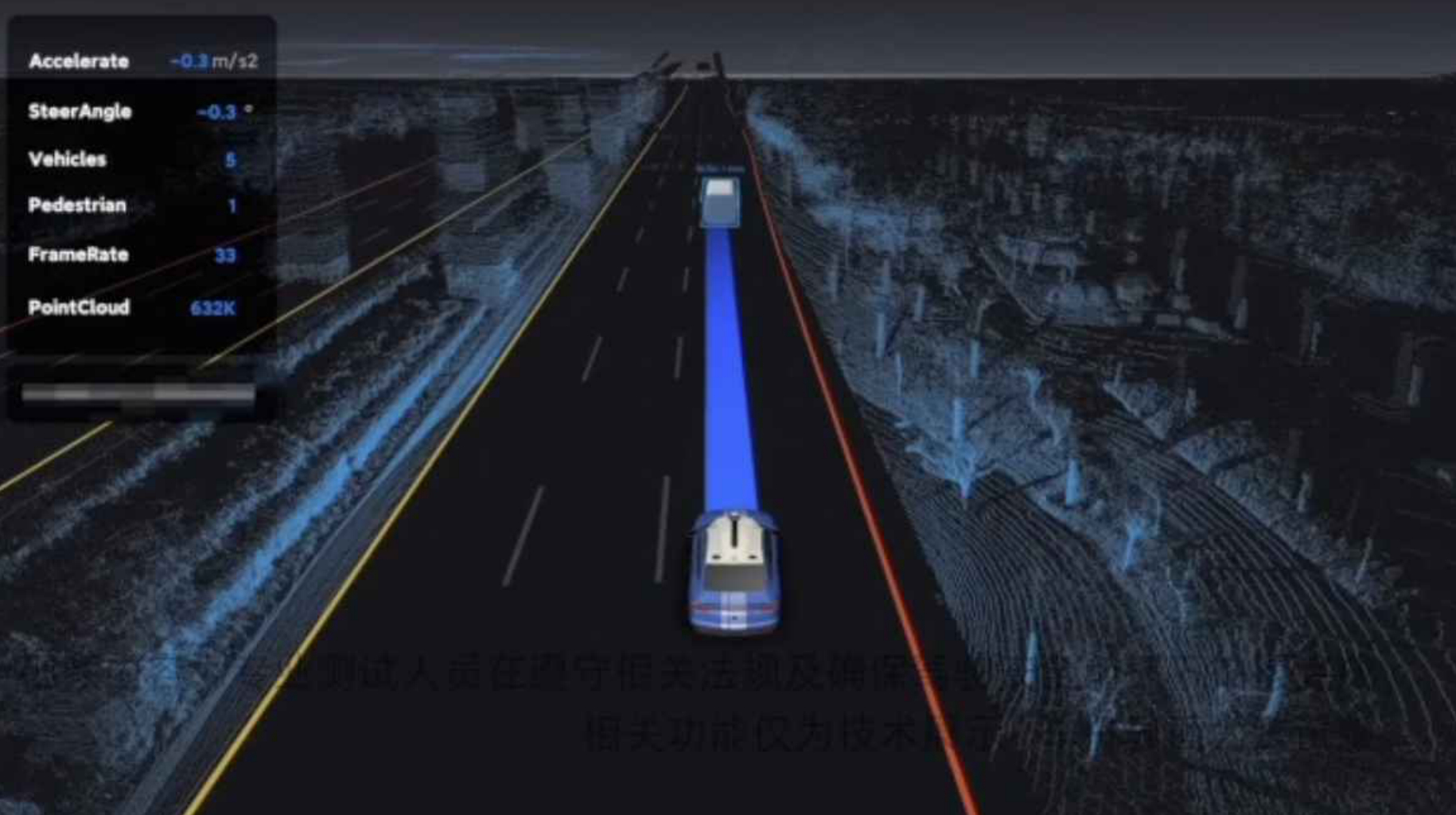

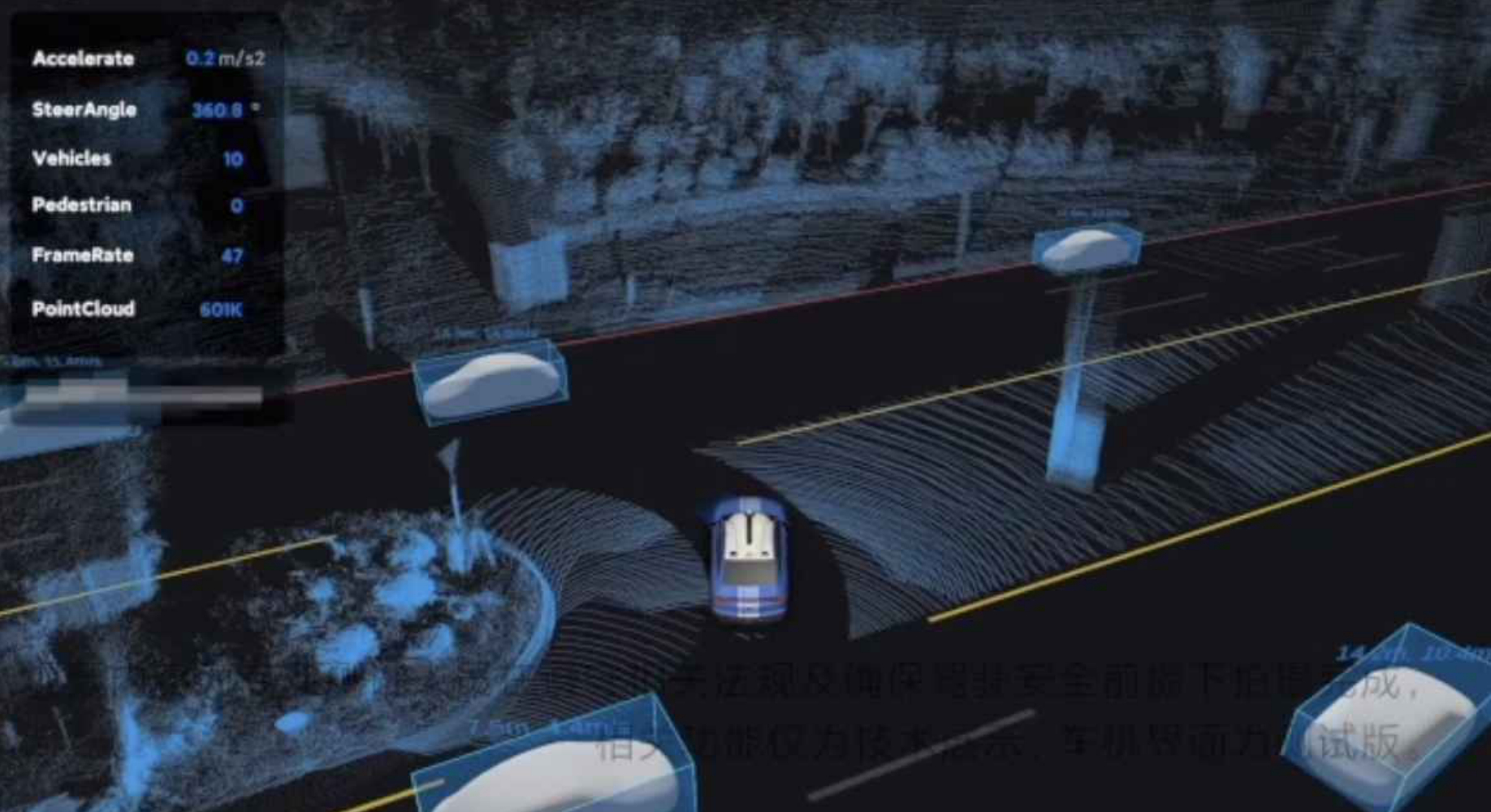

As a front-runner, Xiaomi’s CNOA must rely heavily on high-precision maps. Pay attention to the visualization in the lower right corner: the 3D point cloud of the road is unusually stable, which is unlikely to come from real-time modeling, so it is presumably a visual reconstruction of the high-precision map.

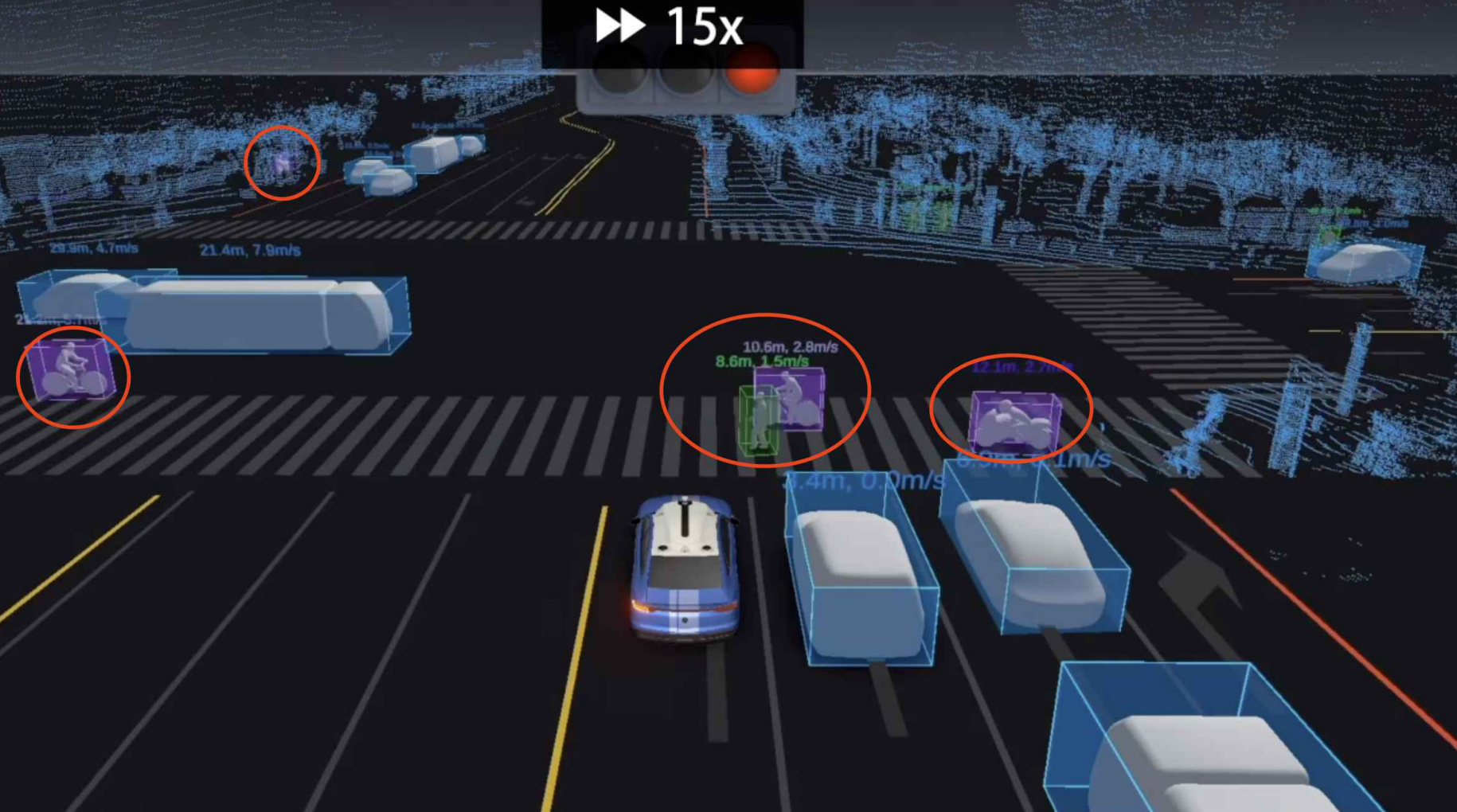

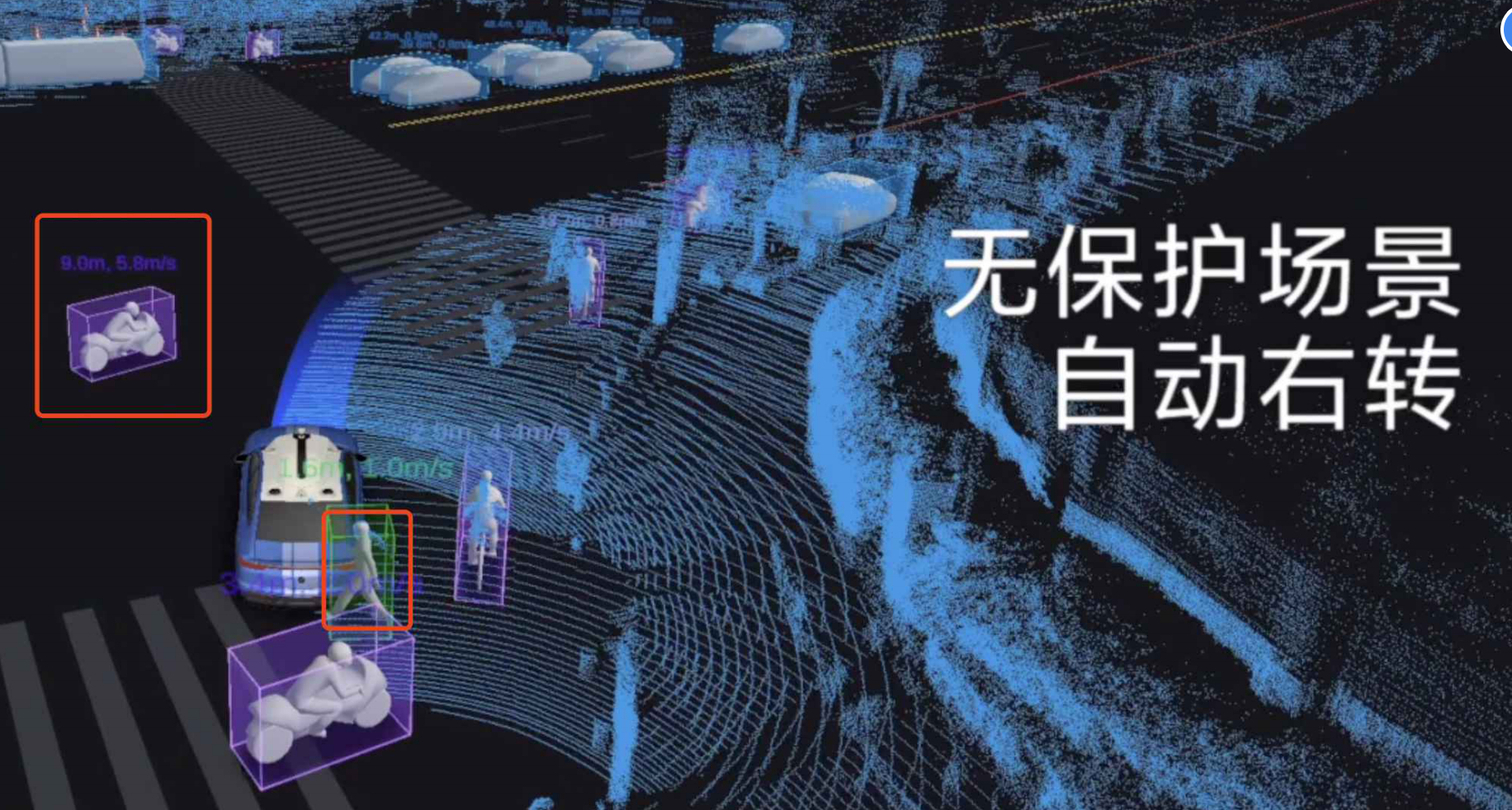

However, Xiaomi’s perception stack is very well executed. Note the visualization here: the recognition and reconstruction of surrounding traffic show no noticeable jitter.

This shows that the vehicle’s perception system is very stable and captures targets accurately. The smoothing algorithm for the 3D reconstruction visualization is well tuned; moreover, the models are fairly elegant and the animation looks quite fluid, which I like.
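The video does not reveal how Xiaomi smooths its visualization. As a minimal sketch, assuming each tracked object arrives once per frame as a 3D box, an exponential moving average is one simple way to suppress frame-to-frame jitter; the `Box3D` type, the `smooth_track` helper, and the `alpha` value are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Box3D:
    """Hypothetical tracked-object box: center and size in metres, heading (yaw) in radians."""
    x: float
    y: float
    z: float
    length: float
    width: float
    height: float
    yaw: float


def wrap_angle(a: float) -> float:
    """Map an angle in radians to the range [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def ema_box(prev: Box3D, new: Box3D, alpha: float) -> Box3D:
    """Blend a new detection into the previous smoothed box with an exponential moving average."""
    def mix(old: float, cur: float) -> float:
        return old + alpha * (cur - old)

    return Box3D(
        x=mix(prev.x, new.x),
        y=mix(prev.y, new.y),
        z=mix(prev.z, new.z),
        length=mix(prev.length, new.length),
        width=mix(prev.width, new.width),
        height=mix(prev.height, new.height),
        # Blend heading along the shortest arc so that +3.0 rad and -3.0 rad
        # average near pi instead of near 0.
        yaw=wrap_angle(prev.yaw + alpha * wrap_angle(new.yaw - prev.yaw)),
    )


def smooth_track(detections: list[Box3D], alpha: float = 0.3) -> list[Box3D]:
    """Return one smoothed box per frame; the first frame is passed through unchanged."""
    smoothed: list[Box3D] = []
    for det in detections:
        smoothed.append(det if not smoothed else ema_box(smoothed[-1], det, alpha))
    return smoothed
```

A smaller `alpha` gives steadier boxes at the cost of lag. A Kalman filter is a common, more principled alternative, since it also accounts for measurement noise and object motion.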

Also note the three-dimensional bounding box around each 3D model, which is standard practice. In an earlier self-driving demonstration video from a certain automaker, targets were marked with two-dimensional boxes, which drew ridicule from industry peers.

Avoiding large vehicles and cars cutting in are routine operations. Xiaomi’s test vehicle has far better perception conditions than ordinary vehicles, so it is no surprise that it handles them; it would be a problem if it could not.

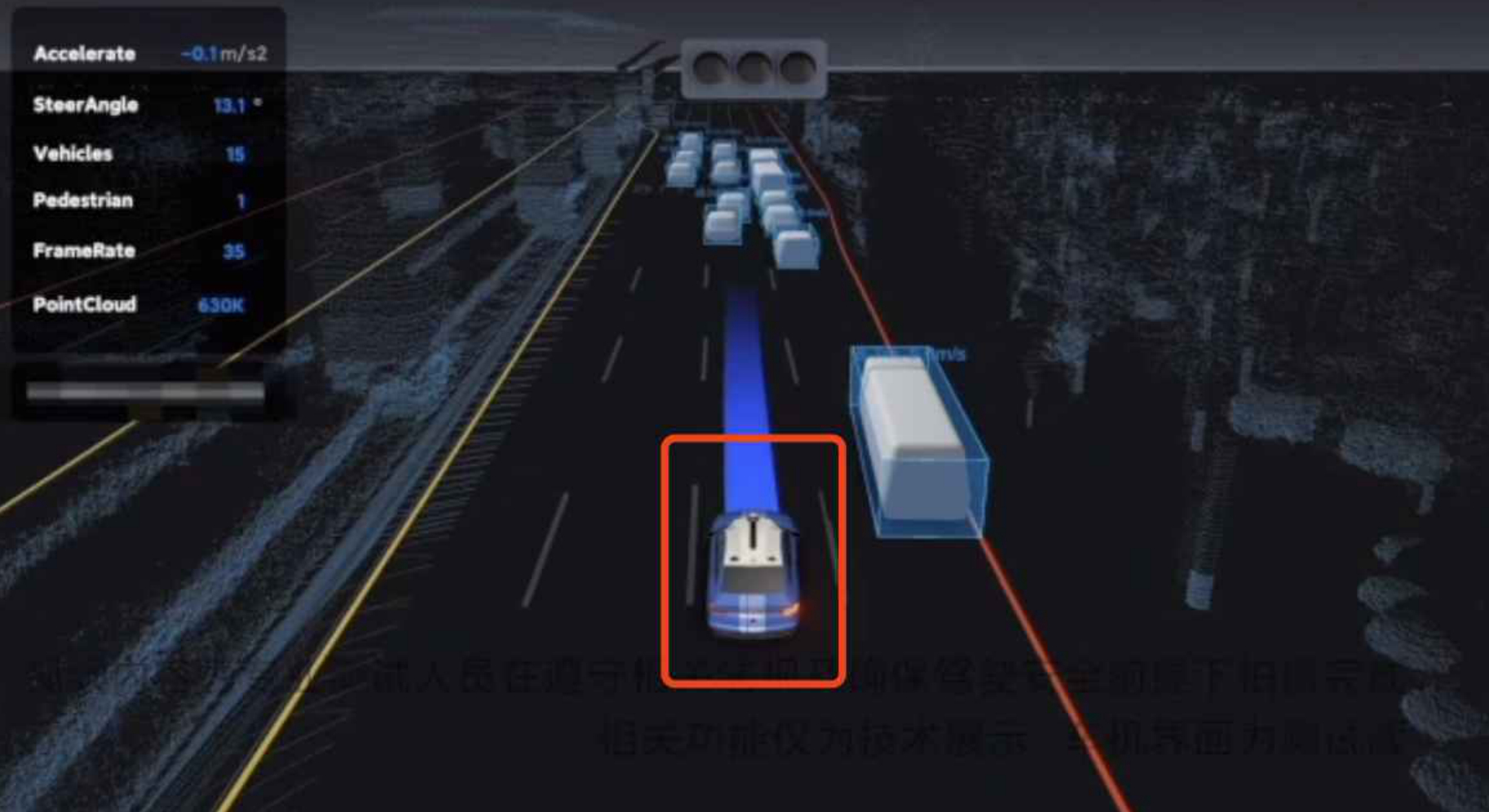

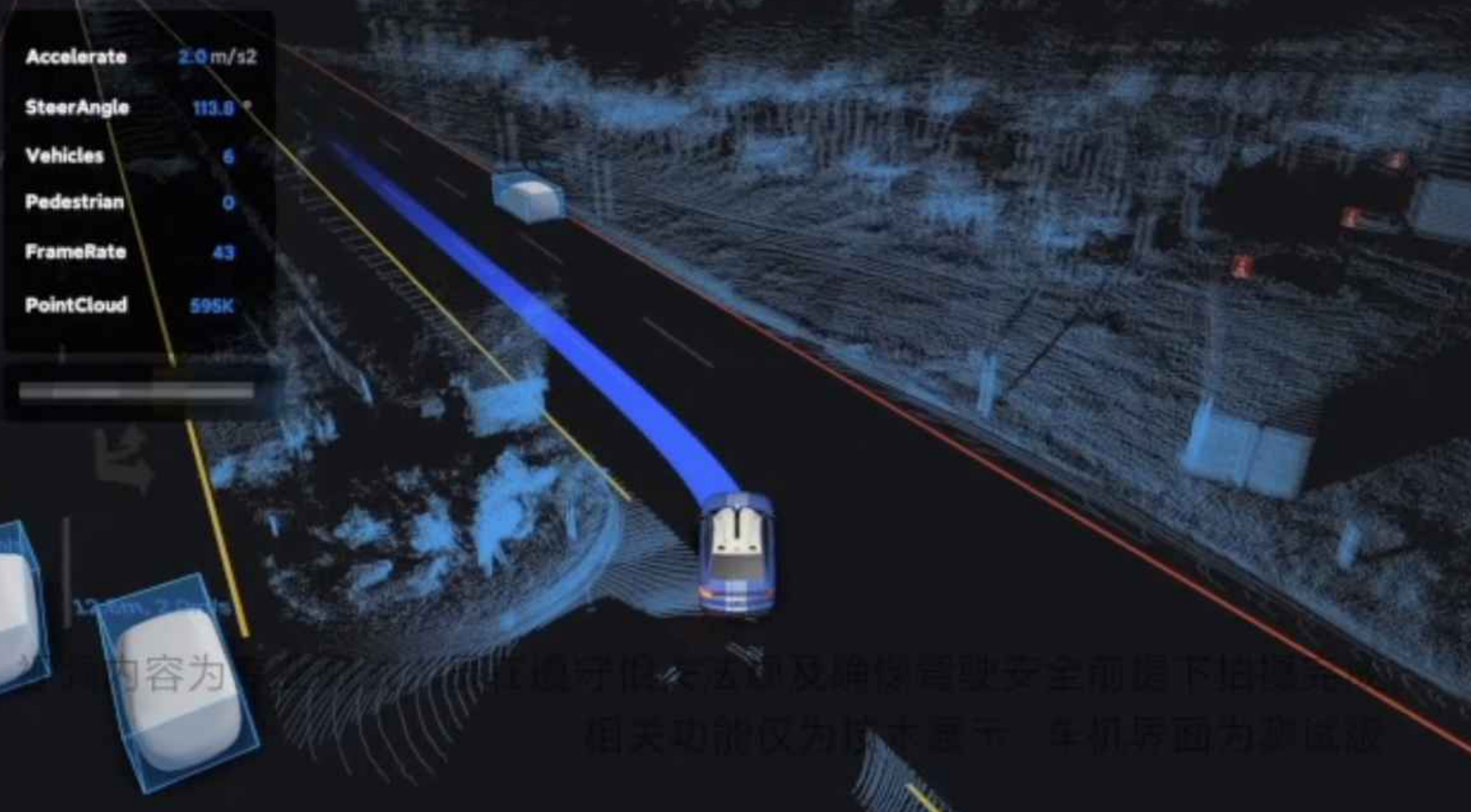

The signature maneuver of NOA: autonomous decision-making on the ramp and active lane changes.

Xiaomi’s planning and control here is done well. The lane-changing strategy is not rigidly based on the distance to the vehicle in front, but on relative speed. Although traffic in the left lane has not yet pulled ahead of the ego lane, the system observes that it is moving at a higher relative speed, so it changes lanes immediately to improve traffic efficiency. This strategy is quite clever, but the video moves too fast to judge the smoothness of the lane-change control.
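The article infers this strategy from the video only. As a minimal sketch, assuming perception summarizes each lane’s traffic speed and the free gaps around the merge slot, a relative-speed trigger gated by a time-headway gap check looks like this; `LaneState`, `should_change_lane`, and all thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LaneState:
    """Hypothetical per-lane summary that a perception stack might provide."""
    traffic_speed_mps: float  # typical speed of traffic in this lane
    rear_gap_m: float         # free space behind the slot the ego car would move into
    front_gap_m: float        # free space ahead of that slot


def should_change_lane(
    ego_speed_mps: float,
    current: LaneState,
    target: LaneState,
    min_speed_gain_mps: float = 2.0,
    min_time_gap_s: float = 1.5,
) -> bool:
    """Discretionary lane change triggered by relative speed, gated by safe gaps.

    Instead of waiting until the lead car is close, the ego car moves over as soon
    as the target lane flows meaningfully faster than its current lane.
    """
    speed_gain = target.traffic_speed_mps - current.traffic_speed_mps
    # Required gap scales with ego speed (a time-headway rule).
    min_gap_m = ego_speed_mps * min_time_gap_s
    gaps_ok = target.rear_gap_m >= min_gap_m and target.front_gap_m >= min_gap_m
    return speed_gain >= min_speed_gain_mps and gaps_ok
```

A purely distance-based rule would only fire once the lead car became an obstacle; keying on `speed_gain` lets the car move over early, while the gap check keeps the maneuver safe.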

Hazard avoidance is routine. Everyone is working hard to upgrade their perception systems and develop multi-layer perception combining LiDAR, surround-view cameras, and millimeter-wave radar, which is what is being done here.

An unprotected U-turn is a highly difficult scenario. The perception system must work at full capacity, while the decision-making system must balance traffic efficiency against safety: turn too hesitantly and you will never make it, turn too aggressively and you will cause an accident.

Thanks to the excellent vantage point on the car’s roof, the test car’s perception system has full 360-degree coverage, which makes the U-turn relatively smooth.

However, on Xiaomi’s mass-produced cars, LiDAR can only cover the forward hemisphere, and millimeter-wave radar has many blind spots. In this scenario, the car would have to rely purely on surround-view camera perception. This is the engineering problem Xiaomi needs to solve for its production cars.
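The efficiency/safety balance in an unprotected U-turn is often framed as gap acceptance. A minimal sketch, assuming each oncoming vehicle is tracked with a distance to the conflict zone and a constant closing speed; `Oncoming`, `accept_gap`, and the timing parameters are hypothetical and not Xiaomi’s method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Oncoming:
    """Hypothetical oncoming-vehicle track relative to the U-turn conflict zone."""
    distance_m: float  # distance to the conflict zone
    speed_mps: float   # speed toward the conflict zone


def time_to_arrival(v: Oncoming) -> float:
    """Seconds until the vehicle reaches the conflict zone, assuming constant speed."""
    if v.speed_mps <= 0.0:
        return float("inf")  # stopped or moving away: never arrives
    return v.distance_m / v.speed_mps


def accept_gap(oncoming: list[Oncoming], maneuver_time_s: float, safety_margin_s: float) -> bool:
    """Go only if every oncoming vehicle arrives after the ego car has cleared the zone."""
    earliest = min((time_to_arrival(v) for v in oncoming), default=float("inf"))
    return earliest >= maneuver_time_s + safety_margin_s
```

Raising `safety_margin_s` makes the car safer, but in dense traffic it may wait indefinitely; lowering it speeds things up at the cost of risk. That is exactly the trade-off of turning too slowly versus too fast.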

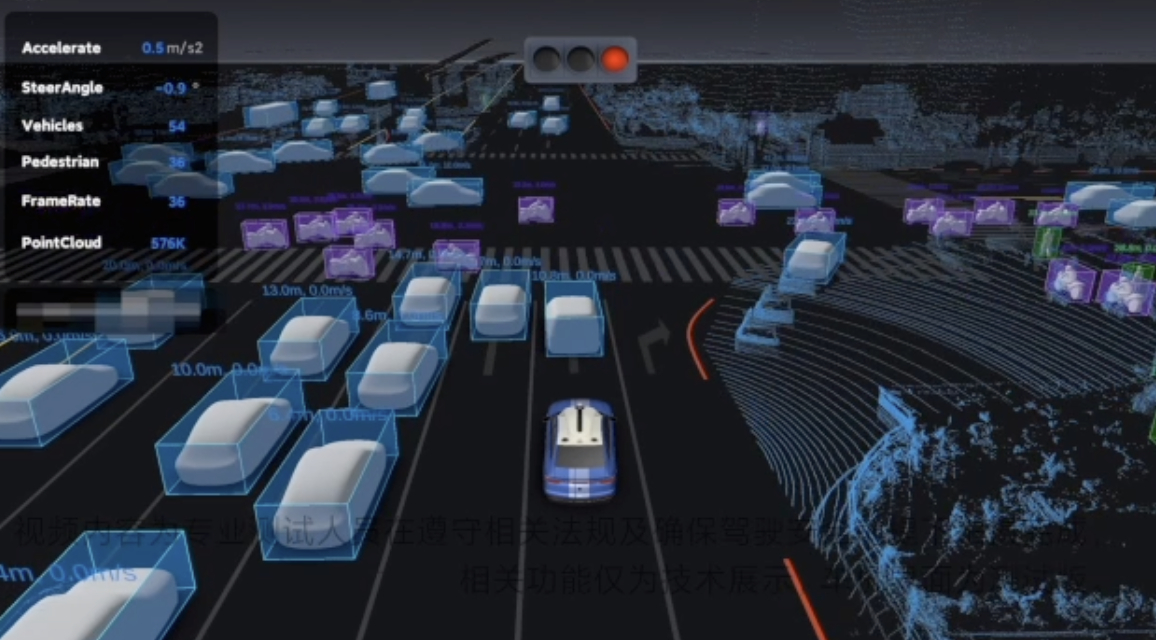

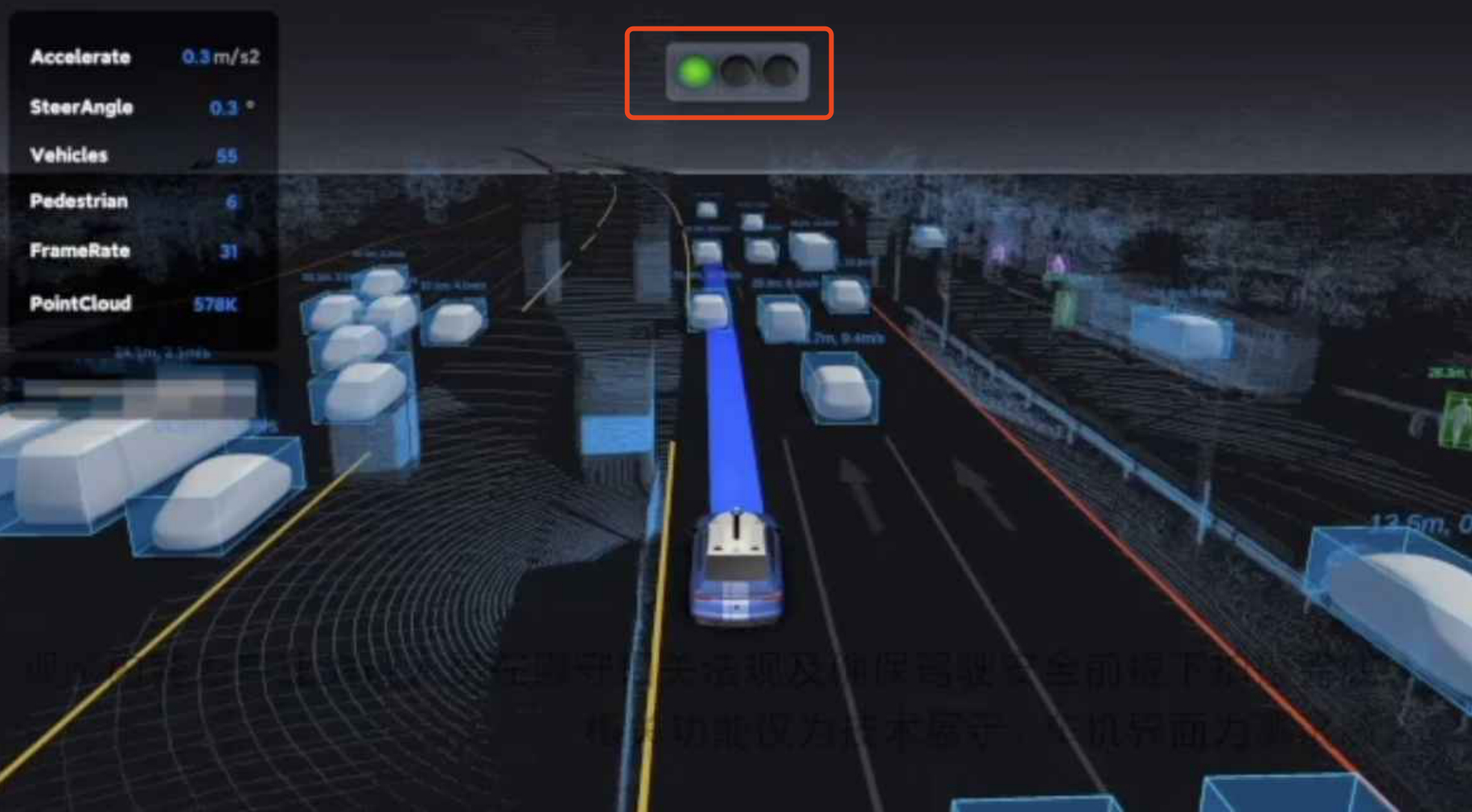

There is a traffic-light visualization, but it is unclear whether the information comes from V2X (the car is running on the Wuhan autonomous driving demonstration road) or is recognized purely by vision. If the latter, Xiaomi’s technology is in good shape.

Avoiding buses (large vehicles) is a basic operation.

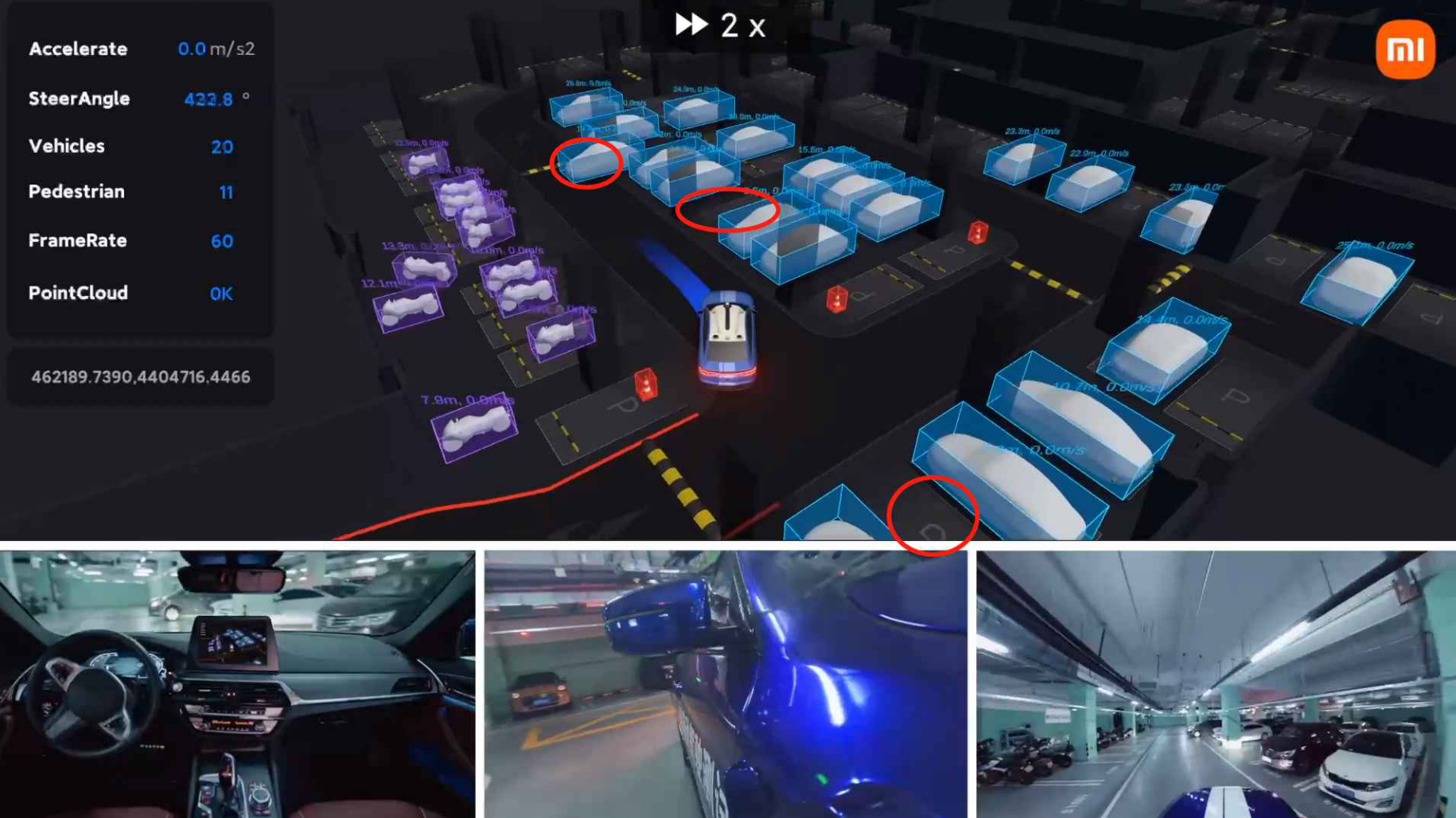

This part is very interesting. Look at the visualization: Xiaomi’s test car has a complete recognition model for pedestrians and two-wheelers. It can recognize them, avoid them, and reconstruct them in 3D, which shows good progress.

A complex left turn through mixed road conditions. With traffic lights present, however, the difficulty is moderate.

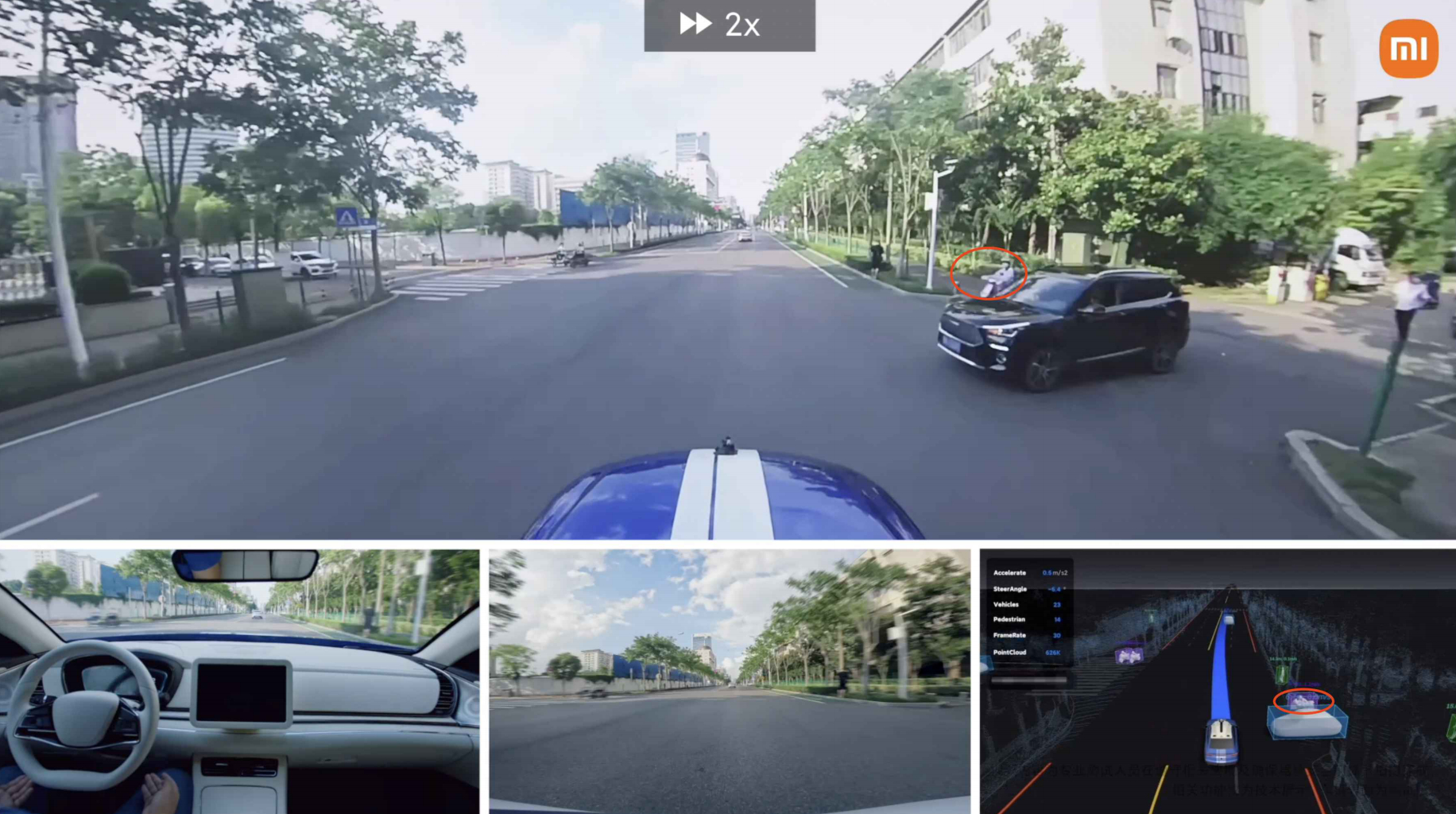

This part is quite interesting: thanks to its superior perception system, Xiaomi’s test vehicle detected an electric scooter hidden behind an SUV early on. Recognition was very fast, and the decision to keep driving was decisive. I wonder whether mass-produced cars can reach such an impressive level.

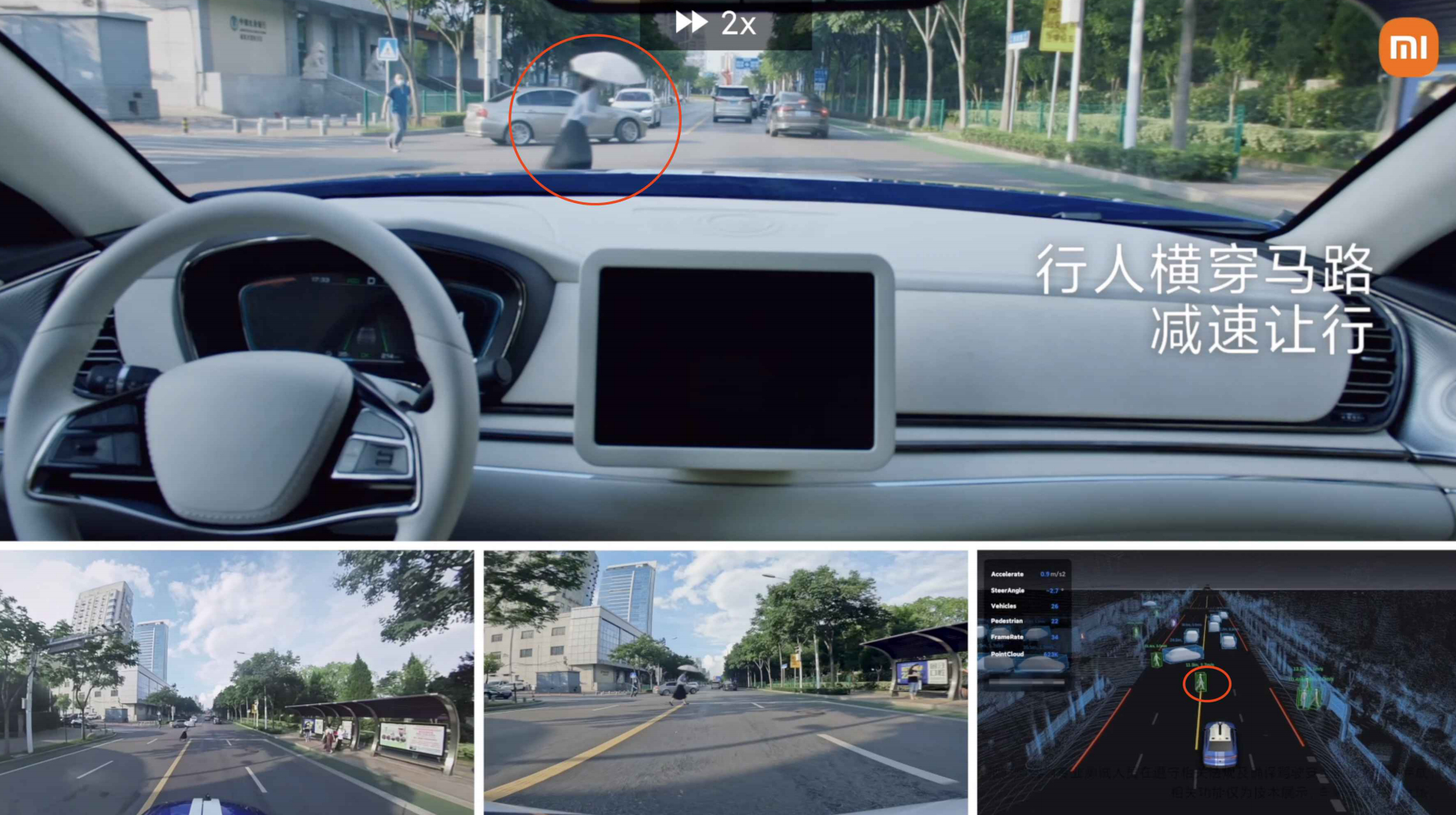

Pedestrian avoidance is a basic operation.

Navigating the roundabout is also a basic operation for Xiaomi’s autonomous driving system (CNOA).

China’s traffic rules and road conditions can be quite unique! In a heavily congested area with mixed traffic, Xiaomi’s test vehicle performed well, and its planning and control handled the situation well.

This is an unprotected right turn. At first I thought it was a common bug: the system recognized the large vehicle in the red circle but did not stop in time, driving in front of it before stopping.

Later I realized that was not the case. The system had detected the pink electric vehicle on the left, and avoiding it was necessary. The safety prediction algorithm is really impressive.
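The article does not say how Xiaomi predicts such conflicts. A minimal sketch, assuming both the ego car and the detected e-bike keep a constant velocity (a common baseline, far simpler than production motion predictors), checks how close their paths come within a short horizon; the coordinates, `safety_radius_m`, and `horizon_s` are hypothetical.

```python
import math

Vec = tuple[float, float]


def closest_approach(p_ego: Vec, v_ego: Vec, p_other: Vec, v_other: Vec,
                     horizon_s: float) -> tuple[float, float]:
    """Time (s) and distance (m) of closest approach within [0, horizon_s].

    Both agents are assumed to keep a constant velocity (positions in m, velocities in m/s).
    """
    rx, ry = p_other[0] - p_ego[0], p_other[1] - p_ego[1]  # relative position
    vx, vy = v_other[0] - v_ego[0], v_other[1] - v_ego[1]  # relative velocity
    vv = vx * vx + vy * vy
    # Minimize |r + v t|^2: t* = -(r . v) / |v|^2, then clamp to the horizon.
    t = 0.0 if vv == 0.0 else -(rx * vx + ry * vy) / vv
    t = min(max(t, 0.0), horizon_s)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def must_yield(p_ego: Vec, v_ego: Vec, p_other: Vec, v_other: Vec,
               safety_radius_m: float = 2.0, horizon_s: float = 3.0) -> bool:
    """Yield if the predicted paths come closer than the safety radius within the horizon."""
    _, dist = closest_approach(p_ego, v_ego, p_other, v_other, horizon_s)
    return dist < safety_radius_m
```

For example, an ego car at the origin doing 5 m/s and an e-bike 10 m ahead and 5 m to the side, crossing at 2.5 m/s, meet after 2 s, so `must_yield` returns `True` even though the e-bike is not yet in the ego lane.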

Conclusion on CNOA Status

Overall, Xiaomi’s CNOA system performs smoothly, and it is a typical high-precision map-dependent system for urban areas. Moreover, because all sensors are placed on the roof of the car, the perception conditions are very advantageous.

Many manufacturers can reach this level; ROBO Taxi companies (the so-called “L4 autonomous driving start-ups”) are one example.

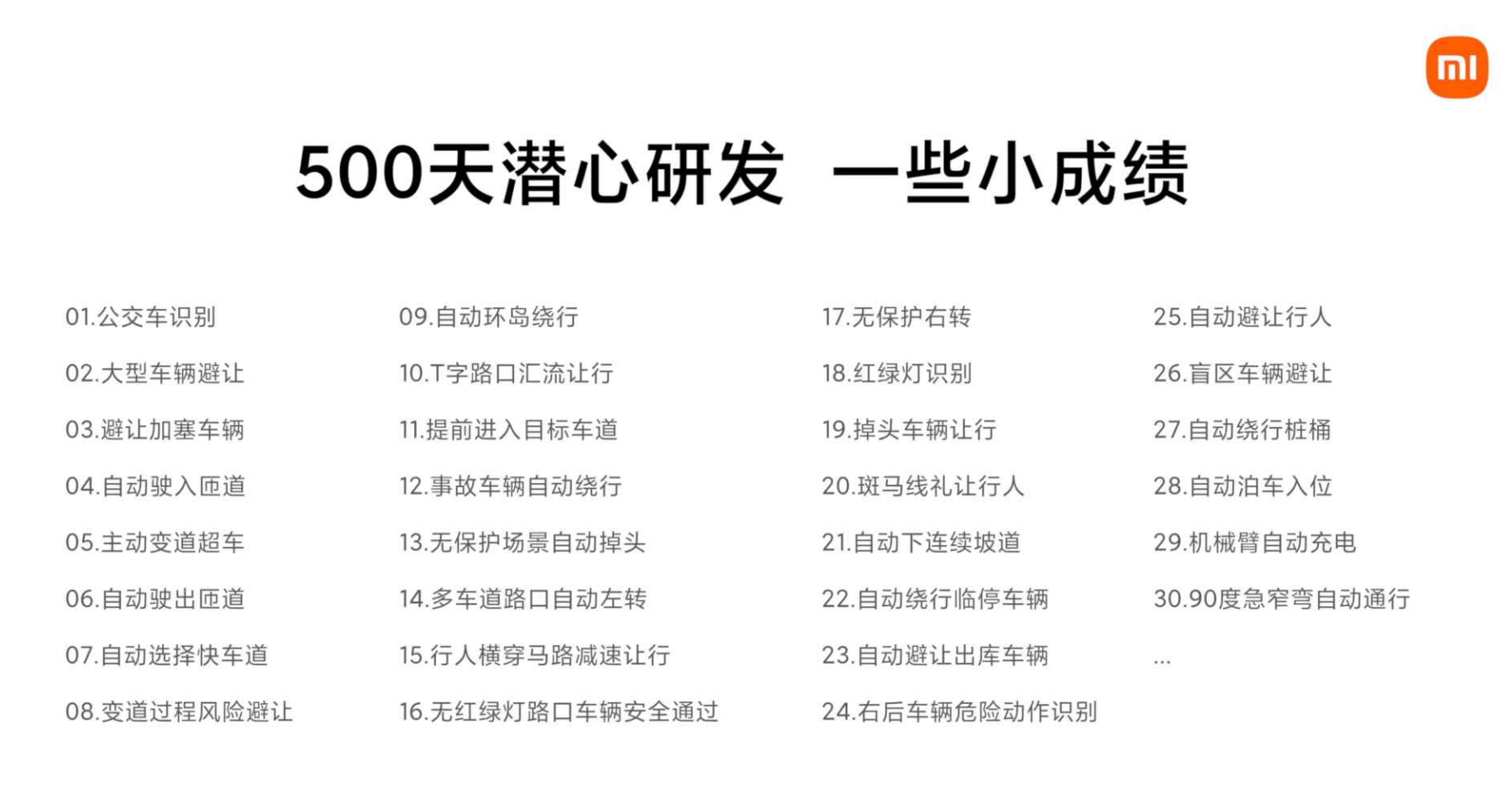

What stands out about Xiaomi is its tight schedule: it took less than 500 days to develop this system.

The perception, decision-making, and control algorithms all seem to be fairly mature, and it is worthy of recognition to achieve such a level.

Xiaomi’s challenge lies in engineering and mass production, particularly in integrating the sensors, above all the LiDAR, into the overall vehicle design, which will greatly limit perception coverage.

LiDAR will no longer have a 360-degree view, but at most 270 degrees (a layout with one front and two side units), or even less than 180 degrees (a single forward-facing LiDAR on the roof), which places extremely high demands on the surround-view perception algorithms. We look forward to Xiaomi’s mass-produced cars providing a satisfactory answer.
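The 270-degree and sub-180-degree figures follow from how the sensors’ horizontal fields of view combine. A minimal sketch that computes the union of those fields of view is below; the mounting angles and FOV values in the usage note are hypothetical, not the specifications of any particular LiDAR or of Xiaomi’s eventual layout.

```python
def coverage_deg(sensors: list[tuple[float, float]]) -> float:
    """Total horizontal angle (degrees) covered by the union of sensor fields of view.

    Each sensor is (mount_yaw_deg, horizontal_fov_deg); yaw 0 points straight ahead.
    """
    intervals: list[tuple[float, float]] = []
    for yaw, fov in sensors:
        if fov >= 360.0:
            return 360.0
        start = (yaw - fov / 2.0) % 360.0
        end = start + fov
        if end <= 360.0:
            intervals.append((start, end))
        else:  # the field of view wraps past 360 degrees: split it in two
            intervals.append((start, 360.0))
            intervals.append((0.0, end - 360.0))

    # Merge overlapping intervals and sum their lengths.
    intervals.sort()
    total = 0.0
    cur_start, cur_end = None, None
    for s, e in intervals:
        if cur_end is None or s > cur_end:
            if cur_end is not None:
                total += cur_end - cur_start
            cur_start, cur_end = s, e
        else:
            cur_end = max(cur_end, e)
    if cur_end is not None:
        total += cur_end - cur_start
    return total
```

With a hypothetical 120° front unit plus two 90° side units mounted at ±90°, `coverage_deg([(0.0, 120.0), (90.0, 90.0), (-90.0, 90.0)])` returns 270.0, while a single forward 120° unit gives 120.0. The remaining rear sector has to be covered by surround-view cameras.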

AVP Automatic Parking Analysis

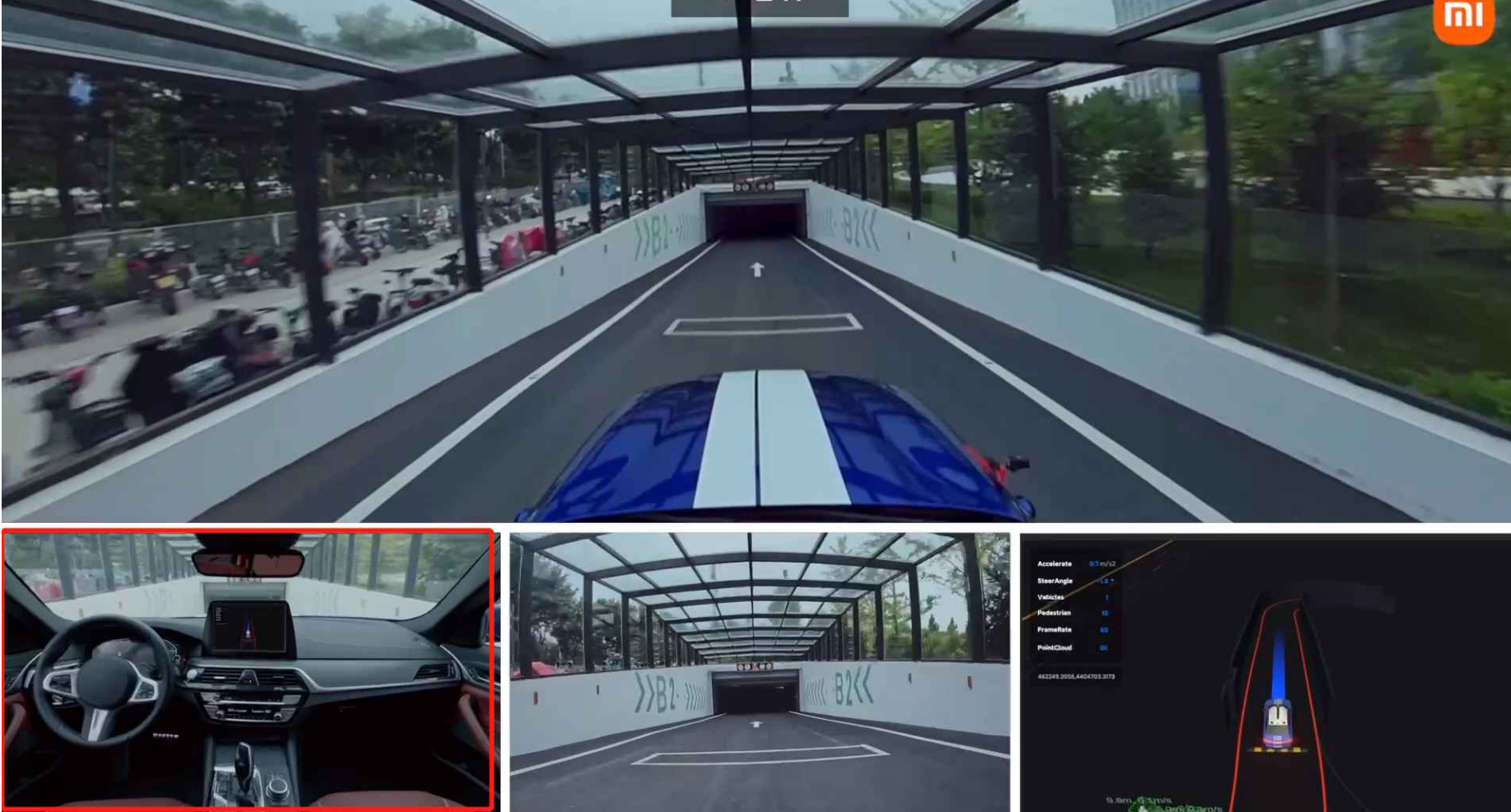

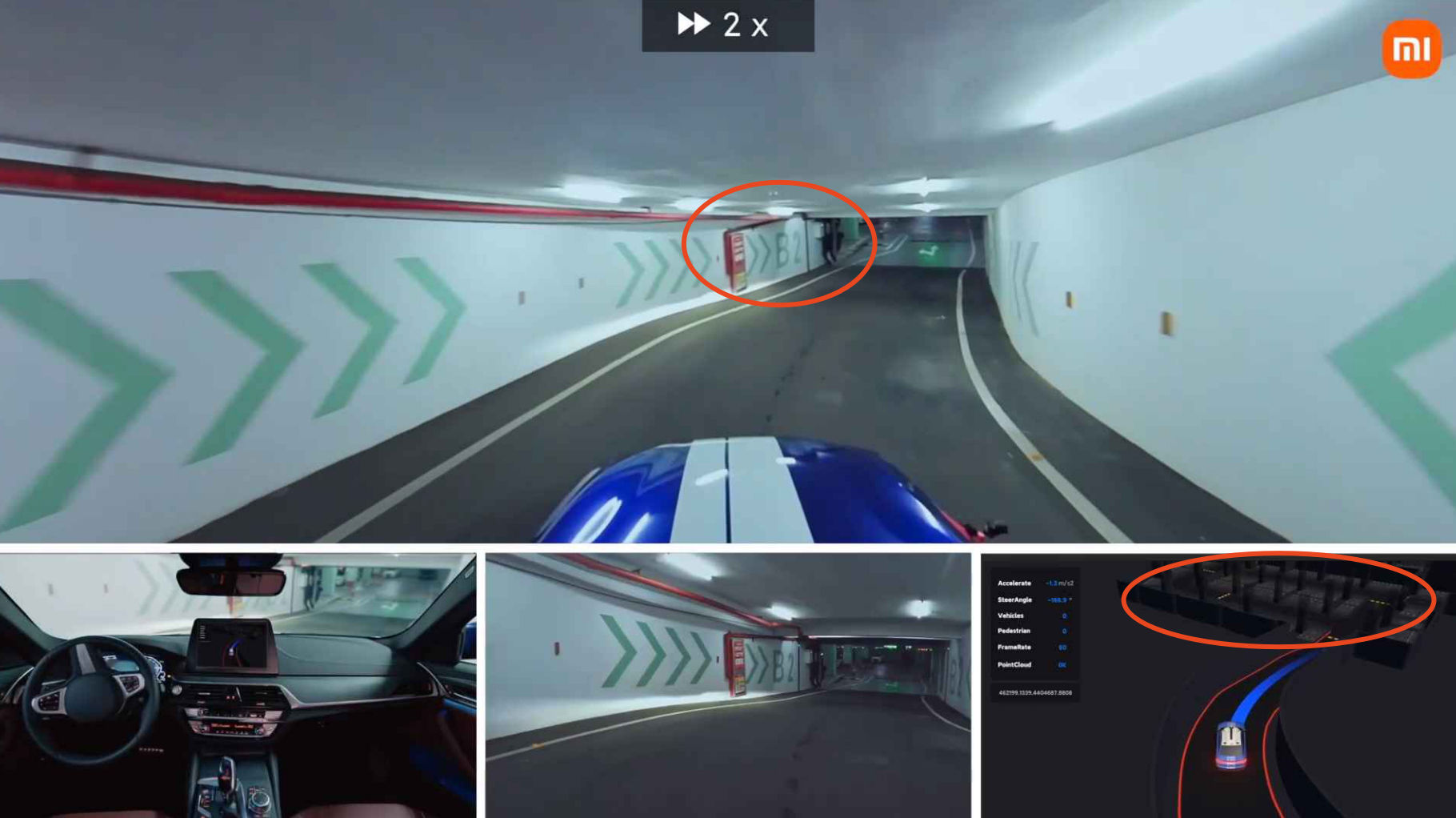

The second part is AVP (automated valet parking), as shown in the figure: stand at the garage entrance, tap your phone, and the car parks itself in the reserved space.

Because the garage environment is relatively simple and speeds are low, the test car simply drove itself with no one on board.

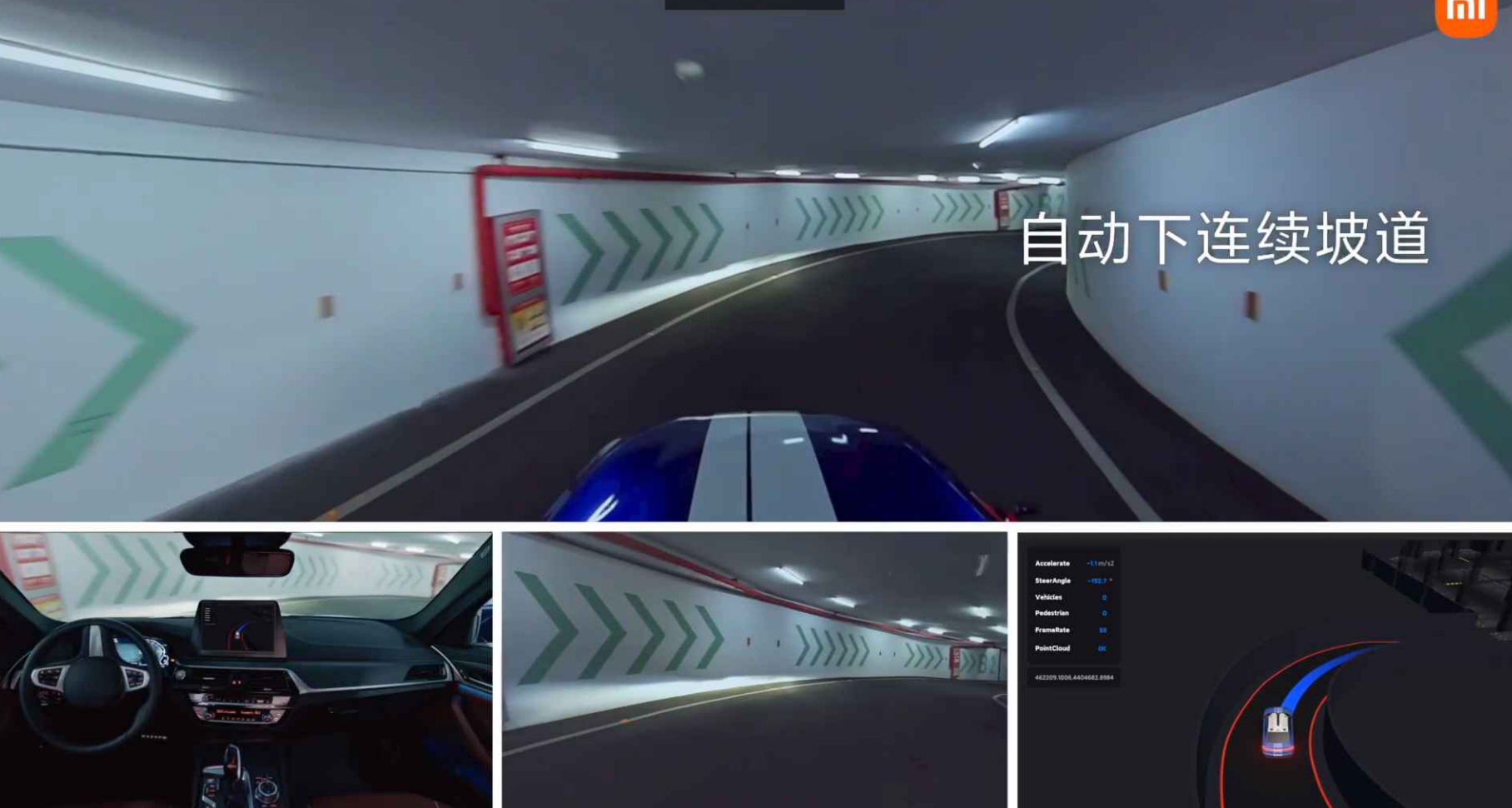

I was quite amazed by the automatic descent down the steep ramp. Is the perception system really that strong?

But looking at the second picture, emmm, it’s still pre-mapped: the car already has a high-precision map of the garage, which makes the task much easier.

This picture makes it even more obvious: the garage visualization (bottom right) has already appeared even though the car has not yet fully descended and its view on the left is still obstructed.

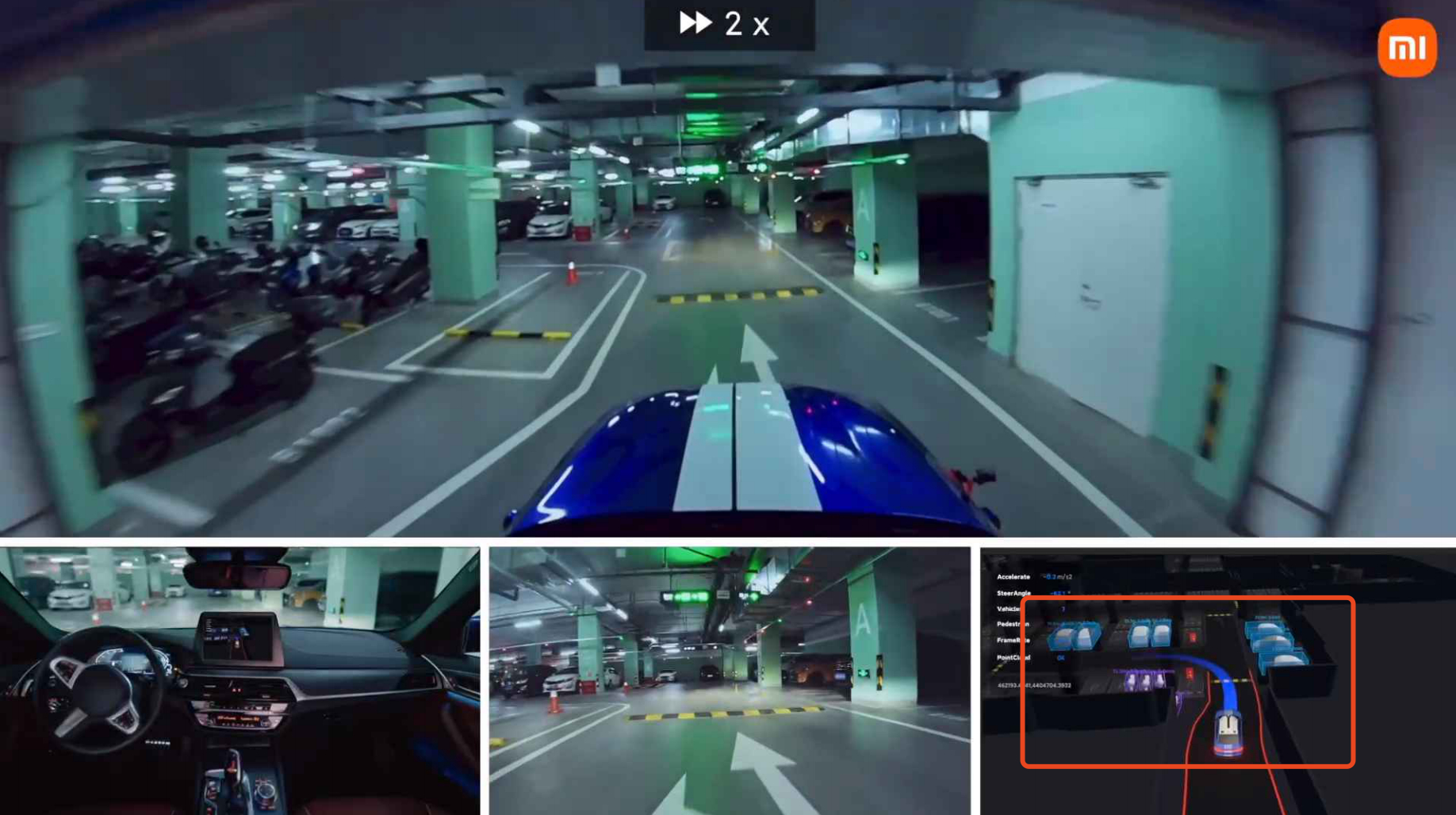

However, the perception system works quite well and recognizes other vehicles very quickly.

This picture is even clearer: the oncoming vehicle is recognized as soon as it crosses the line, and two empty parking spaces are accurately identified. Even with high-precision mapping, the perception system remains crucial.

A 90-degree turn requiring a large steering-wheel angle shows that the control algorithm is quite capable; but after all, this is a test vehicle, and the performance of mass-production vehicles cannot be inferred from the video.

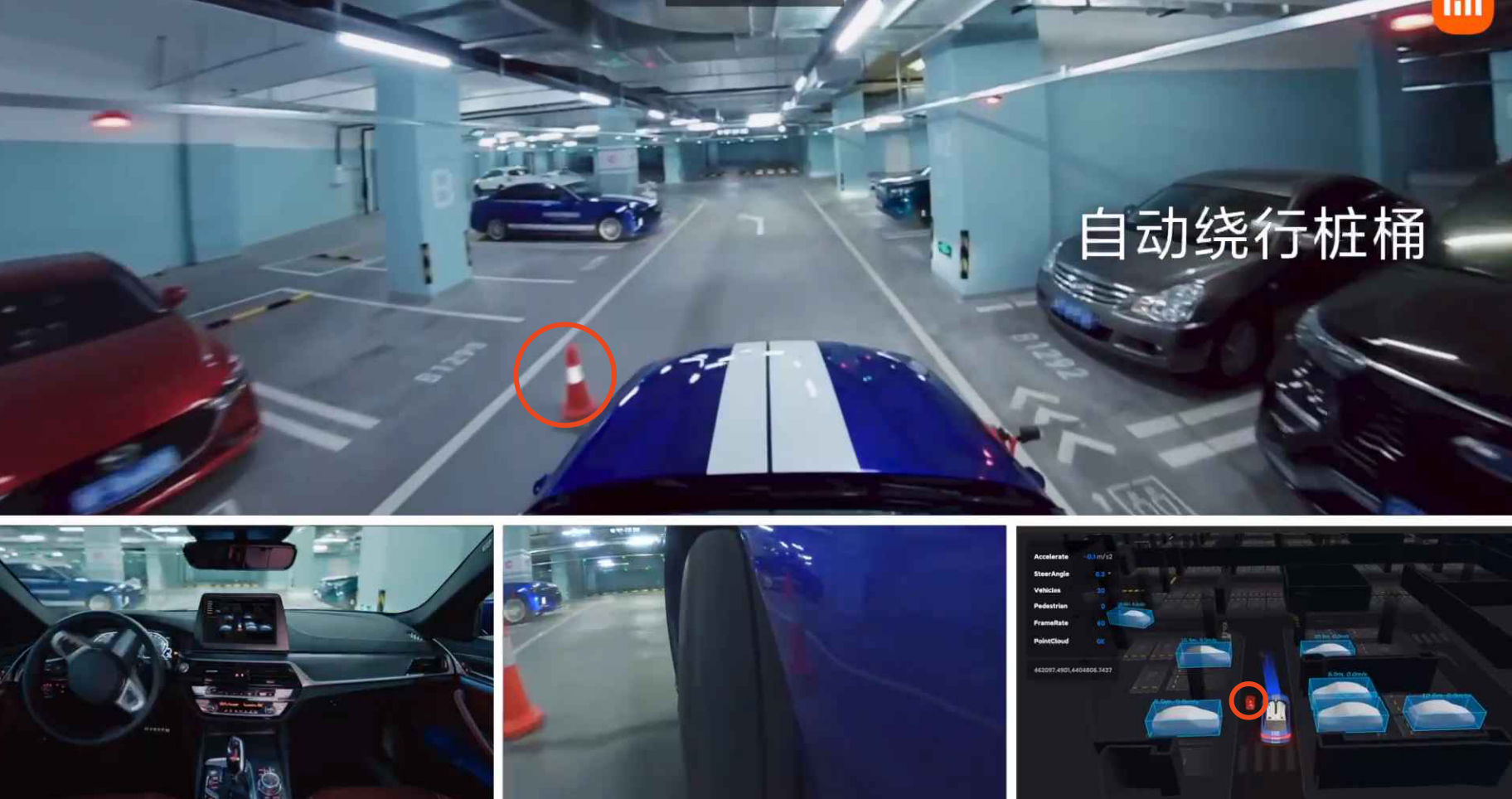

Maneuvering around traffic cones: a basic AVP operation.

Reversing into the parking space: a basic operation.

Automatic charging by a robotic arm is a good idea, but very difficult in practice: charging ports are not standardized in position and can be located on any side of the car. The algorithms for just moving the robot around to reach the port are enough to give you a headache.

Moreover, the charging port covers of many cars do not pop open mechanically. How do you open them? Even if they do, how do you trigger the release?

In addition, the national-standard charging port specification is poorly written and of low quality, and faults in its locking mechanism can leave the charging gun locked in place. When that happens, the owner has to go through a lot of trouble to release it, or it may not be releasable at all…

In short, using a robotic arm for third-party public automatic charging is not very realistic. In the long run, this will remain a labor-intensive job.

But if you are wealthy and can install one at home, ignore what I just said. At this stage, plugging and unplugging the charging gun is indeed not elegant… having a robot do it for you would be much nicer.

Conclusion on AVP Status

Compared with CNOA, automatic parking (AVP) offers fewer surprises, mainly because the garage has been mapped in advance, leaving little room for real-time perception algorithms to shine.

Final Summary

The 8-minute video released by Xiaomi fully demonstrates the state of its engineering-modified test vehicles and covers the three major scenarios of assisted driving: “urban areas, highways (ring roads), and garages.”

As I mentioned before, it is very difficult to infer what the production cars will be like from this state; Xiaomi’s main goal is probably to build public confidence by showing its willingness and ability to stand in the first tier of advanced driver assistance systems.

The capabilities currently displayed do not include strong forward-looking breakthroughs; but then again, as they say, when are you planning to consider it?

Moreover, that is not their focus. As a new automaker, the most important thing is to get the test vehicles running first.

From the formal formation of the team to today’s test-car demonstration, I think Xiaomi’s ADAS progress has been quite fast, faster than I expected; I thought they would only start showing results in the first half of 2023 and begin testing camouflaged cars in the second half of 2024.

My prediction: the production cars will still use first-tier perception hardware, that is, a 2.5-level architecture of forward-facing LiDAR + surround-view cameras + millimeter-wave radar.

What interests me more is which computing platform will be used, since by then NVIDIA’s Atlan (1,000 TOPS on a single chip), Horizon Robotics’ J6, and Qualcomm’s next-generation Ride platform will all have launched.

I won’t make further conjectures since there is too little information available. That’s all for now.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.