“One Generation Ahead of Tesla” or “Ahead of Tesla’s First Generation”

This popular meme has sparked discussion across the internet ever since Li Yanhong announced at the Baidu AI Developer Conference that “Baidu’s autonomous driving technology will lead the first generation of Tesla.” Meme or not, it raises an interesting question. The prevailing opinion is that Li’s statement deserves skepticism. But is that really the case? Let’s discuss.

A Narrow Subject: Leading Tesla’s First Generation Is Possible

In fact, Li Yanhong’s statement has been misinterpreted. If we take another look at the video, we will see that he actually said:

“In terms of autonomous driving technology, Baidu will be ahead of the first generation of Tesla.”

Rather than:

“Baidu will be ahead of Tesla.”

The difference between these two sentences lies in the subject: “autonomous driving technology,” not the vehicle as a whole or the autonomous driving experience. Much of the controversy comes from conflating autonomous driving technology with the autonomous driving experience.

In my view, there are three angles from which Li Yanhong’s statement holds up:

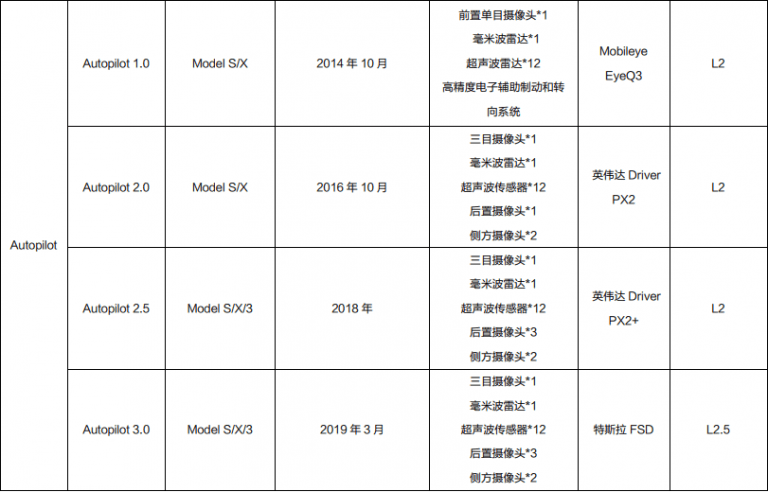

First, Baidu’s Apollo autonomous driving technology is positioned at L4 (high driving automation, where no human driver is needed within the system’s operating domain), which imposes higher design requirements on perception, decision-making, and execution than Tesla’s L2+ or L3 systems. Jidu’s autonomous driving technology is built by breaking Baidu Apollo’s capabilities down into modular (“atomized”) components and re-applying them to a production car. From this angle, it is fair to say that Baidu is ahead of Tesla’s first generation in terms of technology.
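
For reference, the level numbers used here come from the SAE J3016 standard, and the practical difference between them is who acts as the fallback when something goes wrong. A minimal Python encoding of that distinction is sketched below; the function name and the returned strings are illustrative, not part of the standard.

```python
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 levels of driving automation."""
    L0 = 0  # No Driving Automation
    L1 = 1  # Driver Assistance
    L2 = 2  # Partial Driving Automation
    L3 = 3  # Conditional Driving Automation
    L4 = 4  # High Driving Automation
    L5 = 5  # Full Driving Automation


def fallback_party(level: SaeLevel) -> str:
    """Who is expected to handle a failure of the driving system at a given level."""
    if level <= SaeLevel.L2:
        # Driver support features: the human drives and supervises at all times.
        return "driver"
    if level == SaeLevel.L3:
        # The system drives, but a human must take over when it requests.
        return "fallback-ready user"
    # L4/L5: the system itself must reach a minimal-risk condition.
    return "automated driving system"


for level in SaeLevel:
    print(f"{level.name}: fallback = {fallback_party(level)}")
```

Because nobody is expected to take over at L4, the system itself must cope with every failure, which is where its higher design requirements on perception, decision-making, and execution come from.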

Second, Baidu has pushed cockpit-driving fusion a step further at the architecture level. While preserving safety redundancy, it explores, for the first time, merging the intelligent driving domain with the intelligent cockpit domain. This high-level domain control capability and redundancy system are indeed architecturally ahead of Tesla, since Tesla has not yet built a redundant supercomputing system.
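
What “safety redundancy” in a dual-chip controller commonly means can be sketched as a primary/backup arbitration based on heartbeats. The sketch below is a generic, hypothetical illustration and does not describe Jidu’s or Tesla’s actual design; the class names, the 100 ms timeout, and the “minimal-risk-maneuver” state are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeUnit:
    """One SoC in a hypothetical dual-chip domain controller."""
    name: str
    last_heartbeat_ms: int = 0

    def beat(self, now_ms: int) -> None:
        # Called whenever this unit reports that it is alive and producing valid output.
        self.last_heartbeat_ms = now_ms


@dataclass
class RedundantController:
    """Hypothetical arbitration: the backup takes over when the primary goes silent."""
    primary: ComputeUnit
    backup: ComputeUnit
    timeout_ms: int = 100  # assumed heartbeat deadline, not a published figure
    active: str = field(init=False, default="")

    def __post_init__(self) -> None:
        self.active = self.primary.name

    def _alive(self, unit: ComputeUnit, now_ms: int) -> bool:
        return now_ms - unit.last_heartbeat_ms <= self.timeout_ms

    def arbitrate(self, now_ms: int) -> str:
        """Pick which unit's commands the vehicle should follow at time `now_ms`."""
        if self._alive(self.primary, now_ms):
            self.active = self.primary.name
        elif self._alive(self.backup, now_ms):
            self.active = self.backup.name  # fail over to the redundant unit
        else:
            self.active = "minimal-risk-maneuver"  # no healthy unit left: stop safely
        return self.active


ctrl = RedundantController(ComputeUnit("soc_a"), ComputeUnit("soc_b"))
print(ctrl.arbitrate(50))   # primary heard from 50 ms ago: soc_a
ctrl.backup.beat(150)
print(ctrl.arbitrate(200))  # primary silent for 200 ms, backup healthy: soc_b
print(ctrl.arbitrate(400))  # both silent: minimal-risk-maneuver
```

Real systems also cross-check the two units’ outputs rather than relying on liveness alone; the heartbeat check is only the simplest form of the idea.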

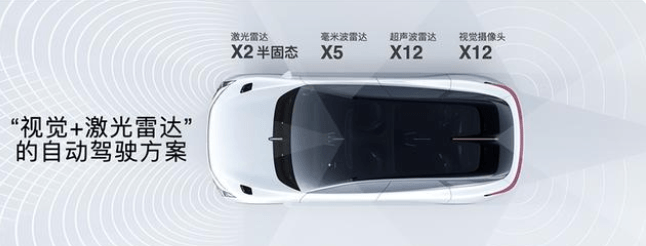

Third, on hardware: Jidu’s fused perception system (LiDAR + cameras + millimeter-wave radar), its dual-chip Nvidia computing platform rated at 508 TOPS, and its intelligent cockpit built on the 8295 chip all surpass Tesla’s current vision-only system in computing power and sensing hardware. Judging purely by hardware layout and on-paper specifications, these capabilities are a generation ahead of Tesla’s existing products, and that should be beyond dispute. For example, Jidu uses 8-megapixel cameras, while Tesla still uses 2-megapixel cameras.
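
The camera resolution comparison can be made concrete with a rough pixel-density calculation. Four times as many pixels over the same field of view gives about twice as many pixels per degree horizontally, so a target of fixed width spans the same number of pixels at roughly twice the distance. The sketch assumes 16:9 sensors (3840×2160 ≈ 8.3 MP and 1920×1080 ≈ 2.1 MP), a shared 120° horizontal field of view, a 1.8 m wide car, and a hypothetical 20-pixel minimum for detection; none of these are published Jidu or Tesla specifications.

```python
import math


def pixels_per_radian(h_pixels: int, hfov_deg: float) -> float:
    # Average horizontal pixel density, ignoring lens distortion.
    return h_pixels / math.radians(hfov_deg)


def max_detection_range_m(h_pixels: int, hfov_deg: float,
                          target_width_m: float = 1.8, min_pixels: float = 20) -> float:
    """Farthest distance at which a target still spans `min_pixels` horizontally."""
    # Half of the angle the target must subtend to cover `min_pixels`.
    half_angle = min_pixels / (2 * pixels_per_radian(h_pixels, hfov_deg))
    return (target_width_m / 2) / math.tan(half_angle)


# Assumed sensors: same 120° horizontal FOV, 16:9 layouts.
r_8mp = max_detection_range_m(3840, 120)  # 3840x2160, about 8.3 MP
r_2mp = max_detection_range_m(1920, 120)  # 1920x1080, about 2.1 MP
print(f"8 MP: {r_8mp:.0f} m, 2 MP: {r_2mp:.0f} m, ratio {r_8mp / r_2mp:.2f}")
```

Under these assumptions the 8 MP camera keeps the car above the pixel threshold out to about 165 m, versus about 83 m for the 2 MP camera. In practice, optics, lens distortion, and the detection network matter as much as the raw pixel count.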

In summary, from these three perspectives, it is safe to say that Jidu has surpassed Tesla’s first generation in terms of autonomous driving technology.

Technology ≠ Experience: Which Way Does the Double-Edged Sword Cut?

Technology ≠ experience. That may sound counterintuitive: shouldn’t more advanced technology deliver a better experience?

Not necessarily, at least not in today’s autonomous driving field. A simple example makes the point: hire an experienced driver to drive for you. The autonomous driving technology involved is zero, yet the driving experience scores 99 out of 100, better than any high-level autonomous driving system on the market. The example is exaggerated, but the idea holds: in the short term, more advanced technology does not necessarily yield a better real-world experience once it goes into mass production.

Let’s take a more detailed example. Many standard L2 vehicles today use a 3R1V hardware configuration (three millimeter-wave radars and one camera), whose front-facing camera has a field of view of only about 70 degrees, so vehicles close by in the adjacent left and right lanes cannot be seen. In practice, as long as no car cuts in from those lanes, the assisted driving feels very stable and responsive.
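
Simple pinhole geometry shows why a narrow camera misses nearby adjacent-lane vehicles: a point offset sideways by x enters a forward camera’s horizontal field of view θ only once it is at least x / tan(θ/2) ahead. The sketch assumes an ideal camera on the vehicle’s centerline and a 3.5 m lane width, and compares the 70° figure above with the 130° wide-angle figure the article gives for higher-end systems.

```python
import math


def min_visible_distance_m(hfov_deg: float, lateral_offset_m: float) -> float:
    """Closest distance ahead at which a point `lateral_offset_m` to the side is inside
    a forward camera's horizontal field of view (ideal pinhole, camera on the centerline)."""
    return lateral_offset_m / math.tan(math.radians(hfov_deg / 2))


LANE_WIDTH_M = 3.5  # assumed: adjacent-lane car centered one lane width to the side

for fov in (70, 130):
    d = min_visible_distance_m(fov, LANE_WIDTH_M)
    print(f"{fov} deg FOV: adjacent-lane car visible only beyond {d:.1f} m ahead")
```

Under these assumptions, a car in the next lane stays invisible to the 70° camera until it is about 5 m ahead, versus about 1.6 m for a 130° camera; anything closer sits in the narrow camera’s blind zone.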

However, if you switch to a more advanced 5R11V high-end assisted driving hardware configuration (five millimeter-wave radars and eleven cameras), the camera’s wide-angle coverage expands to 130 degrees or more, and nearby vehicles in the adjacent lanes can be detected. In practice, the car then often slows down to guard against vehicles in those lanes changing lanes, so the driving experience actually gets worse, especially under a cautious driving policy. Many of these slowdowns are unnecessary. Take a car in the adjacent lane that swerves around a slow vehicle in front of it: a monocular camera with a narrow field of view never sees this, but a trinocular camera does, and because the distance is short, the system may brake suddenly or even perform an emergency stop. Most human drivers, by contrast, would anticipate this situation.
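
A minimal kinematic sketch of why a nearby cut-in tends to produce abrupt braking: the constant deceleration needed to shed a closing speed v within a gap d is v^2 / (2d), so it grows quickly as the gap shrinks. The 2 m safety margin and the comfort and hard-braking thresholds below are hypothetical values chosen for illustration, not parameters of any production system.

```python
from dataclasses import dataclass


@dataclass
class CutIn:
    gap_m: float              # longitudinal gap to the vehicle cutting in
    closing_speed_mps: float  # ego speed minus its speed; > 0 means the gap is shrinking


def required_decel_mps2(c: CutIn, margin_m: float = 2.0) -> float:
    """Constant deceleration that cancels the closing speed while keeping `margin_m` of gap.

    Uses v^2 / (2 * d), where d is the gap minus the safety margin.
    """
    if c.closing_speed_mps <= 0:
        return 0.0  # not closing in: no braking needed
    usable_gap = max(c.gap_m - margin_m, 0.1)  # avoid division by zero when already too close
    return c.closing_speed_mps ** 2 / (2 * usable_gap)


def braking_response(c: CutIn, comfort_limit: float = 2.0, hard_limit: float = 4.0) -> str:
    """Map the required deceleration (m/s^2) to a coarse response; thresholds are hypothetical."""
    a = required_decel_mps2(c)
    if a == 0.0:
        return "no action"
    if a <= comfort_limit:
        return "gentle slowdown"
    if a <= hard_limit:
        return "hard braking"
    return "emergency brake"


# Same 5 m/s closing speed, detected at different gaps.
for gap in (20.0, 6.0, 4.0):
    print(f"gap {gap:4.1f} m -> {braking_response(CutIn(gap, 5.0))}")
```

With a 5 m/s closing speed, a vehicle noticed 20 m ahead needs well under 1 m/s² of braking, while the same vehicle noticed 4 m ahead needs over 6 m/s², the kind of sudden reaction described above.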

There are many similar cases in product experience. The most common is autonomous driving companies releasing plenty of highway and even urban autonomous driving demo videos, then boasting that they have already cut costs to such-and-such a level and will achieve this and that in the future. Yet when it comes to mass-producing vehicles and delivering them to users, almost nothing materializes. Demos are only the tip of the iceberg; mass production means solving the remaining 90% of the problems, and it is the biggest challenge in deploying assisted driving technology. For example, the camera’s position is closely tied to the perception, decision-making, and control of the autonomous driving system. Every time a camera is moved, the related algorithms drift significantly and need repeated fixes. A demo on a fixed stretch of road is easy: run the same route every day and the experience is quickly tuned to feel good. But once the camera changes position or the car goes somewhere else, the original algorithms fail.
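
The camera-position problem can be illustrated with a common flat-ground monocular ranging model: distance = h / tan(angle below the horizon), where h is the camera height. The sketch assumes a camera 1.4 m above the road and shows how much a small, uncompensated downward tilt of the mount distorts the range estimate for a car 50 m ahead. The height, target distance, and tilt values are assumptions for illustration; production stacks use far richer calibration.

```python
import math

CAMERA_HEIGHT_M = 1.4  # assumed mounting height


def true_ray_angle_rad(distance_m: float, h: float = CAMERA_HEIGHT_M) -> float:
    # Angle below the horizontal of the ray from the camera to a ground point.
    return math.atan2(h, distance_m)


def estimated_distance_m(true_distance_m: float, pitch_error_deg: float,
                         h: float = CAMERA_HEIGHT_M) -> float:
    """Flat-ground range estimate when the camera is actually tilted down by
    `pitch_error_deg` but the algorithm still assumes its original calibration."""
    # The tilt shifts the ray toward the optical axis, so it looks closer to the horizon.
    observed = true_ray_angle_rad(true_distance_m, h) - math.radians(pitch_error_deg)
    if observed <= 0:
        return math.inf  # point appears at or above the horizon: range cannot be estimated
    return h / math.tan(observed)


for err in (0.0, 0.25, 0.5, 1.0):
    print(f"pitch error {err:4.2f} deg: 50 m target estimated at "
          f"{estimated_distance_m(50, err):.1f} m")
```

In this model a 0.5° tilt already pushes the estimate for a 50 m target past 70 m, and beyond a tilt of about 1.6° the target can no longer be ranged at all, which is why every change in camera placement forces recalibration and re-tuning.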

Dimension Reduction: Is It Really That Easy?

Many people familiar with Baidu’s autonomous driving believe that scaling fully autonomous driving down to human-supervised assisted driving is a kind of “dimension reduction” strike, and that it should not be very difficult. In autonomous driving, however, that is not necessarily true. To borrow an analogy: firing missiles at mosquitoes may not work well, and missile technology rarely transfers to flyswatters. The design philosophies of L4 autonomous driving and the more common assisted driving differ in many ways. The most crucial difference, in my view, is how each treats human intervention.
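
To make the human-intervention question concrete, consider a back-of-the-envelope model (an illustration, not data from Baidu, Jidu, or Tesla): if each independent channel of a function such as braking fails with probability p, a system with n independent channels loses that function with probability p^n, while an assisted-driving system can count an attentive driver as one more safeguard. The probabilities below are hypothetical, and the independence assumption ignores the common-mode failures that real safety analyses must address.

```python
from typing import Optional


def p_unhandled_failure(p_channel: float, channels: int,
                        p_driver_miss: Optional[float] = None) -> float:
    """Probability that a function (e.g. braking) fails with nobody to catch it.

    p_channel: failure probability of one independent channel (hypothetical)
    channels: number of redundant, independent channels
    p_driver_miss: if a human is the fallback, the probability they fail to take over
    """
    p_system = p_channel ** channels  # every independent channel must fail
    if p_driver_miss is None:
        return p_system  # driverless design: no human fallback
    return p_system * p_driver_miss  # assisted driving: the driver is the extra safeguard


P = 1e-5  # hypothetical single-channel failure probability
print(f"driverless, single channel: {p_unhandled_failure(P, 1):.0e}")
print(f"driverless, dual channel:   {p_unhandled_failure(P, 2):.0e}")
print(f"assisted, single channel + driver: {p_unhandled_failure(P, 1, p_driver_miss=0.01):.0e}")
```

Under these made-up numbers, the single-channel assisted design looks acceptable only because the driver catches most failures; take the driver away and it drops back to the single-channel figure, which is why a driverless design has to add redundant channels instead.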

True (driverless) autonomous driving generally builds in redundancy across many functions, such as acceleration and braking, because the system assumes that no one (no driver) can help it and must therefore rely on redundancy for safety. L2+ and L3 assisted driving, by contrast, do not require high levels of redundancy, because the system treats the driver as the redundancy: once the system runs into a problem, the driver can take over. Product designs built on these two philosophies can be vastly different. At L4, every driving capability needs redundancy, and some core capabilities need even more. At L2, where assisted driving is mainly about reducing the driver’s workload, there is little redundancy built into the capabilities.

Focus on Technology: Jidu Lets the Future Play Together

Electrification is only the first half; intelligence is the endgame.

These words from Li Yanhong echo a common theme at new energy vehicle launch events; these days, nearly every company talks up intelligence when it releases a new product. Against this backdrop, Jidu has positioned and described its products quite precisely: technology for the next generation to play with together.

From Xijiajia to the metaverse, from science fiction to the synthesized voice saying “Hello, I’m the Jidu car robot,” Jidu keeps layering on technological experiences. Whether it is the early integration of intelligence into the donkey car online, the first demonstration of assisted driving on urban roads, or the “car robot” and its vision of intelligent mobility shared at the Baidu AI conference, Jidu has firmly ridden the technology wave and left a strong technological impression.

Unlike other OEMs and brands that emphasize driving, the car itself, and services, Jidu (at least for now) focuses almost exclusively on technology itself, keeps its technology in the spotlight, and has announced outright that complete intelligent driving and intelligent cockpit capabilities will be available at delivery. Jidu is probably the first brand in recent years to make such a promise. I see this as Jidu’s answer to the question of whether it can bring Baidu’s autonomous driving capabilities to production.

Technology’s greatest charm lies in its uncertainty. If everything could be predicted, the future would lose much of its excitement.

In Q2 2023, let’s see which generation of Tesla Jidu surpasses.

How many “degrees” will Jidu’s technology eventually reach? (The “du” in Jidu means “degree,” and “one hundred degrees” in Chinese is “baidu.”) I hope it will be one hundred.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.