On July 5th, the 10,000th LiDAR from Innovusion (Tudatong) officially came off the production line, which means that deliveries of the NIO ET7 will soon exceed 10,000 vehicles. When ET7 deliveries began, NIO stated that the released ACC and LCC functions already make use of LiDAR data. So how much does adding LiDAR actually help advanced driver assistance?

Recently, NIO held an online salon to share the technical details of this LiDAR, attended by Dr. Bai Jian, Vice President of Intelligent Hardware at NIO.

Before joining NIO, Dr. Bai served as Director of Hardware at OPPO and General Manager of Chip and Forward-looking Research Department at Xiaomi. This event was also Dr. Bai’s first media event since joining NIO.

Why do we need LiDAR?

Before discussing the strengths and weaknesses of LiDAR, we first need to agree on why advanced driver assistance needs LiDAR at all, and where it is effective.

Identification of unconventional objects

Currently, advanced driver assistance on every model is built on visual recognition. The biggest advantage of cameras is that, like human eyes, they take in extremely rich information. The problem is that visual perception has to be trained. Just as a newborn baby does not yet recognize the objects in the world, neither does an untrained driver assistance system.

Thanks to the efforts of engineers, the system does “recognize” conventional objects such as lane lines, the rear ends of cars and trucks, pedestrians, and bicycles, but only those. For objects that vision does not recognize, the system naturally cannot respond, which is the fundamental reason driver assistance systems collide with an “overturned white truck” or “construction road signs.”

With such visual capabilities, an advanced driver assistance system can cover 90% of usage environments on highways, but actual traffic conditions are constantly changing. Once untrained objects appear on the driving route, such as debris falling from trucks or stones rolling off the roadside, the system cannot respond at all and requires human intervention to take control of the vehicle.

Although it can “see” these objects, an untrained perception system cannot recognize them.

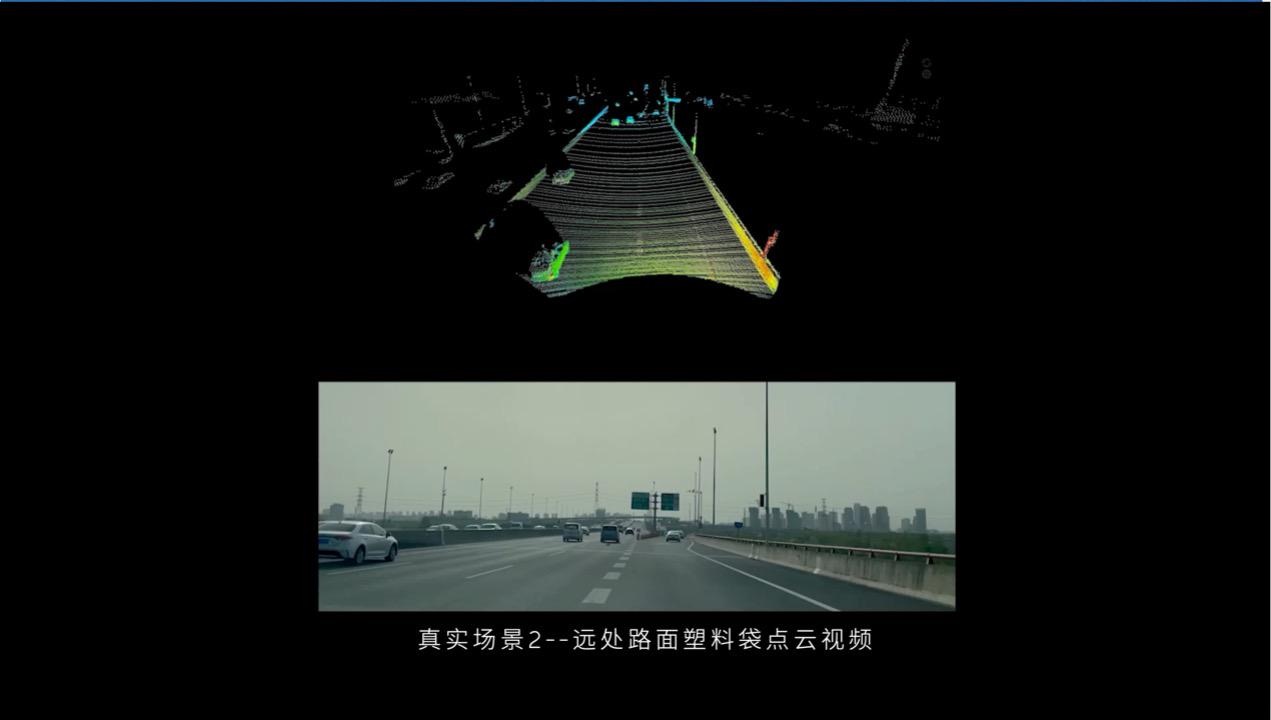

LiDAR is different. This ranging-based perception method recovers the 3D shape of objects from point cloud data, and can therefore judge whether a foreign object will obstruct the vehicle’s path.

If there is a plastic bag on the road, we can judge with our own eyes, from its shape, whether there is something inside it and decide whether to steer around it. A camera can do little here, whereas LiDAR can tell from 3D point cloud data whether the object is thin and flat, giving the vehicle a basis for its decision.

Clearly, while computer vision is still maturing, LiDAR and cameras can each play to their strengths and complement one another in the perception system for assisted driving.

Night Scene

This is another scene where vision is weak; everyone knows how little the human eye can make out at night.

At night, cameras and human eyes can rely only on the vehicle’s lights and ambient light, which still leaves many blind spots. In particular, when facing the headlights of oncoming vehicles, or passing under a speed camera gantry, the strong light of the flash momentarily blinds both human eyes and cameras.

In this case, adding infrared cameras as a supplement is one option, but few companies currently adopt it. Foresight is one of them. This visual perception company from Israel released QuadSight, a four-camera perception system, in 2018. It combines two stereo pairs, one infrared and one visible-light, so that in theory the ADAS can keep working reliably in harsh weather and lighting. To this day, however, it remains a niche solution.

The Voyah Free also comes with an infrared camera that enhances night vision, but this camera is not connected to the ADAS and can only give the driver a night-vision view for assistance.

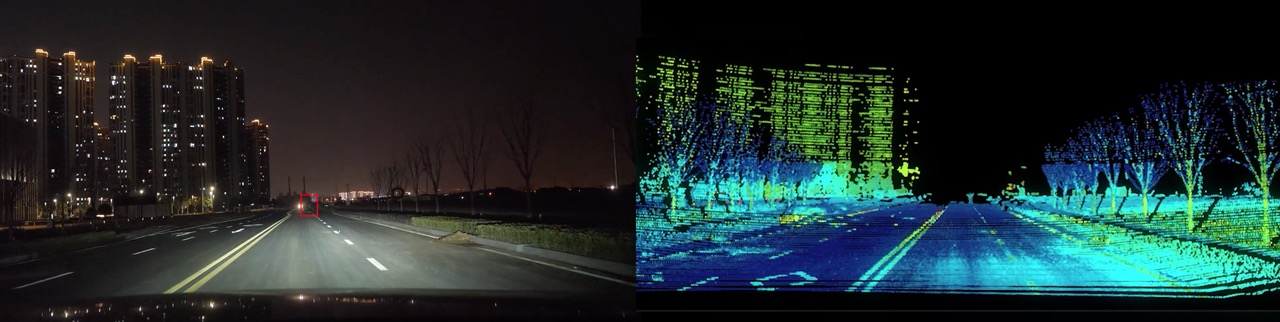

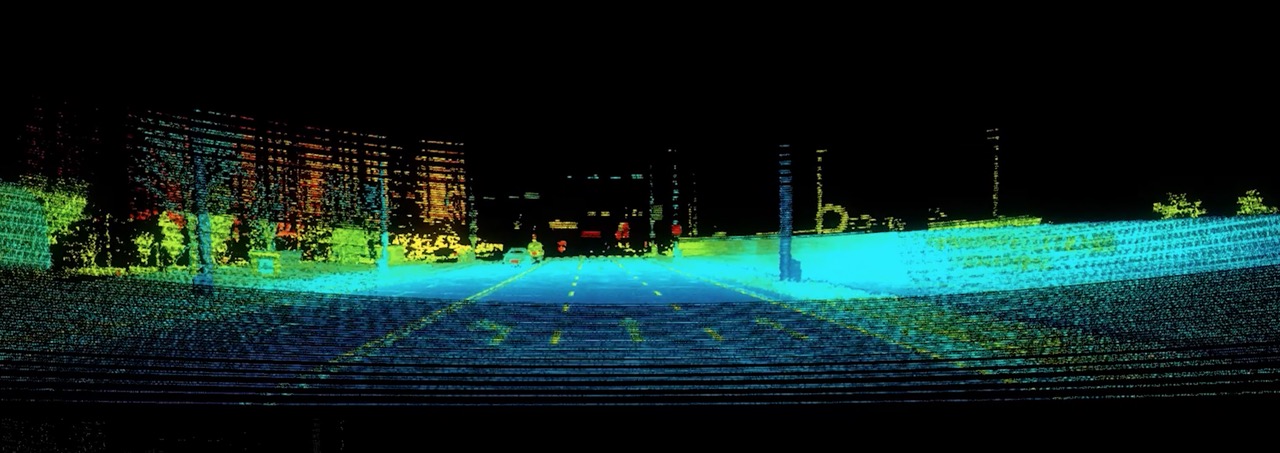

Adding LiDAR solves this problem, because LiDAR is almost unaffected by low ambient light. It gives the vehicle a bat-like ability: even in the dark, it still delivers rich perception information.

The display in the following figure is very intuitive:

The pedestrian in the middle of the road in the distance blends into the darkness. The vehicle has to drive until the pedestrian is within headlight range before the camera or the human eye can clearly see the obstacle, whereas LiDAR detects a “human-shaped obstacle” in the middle of the road much earlier.

## Which indicators of LiDAR are important?

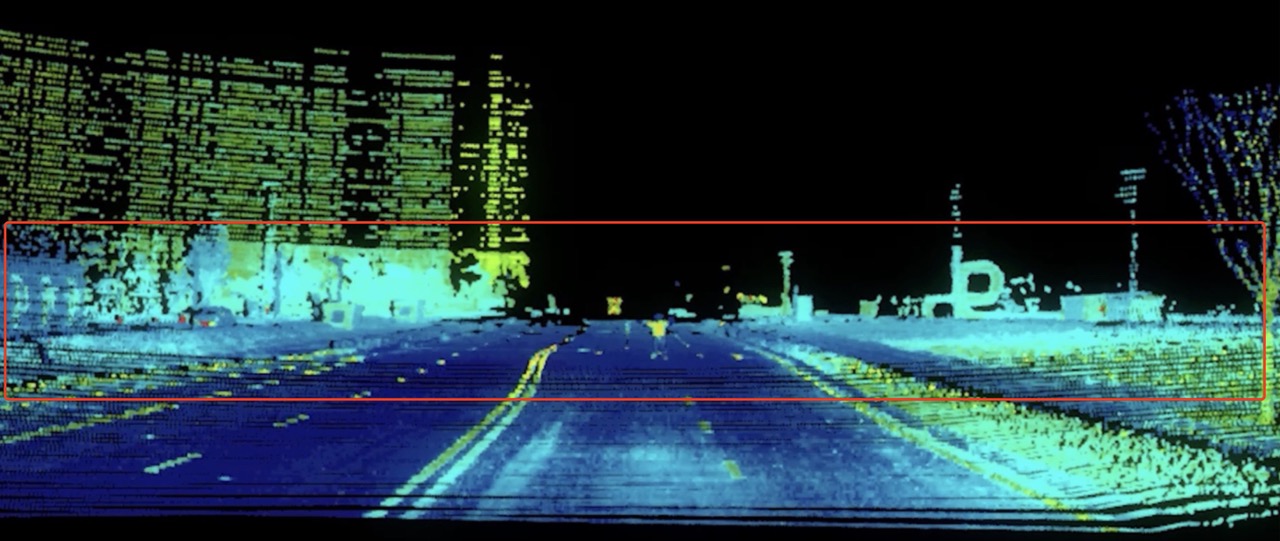

Let’s start by looking at a picture.

If you look closely, you will notice that the point cloud produced by NIO’s LiDAR differs from the typical “imaging” of other LiDARs. The point cloud inside the red box is significantly denser than at the top and bottom of the frame.

300 lines or 144 lines?

In NIO’s official publicity, this LiDAR is described as equivalent to 300 lines, with a high angular resolution of 0.06° x 0.06°. However, many people have questioned these figures, believing that this LiDAR cannot achieve them.

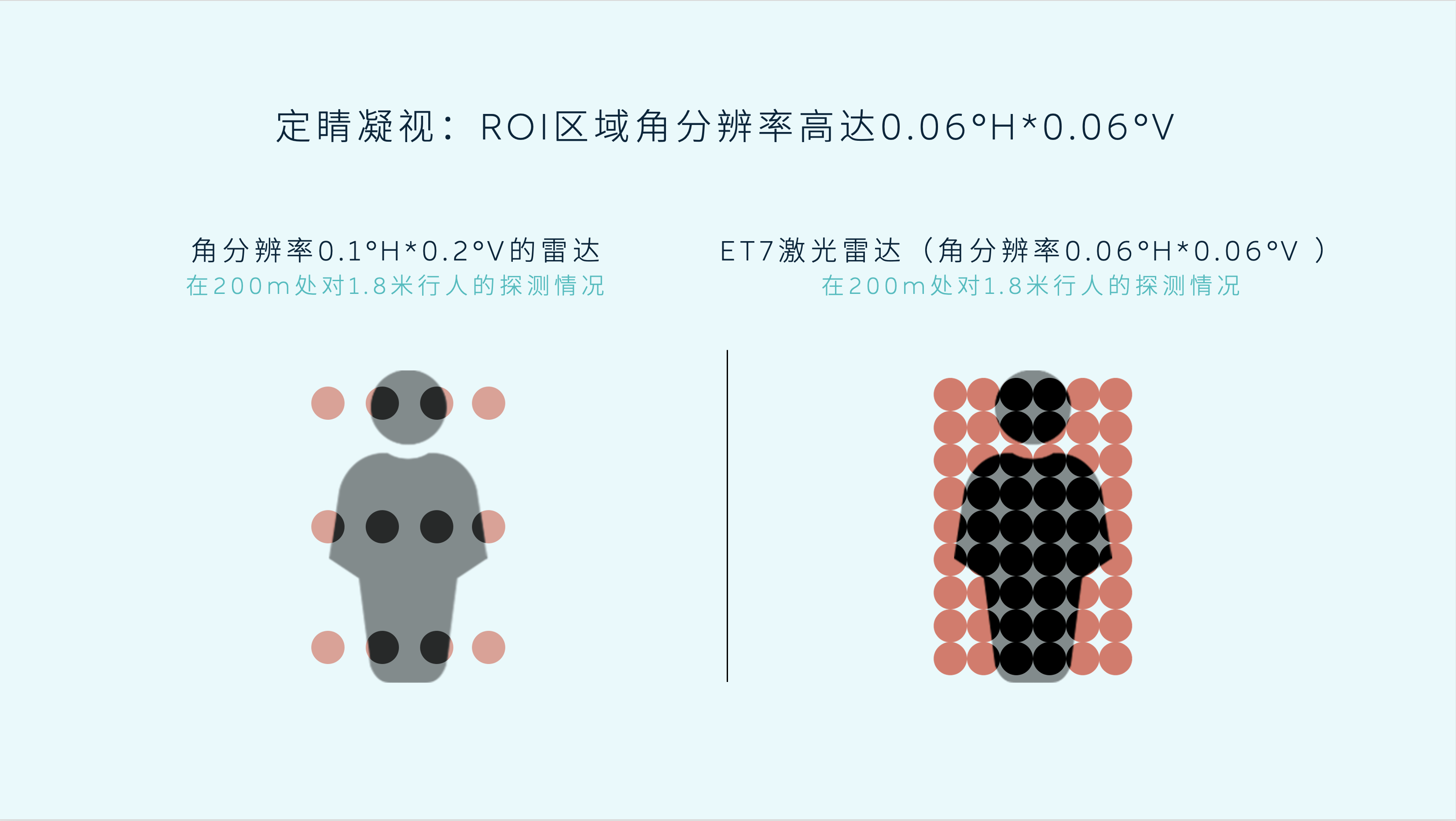

To be clear, NIO’s LiDAR can achieve this figure, just not across the entire 120° x 25° field of view. Only the central 40° x 9.6° reaches it; this is the ROI “gaze fixation” function of the ET7’s LiDAR.

In the ROI, the ET7’s angular resolution reaches 0.06° x 0.06°, over a coverage of 40° horizontally by 9.6° vertically. The “gaze fixation” function can be switched on and off in the system settings, but after extensive testing and verification NIO considers it very important and a good showcase of the product’s advantages, so it is enabled by default.

The essence of the “gaze fixation” function is to fill critical areas with more points, and its core purpose is to let the LiDAR “see more clearly.”

Dr. Bai Jian said that autonomous driving imitates human driving behavior, and sensors in effect play the role of human eyes. Just as the human eye can focus on what matters, the LiDAR is given a similar ability: the ET7’s LiDAR can place more points in key areas while driving, improving imaging quality where it counts.

Take another example: a 1.8-meter-tall adult standing 200 meters away looks significantly different in the point clouds of LiDARs with different angular resolutions.
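To put rough numbers on this example, the sketch below estimates how many scan rows would fall on a 1.8 m pedestrian at 200 m for the angular resolutions quoted in this article (0.06°, 0.1°, and 0.2°). It assumes an idealized, evenly spaced vertical scan pattern and ignores beam divergence and detection losses; the function names are illustrative and do not come from any vendor’s software.

```python
import math


def angular_size_deg(size_m: float, distance_m: float) -> float:
    """Angle (in degrees) subtended by an object of a given size at a given distance."""
    return math.degrees(2 * math.atan(size_m / (2 * distance_m)))


def samples_across(size_m: float, distance_m: float, resolution_deg: float) -> float:
    """Idealized number of evenly spaced samples that land on the object."""
    return angular_size_deg(size_m, distance_m) / resolution_deg


if __name__ == "__main__":
    height_m, distance_m = 1.8, 200.0  # the adult and distance from the example
    for resolution in (0.06, 0.1, 0.2):  # resolutions quoted in the article
        rows = samples_across(height_m, distance_m, resolution)
        print(f"{resolution:.2f} deg -> about {rows:.1f} scan rows on the pedestrian")
```

Under these assumptions, the finer resolution puts several times more rows on the same target, which is the difference between a recognizable silhouette and a couple of stray returns.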

This approach is not unique to NIO. The RoboSense M1 LiDAR fitted to the XPeng G9 has a similar function.

On the RoboSense M1 the feature is called “GAZE.” When it is turned on, the angular resolution in the ROI improves from 0.2° to 0.1°, which doubles the point cloud density there and significantly improves the system’s perception capability.

Wu Xinzhou of XPeng Motors mentioned that “the LiDAR on the XPeng G9 can identify small potholes on the road ahead,” and that this level of detail helps the system judge passability against the height of the vehicle’s wheels and chassis.

Besides the ROI zoom function, the reception capability of the LiDAR is also an important indicator.

Does the LiDAR also have nearsightedness?

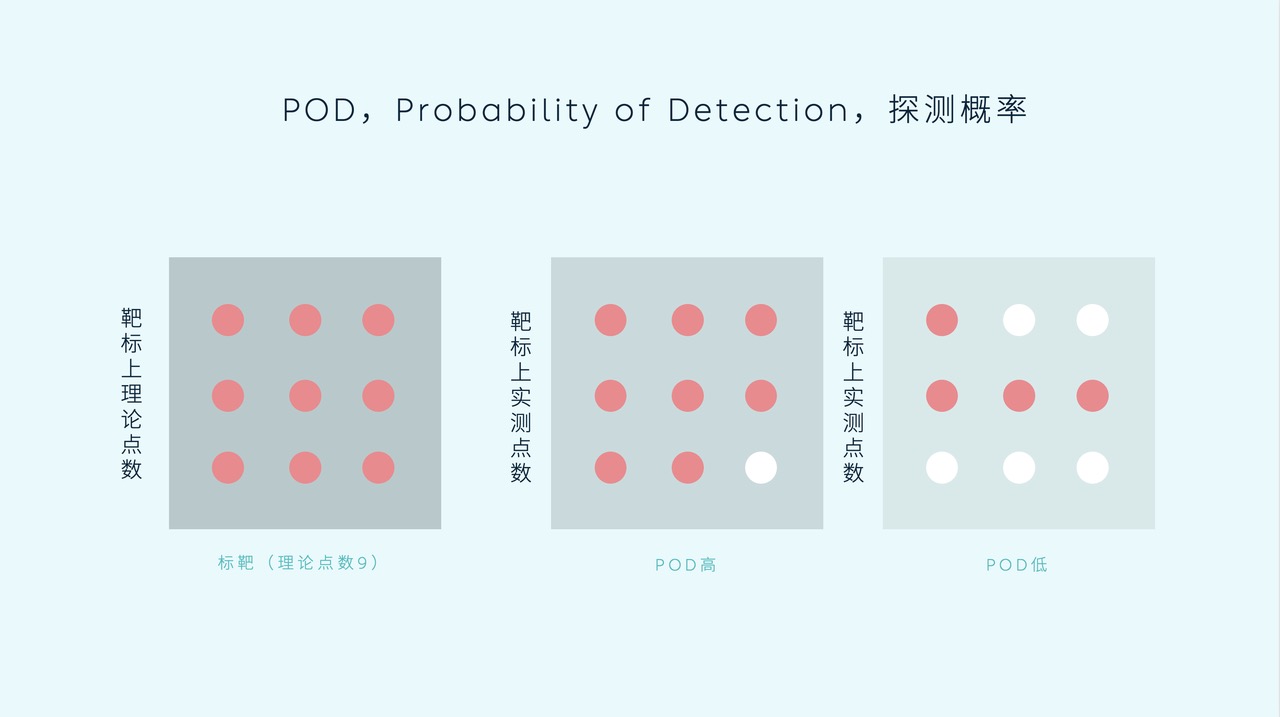

Not every point the LiDAR sends out is received correctly. Reception is affected by the stability of the system and by signal interference. Probability of detection (POD) is the core indicator of a LiDAR’s “accuracy.”

If a LiDAR sends out 100,000 points but only 90,000 are received, its POD is 90%; if only half are received, the POD is 50%.
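The ratio described here can be written as a small helper. This is only a sketch of the simplified definition used in this article (received points divided by emitted points); formal POD figures are normally stated for a specific range and target reflectivity, which this example does not model. The function name is illustrative.

```python
def probability_of_detection(emitted: int, received: int) -> float:
    """Fraction of emitted points that come back as valid returns."""
    if emitted <= 0:
        raise ValueError("emitted must be positive")
    if not 0 <= received <= emitted:
        raise ValueError("received must be between 0 and emitted")
    return received / emitted


if __name__ == "__main__":
    # The two cases from the text: 90,000 and 50,000 returns out of 100,000 points.
    for received in (90_000, 50_000):
        pod = probability_of_detection(100_000, received)
        print(f"{received:,} of 100,000 received -> POD = {pod:.0%}")
```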

This is like the usable-area ratio when buying an apartment: for the same floor area, a higher ratio makes for more comfortable living, and the same goes for LiDAR. The POD of the LiDAR on the NIO ET7 can reach 90%.

Dr. Bai Jian, who heads the autonomous driving team at NIO, said confidently, “Currently, the performance of the POD on the LiDAR used in NIO’s vehicles is the best in the industry, while the POD values of many LiDARs are far below this level.”

Is “seeing far” still important?

The LiDAR on the NIO ET7 can detect objects up to 250 meters away at 10% reflectivity. Interestingly, NIO’s promotional materials have repeatedly given the figure 1,550 nm the most prominent position.

For comparison, let’s look at the LiDARs on the XPeng G9 and the Li L9, which are also flagship models.

The XPeng G9 and the Li L9 are equipped with the RoboSense M1 and the Hesai AT128 respectively, both of which can detect up to 200 meters at 10% reflectivity. Compared with the “Falcon” on the ET7, that is indeed a gap of 50 meters.

The main reason for the difference is wavelength: NIO’s LiDAR operates at 1,550 nm, which allows higher emission power and therefore a longer range, while the RoboSense M1 and the Hesai AT128 operate at 905 nm.

Besides allowing higher power, 1,550 nm is safer for human eyes. The human eye typically sees wavelengths from about 380 nm to 760 nm, far shorter than 1,550 nm. Light at 1,550 nm is not focused onto the retina; most of it is absorbed by the water in the eyeball before it gets there.

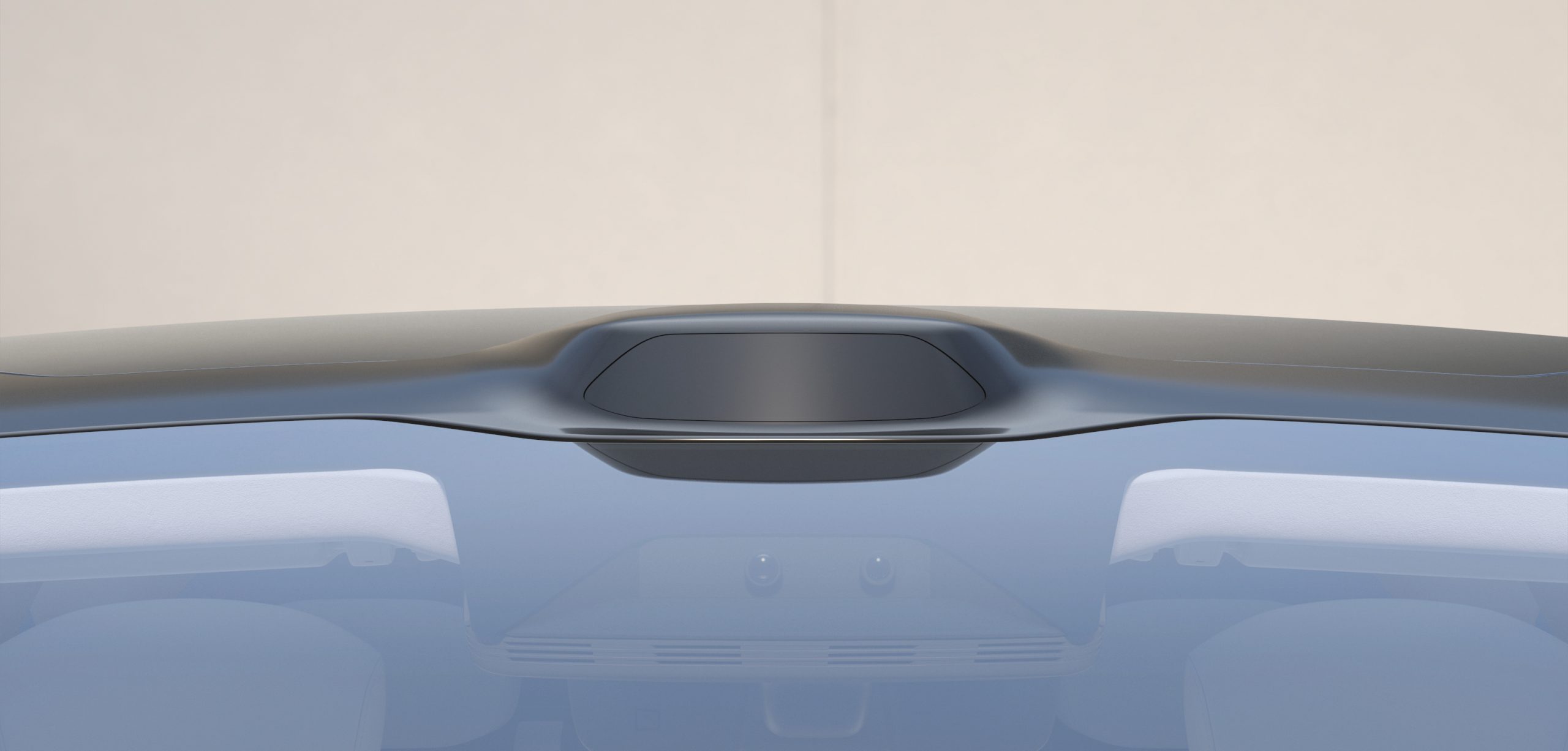

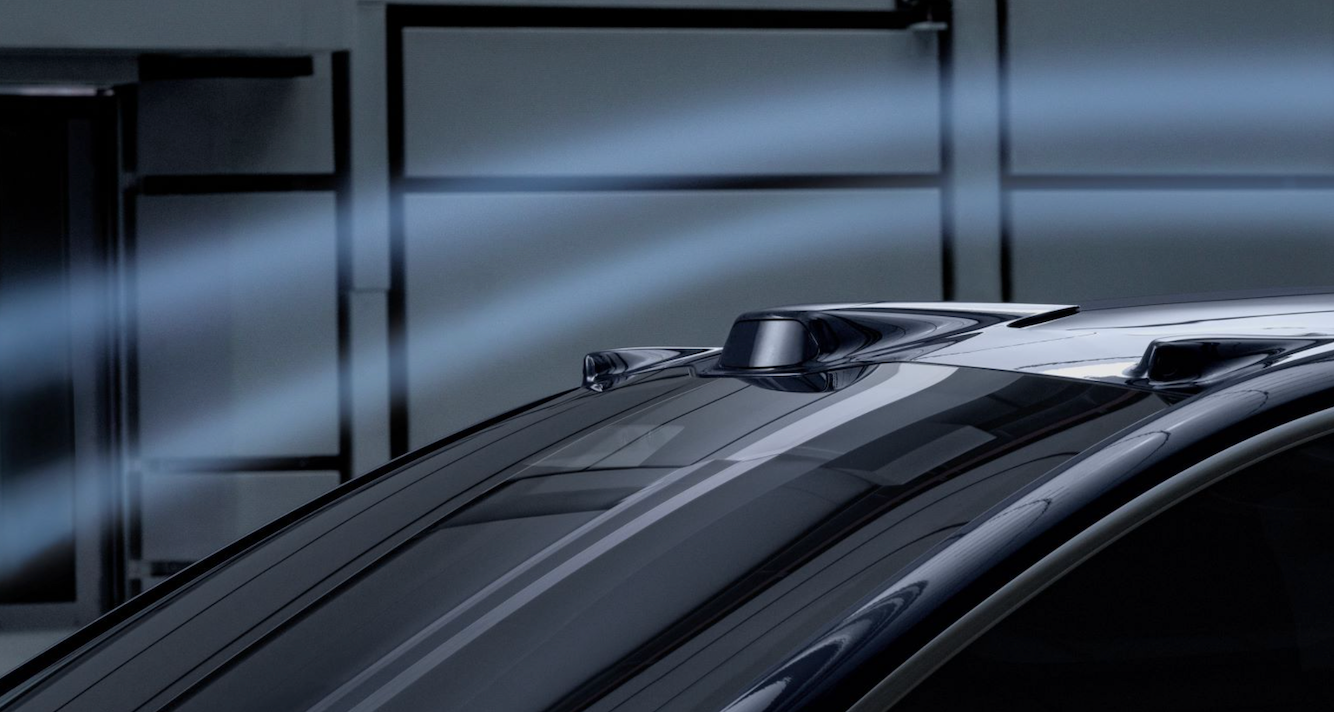

Another major reason for the long range of the ET7’s LiDAR is its “watchtower” placement.

To make full use of the LiDAR, NIO did not hesitate to mount this still-uncommon sensor on the roof. When the ET7 was first unveiled, the unusual design did “challenge aesthetics” somewhat. However, as deliveries have grown, and in contrast with similar models such as the WM M7, the look of the ET7 is gradually being accepted by the public. In practice, the ET7’s forward perception range is indeed longer than expected.

However, now that assisted driving is moving into cities, it is worth asking whether the detection range of LiDAR is still so important. We can look at two cases here: difficult urban roads and the more mature highway scenario.

As mentioned earlier, urban scenes are complex, and adding LiDAR provides rich perception information. However, urban roads often have speed limits below 80 km/h, and more emergencies arise in blind spots, such as debris and potholes that only become visible once the vehicle ahead has moved away. In addition, pedestrians who dart unpredictably into the road also challenge urban ADAS.

In this scenario, the “long-range” detection of LiDAR does not seem to be as important, and it seems to be more valuable to cover as much area in front of the vehicle as possible.
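A back-of-the-envelope stopping-distance estimate illustrates why range matters less at urban speeds. The sketch below uses the textbook formula (distance covered during the reaction delay plus braking distance v²/2a). The 1.0 s reaction delay and 6 m/s² deceleration are illustrative assumptions, not figures from NIO or XPeng; the 80 km/h speed and the 200 m and 250 m ranges are the ones quoted in this article.

```python
def stopping_distance_m(speed_kmh: float,
                        reaction_s: float = 1.0,   # assumed system/driver delay
                        decel_ms2: float = 6.0) -> float:  # assumed braking deceleration
    """Reaction distance plus braking distance, in meters."""
    speed_ms = speed_kmh / 3.6
    return speed_ms * reaction_s + speed_ms ** 2 / (2 * decel_ms2)


if __name__ == "__main__":
    lidar_ranges_m = (200, 250)  # 10%-reflectivity ranges quoted in the article
    for speed_kmh in (60, 80, 120):
        needed = stopping_distance_m(speed_kmh)
        margins = ", ".join(f"{r - needed:.0f} m spare at {r} m range"
                            for r in lidar_ranges_m)
        print(f"{speed_kmh} km/h: stop in about {needed:.0f} m ({margins})")
```

With these assumed values, the distance needed to stop from 80 km/h is well under either LiDAR’s quoted range, so the extra 50 meters adds little at city speeds compared with wider near-field coverage.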

From this perspective, XPeng and NIO have completely different strategies. From the P5 to the G9, although XPeng has moved to more powerful LiDARs, it still insists on mounting them below the headlights to deal with sudden situations in the city.

In highway driving, how many of the camera’s tasks LiDAR can take over becomes the key to whether LiDAR can take on a bigger role.

The cameras on the NIO ET7 can see pedestrians at up to 223 meters, cones at up to 262 meters, and vehicles at up to 687 meters, which is enough perception distance for the vehicle to make decisions at highway speeds.
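To see what these distances mean in time, the following sketch converts each camera range into the seconds available before the vehicle reaches the object. The 120 km/h cruising speed is an assumption chosen for illustration, and the object is treated as stationary; the function name is illustrative.

```python
def seconds_to_reach(distance_m: float, speed_kmh: float) -> float:
    """Time to cover a distance at constant speed, assuming a stationary object."""
    if speed_kmh <= 0:
        raise ValueError("speed_kmh must be positive")
    return distance_m / (speed_kmh / 3.6)


if __name__ == "__main__":
    speed_kmh = 120.0  # assumed highway cruising speed
    camera_ranges_m = {"pedestrian": 223, "cone": 262, "vehicle": 687}  # from the article
    for target, distance in camera_ranges_m.items():
        t = seconds_to_reach(distance, speed_kmh)
        print(f"{target}: {distance} m -> about {t:.1f} s of warning at {speed_kmh:.0f} km/h")
```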

Therefore, the most important task of the LiDAR is still to identify objects that vision cannot handle. Because such cases are only occasional, piling on detection range to cover low-frequency scenes looks like poor value for the cost. It is much like the reason L4 autonomous driving needs billions of kilometers of road testing just to add a few more 9s after the decimal point of 99.9%.

What Redundancy Can LiDAR Bring to Autonomous Driving?

After discussing why autonomous driving needs LiDAR, we can explore what redundancy LiDAR can bring to autonomous driving based on the industry’s development today.

Not long ago, XPeng pushed the LCC-L function over the air. It significantly improves the lane-keeping ability of the LiDAR version of the XPeng P5, allowing the vehicle to travel steadily even where lane markings are absent. In LCC-L mode, the P5 also makes slight evasive maneuvers in response to stationary vehicles on either side of the road, so the function no longer feels rigid and the experience is more human-like.

On one hand, this is thanks to the optimization of visual algorithms. On the other hand, XPeng has added LiDAR to the solution to perceive the surrounding environment. Even if a lane line is lost, the XPeng P5 can still recognize the road edge and output Freespace, ensuring continued stable driving. LiDAR plays an important role here.

Since LiDAR captures 3D data directly, the information the perception system receives is intuitive and accurate when the point cloud is dense enough, which largely compensates for the shortcomings of machine vision.

The reason a Tesla crashed into the overturned white truck was that the perception system took it for the distant sky. If the perception system had also had LiDAR data for cross-checking, could the tragedy have been avoided?

Final Words

Finally, to borrow Wu Xinzhou’s own words from an interview at XPeng’s “1024 Technology Day” in 2020: “The potential of vision is boundless. It is really a treasure. In the long run, vision is unstoppable, but the growth of this ability is a process.”

The growth of ability is a process. We cannot yet achieve autonomous driving through vision alone, and that is the opening for LiDAR.

However, we still need to understand that LiDAR exists to serve assisted driving, and that strong LiDAR performance is not the same thing as good assisted driving. We should keep an open mind and hope that automakers make full use of multi-sensor fusion to bring us more and better assisted driving functions.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.