Author: Tian Xi

Tesla’s vision-only approach is once again standing on the edge of a cliff.

On July 14th, Andrej Karpathy, Tesla’s AI director, announced his resignation, causing quite a stir in the industry.

A central figure in the autonomous driving industry, Karpathy was poached by Musk from OpenAI in 2017 to serve as Tesla’s director of AI and Autopilot vision.

Over the past five years, he helped Tesla build all the basic infrastructure for autonomous driving from scratch and successfully launched NOA and FSD Beta. Even the “madman” Musk was impressed, praising him as the world’s top AI leader.

This statement may be a bit exaggerated, but it is fair.

Karpathy has a strong reputation in the industry. At the annual CVPR conference and at Tesla AI Day, he is the undisputed star. Whenever he presents the latest autonomous driving technology, industry professionals turn out in large numbers to watch. The “new concepts” and “new terms” he raises soon become catchphrases in the automotive industry and are recast as the core technological highlights of various companies.

So when such a heavyweight suddenly announced his departure, even outsiders felt regret. Wang Yilun, head of AI at Li Auto, wrote on his personal social media account that Karpathy’s departure was a loss to the industry. For Tesla, which is struggling along the pure vision road, it looks like more than just a “loss.”

The AI executive who worked for Tesla the longest still cannot escape the “vacation law”

Looking back now, Andrej Karpathy’s departure had early signs.

In March of this year, Karpathy posted on social media that he would start a four-month vacation after nearly five years of working for Tesla. Some people guessed that this might be a signal that Karpathy was going to resign.

After all, taking a long vacation has become a familiar step for Tesla executives on their way out.

In 2018, Tesla’s former senior engineering vice president, Doug Field, took a long vacation “to spend time with his family.” At that time, it also caused a stir. Tesla promised that Doug Field would not leave the company, but a few months later, he announced that he would not return from his vacation.

This time, the same plot is repeated.

At the beginning of his vacation, Karpathy emphasized that he would use the time to sharpen his technical skills and train neural networks. To dispel external doubts, he also added, “I will miss Tesla’s robots and Dojo supercomputer clusters. I can’t wait to see them even before I leave.”

In the end, Karpathy left anyway.

On July 14, Karpathy announced in a tweet that leaving was a difficult decision: “I am honored to have helped Tesla achieve its goals in the past five years…” Musk promptly sent his blessings: “Thank you for everything you have done for Tesla, and it’s been an honor working with you.”

Going back 5 years, in 2017, Karpathy accepted Musk’s invitation and joined Tesla from OpenAI.

At that time, Tesla was in turmoil. On the one hand, it had recently canceled its partnership with the old supplier Mobileye, after the fatal Tesla autopilot crash that occurred in May 2016. On the other hand, the adaptation to the new supplier Nvidia was not going smoothly, with both parties arguing about whether Autopilot Hardware 2.0 could be used for self-driving.

These struggles revealed Musk’s dissatisfaction with the “status quo”: the autopilot function had not yet reached the market. Tesla urgently needed a savior, but executives unable to bear such a responsibility left, whether by choice or not.

In 2017, Anderson, the former head of Autopilot, was the first to announce his “breakup” with Tesla. A month later, Chris Lattner took over the Autopilot team. However, he resigned in less than half a year, saying “Tesla is not suited to me at all.”

That’s when Karpathy caught Musk’s eye.

Musk had met Karpathy back at OpenAI, a non-profit organization focused on artificial intelligence research. Musk was one of its founders, and Karpathy was a founding member and research scientist.

Media reports revealed that Musk once said that many people only regarded Karpathy as an excellent AI visual scientist, but he believed that Karpathy would be the “world’s top AI leader.”

Thus, in June 2017, Musk poached Karpathy from OpenAI to become the head of Autopilot, ushering in the “golden age” of Tesla’s autonomous driving.

In terms of time, Karpathy can be considered the longest-serving AI executive at Tesla. Although he joked that “this is a new job with an average duration of only six months” when he first joined, he stayed for a solid five years, longer than anyone else.

In The Robot Brains Podcast hosted by Pieter Abbeel, Karpathy shared his work experience. “In fact, I love the feeling of immersing myself in it and am motivated to create something different as soon as possible.”

He led the industry single-handedly to help Tesla achieve autonomous driving from “0 to 1”

Karpathy’s influence on Tesla is self-evident. However, in his resignation tweet, he only briefly summarized his contribution to the industry, “Helping Autopilot evolve from lane keeping to autonomous driving in urban areas.”

After reading this, Wang Yilun, head of AI at Li Auto, spoke up for Karpathy on social media and added to the list of his contributions: “His multiple public presentations at AI Day and CVPR Workshop have allowed us to understand concepts such as large models, BEV, data loop, and shadow mode, and have promoted the transformation and progress of the industry.”

Back in 2019, Karpathy made his first appearance as Tesla’s Director of AI and immediately stood out for his thinking. He took direct aim at lidar, then highly regarded in the autonomous driving field: “You drive because your eyes see the road, not because your eyes emit laser.”

In Karpathy’s view, since artificial intelligence is replacing humans to achieve autonomous driving, the required sensors should be cameras that function with pure visual capabilities like human eyes.

This novel perspective refreshed everyone’s understanding, and from then on, Karpathy’s keynote speeches at various conferences have attracted great attention. People are closely watching the rookie’s every move, trying to capture the direction of future autonomous driving technology from his speeches.

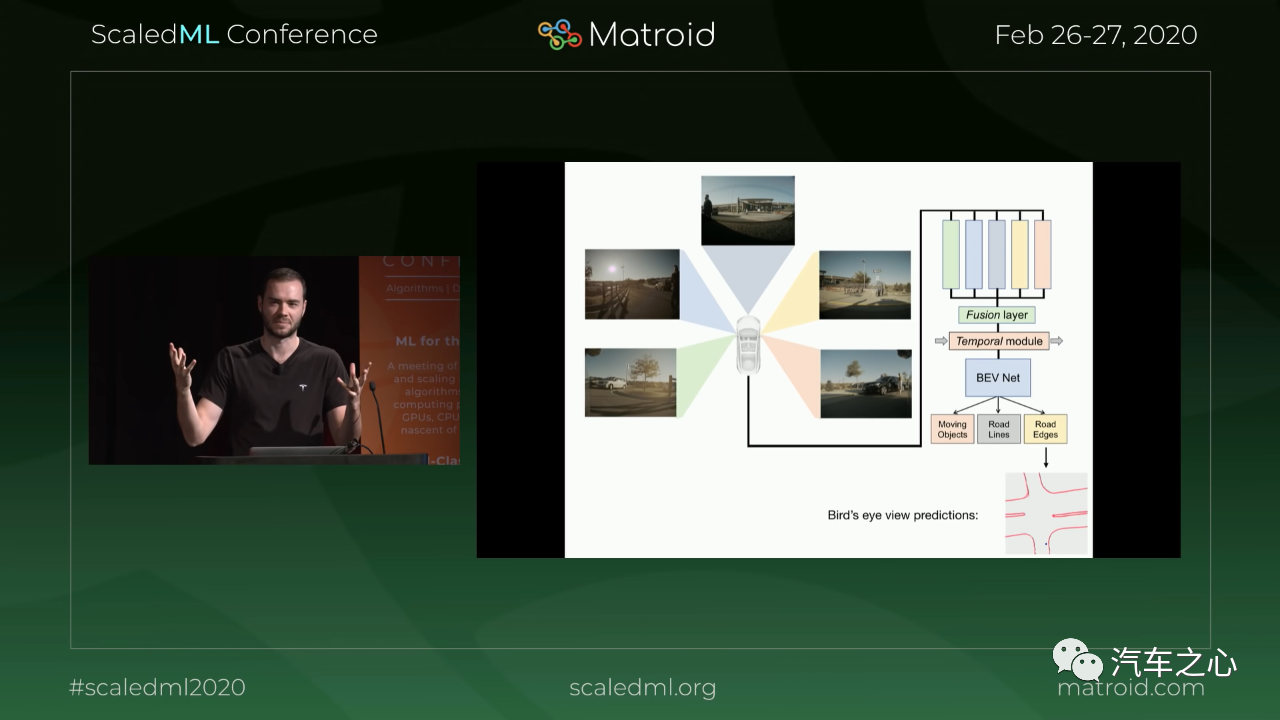

In 2020, at the ScaledML2020 (Scaled Machine Learning Conference), Karpathy introduced how to use a camera to achieve “perception” of the three-dimensional world.

Simply put, Tesla captures 2D images through five directional cameras and converts them into 3D content by “stitching” them together in a modeler (previously known as the “Occupancy Tracker,” later replaced by a neural network). The result is then projected top-down onto the Z+ plane, constructing a bird’s-eye view of the surrounding road environment for autonomous driving decision-making.

Tesla calls this neural-network-based lifting of camera images into a top-down view “Bird’s Eye View,” widely known as “BEV” in the autonomous driving industry.

It is worth noting that when “stitching” the 3D images, the footage from each viewpoint must also be aligned in time and stitched a second time into a continuous sequence. The result is “3D + timeline,” the “4D vision” in which the scene changes as the vehicle moves.

The leap from image-level to video-level processing was more than Autopilot’s existing software and training networks could handle. Therefore, in August of the same year, the Autopilot team led by Karpathy decided to rewrite the software’s underlying code and refactor the deep neural network.
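To make the top-down projection concrete, here is a minimal sketch of the final step only: flattening 3D points onto a ground-plane grid. It is not Tesla’s implementation. The function name `to_bev_grid`, the grid size, and the sample points are all hypothetical, and the sketch assumes the points have already been lifted into the vehicle’s 3D frame, which in the system described above is the job of the neural network.

```python
# Minimal sketch: rasterize 3D points (vehicle frame, meters) into a
# top-down occupancy grid. All names and parameters are hypothetical.

def to_bev_grid(points, x_range=(-10.0, 10.0), y_range=(-10.0, 10.0), cell=1.0):
    """Drop the height (z) coordinate and mark the grid cell each point falls in."""
    cols = int((x_range[1] - x_range[0]) / cell)
    rows = int((y_range[1] - y_range[0]) / cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y, _z in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # point lies outside the area covered by the grid
        col = int((x - x_range[0]) / cell)
        row = int((y - y_range[0]) / cell)
        grid[row][col] = 1
    return grid


# Two points stacked at different heights share one cell; the last point is out of range.
points = [(0.5, 2.5, 0.3), (0.5, 2.5, 1.4), (-3.2, 7.9, 0.0), (50.0, 0.0, 0.0)]
grid = to_bev_grid(points)
occupied = sum(sum(row) for row in grid)
print(occupied)
```

Because height is discarded, objects at different heights above the same patch of road collapse into a single cell, which is what makes the bird’s-eye view convenient for planning on a flat map.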

On the other hand, the conversion from 2D to 4D causes the exponential growth of the dataset. To cope with the demand for massive data processing, the Tesla Autopilot team mentioned the launch of a brand new supercomputer for neural network (NN) training, called Dojo.

Dojo comes from Japanese, meaning “training hall.” Tesla named it with the aim of surpassing Japan’s Fugaku and becoming the world’s number one supercomputer.

In 2021, at CVPR2021 (a top computer vision conference), Karpathy announced the Dojo prototype and said in his speech that, with the assistance of the supercomputer, vision-based autonomous driving would be more reliable than human drivers. “Even for excellent drivers, their response time is 250 ms, and many people even exceed 460 ms, while computers’ response time is less than 100 ms.”

Dojo has another remarkable feature: unsupervised learning, which does not require manual labeling of training data sets. Instead, the system analyzes sample sets based on statistical patterns between samples. A common task is clustering. For example, based only on a certain number of image features of “dogs,” the system can distinguish “dog” images from various other images and videos.
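As a generic illustration of clustering without labels, the sketch below runs plain k-means on a handful of made-up 2D feature vectors. It says nothing about how Dojo or Tesla’s training pipeline works; the function `kmeans`, the feature values, and the starting centers are assumptions chosen only to show samples being grouped by their statistical similarity.

```python
# Minimal k-means sketch on hypothetical 2D feature vectors (no labels used).

def kmeans(points, centers, iterations=10):
    """Plain k-means on 2D points; `centers` are the initial guesses."""
    groups = [[] for _ in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for p in points:
            # Assign each point to its nearest center (squared Euclidean distance).
            nearest = min(
                range(len(centers)),
                key=lambda i: (p[0] - centers[i][0]) ** 2 + (p[1] - centers[i][1]) ** 2,
            )
            groups[nearest].append(p)
        # Move each center to the mean of its group; keep it if the group is empty.
        centers = [
            (sum(p[0] for p in g) / len(g), sum(p[1] for p in g) / len(g)) if g else c
            for g, c in zip(groups, centers)
        ]
    return centers, groups


# Two loose clumps of made-up features, e.g. "dog-like" and "everything else".
features = [(1.0, 1.0), (1.2, 0.8), (0.8, 1.2), (5.0, 5.0), (5.2, 4.8), (4.8, 5.2)]
centers, groups = kmeans(features, centers=[(0.0, 0.0), (6.0, 6.0)])
print(centers)
```

The algorithm never sees a label such as “dog”; it only discovers that the samples fall into two groups, which a human or a downstream model can then name.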

Behind the implementation of this function is the introduction of Transformer by Karpathy in the field of software algorithms.

Transformer was originally proposed by Google as a neural network model for machine translation. It is well suited to GPU computing environments and dispenses with the RNNs and CNNs previously common in NLP, while achieving very good results.

At CVPR2021, Andrej Karpathy mentioned Transformer twice, describing how it is fused across time with CNNs, 3D convolutions, or both to output 3D information with depth, which is applied to Tesla vehicles.

Transformer quickly became popular in the field of autonomous driving, and it is reported that Momenta is using Transformer for large-scale perceptual training.

Whether it is BEV or Dojo, the completed functions are only at the “perception” level. To achieve truly on-road autonomous driving, the ability of the “decision-making” part must be improved.

In response to this, Karpathy proposed “Shadow Mode,” another leap forward in the field of autonomous driving.

Shadow Mode refers to a “clone brain” that runs alongside the vehicle’s main brain. It receives the same sensor data, predicts the driving conditions, and outputs driving decisions.

The difference is that the predictions and instructions produced under Shadow Mode are never executed. They are only compared with the decisions the main brain actually made, and the comparison is used to evaluate and improve the test version of the neural network.

Through Shadow Mode, Tesla can efficiently and safely obtain the actual on-road effect of the test version of the neural network.
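The idea can be sketched in a few lines, assuming a simplified decision made of a steering angle and an acceleration. Everything here is hypothetical: the `Decision` type, the `shadow_step` function, the tolerances, and the two toy policies are illustrations of the comparison described above, not Tesla’s code.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    steering_deg: float  # requested steering angle
    accel_mps2: float    # requested acceleration (negative means braking)


def shadow_step(frame, active_policy, shadow_policy, log, steer_tol=2.0, accel_tol=0.5):
    """Run both policies on the same sensor frame; only the active one is executed."""
    executed = active_policy(frame)
    proposed = shadow_policy(frame)  # computed, but never sent to the actuators
    disagrees = (
        abs(executed.steering_deg - proposed.steering_deg) > steer_tol
        or abs(executed.accel_mps2 - proposed.accel_mps2) > accel_tol
    )
    if disagrees:
        # Keep the frame so the disagreement can be reviewed offline.
        log.append({"frame": frame, "executed": executed, "proposed": proposed})
    return executed


# Toy policies: the test version brakes earlier than the production version.
def active(frame):
    return Decision(0.0, -1.0 if frame["obstacle_m"] < 30 else 0.0)


def shadow(frame):
    return Decision(0.0, -1.0 if frame["obstacle_m"] < 50 else 0.0)


log = []
frames = [{"obstacle_m": 80}, {"obstacle_m": 40}, {"obstacle_m": 20}]
executed = [shadow_step(f, active, shadow, log) for f in frames]
print(len(log))
```

Only the frame where the two policies disagree is logged, which is why the approach is safe (the test version never controls the car) and data-efficient (only the interesting cases are kept for analysis).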

Andrej Karpathy commented, “The human brain can ‘imagine’ distance and have excellent driving skills, and neural networks also have this ability.”

Such a closed loop from data collection to algorithm deployment allows Tesla’s autonomous driving system to automatically classify long-tail cases, test them module by module, and deploy fixes under pure visual conditions, so that system performance improves and matures with each iteration.

In May 2021, Tesla announced that the Model 3/Y produced for the North American market will no longer be equipped with millimeter-wave radar, as the accuracy of using cameras alone for depth estimation has surpassed that of using cameras and millimeter-wave radar. “There are unsolvable drawbacks to the millimeter-wave radar or lidar solution… Tesla’s vision-based autopilot relies on 8 cameras and the Dojo supercomputer at its core. In principle, we can use it on any road on Earth,” said Karpathy at CVPR2021.

“At Tesla, the geniuses turn left and the lunatics turn right.” While Musk may be the “madman” with fanciful ideas but no consideration for technological feasibility, Karpathy is undoubtedly the “genius” with superlative engineering thinking and the ability to tackle all kinds of “impossible” problems through reverse engineering. Even as bystanders to this “vision only” feast, we can all gain wisdom and food for thought.

Departure of Key Figure, Massive Layoffs in Autonomous Driving Department: Will Tesla’s Vision-Based Plan Change?

As the key figure in Tesla’s vision-based autonomous driving system, Karpathy’s departure will undoubtedly have a major impact on the company’s progress in this field.

Looking back on Karpathy’s contributions to Tesla’s autonomous driving system since joining the company:

- March 2017: Introduced automated parking and automated lane change capabilities.

- October 2018: Introduced Navigate on Autopilot functionality.

- September 2019: Introduced Smart Summon.

- April 2020: Introduced the ability to detect and respond to traffic signals and stop signs (initially overseas).

- October 2020: Conducted small-scale testing of FSD Beta among select employees and owners.

- September 2021: Launched the “Request Button” for FSD Beta based on a safety driving score system, with version 10.1 at the time.

- February 2022: The latest version of FSD Beta is 10.10.2, with about 60,000 owners having received the update.

It appears that Tesla’s FSD will soon be widely deployed. On January 27, 2022, at Tesla’s Q4 2021 earnings conference, Musk said, “I personally expect Tesla to achieve FSD (fully autonomous driving) that is safer than humans in 2022…” However, with Karpathy’s departure, Musk may have to cancel this plan again.

During Karpathy’s vacation, Full Self-Driving (FSD) received no major updates. Worse, the “phantom braking” phenomenon caused by the algorithm led to a surge of complaints from Tesla owners, from 354 in February to 758 in May.

To dispel doubts from the outside world, on July 14th, Musk revealed on Twitter that the FSD V10.13 beta version will begin internal testing from “tomorrow” (July 15th), aiming to optimize some scenarios and will be open for public beta testing in the next week.

However, this statement failed to convince the public, and there are many signs that Tesla’s autonomous driving technology is changing.

According to records from the California Employment Development Department, Tesla recently closed its office in San Mateo, and 229 people were laid off. Worth noting is that the office had a data labeling team specifically responsible for helping to improve Tesla’s driving assist technology.

Some speculate that Musk may have already known that Karpathy was going to resign and took early action to stop the loss in a timely manner.

With Karpathy’s departure, where will Tesla’s “pure vision” route go?

Recently, Tesla has repeatedly been embroiled in public opinion disputes due to safety issues.

On July 6th, a 2015 Tesla Model S drove into a highway rest area parking lot from Interstate 75 in Gainesville, Florida, and crashed directly into a tow truck parked there. Although it has not been confirmed whether the autopilot function was turned on at the time, coincidentally, the car that was hit was another “white car.”

Even earlier, according to data from the National Highway Traffic Safety Administration (NHTSA), in the 10 months up to May 2022, there were more than 200 accidents related to Tesla’s Autopilot software.

As a result, The Washington Post and CNBC have successively published articles criticizing the performance of Tesla’s FSD, which appears to be far from true autonomous driving.

Industry insiders have also begun to suspect that the “pure vision” route, however ideal it seems, carries many hidden dangers in actual operation.

In this sensitive time, Tesla registered a new High Resolution Radar (HD Radar) with the Federal Communications Commission (FCC) on June 7th. From the RF test report, this radar has 6 transmit and 8 receive channels, operates at a frequency of 77GHz, supports three scanning modes, and has a maximum scan bandwidth of 700MHz and a frame period of about 67ms (15Hz).
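The reported figures can be sanity-checked with some simple arithmetic. The sketch below assumes the radar is a conventional FMCW design that sweeps the full 700 MHz in one chirp, in which case the textbook range resolution is c / (2B). That assumption is not stated in the report, and the function names are hypothetical.

```python
# Back-of-the-envelope check of the reported radar figures.
# Assumption (not from the filing): FMCW radar sweeping the full bandwidth.

SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def frame_rate_hz(frame_period_s):
    """Frames per second for a given frame period."""
    return 1.0 / frame_period_s


def wavelength_m(frequency_hz):
    """Free-space wavelength of the carrier."""
    return SPEED_OF_LIGHT / frequency_hz


def range_resolution_m(bandwidth_hz):
    """Textbook FMCW range resolution: c / (2 * B)."""
    return SPEED_OF_LIGHT / (2.0 * bandwidth_hz)


rate = frame_rate_hz(0.067)               # frame period of about 67 ms
wavelength_mm = wavelength_m(77e9) * 1e3  # 77 GHz carrier
resolution = range_resolution_m(700e6)    # 700 MHz maximum scan bandwidth
print(f"{rate:.1f} Hz, {wavelength_mm:.2f} mm, {resolution:.3f} m")
```

This gives a frame rate of about 14.9 Hz, consistent with the quoted 15 Hz, a wavelength of about 3.9 mm (hence “millimeter-wave”), and, under the stated assumption, a best-case range resolution of roughly 0.21 m.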

There is speculation that the filing might mean Tesla is going to bring back millimeter-wave radar.

However, everything remains unknown until Musk announces the next AI director.

Karpathy and the trend of returning to academia: Working with Musk is a double-edged sword

Karpathy’s next move has also sparked strong interest from industry insiders.

Some people say that in the increasingly fierce competition over autonomous driving technology, whoever manages to recruit Karpathy will hold an ace.

However, Karpathy himself seems to have no clear plans.

He wrote on Twitter that he hopes to spend more time re-examining his long-term enthusiasm for artificial intelligence technology, open source, and education work.

Education seems to be a job that Karpathy is very interested in. This is not only reflected in his passionate sharing at Tesla AI Day and CVPR Workshop, but also can be seen from Karpathy’s earlier academic experience.

From 2011 to 2016, Karpathy pursued a PhD in Computer Science at Stanford University, under the guidance of the AI guru Fei-Fei Li. During this period, based on his own knowledge, he designed a course called CS231n (convolutional neural networks for visual recognition) and served as the main lecturer, which was open to students at the university and became one of the most popular courses at Stanford. Later, someone uploaded this course to YouTube, attracting millions of viewers.

“I have always been passionate about teaching. During my graduate studies at the University of British Columbia, I also served as a TA for different classes. I like to watch people learn new skills and do cool things,” Karpathy said proudly of this experience.

He revealed on The Robot Brains Podcast, hosted by Pieter Abbeel, that in the first year he taught the course, he focused only on course and teaching design, even putting the entire Ph.D. research on hold.

“I think this may be more influential than writing one or two papers. In the first semester, there were about 150 students in the class. When I left, there were already 700 students in the classroom,” Karpathy said, calling it a highlight of his Ph.D.

Coincidence or not, in recent years many AI experts have returned to academia from industry, starting with Andrew Ng, followed by Fei-Fei Li, Zhang Tong, and others. Recently, Chen Yilun, the CTO of Huawei’s autonomous driving system and Chief Scientist of the Car BU, resigned from Huawei and announced that he would join Tsinghua University’s Institute for AI Industry Research (AIR) as Chief Expert in intelligent robotics.

It is reported that Chen Yilun received his bachelor’s and master’s degrees from the Department of Electronic Engineering at Tsinghua University, and his Ph.D. from the University of Michigan in Electronic Engineering. In 2018, he joined Huawei and was responsible for the design of high-level autonomous driving technology solutions, and led the full-stack R&D of Huawei’s first-generation autonomous driving system from 0 to 1.

Why do AI experts prefer to return to academia?

An industry insider believes that this is related to the operational modes of enterprises and universities. “In business research, the goal is to create a product as quickly as possible within a specific time frame. In academia, time is more flexible, allowing academic experts to push forward technological development according to their own understanding of the profession step by step.”

This might partially explain Karpathy’s departure from Tesla.

Karpathy once said that working with Musk was a double-edged sword. “If he wanted to see the future yesterday, he would push everyone to do it, and he would inject a lot of energy. He wants this thing to happen as soon as possible, and you also need a certain attitude to truly tolerate this situation for a long time.”

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.