Author: Zhu Yulong

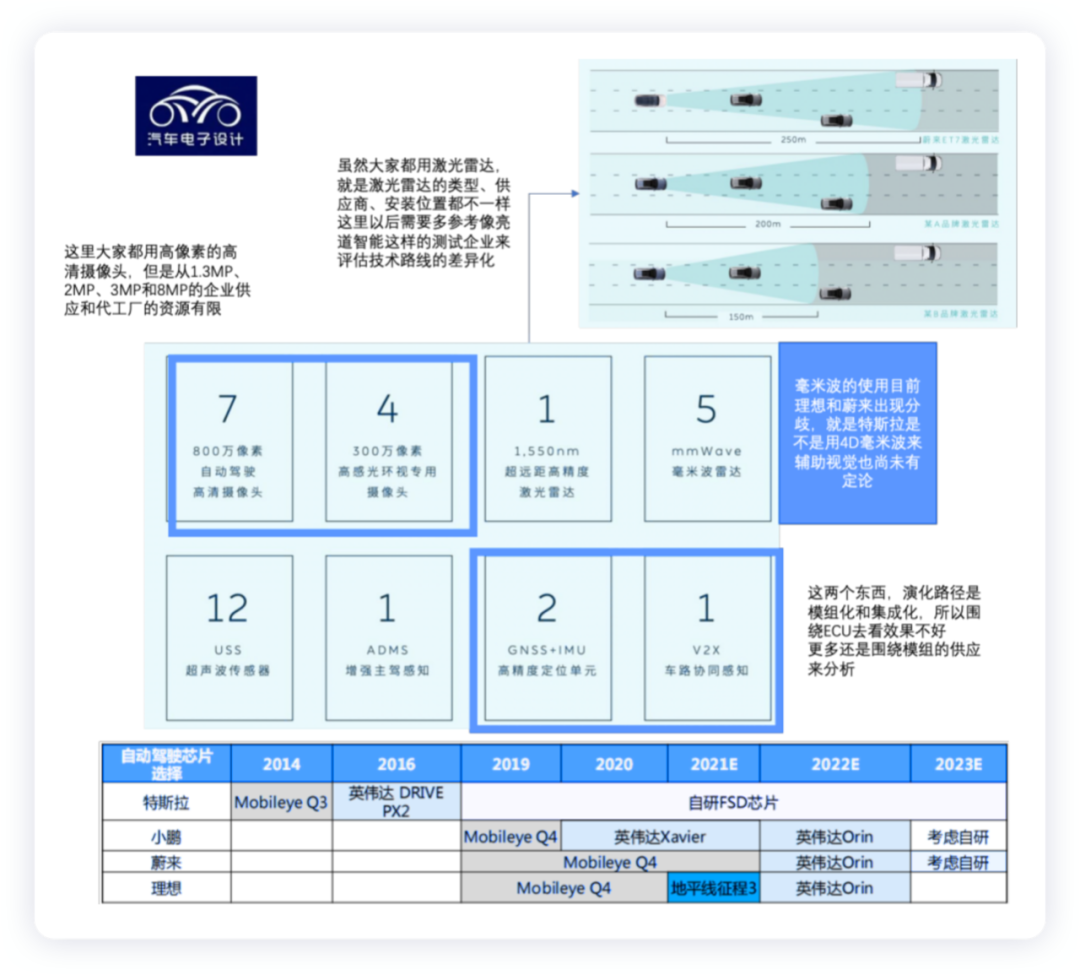

Yesterday, my colleagues were discussing a disagreement about the hardware systems for autonomous driving. The pioneering companies in this field currently hold different views on how to combine the three main sensing approaches (visual perception, millimeter-wave radar, and LiDAR), and the paths taken by different car companies are not converging. This makes it very hard for us to judge how the field will develop and where the investment opportunities lie. Below is some information I recorded at a LiDAR seminar, which focused mainly on NIO's LiDAR.

Currently, several leading companies in this field are shoring up their weak links by integrating technology from suppliers and chip companies to achieve a leap in capability. Because they lack sufficient sensing data and computing power, this requires experimenting with software approaches on heterogeneous, high-compute systems.

The actual purchase cost of this autonomous driving hardware is estimated at no less than 20,000 to 30,000 yuan, and for companies like NIO it exceeds 40,000 to 50,000 yuan. Although this is very high, it is a temporary phenomenon and will not last. If you try to match Tesla's performance this way, your cost will be three to four times that of others. In the long run, the situation depends on how quickly each company's technical staff can collect data and whether their algorithms can be improved and converge quickly.

LiDAR of NIO

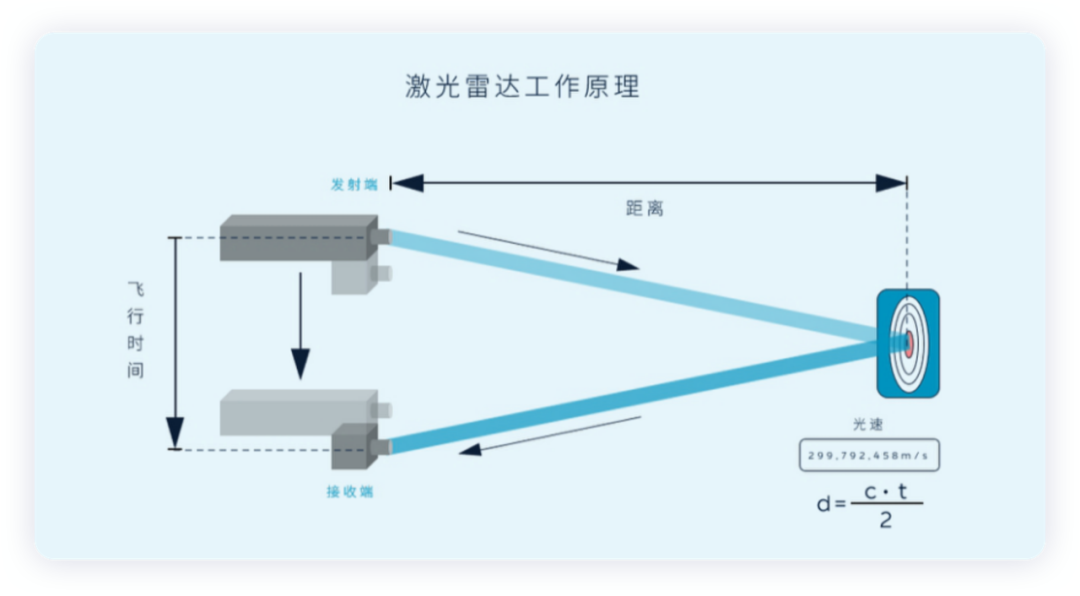

NIO uses LiDAR from Innovusion, a company founded in 2016 and part of NIO's automotive component ecosystem. The model is the Falcon, currently the most expensive LiDAR used in mass-produced cars. A LiDAR (Light Detection and Ranging) consists of a narrow-band laser and a receiving system. The laser generates and emits light pulses, which hit objects, reflect back, and are picked up by the receiver. The receiver measures each pulse's round-trip time from emission to return and, since the pulse travels at the speed of light, calculates the distance between the vehicle and the reflecting object. By emitting and receiving many pulses in different directions, the LiDAR measures the distance to surrounding obstacles and outputs three-dimensional spatial data.
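The ranging principle above can be sketched in a few lines of Python. This is a minimal illustration, not Innovusion's implementation: the function names and the axis convention (x forward, y left, z up) are assumptions made for the example. The distance is half of the speed of light times the round-trip time, because the pulse travels out and back; combining that distance with the beam's horizontal and vertical angles gives one 3D point.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def tof_range(round_trip_s: float) -> float:
    """Distance to the target from a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is c * t / 2.
    """
    return C * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float, elevation_deg: float) -> tuple[float, float, float]:
    """Convert one return (range plus beam angles) into an (x, y, z) point.

    Assumed sensor frame (hypothetical): x forward, y left, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = range_m * math.cos(el) * math.cos(az)
    y = range_m * math.cos(el) * math.sin(az)
    z = range_m * math.sin(el)
    return (x, y, z)


if __name__ == "__main__":
    t = 2 * 250.0 / C  # round-trip time for a target 250 m away
    r = tof_range(t)
    print(f"round trip {t * 1e6:.3f} us -> range {r:.1f} m")
    print(to_point(r, azimuth_deg=10.0, elevation_deg=-1.0))
```

A target 250 m away returns its pulse after about 1.67 µs, and each nanosecond of timing error corresponds to roughly 15 cm of range error, which is why LiDAR timing electronics must resolve very short intervals.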

LiDAR was originally a piece of instrumentation, but it is now used on a large scale as a vehicle sensor. Because it is such a high-value component, several foreign LiDAR companies (Ouster, Velodyne LiDAR, Luminar, Innoviz, and Aeva) have listed on US stock exchanges, while domestic companies such as Hesai Technology and Suzhou Juchuang are preparing for IPOs.

From a technical perspective, a camera + millimeter-wave radar solution has difficulty reliably identifying obstacles in strong light, backlight at tunnel exits, and darkness, as well as objects the algorithms were never trained on. Even with multiple cameras and visual algorithms, it remains difficult to provide the depth information that autonomous driving perception needs, and the farther away the target object, the less accurate the depth estimate. Given these perception limits, the near-term goal in China is to first reach L2+ in urban areas. Facing China's complex traffic scenes, Chinese car companies are therefore turning to LiDAR on mass-produced cars to move from assisted driving to urban automated driving assistance.
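The text does not specify a camera setup, but a stereo camera pair gives a simple way to see why camera depth degrades with distance. In a rectified stereo pair, depth is Z = f·B/d (focal length f in pixels, baseline B, disparity d), so a fixed disparity error δd yields a depth error of roughly Z²·δd/(f·B), which grows with the square of distance. The camera parameters below are hypothetical values chosen only for illustration.

```python
def stereo_depth_error(depth_m, focal_px, baseline_m, disparity_err_px):
    """Approximate depth error of a rectified stereo pair at a given depth.

    From Z = f * B / d, a disparity error dd gives dZ ~= Z**2 * dd / (f * B).
    """
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


# Hypothetical camera: 1000 px focal length, 0.3 m baseline, 0.25 px matching error.
FOCAL_PX, BASELINE_M, DISP_ERR_PX = 1000.0, 0.3, 0.25

for depth in (25.0, 50.0, 100.0, 200.0):
    err = stereo_depth_error(depth, FOCAL_PX, BASELINE_M, DISP_ERR_PX)
    print(f"depth {depth:5.0f} m -> error about {err:.2f} m")
```

With these assumed parameters the estimated error is about 0.5 m at 25 m but about 33 m at 200 m, whereas a LiDAR measures range directly from time of flight.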

LiDAR collects reflected light pulses, outputs three-dimensional spatial data, and supplies the reliable depth information that visual sensors lack. With LiDAR added, the sensors provide redundancy for one another, raising the vehicle's level of perception and making it more reliable. Vehicles can then identify objects that cameras currently struggle to recognize, such as road bumps, missing manhole covers, scattered debris, and large stationary obstacles.
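One common way LiDAR depth is handed to the vision side is to project each LiDAR point into the camera image with a pinhole model, so that pixels gain a measured depth. The sketch below assumes the points have already been transformed into the camera frame (x right, y down, z forward) using a LiDAR-to-camera calibration that is not covered here; the function name and the intrinsics fx, fy, cx, cy are hypothetical.

```python
def project_to_image(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates (u, v).

    Camera frame (assumed): x right, y down, z forward, in meters.
    Returns (u, v, depth) or None if the point is behind the camera.
    The depth can then be attached to that pixel as a measured depth cue.
    """
    x, y, z = point_cam
    if z <= 0:
        return None  # behind the camera, not visible in the image
    u = fx * x / z + cx
    v = fy * y / z + cy
    return (u, v, z)


if __name__ == "__main__":
    # Hypothetical 1920x1080 camera with 1000 px focal length.
    print(project_to_image((1.0, 0.5, 20.0), 1000.0, 1000.0, 960.0, 540.0))
    print(project_to_image((0.0, 0.0, -5.0), 1000.0, 1000.0, 960.0, 540.0))
```

For example, a point at (1.0, 0.5, 20.0) with fx = fy = 1000 and principal point (960, 540) lands at pixel (1010, 565) with a depth of 20 m.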

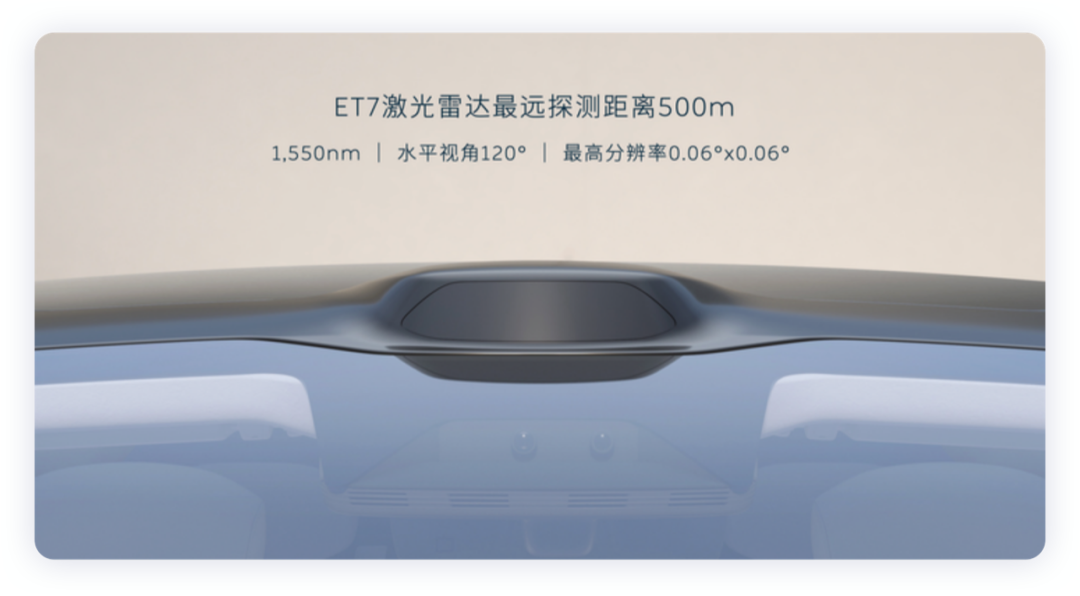

The ultra-long-range, high-precision LiDAR on the NIO ET7 has a maximum detection range of 500 meters (250 meters for targets with 10% reflectivity), an ultra-wide 120° horizontal field of view, and an ultra-high resolution of 0.06° × 0.06°. It is the world's first mass-produced 1550 nm LiDAR. The key reason for choosing 1550 nm is that it is safer for the human eye than 905 nm.

The human eye sees wavelengths from about 380 nm to 760 nm. Light at 1550 nm is not focused onto the retinas of nearby people: most of it is absorbed by water as it passes through the eye, which is why this product is considered eye-safe. A 905 nm laser is closer to visible wavelengths and is easily focused onto the retina, so a child staring into the LiDAR could be harmed. As a result, the permitted optical power of a 905 nm LiDAR is lower.

This means a 1550 nm LiDAR can be designed to use higher power and shoot the laser farther. The 1550 nm laser also has strong resistance to interference, a more collimated beam, and a brighter light source. The trade-off is cost: 1550 nm LiDAR is practically synonymous with expensive, because silicon detectors cannot sense this wavelength and such systems typically rely on costlier components such as InGaAs detectors and fiber lasers.

The spot of a 1550 nm laser is very small: at 100 meters its diameter is only a quarter of that of a 905 nm spot. When detecting a pedestrian at 100 meters, the LiDAR can capture 4 points horizontally and 7 points vertically on the pedestrian, enough to clearly make out their posture. The maximum detection range of this LiDAR is 500 meters, and it reaches 250 meters on the standard 10%-reflectivity target.

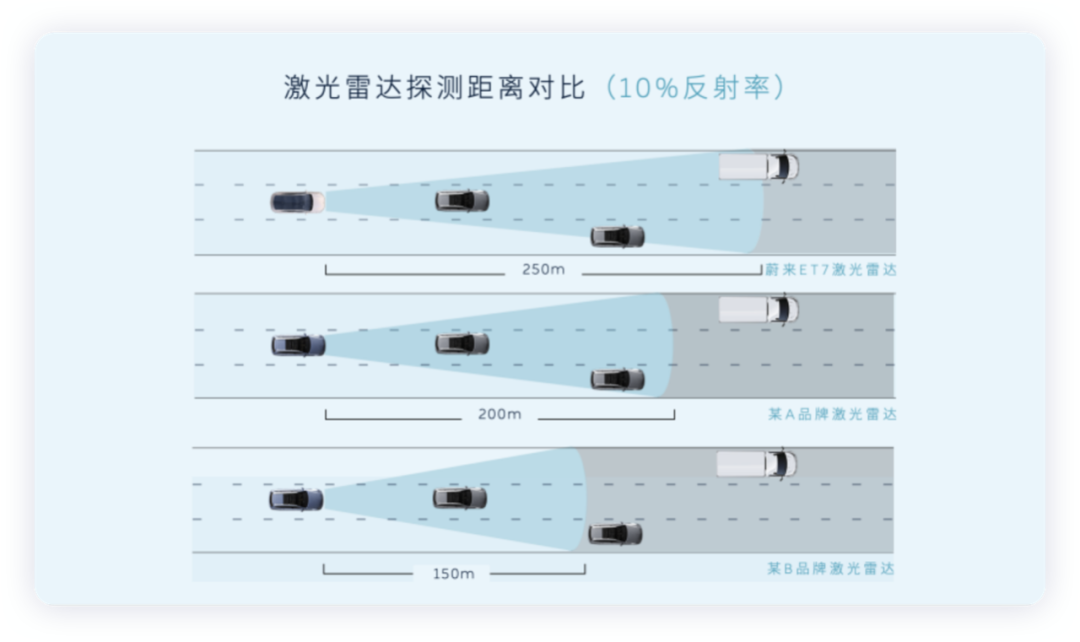

The emphasis on detection range is mainly about leaving enough room to brake on highways. When a stationary roadblock appears on a highway, a vehicle under automated driving assistance must brake urgently, and the higher the speed, the longer the stopping distance. NIO learned this lesson the hard way in 2021, so here it chose the technology with the longer detection range, which helps the car spot unusual situations such as roadworks earlier at high speed and brake or change lanes in advance.
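The link between speed and stopping distance can be made concrete with the usual kinematic estimate: the distance traveled during the system's reaction time plus the braking distance v²/(2a). The reaction time and deceleration below are hypothetical round numbers, not NIO figures.

```python
def stopping_distance(speed_kmh, reaction_s, decel_mps2):
    """Stopping distance in meters: reaction travel plus braking travel.

    d = v * t_reaction + v**2 / (2 * a), with v converted to m/s.
    """
    v = speed_kmh / 3.6
    return v * reaction_s + v ** 2 / (2.0 * decel_mps2)


# Hypothetical values: 1.0 s total reaction time, 6 m/s^2 braking deceleration.
REACTION_S, DECEL_MPS2 = 1.0, 6.0

for kmh in (60, 90, 120):
    print(f"{kmh} km/h -> about {stopping_distance(kmh, REACTION_S, DECEL_MPS2):.0f} m to stop")
```

Under these assumptions, stopping takes about 40 m at 60 km/h, 77 m at 90 km/h, and 126 m at 120 km/h. Because the braking term grows with the square of speed, a longer detection range leaves noticeably more margin at highway speeds.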

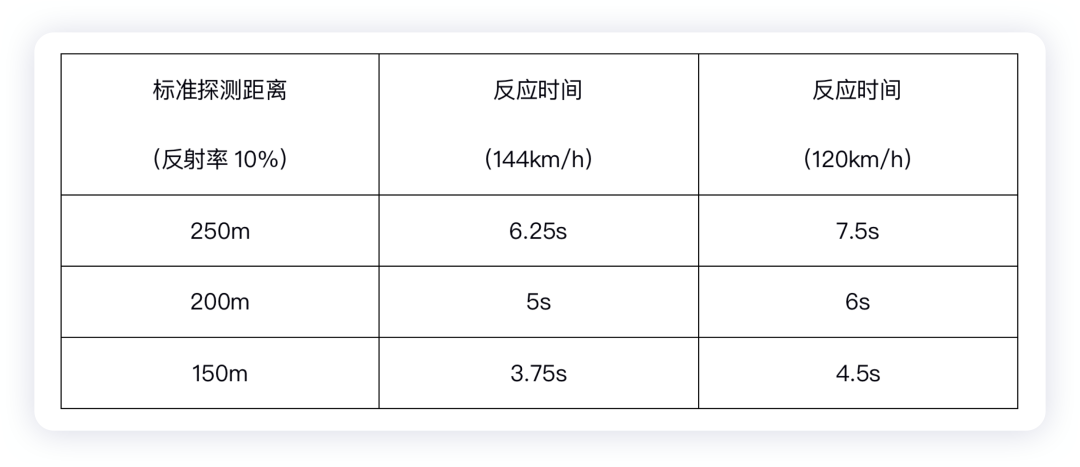

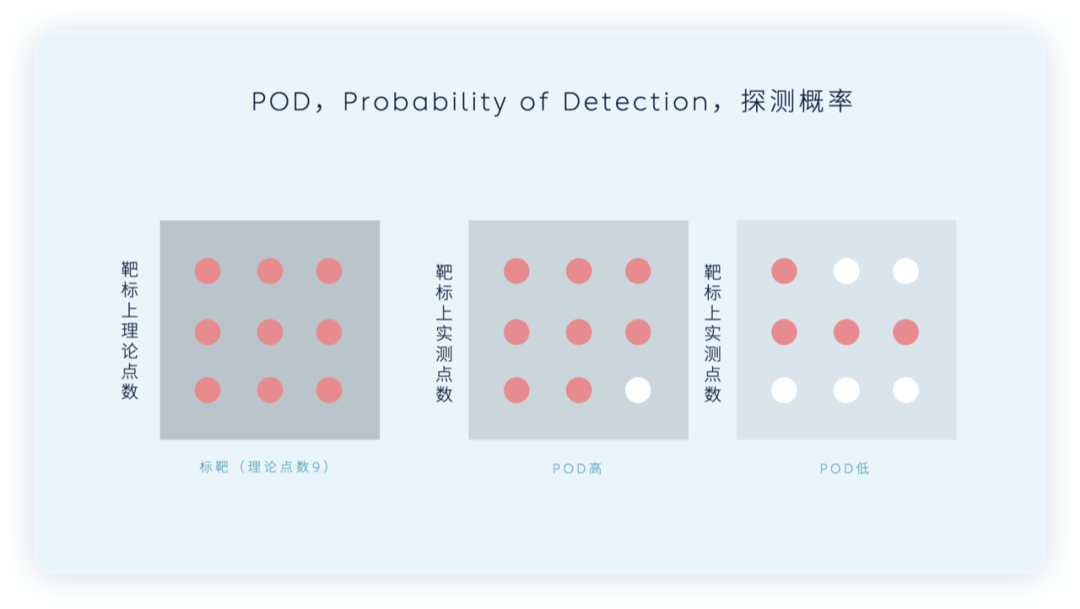

Resolution of LiDAR

LiDAR resolution also matters. At a distance of 200 m, each 0.01° of angular resolution corresponds to about 3.5 cm between adjacent points. For a LiDAR with 0.1° angular resolution, adjacent points are therefore about 35 cm apart at that distance. For targets such as pedestrians, bicycles, and motorcycles, such a point cloud is too sparse, which means the LiDAR cannot perceive them well.
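The spacing figures above follow from simple geometry: adjacent points at range R with angular resolution θ are about R·tan θ apart. The helper names and the 0.5 m pedestrian width in the example are assumptions for illustration.

```python
import math


def point_spacing(range_m, angular_res_deg):
    """Distance between adjacent points at range_m: R * tan(angular resolution)."""
    return range_m * math.tan(math.radians(angular_res_deg))


def approx_points_across(target_size_m, range_m, angular_res_deg):
    """Approximate number of points landing across a target of the given size."""
    return target_size_m / point_spacing(range_m, angular_res_deg)


if __name__ == "__main__":
    for res in (0.01, 0.06, 0.1):
        gap_cm = point_spacing(200.0, res) * 100
        pts = approx_points_across(0.5, 200.0, res)  # assumed 0.5 m-wide pedestrian
        print(f"{res:.2f} deg at 200 m: {gap_cm:.1f} cm apart, ~{pts:.1f} points across 0.5 m")
```

This reproduces the article's numbers: about 3.5 cm at 0.01° and about 35 cm at 0.1°, both at 200 m.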

Therefore, to handle urban scenarios, the LiDAR needs to generate high-density point clouds in the key area of the driving view so that objects in that area can be seen more clearly. The high-density region of this LiDAR covers 25° horizontally × 9.6° vertically. At 50 m ahead, it covers 10 lanes horizontally and more than one story vertically. In fact, by selecting and densifying key areas on the road, the resolution of any area can be raised as needed, giving better perception and tracking of vehicles and pedestrians even on sharp turns or uphill and downhill slopes, and allowing distant hazards to be detected early. In the city, this lets the car slow down early to avoid accidents. (In urban navigation, the toughest "competitors" on the road are Meituan and Ele.me delivery riders, both from excellent companies.) For problems that cannot be solved, we rely on consumers to improve the reliability and safety of autonomous driving.
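Densifying a key area can be pictured as using a finer angular step inside a region of interest (ROI) and a coarser one elsewhere. The sketch below builds horizontal beam angles for a 120° field of view with 0.06° steps inside a 25° ROI; the function names and the 0.25° step outside the ROI are hypothetical, and the source does not describe how the Falcon actually steers its scan.

```python
import math


def frange(start, stop, step):
    """Evenly spaced values from start (inclusive) up to stop (exclusive)."""
    n = math.ceil((stop - start) / step - 1e-9)
    return [start + i * step for i in range(max(n, 0))]


def scan_angles(fov_deg=120.0, roi_deg=25.0, roi_center_deg=0.0,
                roi_step_deg=0.06, outer_step_deg=0.25):
    """Horizontal beam angles: fine steps inside the ROI, coarse steps outside.

    outer_step_deg is a hypothetical value; roi_center_deg lets the dense
    region be shifted, e.g. toward the inside of a curve.
    """
    lo, hi = -fov_deg / 2, fov_deg / 2
    roi_lo = max(lo, roi_center_deg - roi_deg / 2)
    roi_hi = min(hi, roi_center_deg + roi_deg / 2)
    return (frange(lo, roi_lo, outer_step_deg)
            + frange(roi_lo, roi_hi, roi_step_deg)
            + frange(roi_hi, hi, outer_step_deg)
            + [hi])


if __name__ == "__main__":
    angles = scan_angles()
    dense = sum(1 for a in angles if -12.5 <= a < 12.5)
    print(f"{len(angles)} columns in total, {dense} of them inside the 25 deg ROI")
    print(f"uniform 0.06 deg over 120 deg would need {round(120 / 0.06)} columns")
```

Under these assumptions the scan uses 798 horizontal columns, 417 of them in the ROI, versus 2,000 for a uniform 0.06° scan across the full 120°. The same idea applies vertically, and shifting `roi_center_deg` moves the dense region, for example in sharp turns.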

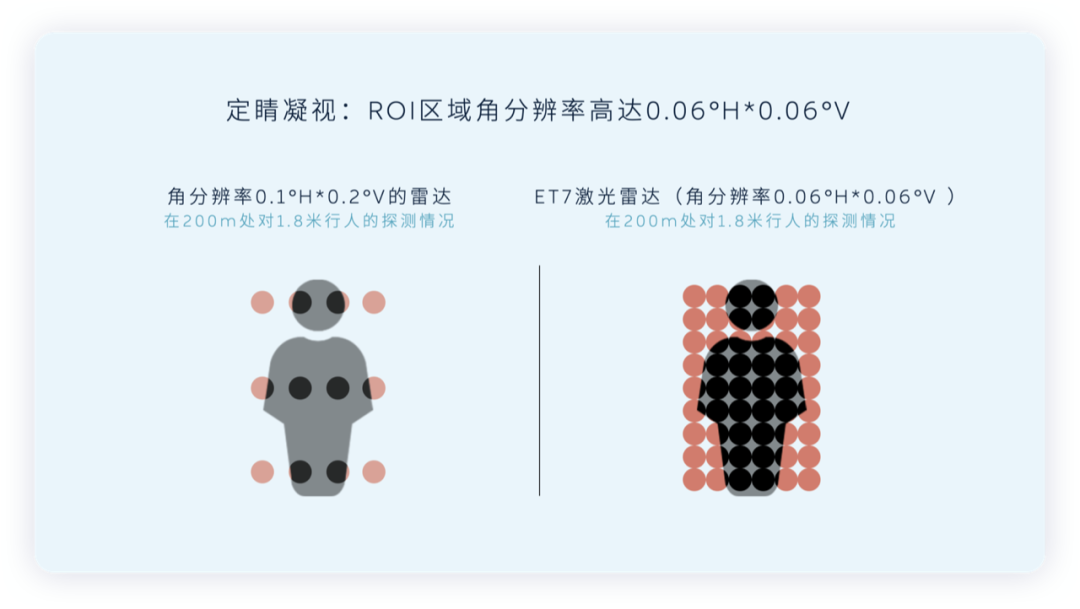

POD (Probability of Detection) is the ratio of the number of laser returns actually detected (the effective number of points) to the number of laser beams emitted (the theoretical number of points) over 100 consecutive frames. It reflects how well and how stably the LiDAR receives returning points, and is an important indicator of LiDAR performance. For this LiDAR, the POD for a 10%-reflectivity object at 250 m is over 90%.
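The POD definition above can be written as a short function. The function name and the per-frame point counts in the example are made up for illustration, not measurements of the Falcon.

```python
def probability_of_detection(effective_counts, theoretical_counts):
    """POD = total detected (effective) points / total emitted (theoretical) points.

    Each list holds one count per frame, e.g. 100 consecutive frames.
    """
    if len(effective_counts) != len(theoretical_counts):
        raise ValueError("effective and theoretical counts must cover the same frames")
    emitted = sum(theoretical_counts)
    if emitted == 0:
        raise ValueError("no points were emitted")
    return sum(effective_counts) / emitted


# Made-up example: 100 frames, 200 points aimed at the target in each frame.
theoretical = [200] * 100
effective = [185] * 60 + [180] * 40
print(f"POD = {probability_of_detection(effective, theoretical):.1%}")
```

With these illustrative counts, 18,300 of 20,000 emitted points are detected, giving a POD of 91.5%.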

Conclusion: Once the epidemic situation in Shanghai improves, I plan to visit Hesai's headquarters in Qingpu. Chinese LiDAR makers have an opportunity to take the lead. The key is how quickly they can enter mass production and get R&D, manufacturing, and quality control running smoothly while scaling from a few thousand units per year to several thousand per month. How to effectively reduce cost will also be a crucial focus for the next round of investment in this field.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.