Author: MuMin

On June 22nd, NIO held an online event about the “intelligent hardware of the car-mounted LiDAR”. The event was presented by NIO’s Vice President of Intelligent Hardware, Bai Jian, who introduced the LiDAR carried by the NIO ET7.

LiDAR is no longer an unfamiliar sensor in the field of intelligent driving. With the exception of Tesla, car companies and intelligent driving companies regard it as a necessary sensor for achieving high-level autonomous driving, because its 3D sensing can compensate for the perception blind spots of other sensors, such as cameras, in extreme corner-case scenarios. The industry commonly refers to this as “blind spot compensation”.

Although LiDAR has a higher cost, most intelligent cars with advanced assisted driving functions launched in the second half of 2021 are equipped with LiDAR, and the NIO ET7 is one of them.

At this event, Bai Jian told us the reason why NIO chose LiDAR and how NIO led the development of this radar.

Conversation with NIO Bai Jian

Q: How is the penetration of LiDAR in foggy weather?

Bai Jian: Because LiDAR uses infrared laser, rainy and foggy weather has some impact on it. The 1550nm LiDAR carried by our ET7 has been tested specifically for rainy and foggy weather. Under general rainy conditions, our perception ability is not significantly affected.

Q: Apart from NIO, other car companies are also gradually equipping themselves with LiDAR, and not just one, how do you evaluate the comparison of the number of LiDARs among car companies?

Bai Jian: Let’s look at this from the original purpose of LiDAR. Earlier in this session I explained why NIO uses LiDAR.

First, LiDAR can provide depth information; second, the main feature of LiDAR is that it can see far, clearly, and steadily. This order represents our understanding of LiDAR and also answers your question.

Under the current technical conditions, pure visual solutions cannot solve some depth information problems, so we need the help of LiDAR. So when do we need LiDAR the most?

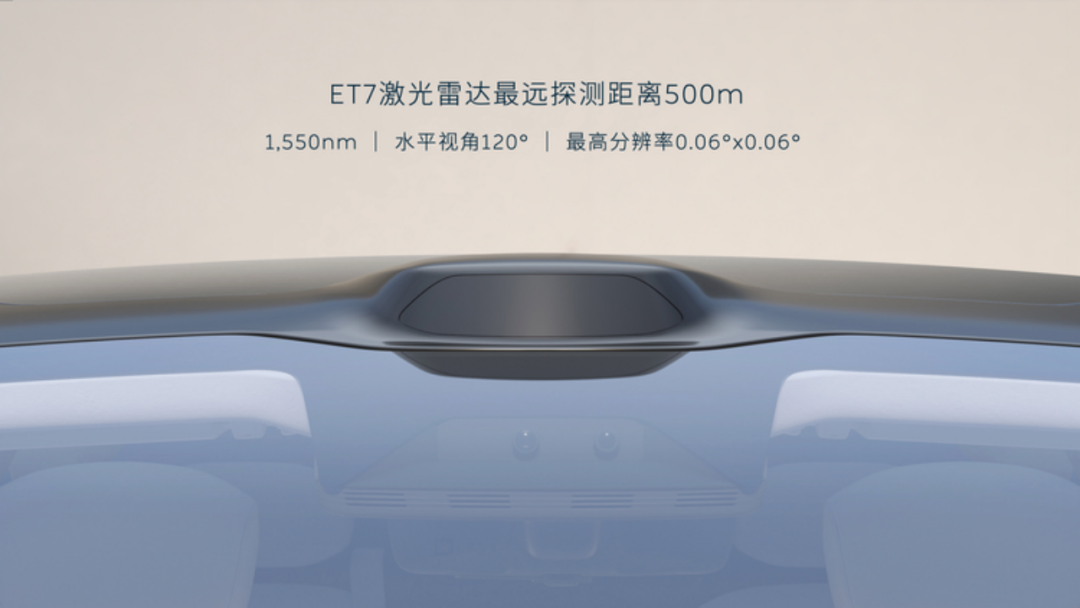

LiDAR is needed most when the scene ahead is complex, speeds are high, and a lot of processing is required. That is why we chose a forward-facing LiDAR with a horizontal FOV of 120 degrees and a maximum detection range of 500 meters, currently the longest range among mass-produced LiDARs, which can solve most of our problems.

As for other positions, lateral LiDARs are mostly used for unprotected left turns, cut-ins, and other scenes where the speed is generally low. In these scenarios, Aquila’s camera system, consisting of four side-facing 8M cameras and four panoramic cameras, provides sufficient depth and visual information. In particular, Aquila has two Side Front 8M cameras, which are placed relatively high, in a watchtower-like structure, with excellent visibility.

This helps us handle scenes where detection needs to be supplemented. These considerations are the logic behind the design of the Aquila system.

Q: Almost all new car companies are laying out their radar businesses. What is NIO’s view on self-research and cooperation? Regarding the 500-meter ultra-long-distance high-precision LiDAR on ET7, how does NIO ensure its stability?

Bai Jian: First of all, we must answer a question: NIO cannot do everything. Firstly, we are a major automotive manufacturer and cannot accomplish everything in the industry.

We believe that there is a very large ecosystem within the industry, and only openness is the solution. Moreover, from the perspective of talents and resources, it is also impossible for one company to completely cover everything.

Secondly, everyone is investing in this area, and so are we. For critical components or ECUs, we prefer to grow together with our partners, customizing and developing deeply with them.

LiDAR is a very good example. We have grown together with Innovusion. In this cooperative mode, technology and aesthetics have become possible.

Summing up, we will definitely deepen our layout for LiDAR, and we will cooperate with our partners to achieve a win-win situation, and work together to deepen customization.

As for your question about seeing 500 meters away: how do we ensure stability and reliability? We propose several important indicators for LiDAR: see far, see clearly, and see stably.

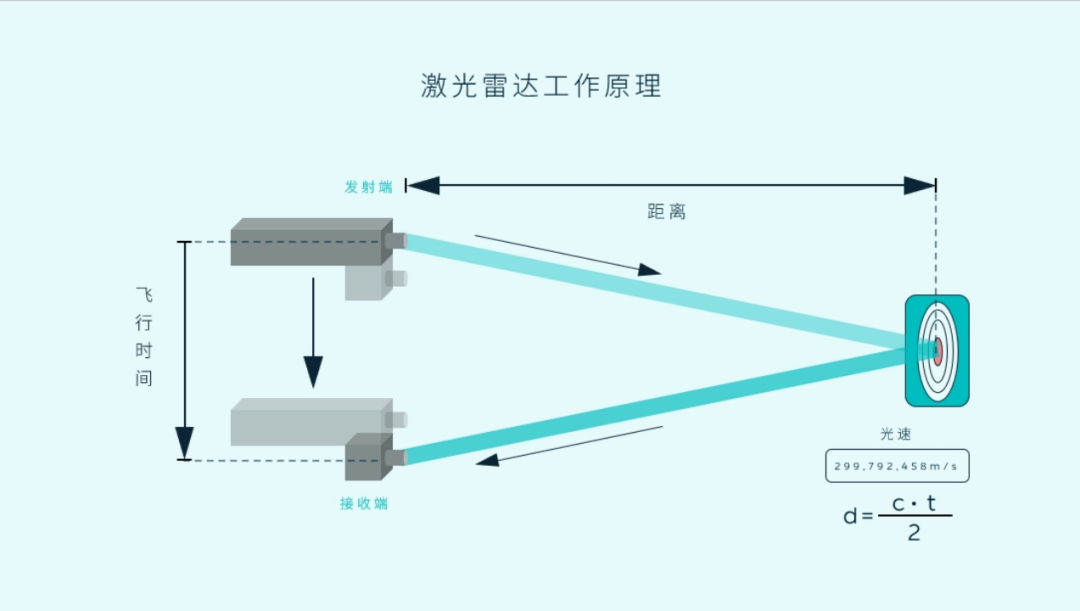

LiDAR is a scanning system that emits a laser beam. To answer your question about 500 meters: our light must travel 500 meters and then reflect back. Attenuation of the laser in air is not the main problem. However, even a laser beam, the 1550nm beam included, has a certain divergence angle. The outgoing light starts as a very fine point, but by the time it hits the object the spot is already relatively large. When the reflected light returns, the receiving aperture cannot collect all of it, so the farther the distance, the weaker the echo energy. To ensure stability at 500 meters, the emitted energy must be strong, the receiver sensitivity must be high, and the system must be stable and reliable.
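The relationship between distance and echo energy described above can be sketched with the textbook LiDAR range equation for a diffuse (Lambertian) target that is larger than the laser spot. This is only an illustration: the function name, the transmit power, the aperture size, and the efficiency and attenuation parameters are all assumed values, not ET7 specifications.

```python
import math


def received_power(p_tx_w, range_m, reflectivity, aperture_diameter_m,
                   system_efficiency=1.0, atmos_atten_per_m=0.0):
    """Echo power in watts collected from a Lambertian target.

    Textbook range equation for a target larger than the spot:
        P_r = P_t * rho * A_r / (pi * R^2) * eta * exp(-2 * alpha * R)
    All inputs here are illustrative assumptions.
    """
    if range_m <= 0:
        raise ValueError("range_m must be positive")
    aperture_area = math.pi * (aperture_diameter_m / 2.0) ** 2
    # Fraction of the diffusely reflected light that lands on the aperture.
    geometric = aperture_area / (math.pi * range_m ** 2)
    # Two-way atmospheric loss (out and back).
    atmosphere = math.exp(-2.0 * atmos_atten_per_m * range_m)
    return p_tx_w * reflectivity * geometric * system_efficiency * atmosphere


if __name__ == "__main__":
    # Assumed values: 1 kW peak pulse power, 10% reflectivity, 25 mm aperture.
    for r in (50, 250, 500):
        p = received_power(1000.0, r, 0.10, 0.025)
        print(f"{r:>4} m -> {p:.3e} W")
```

With atmospheric loss set to zero, doubling the distance cuts the collected echo power to one quarter, which is why both strong emission and a sensitive receiver are needed at long range.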

Beyond the concept of POD (Probability of Detection), the overall system design also matters, which is why we chose 1550nm at the time. Human eye safety and the technical route of the laser mean that the energy we emit can be the strongest in the industry, which solves the emission side. On the receiving side, there are technologies such as SPAD and APD. Among these routes we chose APD, which has the best stability and reliability, to ensure sensitivity and reliability. As for the back-end algorithms, we ensure that light emitted and reflected from up to 500 meters away, without significant diffusion and with relatively concentrated energy, is detected precisely and steadily. That is the general idea.

Q: Is the “fixed gaze” function mandatory? What is the angular resolution outside the ROI region?

Bai Jian: Firstly, the “fixed gaze” function can be turned on and off through system configuration. However, after extensive testing and verification, we believe that this feature is very important and demonstrates our product’s advantages. Therefore, our system defaults to keep this function on.

Secondly, the angular resolution of the “fixed gaze” area is 0.06° × 0.06°. The resolution outside the region is not fixed but has a gradual change. If we have to make a comparison with other popular lidars that use line numbers, we can see that our scanning beams have roughly 144 lines.

Q: How does NIO’s laser radar fusion with visual cameras compare to other competitors?

Bai Jian: Laser radar and vision must be deeply merged. Media friends might have heard of terms like front-end fusion and back-end fusion.

NIO has mastered several core technologies for smart electric vehicles through independent research and development, including autonomous driving technology.

For autonomous driving, we need to define the fusion method based on system-level requirements of the algorithm, autonomous or assisted driving. We also need to consider the system’s time synchronization requirement, frame alignment, and how to fit the scanning beams together.

We define all these conditions at the system level, then decompose them into various subsystems, including the lidar, to achieve their design goals. Once we have designed the system, we proceed to system-level debugging, including fusion, internal and external parameter calibration, and time alignment.

Q: How does the laser radar perform in foggy conditions?

Bai Jian: In foggy conditions, laser radar is less effective than millimeter-wave radar. Compared with millimeter-wave radar, which operates at 60 GHz or even 77 GHz, laser radar is less able to penetrate fog.

Q: In harsh car-use environments, where the user does not wash or take care of the car, and has limited understanding of the car, how stable is the laser radar? What tests have been done, and how much is the after-sale cost?


Bai Jian: We have conducted many tests on dirt resistance. The Lidar performs its own self-checks, and the autonomous driving system performs system-level self-checks against defined criteria. If spots or dirt cover a certain proportion of the Lidar window and the point cloud quality drops below what the system can tolerate, we remind the user that the Lidar window is dirty and should be cleaned.

We also prepare a cleaning kit for users, a sponge-like brush. Users can wipe it clean. There is no cost issue.

Another issue is that if the Lidar is installed in a lower position, such as in the bumper, gravel thrown up from the road may hit and damage it, leading to repairs. This problem is much milder for us, because the “periscope” position is at the top of the car, and very little gravel reaches that height. The “periscope” layout avoids one major type of damage.

Another type is scratching and bumping. Everyone knows that the left and right rearview mirrors and the bumpers are easily scratched, but the “periscope” position is relatively hard to scratch. The outer window of every Lidar has a hardened coating about 3-4 µm thick. If an extremely low-probability event does occur, such as a small pebble hitting the surface, our tests show that it does not affect the performance of the Lidar and no repair is needed. Users can use it with confidence. The risk here is relatively low.

Q: High-performance Lidar requires high computing power. In the past two years, the shortage of chips has been a problem that many automakers need to face and solve. How to deal with this problem in the future?

Bai Jian: Our computing power requirements are indeed high, but the computing power of NIO’s NT2 platform models is as high as 1016 TOPS, so there is no need to worry about this.

Regarding chip supply, we have taken many measures to ensure supply, which is also due to our deep involvement in Lidar development. We have led the design of circuit boards, and many chips have been considered in the selection stage. In some cases, once a temporary shortage occurs, we have contingency plans.

We see that the entire supply situation is gradually improving, much better than last year. All of the above have determined that our current Lidar production capacity is very good, and production capacity climbing is smooth.

Q: How is Lidar and camera perception allocated? Who has the higher decision-making level, or when the perceptions of the two sensors are different, who has the final decision?

Bai Jian: The algorithm model will independently process data from different sensors and then process them in the fusion module.

Q: What is the lifespan of the Lidar? Compared with the entire life cycle of the vehicle, does the Lidar retire early and need to be replaced midway?

Bai Jian: Every part we put on the car has quality standards, including a service life, and the Lidar is no exception. The Lidar has undergone rigorous durability testing. From the technical architecture of the system, it does not have very fragile parts that need to be retired early. The quality requirements of Lidar are the same as other parts of the vehicle.

Q: Given that NIO is now taking the route of multi-sensor fusion in visual perception, will there be a route for pure visual perception in the future?

Bai Jian: Not in the foreseeable future, as far as I can see. For at least five or six years, it will be difficult for 2D cameras to match the human eye in depth estimation, focusing, autofocus, and other capabilities.

We still need to rely on a fusion approach to approximate human eyes in a way that 1 + 1 > 2, allowing algorithms and computing power systems to approach the human brain infinitely, and we believe that only in this way can autonomous driving be solved.

Q: There are some LIDARs on the market that have a lot of lasers, and even up to 128 lasers are advertised. What are the differences in principles and pros and cons compared to NIO’s LIDAR?

Bai Jian: Whether a LIDAR can see clearly is related to resolution and, to a certain extent, to the number of lasers, but there is no fixed relationship between the two. With fewer lasers, high resolution can still be achieved by rotating the motor faster and scanning more quickly, so resolution is not tightly coupled to the number of lasers. Going back to the essentials, what we must focus on is resolution.

Secondly, it is highly probable that this 128-line LIDAR uses VCSELs, with 128 emitting points, that is, 128 lines. If one point fails, one line is missing, leaving 127 lines. This is more than simply losing one line: every frame of the point cloud would then be missing that line, and it might happen to be the one scanning a very critical element.

This is a very scary thing for perception. It is not that more points are better. We should say that not only is higher resolution better, but also higher reliability is better.

Q: What are the dimensions of the quality of laser beams? What is the difference between different wavelengths and laser beam quality? For example, at a distance of 200 meters, what are the divergence angles and spot sizes of the laser beams at 905nm and 1550nm?

Bai Jian: The two spot sizes are not exactly the same, which is also related to collimation design. What you just mentioned is the physical characteristics of the laser, and there is also collimation. In terms of physical characteristics, the spot size of 1550nm is more convergent, and in terms of collimation design, the collimation capability of this 1550nm LIDAR is still very strong, and the spot size is still very small, which is particularly helpful for “seeing accurately,” and the improvement in vehicle distance accuracy is also quite evident.

Q: Can you explain in detail the difficulties and key points of vehicle-mounted LIDAR in meeting vehicle regulations, as well as the quality assurance of the GEEK+’s LIDAR?

Bai Jian: Most of the LIDARs we are currently producing are semi-solid state and contain a certain number of mechanical components. Moreover, the working environment of the LIDAR is the same as that of a vehicle, which is a very harsh and constantly changing environment. To ensure the durability and reliability of the LIDAR, we have conducted rigorous tests of impact, vibration, high temperature, high humidity, and low temperature.

Q: What are the dimensions to judge the quality of a car-grade LiDAR and what specific parameters and functions need to be considered? Is there any way for an ordinary consumer to identify the quality of a LiDAR?

A: It is indeed difficult for consumers to judge. We mainly judge LiDAR by its ability to see far, see clearly, and see stably.

Q: Can a single LiDAR ensure the redundancy and demand for advanced autonomous driving detection?

A: The redundancy of the entire autonomous driving system is not completed solely by LiDAR. In the design of the whole NAD system, from algorithm, software to hardware, receiver level, all need to consider functional safety design.

For example, there are four chips on the NAD controller, which are two pairs of backups. If the LiDAR fails, the entire system can still rely on other aspects to achieve our safe operation goal, such as letting it park by the side or continue to drive on this road to a safe place.

We aim to ensure that if there is a problem with LiDAR, the system can still maintain our operation and achieve the goal of safety.

Q: Where will the LiDAR be used in NOP+ delivered in the second half of the year?

A: With the help of LiDAR, the detection distance for various targets will be stronger, it can see farther and more accurately, and it brings an overall improvement in forward perception ability. All functions that depend on forward perception can benefit from it.

In the daily use of users’ cars, the most direct and highest frequency experience improvement is that the acceleration and deceleration when following the car will be smoother, the car’s deceleration will be more timely and comfortable when a slow vehicle appears in front, and the timing and comfort of deceleration when being cut in by another vehicle will also be better. The overall acceleration and deceleration performance will be more like human drivers.

Q: Is the “staring” function of LiDAR something that needs to be set in advance, or can it be adjusted at any time to use on areas of interest to the driver?

A: LiDAR can adjust the area of “staring” in real-time, but our perception algorithm currently does not require frequent changes to it.

The “staring” function is for the autonomous driving system. The algorithm of autonomous driving, NAD’s algorithm, will control and adjust it as needed, but for safety considerations, there is currently no plan to open this function to drivers.

Q: How is the fusion perception of LiDAR, millimeter-wave radar and cameras realized? Does information perceived by different sensors have different priorities in the decision-making stage of autonomous driving? What is NIO’s strategy? What factors does LiDAR need to integrate? Is the current solution the best, or is there still room for improvement in this layout plan?

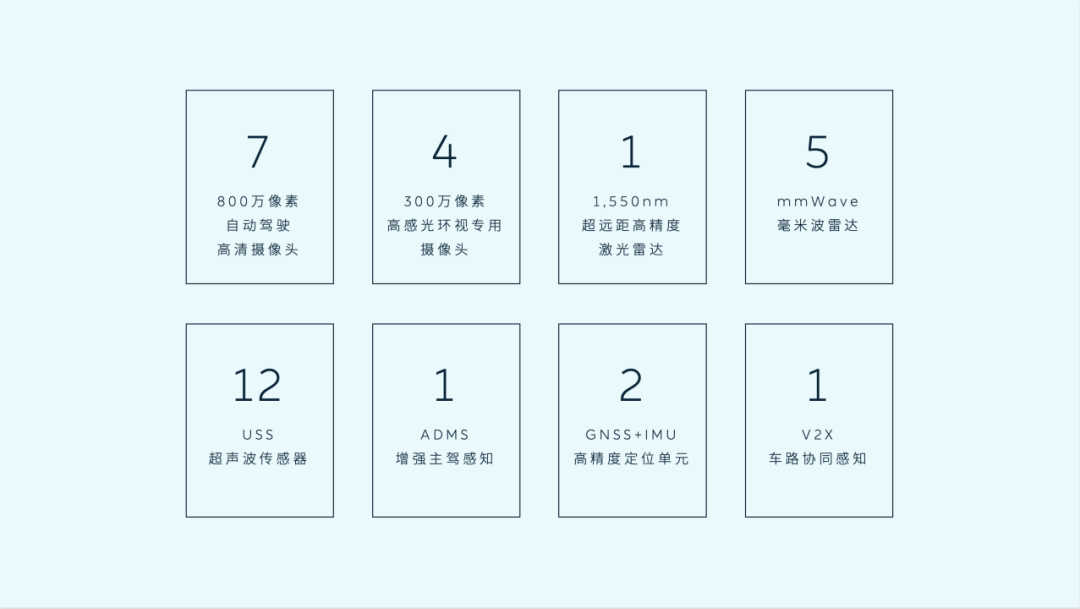

Bai Jian: To the first question: the highest-priority sensors in our autonomous driving system are the LIDAR and 11 cameras, including seven 8-megapixel high-definition cameras for autonomous driving and four 3-megapixel high-sensitivity surround-view cameras. We use them in almost all scenarios.

They are designed together at the system level, with time synchronization and pixel- and frame-level alignment defined since Day 1 of the system.

Is Aquila’s layout the most optimal at present?

We need to know that the entire autonomous driving algorithm is constantly evolving, and what we currently consider optimal, including what we have tested, may not be used consistently in the future.

From the trend of technological development, as AI and chip capabilities continue to improve, sensor capability will also become stronger. With this trend, it is possible to remove some sensors and add others.

NIO values innovation and research and development. We do not believe that this system will be unbeatable, and I think it will continue to evolve.

Q: Many automakers, including NIO, have chosen mixed solid-state LIDAR. What is the main reason for this, and what are the advantages over pure mechanical rotation? What is the current overall cost of LIDAR? Will it continue to decrease in the future?

Bai Jian: Currently, it is difficult for pure solid-state LIDAR to achieve both long range and high resolution, and the industry is working hard on this. Military radar systems offer a comparison: they include pulse-Doppler radar as well as passive and active phased arrays, and they went through a gradual evolution that, of course, took a long time.

I believe that on-vehicle LIDAR also needs to evolve gradually. For a long time to come, mixed solid-state will remain the producible option, because the main LIDAR has the highest performance requirements of all the radars on the vehicle.

Purely mechanical may slowly fade out due to cost and performance reasons, and currently, it is mostly on the line of mixed solid-state.

Regarding mass production, LIDAR is indeed expensive, but it is not as expensive as what our foreign competitors’ bosses have mentioned. Our Chinese supply chain and design capabilities are particularly adept at this. The cost of the laser radar on the NT2 that we have seen is indeed high, but with the scale of mass production, there is still considerable space and potential for the cost to decrease. We really look forward to a rhythm of continuous rapid cost optimization.

Q: When facing an oncoming car with LIDAR, will it interfere with our vehicle’s LIDAR? Are there any encryption and anti-interference algorithms in place?

Bai Jian: The LIDAR has a small receiving aperture, and its emitted beam is highly collimated. For this reason, in our tests so far, interference with oncoming vehicles has not been an issue. Our laser radar also has a certain encoding capability. Without it, interference may occur, because a pulse sent to a far distance may be followed by another pulse sent to a closer distance that returns earlier. Each pulse we send and receive has its own ID, which allows us to identify and distinguish them.
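The pulse-ID idea can be illustrated with a small sketch. It assumes, hypothetically, that each echo can be decoded into the ID of the pulse that produced it. The `Echo` type, the `ranges_from_echoes` function, and the timing values are invented for illustration and do not describe the actual encoding used in the ET7 LiDAR.

```python
from dataclasses import dataclass

SPEED_OF_LIGHT_M_S = 299_792_458.0


@dataclass(frozen=True)
class Echo:
    pulse_id: int          # ID decoded from the received pulse (hypothetical)
    arrival_time_s: float  # time the echo reached the receiver


def ranges_from_echoes(emitted, echoes):
    """Return {pulse_id: range in meters} for echoes that match a sent pulse.

    emitted maps pulse_id -> emission time in seconds. Echoes whose ID was
    never emitted (for example, light from another vehicle's LiDAR) are dropped.
    """
    ranges = {}
    for echo in echoes:
        emit_time = emitted.get(echo.pulse_id)
        if emit_time is None:
            continue  # foreign or corrupted pulse
        time_of_flight = echo.arrival_time_s - emit_time
        if time_of_flight <= 0:
            continue  # physically impossible, ignore
        ranges[echo.pulse_id] = SPEED_OF_LIGHT_M_S * time_of_flight / 2.0
    return ranges


if __name__ == "__main__":
    # Pulse 1 goes to a far target; pulse 2 is sent 1 microsecond later
    # to a near target, so its echo comes back first.
    emitted = {1: 0.0, 2: 1e-6}
    echoes = [
        Echo(2, 1e-6 + 2 * 30.0 / SPEED_OF_LIGHT_M_S),
        Echo(99, 1.5e-6),  # pulse from some other emitter
        Echo(1, 2 * 450.0 / SPEED_OF_LIGHT_M_S),
    ]
    for pulse_id, distance in sorted(ranges_from_echoes(emitted, echoes).items()):
        print(f"pulse {pulse_id}: {distance:.1f} m")
```

Matching by ID rather than by arrival order is what keeps the far and near returns from being confused in this sketch, and it also lets echoes with unknown IDs be discarded.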

Pure solid-state solutions require one emitter and one receiver for each point that needs to be scanned. Mixed solid-state solutions optimize this by using a rotating mechanism to direct the emitted light to different directions, allowing fewer emitters and receivers to be used for the same level of resolution. While solid-state laser radar is viewed as a trending and promising technology, it is still immature and not yet cost-effective for high-resolution requirements in the industry. The next five years will be crucial for its development and towards building a mature supply chain.

Regardless of the cost differences among various solutions, seeing far, seeing clearly, and seeing stably are the key factors to measure detection performance. As to the impact on production cycle, we conduct inspections, calibrations and aging tests on components of laser radar assembly lines during the production process. We also conduct external benchmarking and calibration tests after the vehicle is assembled to ensure the correctness and consistency of laser radar, cameras, and whole-vehicle systems in a 3D coordinate system.

## Why Automated Driving Needs LiDAR

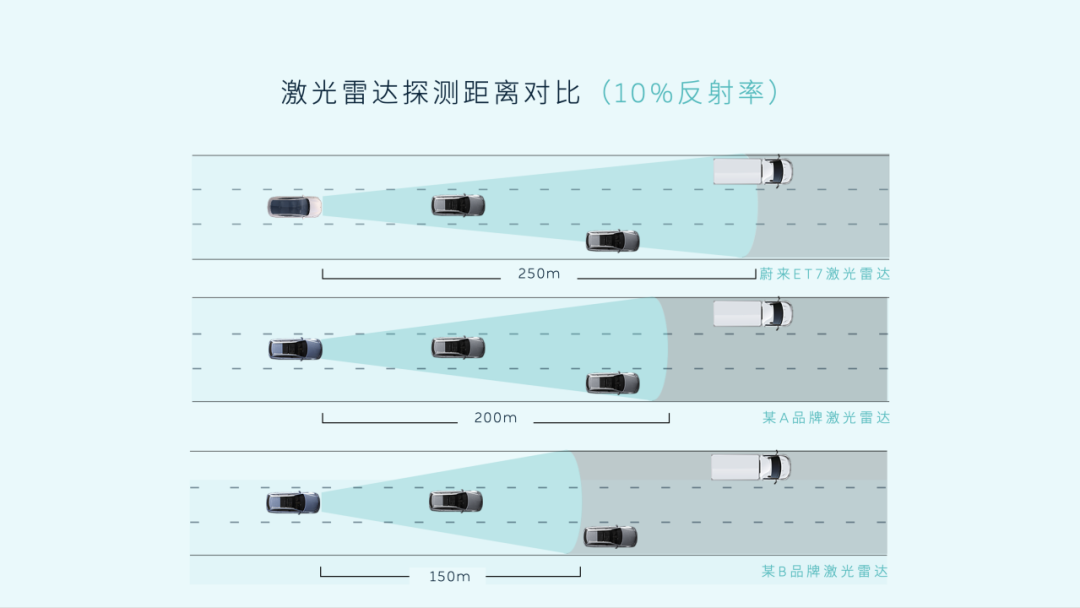

The NIO ET7 features the ultra-long-distance, high-precision LiDAR from Innovusion, which has a detection range of up to 500 meters and up to 250 meters at 10% reflectivity. It also has a 120° ultra-wide horizontal field of view and a high resolution of 0.06° x 0.06°. It is the world’s first 1550nm LiDAR that can be produced in large quantities.

1550nm: More Eye-friendly and Clearer Vision

The 1550nm laser is safer for the eyes than the 905nm laser, as the visible light wavelength range for the human eye is typically 380nm to 760nm.

The 1550nm laser is beyond the human eye’s recognition range and cannot focus on the retina. Most of the laser is absorbed by water as it passes through the eye, so it hardly poses a risk to the human eye. In contrast, the 905nm laser is closer to the visible light wavelength and can easily focus on the human retina.

To protect the eyes, the maximum laser power output for a 905nm LiDAR is typically lower. A 1550nm LiDAR, which has better eye safety, allows for higher output power, thereby achieving longer detection range.

In addition, the 1550nm laser has strong anti-interference ability, better beam alignment, and higher brightness, making the emission and reception of the laser more efficient in the precise identification of objects.

The beam spot of the 1550nm laser is very small, with a diameter only one-fourth that of the 905nm laser at a distance of 100 meters. When detecting pedestrians at a distance of 100 meters, it receives four pulse points in a row and seven pulse points in a column, providing a clear picture of the pedestrian’s posture.

See Farther: Improve Driving Safety by Detecting Obstacles Earlier

The farthest detection distance of the laser radar of NIO ET7 can reach 500 meters, and the detection distance under the 10% reflection rate standard can reach 250 meters, leading the industry.

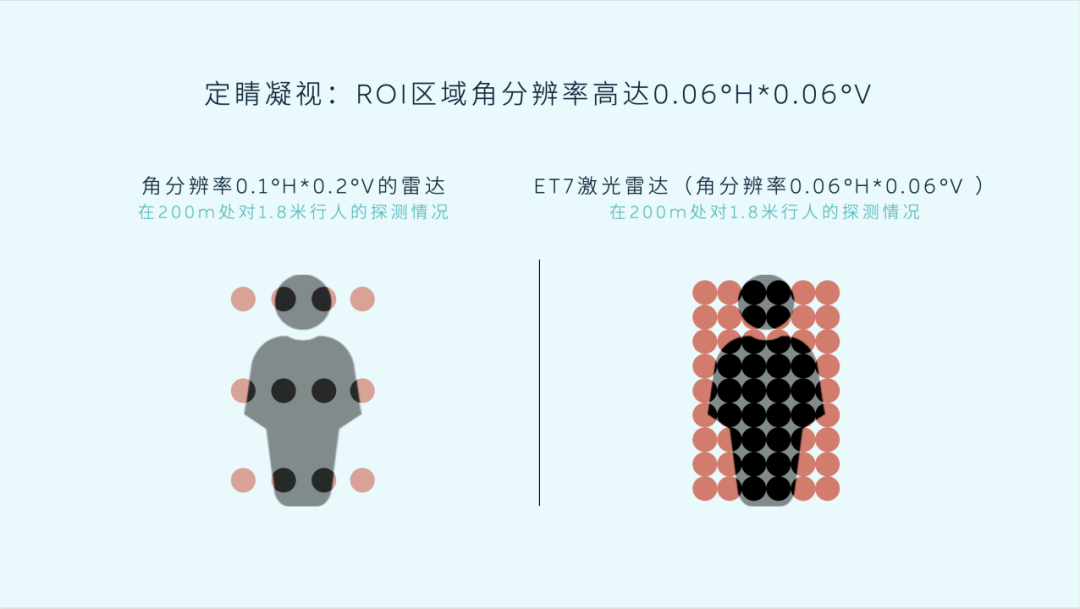

Seeing clearly: “Stare” function achieves ultra-high resolution of 0.06° * 0.06°, achieving laser radar at the image level.

For every change of 0.01° in angle resolution, at a distance of 200 meters, the distance between adjacent points is approximately 3.5cm. Taking a laser radar with an angle resolution of 0.1° as an example, the distance between two adjacent points it receives is 35cm. For target objects such as pedestrians, bicycles, and motorcycles, the point cloud is too sparse, posing a great challenge to the algorithm.
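The spacing figures above follow from simple trigonometry: the gap between adjacent points is the range multiplied by the tangent of the angular resolution. A minimal sketch (the function name is illustrative):

```python
import math


def point_spacing_m(angular_resolution_deg, range_m):
    """Lateral distance in meters between adjacent points at a given range."""
    return range_m * math.tan(math.radians(angular_resolution_deg))


if __name__ == "__main__":
    for resolution_deg in (0.01, 0.06, 0.1):
        spacing_cm = point_spacing_m(resolution_deg, 200.0) * 100.0
        print(f"{resolution_deg} deg at 200 m -> {spacing_cm:.1f} cm")
```

At these small angles the spacing scales almost linearly with angular resolution, so a 0.06° resolution gives roughly six times the 0.01° spacing at the same distance.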

1. Strategic densification of critical areas of the field of view improves the effectiveness of data utilization.

The ET7 laser radar has a “Stare” function that flexibly zooms in much like a bionic human eye and generates a high-density point cloud with an angle resolution of up to 0.06° * 0.06° in the critical visual area of driving, making the targets in that area more visible.

The Stare function covers a range of 25°H (horizontal) * 9.6°V (vertical). At a distance of 50 meters, this area covers more than 10 lanes horizontally and more than an entire building vertically, fully covering the driving area ahead.

The Stare function strategically selects and densifies critical areas of the field of view, rather than covering the whole area densely with points. The resolution of any area can therefore be increased as needed at any time, avoiding wasted computing resources, reducing power consumption and bandwidth, and enhancing the effectiveness of data utilization. This reduces pressure on the algorithm and makes it more conducive to the operation of the entire vehicle perception system, thus really putting the “points” to use.

2. Dynamic adjustment of ROI areas ensures safe driving.

Dynamic adjustment of ROI areas can effectively detect and track objects such as vehicles and pedestrians, even under conditions such as large curvatures or uphill and downhill slopes, allowing for an early discovery of distant dangerous targets.

Early detection allows for earlier deceleration and warning to users, thereby improving the reliability and safety of automated driving.

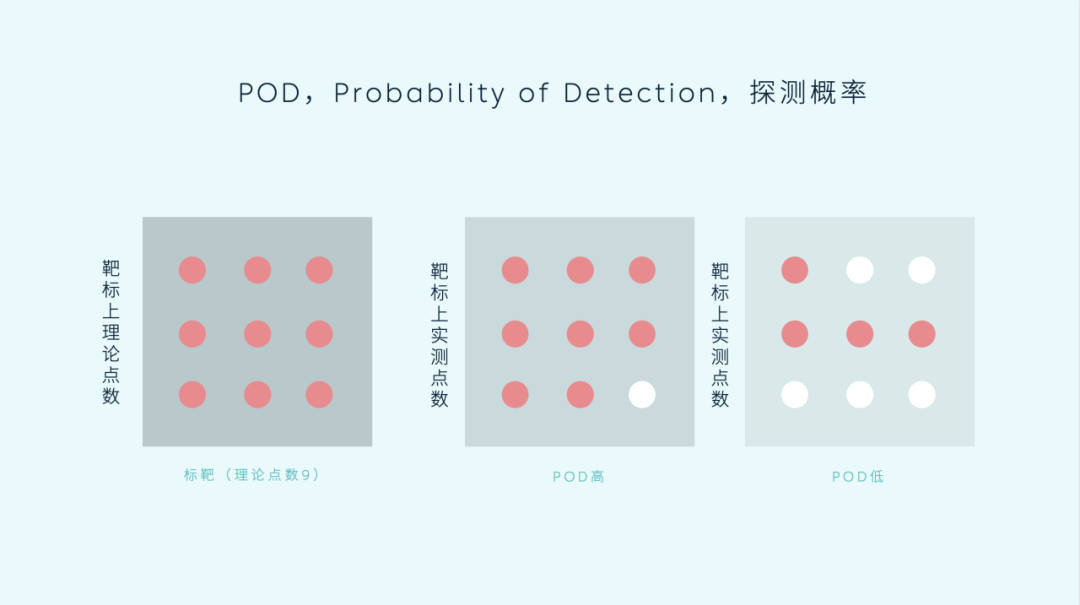

Seeing steadily: POD is an important indicator of laser radar performance.

POD (probability of detection) is generally the ratio of the number of effective points detected to the number of laser beams emitted (the theoretical point count), measured continuously over more than 100 frames.

POD reflects the ability and stability of a lidar to receive return points, which is an important indicator of lidar performance.

The lidar carried by NIO has excellent detection ability, with a detection probability of over 90% for objects with 10% reflectivity at a distance of 250m.

Higher POD can make the vehicle perceive target objects more clearly and reduce algorithmic pressure, achieve a longer effective sensing distance, and enhance the overall safety of the autonomous driving system.
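As a rough illustration of the POD definition, the sketch below divides the valid returns accumulated over a run of frames by the number of points that should theoretically have come back. The function name and the per-frame counts are hypothetical, not measured ET7 data.

```python
def probability_of_detection(detected_per_frame, theoretical_per_frame):
    """POD = total valid returns / total theoretical points over all frames.

    detected_per_frame: valid return counts on the target, one per frame.
    theoretical_per_frame: beams aimed at the target in each frame.
    """
    if theoretical_per_frame <= 0:
        raise ValueError("theoretical_per_frame must be positive")
    if not detected_per_frame:
        raise ValueError("at least one frame is required")
    total_expected = theoretical_per_frame * len(detected_per_frame)
    return sum(detected_per_frame) / total_expected


if __name__ == "__main__":
    # Hypothetical run: 100 frames, 20 beams on the target per frame,
    # with 17 to 19 valid returns in each frame.
    frames = [17, 18, 19, 18] * 25
    pod = probability_of_detection(frames, 20)
    print(f"POD over {len(frames)} frames: {pod:.1%}")
```

Averaging over many frames, rather than judging a single frame, is what makes POD a measure of how stably the target is seen.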

Lidar Durability Testing

Without a unified industry standard, NIO and Innovusion jointly developed reliability standards for the ET7 lidar and designed the test conditions, test items, performance criteria, and test requirements for the DV/PV tests.

- Impact test: the product can withstand a 50G experiment

- Hot and cold shock test: endures over 100 cycles from extreme heat (85℃) to extreme cold (-40℃)

- Temperature and humidity alternation test + frosting test: endures over 10 cycles of rapid cooling down to -10℃ in a temperature and humidity alternation environment

- Environmental test: endures corrosion tests of harmful gases such as SO2, NO2, CO2, and Cl2; and chemical reagent tests of windshield cleaning solution, car wash chemicals, glass cleaner, contact spray, caffeinated and sugary drinks, etc.

- Light exposure test: simulating the effects of sunlight radiation and ultraviolet rays on components, runs continuously for 2000-3000 hours under the strongest light exposure (> the intensity of sunlight in Turpan in summer)

These are all the performance characteristics of the NIO ET7 lidar.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.