You Are My Eyes

Article by Zheng Wen; Edited by Zhou Changxian

“In front of me, black is not black, and the white you speak of is not the white of snow.” The song “You Are My Eyes” by blind singer Xiao Huangqi plays in the headphones, passionate and powerful.

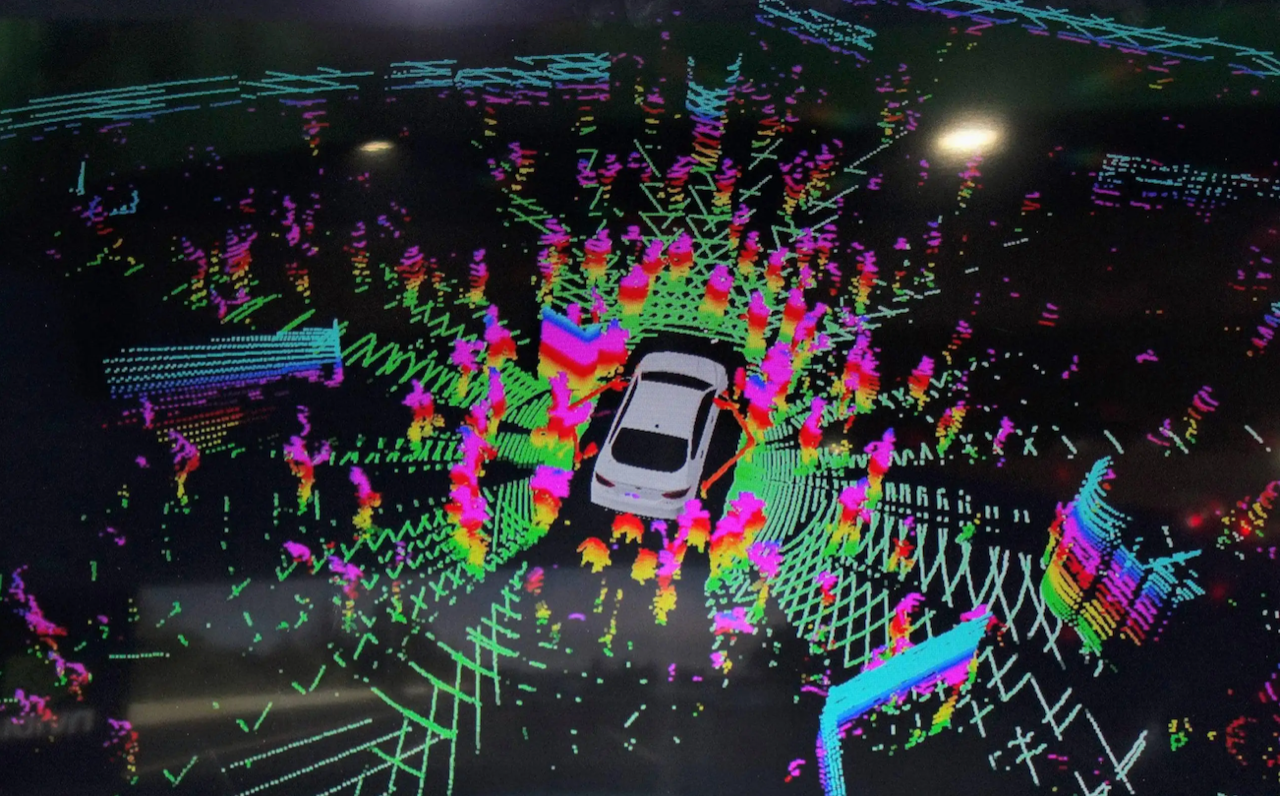

On the computer screen, a video shows a highly advanced autonomous vehicle driving through a crowded city. It changes lanes and overtakes other vehicles on its own, recognizes traffic lights, makes unprotected left turns, and gets through narrow roads by using the emergency lane… It drives with the skill and confidence of a veteran, and pedestrians outside would find it hard to tell that the car is driving itself.

On closer inspection, however, one may notice that the roofs, fronts, and rears of these vehicles are likely to differ significantly from those of conventional models. The laser radars (LiDARs) installed there act as the "eyes" of these smart vehicles. They look like strange, cute creatures, yet they are effective at letting the vehicles see through the darkness.

High-level autonomous driving involves three steps: perceiving the environment, planning a path, and controlling the vehicle. Of these, perception is the most challenging.

Why is perception so difficult? The human eye offers some clues. People have long regarded the brain as the most important human organ, but few realize that the eye accounts for more than 65% of the brain's "memory". The information it perceives is not only abundant but also three-dimensional and dynamic. Replicating that capability in perception hardware is correspondingly difficult.

How best to match perception hardware to high-level intelligent driving is still being explored and optimized. Even LiDAR's current status as a must-have for high-level intelligent driving was not reached overnight: the debate over whether autonomous driving needs LiDAR began in spring 2018 and is still going on in winter 2021.

Visual perception works by marking local features and matching them against a massive database to determine what an object is as a whole. Although this imitates how the human brain recognizes objects, a camera image does not by itself reveal distance. To solve this problem, there are two main approaches to recognizing objects and measuring distance with cameras alone, without radar:

- Monocular ranging

This approach recognizes the object and its surroundings, then estimates distance from the camera's focal length and the ratio between the object's apparent size and its known size. It has the advantages of low hardware requirements and a long service life. However, it depends heavily on the object-recognition algorithm and needs highly accurate prior data, such as vehicle heights; otherwise, the error may be as high as 20%-30%. Mobileye and similar solutions have an error rate of 3%-15%.
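As a rough illustration of monocular ranging, here is a minimal sketch assuming an ideal pinhole camera: once an object is recognized and given a prior real-world height, similar triangles give distance = focal length × real height / pixel height. The focal length and the 1.5 m car-height prior are hypothetical values, and production systems such as Mobileye's are far more elaborate. The sketch shows why recognition accuracy matters: an error in the assumed height passes straight through to the distance.

```python
def monocular_distance(focal_px: float, real_height_m: float, pixel_height: float) -> float:
    """Estimate distance (m) to an object with an ideal pinhole camera model.

    Similar triangles: pixel_height / focal_px = real_height_m / distance.
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_px * real_height_m / pixel_height


if __name__ == "__main__":
    FOCAL_PX = 1000.0      # hypothetical focal length, in pixels
    ASSUMED_HEIGHT = 1.5   # hypothetical height prior for a "car" class, in metres
    observed_px = 50.0     # the car appears 50 px tall in the image

    estimate = monocular_distance(FOCAL_PX, ASSUMED_HEIGHT, observed_px)
    # If the vehicle is really 1.8 m tall (e.g. an SUV recognized as a sedan),
    # the true distance is larger; the relative error equals the height error.
    actual = monocular_distance(FOCAL_PX, 1.8, observed_px)
    print(f"estimate {estimate:.1f} m, actual {actual:.1f} m, "
          f"error {abs(estimate - actual) / actual:.0%}")
```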

- Binocular stereoscopic ranging

This approach uses the disparity between images from multiple cameras viewing the scene from different positions to calculate distance by proportion. It does not rely on an image database, but it requires real-time matching, high computing power, and high hardware cost. Errors caused by lens manufacturing, installation, and vibration while driving generally reach 5%, which at long range can mean errors on the order of 10 meters.
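The stereo case can be sketched with the standard rectified-stereo relation Z = f·B/d (focal length f in pixels, baseline B, disparity d). Differentiating gives a depth error of roughly Z²/(f·B) per pixel of disparity error, which is why a small matching or calibration error grows into a large distance error far away. The focal length, baseline, and quarter-pixel disparity error below are hypothetical values, not figures from any particular product.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_error_px: float) -> float:
    """First-order depth error: |dZ/dd| * delta_d = Z^2 / (f * B) * delta_d."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


if __name__ == "__main__":
    F_PX, BASELINE_M, DISP_ERR_PX = 1000.0, 0.3, 0.25  # hypothetical camera setup
    for z in (10.0, 50.0, 100.0):
        d = F_PX * BASELINE_M / z  # disparity the object would produce at depth z
        err = depth_error(F_PX, BASELINE_M, z, DISP_ERR_PX)
        print(f"{z:5.0f} m: disparity {d:5.1f} px, error +/-{err:.2f} m ({err / z:.1%})")
```

Because the error term grows with Z², doubling the distance roughly quadruples the absolute error, while a wider baseline reduces it at the cost of a bulkier, harder-to-calibrate camera rig.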

In practical applications, visual perception has many weaknesses:

- Visual sensors can be blinded by weather and environmental conditions such as glare from backlighting or sandstorms.
- Medium- and low-resolution vision systems may be slow to recognize small objects such as speed bumps, small animals, and traffic cones.
- Irregular objects the system was never trained on, such as road debris or tires dropped by other vehicles, may be missed because they cannot be matched.
- Lateral distance measurements can be inaccurate.
- False objects can be detected as real: a human figure projected onto the road can cause a Tesla Model X under test to brake automatically as if a person were really there.
- False lane lines can be detected as real: if brighter images of lane markings are projected onto the road, the test vehicle will follow the fake lines.

Besides cameras, some vision-based perception schemes integrate or embed millimeter-wave radar and ultrasonic sensors so that they complement one another and cover blind spots. Across the endless corner cases of advanced autonomous driving, however, both cameras and millimeter-wave radar can fail.

For example, at short range, cameras may capture only part of a vehicle, while millimeter-wave radar is prone to echo interference, so neither reliably recognizes nearby targets. In heavy night-time traffic, millimeter-wave radar is prone to multipath reflection interference, and cameras may also fail to identify objects accurately.

The automotive industry's latest general answer to these scenarios is to pair the near-field perception of LiDAR with cameras and millimeter-wave radar so that each covers the others' weaknesses. The sensors are strongly complementary: LiDAR offers high-precision perception, cameras provide rich semantic information, and millimeter-wave radar penetrates well and still delivers stable perception output in harsh weather.
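One way to picture this complementarity is a textbook fusion rule: inverse-variance weighting of independent range estimates. The sketch below is only an illustration of that general idea, not a description of any production fusion stack, and the per-sensor readings and standard deviations are hypothetical. A precise sensor (here LiDAR) dominates the result, and if one sensor drops out, for instance a camera blinded by glare, the fused estimate degrades gracefully instead of failing.

```python
from typing import Dict, Optional, Tuple

# (range in metres, or None if the sensor has no valid reading; std dev in metres)
Measurement = Tuple[Optional[float], float]


def fuse_ranges(measurements: Dict[str, Measurement]) -> Tuple[float, float]:
    """Fuse independent range estimates by inverse-variance weighting.

    Each valid estimate is weighted by 1 / sigma^2. Returns (fused_range, fused_sigma).
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for value, sigma in measurements.values():
        if value is None:
            continue  # sensor unavailable (e.g. blinded): leave it out of the fusion
        weight = 1.0 / sigma ** 2
        total_weight += weight
        weighted_sum += weight * value
    if total_weight == 0.0:
        raise ValueError("no valid measurements to fuse")
    return weighted_sum / total_weight, (1.0 / total_weight) ** 0.5


if __name__ == "__main__":
    # Hypothetical readings of the same object from three sensors.
    readings: Dict[str, Measurement] = {
        "lidar": (20.05, 0.05),
        "camera": (21.0, 1.0),
        "mmwave_radar": (20.2, 0.3),
    }
    print("all sensors:", fuse_ranges(readings))
    readings["camera"] = (None, 1.0)  # camera blinded by glare
    print("without camera:", fuse_ranges(readings))
```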

Today, the basic consensus is that LiDAR, with its strong target-detection capability, is an indispensable part of the perception system for autonomous driving above L3.

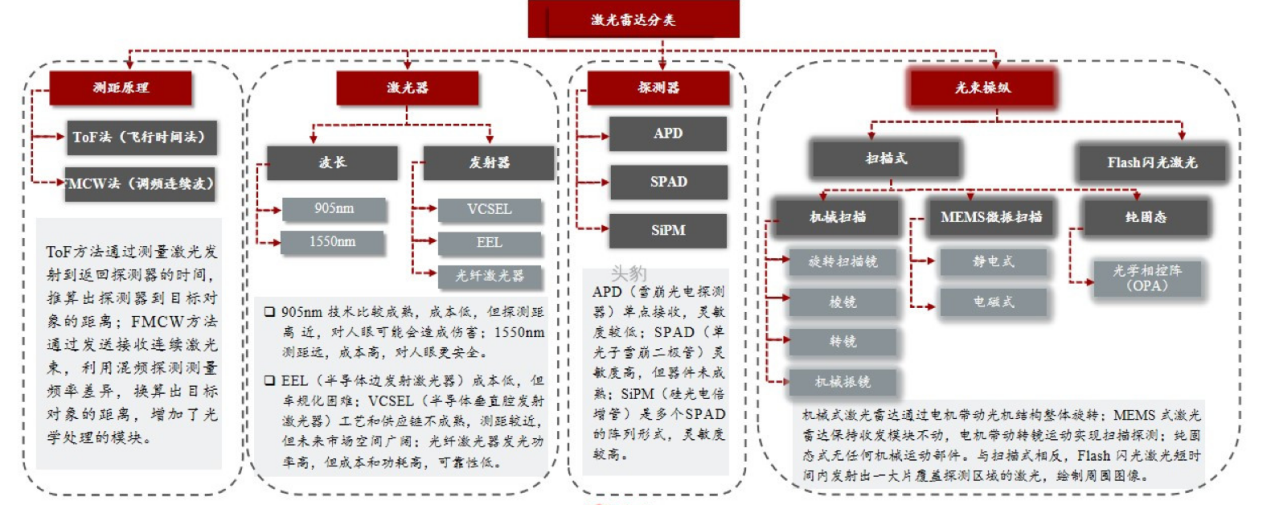

LiDAR itself also comes in many types. By scanning method, it can be divided into mechanical, hybrid solid-state, and solid-state LiDAR, each of which can be further subdivided.

The general view is that solid-state LiDAR is the future and the hybrid solid-state route is the present, and most LiDARs announced for mass production and vehicle installation are hybrid solid-state. Even so, it is hard to say which technical route is superior, and because they serve different application scenarios, these LiDAR systems are not purely competitors.

Earlier, in a conversation at the AutocarMax Mobility Forum, Ju Xueming, founder of Liangdao Intelligence, likewise pointed out that the LiDAR market is in a period of diverse technical routes. No single route needs to eliminate the others; in other words, different technical routes will coexist.

Different LiDAR technologies each have their own strengths and weaknesses and need to be combined. Some are suited to medium-to-long or even ultra-long range, while others lean toward medium-to-short or even very short range. Given the varied functional requirements of future autonomous driving, all of them have room for application.

“In our understanding, Flash in its current state may have good room for deployment in some medium- and short-range applications, while high-resolution, cost-effective MEMS-based products are currently better suited to the forward-facing direction. The two complement each other.” In Ju Xueming’s view, scene coverage is very important.

Just over a month after this statement, on May 13th, Liangdao Intelligence released LDSense Satellite, China's first pure solid-state Flash lateral LiDAR. The product is scheduled to reach start of production (SOP) in the second half of 2023.

As Ju Xueming emphasized, this lateral supplementary product was developed in response to three very clear pain points in current driving scenarios:

1. Identifying emergency cut-ins earlier to reduce collisions;
2. Widening the vertical field of view to better detect and perceive low objects;
3. Better identifying static objects around the road to achieve more accurate lane-level vehicle positioning.

Scenario 1: Early identification of emergency cut-ins to effectively reduce collisions.

Currently, the mainstream forward-facing LiDAR is mounted at the front of the car, with a horizontal field of view (H-FOV) of 90° to 120°. Calculating with a 120° H-FOV, a vehicle making an emergency cut-in can only be detected once it is more than 3.6 m ahead of the front of the car, which can lead to collisions.
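The geometry behind this blind zone can be sketched with a flat 2D model, assuming a sensor at the origin facing forward with a symmetric H-FOV: a point at lateral offset y only enters the field of view once it is more than y / tan(H-FOV/2) ahead. The article does not state the mounting position or lateral offset behind its 3.6 m figure, so the 2.0 m offset below is a hypothetical value and the printed numbers are illustrative only; what the sketch shows is the trend that a wider H-FOV shrinks the blind zone, reaching zero at 180°.

```python
import math


def min_detection_distance(h_fov_deg: float, lateral_offset_m: float) -> float:
    """Distance ahead of the sensor beyond which a point at the given lateral
    offset enters a symmetric horizontal field of view (flat 2D model)."""
    half_fov = math.radians(h_fov_deg) / 2.0
    if half_fov >= math.pi / 2.0 - 1e-12:
        return 0.0  # a 180-degree (or wider) FOV already sees alongside the sensor
    return abs(lateral_offset_m) / math.tan(half_fov)


if __name__ == "__main__":
    OFFSET_M = 2.0  # hypothetical lateral offset of the cutting-in vehicle's near side
    for fov in (90.0, 120.0, 180.0):
        dist = min_detection_distance(fov, OFFSET_M)
        print(f"H-FOV {fov:.0f} deg: detectable beyond {dist:.2f} m")
```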

Installing lateral supplementary LiDAR extends perception to the full 180° in front of the car, so the moment another vehicle starts to cut in can be detected.

With one forward-facing and two lateral LiDARs, the perception range can be expanded further to more than 270°, enabling more comfortable path planning for autonomous driving.
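How much of the full circle a multi-LiDAR layout covers can be estimated by sampling headings and checking whether any sensor's horizontal FOV contains each one. The sketch below does this at 1° resolution; the mounting yaws and the 120° H-FOV assumed for every sensor are hypothetical, since the article does not give the lateral unit's horizontal FOV.

```python
from typing import Iterable, Tuple


def wrap_deg(angle: float) -> float:
    """Wrap an angle in degrees to the range [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def total_coverage_deg(sensors: Iterable[Tuple[float, float]], step_deg: float = 1.0) -> float:
    """Approximate union of horizontal coverage, in degrees.

    sensors: (mounting yaw in degrees, horizontal FOV in degrees) per LiDAR,
    with yaw 0 pointing straight ahead. Samples the midpoint of each step.
    """
    sensors = list(sensors)
    n_steps = int(round(360.0 / step_deg))
    covered = 0
    for k in range(n_steps):
        heading = -180.0 + (k + 0.5) * step_deg
        if any(abs(wrap_deg(heading - yaw)) <= fov / 2.0 for yaw, fov in sensors):
            covered += 1
    return covered * step_deg


if __name__ == "__main__":
    front = (0.0, 120.0)   # hypothetical forward-facing LiDAR
    left = (90.0, 120.0)   # hypothetical lateral LiDARs, one per side
    right = (-90.0, 120.0)
    print("front only:", total_coverage_deg([front]))
    print("1 + 2 layout:", total_coverage_deg([front, left, right]))
```

Sampling is cruder than merging angular intervals exactly, but it handles sectors that wrap past ±180° without special cases.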

Scenario 2: Increase the vertical field of view and enhance detection of low objects.

Objects that have just fallen into the lane ahead, or cats and dogs that suddenly appear, are easily filtered out because camera-based perception has limited redundancy and the algorithms have limited training data for such extreme conditions. Cameras also cannot precisely locate curbs and lane markings, and the limited vertical field of view (V-FOV) of the front-facing main LiDAR leaves blind spots ranging from 3 m to over 7 m, depending on mounting height.

The key feature of the lateral supplementary LiDAR LDSense Satellite is its large vertical field of view of more than 75°. This lets it fill in the point cloud in the front-facing LiDAR's near-field blind spots and detect low targets such as curbs, speed bumps, cats and dogs, and nearby lane markings.

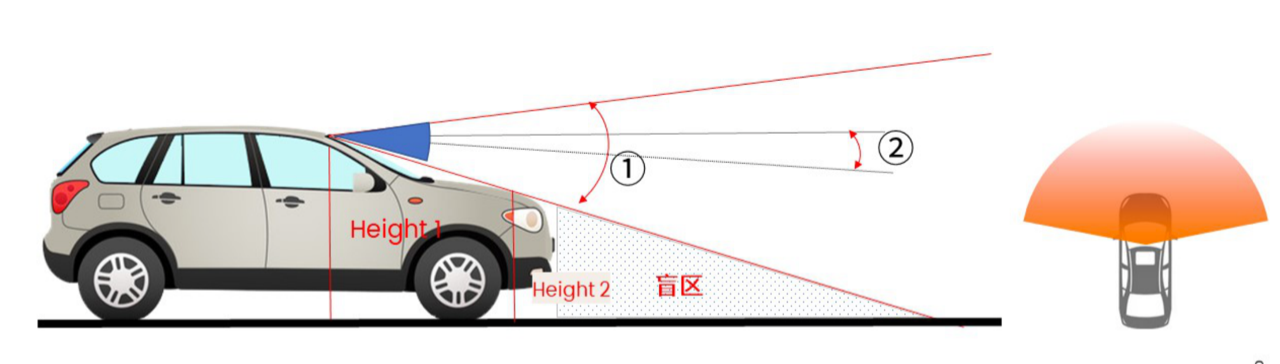

The size of the blind spot depends on several factors: the vertical field of view, the mounting height of the LiDAR (Height1), the height of the front hood (Height2), and whether the LiDAR's tilt angle can be adjusted.

In the 1+2 solutions currently on the market (one forward-facing and two side LiDARs), the side LiDARs are not designed specifically to fill blind spots, and their vertical FOV is generally only 25°, which cannot meet blind-spot coverage requirements.

As the example in the figure shows, a long-range LiDAR with a 25° vertical FOV mounted on the car's roof leaves a large blind spot at low heights, and even when it is mounted at the headlamp position, the blind spot remains significant. However, if a lateral LiDAR with a 90° vertical FOV is installed at the headlamp or side fender position, the low-height blind spot almost disappears.
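The effect of mounting height and vertical FOV can be approximated with simple trigonometry: if the lowest beam points α degrees below horizontal, a sensor at height h cannot see the ground (or a target of height t) closer than (h − t) / tan(α). This minimal sketch assumes flat ground, a V-FOV symmetric about the sensor axis, an optional downward tilt, and ignores occlusion by the hood (Height2). The roof and headlamp heights of 1.8 m and 0.7 m are hypothetical; with them, the 25° cases come out at roughly 8 m and 3 m, and the 90° case at about 0.7 m.

```python
import math


def ground_blind_distance(mount_height_m: float, v_fov_deg: float,
                          tilt_down_deg: float = 0.0, target_height_m: float = 0.0) -> float:
    """Horizontal distance within which the lowest beam passes over a target.

    Simplified model: flat ground, V-FOV symmetric about the sensor axis,
    axis tilted down by tilt_down_deg, no occlusion by the hood.
    """
    if target_height_m >= mount_height_m:
        raise ValueError("target must be lower than the sensor")
    lowest_ray_deg = v_fov_deg / 2.0 + tilt_down_deg  # lowest beam, degrees below horizontal
    if lowest_ray_deg <= 0.0:
        return math.inf  # lowest beam never descends, so low targets are never hit
    if lowest_ray_deg >= 90.0:
        return 0.0  # beams reach straight down: no blind ring
    return (mount_height_m - target_height_m) / math.tan(math.radians(lowest_ray_deg))


if __name__ == "__main__":
    # Hypothetical mounting heights: roof 1.8 m, headlamp 0.7 m.
    cases = [("roof, 25 deg V-FOV", 1.8, 25.0),
             ("headlamp, 25 deg V-FOV", 0.7, 25.0),
             ("headlamp, 90 deg V-FOV", 0.7, 90.0)]
    for label, height, vfov in cases:
        print(f"{label}: blind within {ground_blind_distance(height, vfov):.2f} m")
```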

Taking a 10 cm-high curb as an example, the lateral supplementary LiDAR can detect it in the near field from 0.35 m to 15 m, and at 0.35 m it achieves ultra-high-resolution detection with 30 rows of points on the curb.
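Why the row count is highest up close can be sketched geometrically: the number of scan rows that land on a vertical target is roughly the vertical angle the target subtends divided by the sensor's vertical angular resolution. The sensor height and 0.2° resolution below are hypothetical, and the sketch assumes uniform row spacing and that the target lies inside the vertical FOV; it is not a model of LDSense Satellite's actual scan pattern.

```python
import math


def rows_on_target(distance_m: float, sensor_height_m: float,
                   target_bottom_m: float, target_top_m: float, v_res_deg: float) -> int:
    """Approximate number of scan rows hitting a vertical target face.

    Assumes uniformly spaced rows and that the whole target lies within the V-FOV.
    """
    top = math.degrees(math.atan2(target_top_m - sensor_height_m, distance_m))
    bottom = math.degrees(math.atan2(target_bottom_m - sensor_height_m, distance_m))
    return int((top - bottom) / v_res_deg)


if __name__ == "__main__":
    SENSOR_HEIGHT_M = 0.5  # hypothetical mounting height
    V_RES_DEG = 0.2        # hypothetical vertical angular resolution
    for d in (0.35, 1.0, 5.0, 15.0):
        rows = rows_on_target(d, SENSOR_HEIGHT_M, 0.0, 0.10, V_RES_DEG)  # 10 cm curb
        print(f"{d:5.2f} m: about {rows} rows on the curb")
```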

Scenario 3: Enhance recognition of static objects around the road and achieve more accurate lane-level positioning.

On road sections or at intersections where lane markings are missing or blurred, camera-based systems may plan trajectories that fluctuate significantly, which hurts the driving experience. A side-mounted LiDAR installed at the top position, however, can not only perceive targets in adjacent lanes but also obtain accurate relative positions by detecting static road signs around the road; combined with high-precision maps, this enables precise lane-level positioning.

In addition, the lateral auxiliary LiDAR can improve the performance of many functions. In forward detection, these include AEB front (pedestrian/cyclist), AEB crossing, ACC S&G/i-ACC adaptive cruise control, ICA intelligent cruise assist, and TJA traffic jam assist; in rearward detection, LCAS/ALC/AES, RCTA rear cross-traffic alert, and DOW door opening warning; and in parking, APA/RPA, AVP, and AVM, among others. Adding LiDAR as a supplementary sensor to a 5V5R (five-camera, five-radar) setup improves the performance and reliability of perception and thereby the safety of the system.

As for the choice of technical route, Flash was selected after weighing several factors. It is a pure solid-state design that emits and receives light through chip-based devices with no moving parts. It offers high reliability and a long service life and is easy to manufacture, which makes fully automated production easier to achieve and results in more consistent products.

Although Flash LiDAR is limited by the low power density of its VCSEL laser, which keeps its detection range short, its coverage of 30 m to 50 m is enough to meet blind-spot needs. Flash technology thus complements the primary forward-facing LiDAR.
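The link between power density and range can be sketched with a simplified link budget. For a diffuse target larger than the beam footprint, received power falls off roughly as 1/R², so for a fixed detection threshold the maximum range scales with the square root of the power delivered per measurement point. A scanning LiDAR concentrates each pulse on one point, while a Flash unit spreads each pulse across its whole field at once. All numbers below are hypothetical, and the model ignores eye-safety limits, pulse accumulation, and detector differences.

```python
import math


def scaled_max_range(ref_range_m: float, ref_power: float, power: float) -> float:
    """Scale a reference maximum range to a different per-point transmit power.

    Simplified link budget for an extended diffuse target: received power ~ P_t / R^2,
    so for a fixed detection threshold R_max ~ sqrt(P_t).
    """
    if ref_power <= 0 or power <= 0:
        raise ValueError("powers must be positive")
    return ref_range_m * math.sqrt(power / ref_power)


if __name__ == "__main__":
    SCAN_RANGE_M = 200.0       # hypothetical range of a scanning LiDAR
    SCAN_POWER = 1.0           # normalised peak power per measured point
    FLASH_TOTAL_POWER = 100.0  # hypothetical: flash emitter is 100x stronger overall...
    FLASH_PIXELS = 2500        # ...but illuminates 2,500 pixels with every pulse
    per_pixel = FLASH_TOTAL_POWER / FLASH_PIXELS
    flash_range = scaled_max_range(SCAN_RANGE_M, SCAN_POWER, per_pixel)
    print(f"per-pixel power {per_pixel:.3f}, estimated flash range {flash_range:.0f} m")
```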

Lateral auxiliary LiDAR was born of practical pain points and needs, and with LiDAR's help, perception for autonomous driving is becoming ever more accurate.

The dawn of autonomous driving has not yet arrived, and the story of LiDAR is far from over.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.