Author: Engineer X

Editor’s note:

-

For ease of understanding, this article follows the industry's customary use of the “L3” concept. It must be clarified, however, that in actual deployment, because of regulatory and liability concerns, advanced intelligent driving systems originally designed to the “L3” standard are typically only offered as L2. Strictly by the standards of SAE and China's Ministry of Industry and Information Technology (which classify by who the responsible party is), the “L2.5” or “L2.9” of some vehicle manufacturers is still L2. As long as a manufacturer does not dare to say “I will take responsibility if something goes wrong with automated driving”, its system is still only L2, even if it is marketed as “L3.9”.

-

Although the industry generally regards TJA as L2 and NOA as L3, strictly speaking there is no necessary correlation between the two, because TJA and NOA describe “application scenarios”, that is, “where the system runs”, while the automation level is about “who takes responsibility if something goes wrong”.

Many people have not noticed that what Europe, the United States, and South Korea call L3, also known as “single-lane automated driving”, is essentially “lane keeping”. Judging from the words “single lane”, it is only slightly more capable than what most people understand as L1. Yet Mercedes can still legitimately say that its model's automated driving level is L3, because it dares to say “I will take responsibility if something goes wrong”.

In China, NOA is translated as “navigation assisted driving”, and this translation is worth discussing. On the one hand, vehicle manufacturers hope that consumers will think that “NOA is L3” (human-assisted system), enticing car owners to “free their hands”; on the other hand, in order to avoid liability disputes, they emphasize that this is only “assisted driving”, which returns to L2 under SAE standards.

In fact, Japan previously revealed such a mentality in formulating regulations on L3 – hoping that domestic manufacturers could seize the opportunity, but also not wanting them to bear too much pressure; both encouraging consumers to “boldly try”, and fearing that they are “overly bold”.

These issues were analyzed thoroughly in the article “Guiding Thought on Landing of L3 Level Automatic Driving: High-speed Assistance for Humans, Low-speed Replacement for Humans”, published by Jiuzhang Autonomous Driving in April 2021. Interested readers can refer to it.

-Su Qingtao

Introduction

Autonomous driving development is currently organized around functions, such as the well-known Adaptive Cruise Control (ACC), Traffic Jam Assist (TJA), and Navigate on Autopilot (NOA). Developers of autonomous driving products, including new automakers, traditional OEMs, traditional Tier 1 suppliers, technology companies, and internet giants, typically have a clear function development roadmap.

At the same time, the industry has gradually reached a consensus that autonomous driving should be evaluated and experienced on the basis of user scenarios. Users of autonomous driving products cannot study the various functions and indicators in the depth and detail that developers do; what they care about most is the experience a product delivers.

Function development can be understood as research on the development side: it produces each developer's own function roadmap and function system, and it is the main focus of developers. User scenarios are research on the user side: they form a systematic, standardized scenario system, and they are the main focus of evaluation institutions and user experience research.

So what is the current general functional system of autonomous driving? How should we build a user scenario system? How can we bridge the gap between functional and scenario systems to achieve synchronous user experience and functional development? This article will provide detailed analysis of these issues.

Functional System

During development, the functions of autonomous driving are usually divided into two categories, driving and parking, because the target objects and the algorithms involved, especially the decision algorithms, differ completely between high-speed driving and low-speed parking.

Driving Functions

We have summarized the current mainstream driving functions, their intelligence levels, their implementation effects, and other details in Table 1. The function levels follow the latest standard of the Society of Automotive Engineers (SAE), as shown in Figure 2.

ACC (Adaptive Cruise Control) is a basic intelligent driving function that is familiar to most drivers and relatively mature. By perceiving the road environment and obstacles, it automatically controls the throttle and brakes to accelerate and decelerate the vehicle within its lane, including stopping and starting. ACC frees the driver's feet and relieves the fatigue of driving on long straight roads.

LCC, which stands for Lane Centering Control, is a function of pure lateral control. By recognizing the lane and controlling the steering system automatically, LCC can free up drivers’ hands and allow the vehicle to automatically stay within the lane.

ALC (Auto Lane Change) is an automatic lane change assistance system. Although the name suggests fully automatic lane changing, the mainstream approach is actually “command-style” lane changing: the driver triggers the maneuver with the turn signal lever, and the system then steers the vehicle into the adjacent lane. ALC effectively assists drivers in changing lanes and frees their hands.

TJA (Traffic Jam Assist) is an assistance system for congested traffic. TJA can be understood as a combination of the ACC and LCC functions and is an L2 function. In a traffic jam, it automatically controls starting and stopping, acceleration and deceleration, and fine-tunes the steering to keep the vehicle centered in the lane or cruising.

NOA (Navigate On Autopilot) is a navigation-based driving assistance system. Following the route of a navigation map, NOA drives the vehicle automatically, freeing the driver's hands and feet for long periods. NOA is an L3 intelligent driving function and combines lower-level functions such as ACC, LCC, and ALC.

Depending on where it can be used, NOA mainly comes in two forms: highway NOA and urban NOA. Owing to technical limitations, all NOA systems currently in mass production are highway systems. Emerging automakers such as Tesla and WmAuto have been exploring urban NOA and are about to put it into mass production.

Currently, intelligent driving functions that do not involve lane changes, such as ACC, LCC, and TJA, have been widely adopted, and almost all car manufacturers offer them. ALC places higher demands on hardware and algorithms because it involves lane changes, and only a few players have put it into mass production. NOA is currently the most advanced intelligent driving function in mass production: only leading automakers and technology companies have achieved highway NOA, and urban NOA is the next trend.

Parking Functions

Table 2 summarizes the current mainstream parking functions, their corresponding level of intelligence, and their implementation effects.

APA (Auto Parking Assist) enables automatic parking and unparking. Once enabled, it recognizes available parking spaces around the vehicle, controls the vehicle's lateral and longitudinal motion, and automatically parks the vehicle in, or unparks it from, the designated space. APA requires the driver to remain in the car, ready to take control at any time. The function is now mature and has become a standard configuration on vehicles.

RPA (Remote Parking Assist) is a function that enables the driver to control the vehicle to park and unpark automatically through remote methods, such as a mobile app, after the driver has exited the vehicle.

SS (Smart Summon) is a function that was first introduced by Tesla, which allows the owner to remotely control the vehicle to drive to a specified location through a mobile app outside of the vehicle.

HPA (Home-zone Parking Assist) automatically completes parking within a specific area, such as the parking lot at home or at the office; through self-learning, the vehicle memorizes specific parking spaces and driving routes. XPeng has already put HPA into mass production. Because its operating area is limited to the parking lot and the driver must be ready to take control at any time, HPA is classified as Level 3 intelligent driving.

AVP (Automated Valet Parking) is a fully automated driving function that lets the vehicle park and unpark entirely on its own, without prior learning, even in completely unfamiliar parking lots, and without the driver in the car. As a Level 4 function, it places very high demands on software and hardware, especially on algorithms and safety, and there are currently no mass-produced products.

Safety Functions

Besides driving and parking functions, intelligent driving also includes basic active safety functions, as shown in Table 3.

As Table 3 shows, active safety functions are closely tied to the position of the hazard relative to the vehicle rather than directly to the scene, so they are not the focus of this article. Moreover, safety functions are already relatively mature and are gradually becoming mandatory regulatory requirements, so this article does not introduce them one by one.

Scene System

From the perspective of user experience, common travel scenes include three major areas: highways, urban areas, and parking lots. Highways and urban areas belong to driving scenes, while parking lots belong to parking scenes.

Driving Scene

The scene of driving on the road is called the driving scene. From the level and application scenarios of intelligent driving, there are several basic driving scenes:

(1) Driving in the current lane;

(2) Changing lanes;

(3) Crossroads;

(4) Ramps.

Different factors affect the user experience in different scenes. For example, when driving in the current lane, the vehicle's acceleration and comfort strongly affect the driver's experience; during lane changes, the success rate and timing of the lane change matter more; and in ramp scenes, the entry and exit strategies and the stability of driving on the ramp have the greatest impact.

Therefore, we need to analyze the significant factors that affect the user experience in different scenarios and convert them into the performance indicators of the intelligent driving system.

Driving in the Current Lane

Driving in the current lane without involving lane changes is the most basic driving scene. Based on the situations that may be encountered when driving in the current lane, it can be further divided into four sub-scenes: driving on straight roads, driving on curved roads, following cars, and cut-in/out.

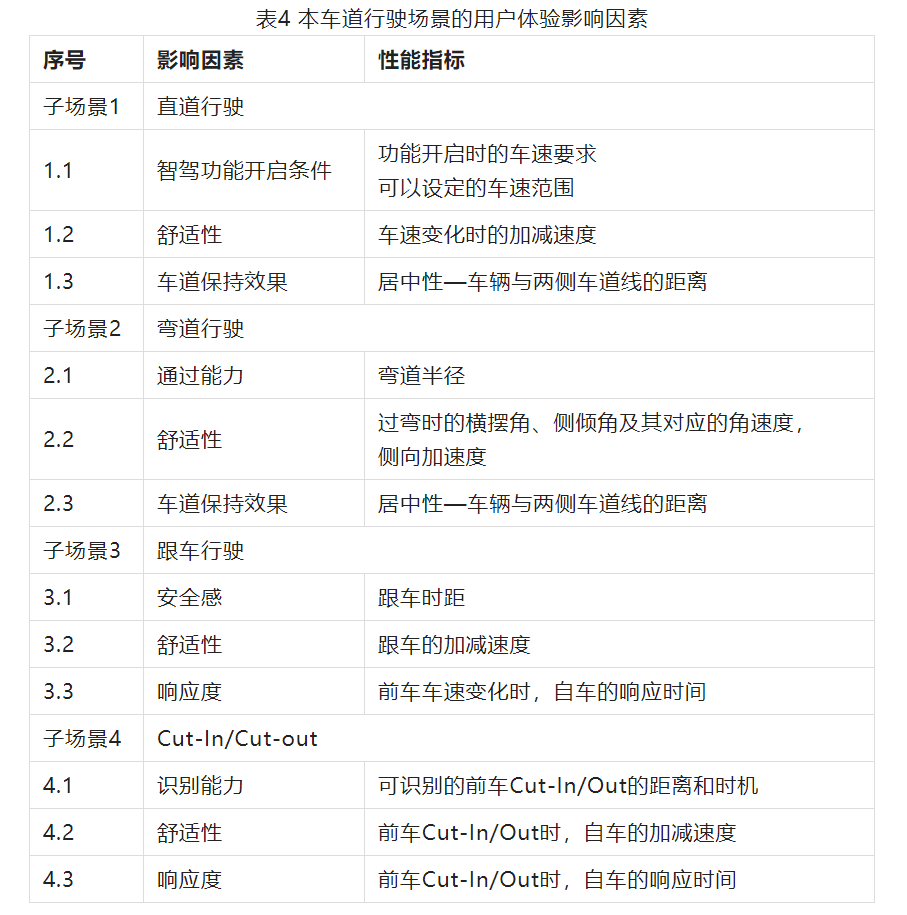

Next, we will analyze the significant factors that affect the user experience and the corresponding performance indicators in different sub-scenes. The summarized content can be found in Table 4.

Users normally turn on the intelligent driving function on straight roads, so the activation conditions on straight roads are a factor in the user experience. Users will only be willing to use the function if its activation conditions are clear, easy to remember, and convenient.

The main corresponding performance indicator is vehicle speed. When ACC is enabled, there should be a reasonable minimum activation speed and a speed range limit, because overly high or low speed limits hurt the user experience. The current mainstream approach is to require a speed above 30 km/h, but as their algorithms improve and their confidence in their own technology grows, new players such as NIO, XPeng, and Li Auto (“Wei Xiaoli”) are gradually lowering this requirement, to 10 km/h or even lower.
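The speed-window activation condition can be sketched as a simple check. This is an illustration only: the 30 km/h and 10 km/h lower limits come from the text, while the 130 km/h upper limit and the `ActivationPolicy` class are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActivationPolicy:
    """Hypothetical speed window in which ACC may be switched on."""
    min_speed_kph: float = 30.0   # mainstream lower limit mentioned in the text
    max_speed_kph: float = 130.0  # assumed upper limit, for illustration only

    def can_activate(self, speed_kph: float) -> bool:
        # Activation is allowed only inside the closed speed window.
        return self.min_speed_kph <= speed_kph <= self.max_speed_kph


conservative = ActivationPolicy()               # typical 30 km/h threshold
relaxed = ActivationPolicy(min_speed_kph=10.0)  # lowered threshold

for speed in (8.0, 20.0, 60.0):
    print(f"{speed:5.1f} km/h  conservative={conservative.can_activate(speed)}  "
          f"relaxed={relaxed.can_activate(speed)}")
```

Lowering `min_speed_kph` is the kind of relaxation the text attributes to newer players; a wider, simpler window gives users more situations in which the function can be switched on.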

In any scenario, comfort directly affects the user experience. When driving straight, comfort is mainly reflected in the acceleration when the vehicle speeds up and the deceleration when it slows down. Excessive acceleration or deceleration makes users feel unsafe, while too little makes the system feel sluggish and leads to complaints.
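One way to turn this comfort factor into an indicator is to estimate acceleration from a sampled speed trace and flag responses that are too abrupt or too weak. A minimal sketch; the 0.3 and 2.5 m/s² limits and the function name are assumptions, not values from the source or any standard.

```python
def classify_longitudinal_response(speeds_mps, dt_s,
                                   sluggish_below=0.3, harsh_above=2.5):
    """Classify a sampled speed trace as 'harsh', 'sluggish' or 'ok'.

    speeds_mps: speed samples in m/s taken every dt_s seconds.
    sluggish_below / harsh_above: hypothetical limits on the peak
    acceleration magnitude, in m/s^2 (illustrative values only).
    """
    # Finite-difference acceleration between consecutive samples.
    accels = [(b - a) / dt_s for a, b in zip(speeds_mps, speeds_mps[1:])]
    peak = max((abs(a) for a in accels), default=0.0)
    if peak > harsh_above:
        return "harsh", peak       # feels dangerous to occupants
    if 0.0 < peak < sluggish_below:
        return "sluggish", peak    # speed changes, but too slowly
    return "ok", peak


print(classify_longitudinal_response([20.0, 21.0, 22.0, 23.0], 1.0))  # gentle speed-up
print(classify_longitudinal_response([20.0, 17.0, 14.0], 1.0))        # hard braking
print(classify_longitudinal_response([20.0, 20.1, 20.2], 1.0))        # barely responding
```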

In addition, lane-keeping performance matters when driving straight: staying smoothly within the lane is a basic requirement for drivers and passengers. Lane keeping can be measured by vehicle centering, that is, the distance from the vehicle to the lane markings on either side.
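If the system reports the gap between the vehicle body and each lane marking, the centering offset follows directly: the vehicle center is displaced from the lane center by half the difference of the two gaps. The sketch assumes such gap measurements are available; the function names are hypothetical.

```python
def centering_offset_m(gap_left_m, gap_right_m):
    """Offset of the vehicle center from the lane center, in meters.

    gap_left_m / gap_right_m: distance from the vehicle body to the left and
    right lane markings. Positive result means the vehicle sits right of center.
    """
    # Lane center and vehicle center differ by half the gap imbalance.
    return (gap_left_m - gap_right_m) / 2.0


def centering_stats(samples):
    """Mean and maximum absolute offset over (gap_left, gap_right) samples."""
    offsets = [abs(centering_offset_m(left, right)) for left, right in samples]
    return sum(offsets) / len(offsets), max(offsets)


mean_abs, max_abs = centering_stats([(0.50, 0.50), (0.60, 0.40), (0.40, 0.60)])
print(f"mean |offset| = {mean_abs:.3f} m, max |offset| = {max_abs:.3f} m")
```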

When driving through curves, the first factor to consider is the automatic steering capability of the intelligent driving system, which is directly reflected by the smallest curve radius it can handle. The smaller that radius, the sharper the curves the system can negotiate, the stronger its steering ability, and the more users trust it.
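The link between curve radius, speed, and lateral comfort comes from the steady-state relation a_y = v² / R. A minimal sketch, assuming a hypothetical 2.0 m/s² comfort limit on lateral acceleration, shows the tightest curve the vehicle can follow at a given speed and the highest speed allowed on a given curve.

```python
import math


def lateral_accel_mps2(speed_kph, radius_m):
    """Steady-state lateral acceleration a_y = v^2 / R on a curve of radius R."""
    v = speed_kph / 3.6  # km/h -> m/s
    return v * v / radius_m


def max_curve_speed_kph(radius_m, lat_accel_limit_mps2=2.0):
    """Highest speed at which a_y stays within the (hypothetical) comfort limit."""
    return math.sqrt(lat_accel_limit_mps2 * radius_m) * 3.6


def min_radius_m(speed_kph, lat_accel_limit_mps2=2.0):
    """Tightest curve the vehicle can follow at this speed within the limit."""
    v = speed_kph / 3.6
    return v * v / lat_accel_limit_mps2


for radius in (50.0, 125.0, 250.0):
    print(f"R = {radius:5.0f} m -> max {max_curve_speed_kph(radius):5.1f} km/h")
```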

Curved scenarios and straight scenarios have common influencing factors: comfort and lane-keeping effect.

The comfort when driving through curves is mainly reflected by the vehicle’s lateral status parameters, such as yaw angle, tilt angle, and lateral acceleration. Of course, the user’s subjective feeling is also an important indicator of comfort.

In the car-following scenario, safety is paramount because another vehicle is involved, and the following distance is closely related to safety. An appropriate following distance gives the driver no sense of collision risk or of being uncomfortably close, and also avoids frequent cut-ins.

In addition, comfort and response are also factors to consider. When the preceding vehicle speed changes, the response time, acceleration and deceleration of the vehicle will all affect the user experience.
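Following distance is commonly expressed as time headway (gap divided by ego speed), and closing risk as time-to-collision (gap divided by closing speed). The sketch combines the two into a rough label; the 1 s, 3 s, and 4 s thresholds are hypothetical and would need tuning against user studies.

```python
import math


def time_headway_s(gap_m, ego_speed_mps):
    """Gap to the lead vehicle expressed in seconds of travel at ego speed."""
    return math.inf if ego_speed_mps <= 0 else gap_m / ego_speed_mps


def time_to_collision_s(gap_m, ego_speed_mps, lead_speed_mps):
    """Time until contact if both speeds stay constant (inf if not closing)."""
    closing = ego_speed_mps - lead_speed_mps
    return math.inf if closing <= 0 else gap_m / closing


def assess_following(gap_m, ego_speed_mps, lead_speed_mps,
                     min_thw_s=1.0, max_thw_s=3.0, min_ttc_s=4.0):
    """Label a following situation; all thresholds are hypothetical."""
    if ego_speed_mps <= 0:
        return "stationary"  # headway is undefined in a standstill queue
    thw = time_headway_s(gap_m, ego_speed_mps)
    ttc = time_to_collision_s(gap_m, ego_speed_mps, lead_speed_mps)
    if thw < min_thw_s or ttc < min_ttc_s:
        return "too close"   # perceived collision risk or oppressive gap
    if thw > max_thw_s:
        return "too far"     # large gap invites frequent cut-ins
    return "ok"


print(assess_following(40.0, 20.0, 15.0))
print(assess_following(15.0, 20.0, 20.0))
print(assess_following(80.0, 20.0, 20.0))
```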

The cut-in/cut-out scenario is an emergency situation for in-lane driving, so the recognition capability of the intelligent driving system is particularly important: the farther away and the earlier a cut-in is recognized, the more danger can be avoided and the better safety is ensured.
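Why early recognition matters can be shown with basic kinematics: to avoid a slower cut-in vehicle, the ego vehicle must cancel the closing speed Δv within the available gap, which takes a constant deceleration of Δv² / (2 · gap). This simplified sketch assumes the cut-in vehicle holds its speed and ignores reaction time.

```python
def required_decel_mps2(gap_m, ego_speed_mps, cutin_speed_mps):
    """Constant deceleration needed to match a slower cut-in vehicle's speed
    before the gap closes.

    Relative motion: closing speed dv must drop to zero within gap_m, so
    a = dv^2 / (2 * gap). Assumes the cut-in vehicle holds a constant speed
    and ignores system reaction time.
    """
    closing = ego_speed_mps - cutin_speed_mps
    if closing <= 0:
        return 0.0  # not closing in, no braking needed
    return closing ** 2 / (2.0 * gap_m)


# Same cut-in (ego 25 m/s, cut-in vehicle 15 m/s) recognized at different distances.
for gap in (50.0, 30.0, 15.0):
    print(f"recognized at {gap:4.0f} m -> needs "
          f"{required_decel_mps2(gap, 25.0, 15.0):.2f} m/s^2")
```

The earlier the cut-in is recognized, the larger the remaining gap and the gentler the braking needed, which links recognition distance to both safety and comfort.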

In addition, like the following distance scenario, comfort and response also directly affect the user experience in the Cut-In/Out scenario.

Besides the four basic, typical sub-scenarios of straight roads, curved roads, car following, and cut-in/cut-out, in-lane driving includes other scenarios, some of them special, such as lane merges, lane splits, ending lanes, obstacles in the lane, and lane shifts guided by construction zones, which also need to be considered.

#### Lane Change


Lane changes are very common in driving. They occur when overtaking, when the terrain changes, when lanes are closed, and in other situations.

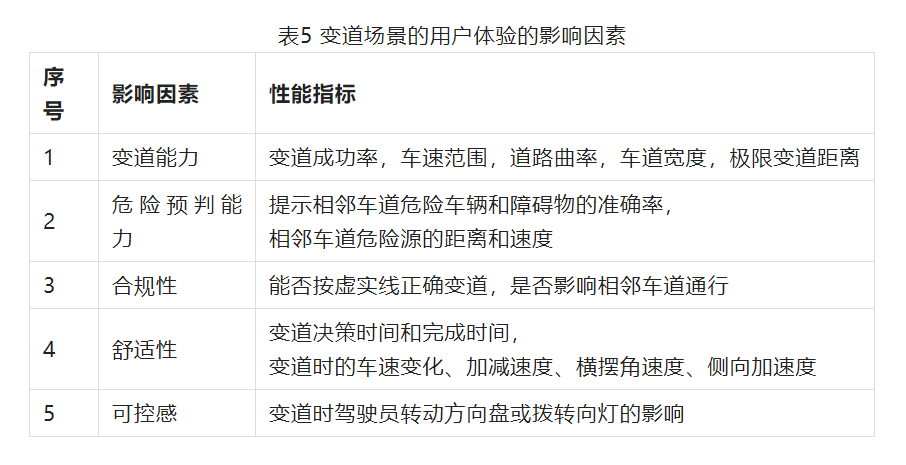

Lane change ability reflects the capability boundary of the intelligent driving system in lane change scenarios. The lane change success rate, the ranges of vehicle speed, road curvature, and lane width within which lane changes are supported, and the maximum lane change distance are all indicators of this capability. The success rate in particular is a statistical metric: relatively accurate conclusions require a large number of test runs.
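To show why the success rate needs many test runs, a confidence interval can be attached to it. The sketch uses the Wilson score interval, a standard choice for binomial proportions; the source does not prescribe a particular statistical method.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a success rate (z = 1.96 gives about 95 %)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1.0 + z * z / trials
    center = (p + z * z / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials)) / denom
    return center - half, center + half


# Same observed 90 % success rate, very different certainty.
for ok, n in ((9, 10), (90, 100), (900, 1000)):
    lo, hi = wilson_interval(ok, n)
    print(f"{ok}/{n}: 95% interval {lo:.3f} .. {hi:.3f}")
```

The same observed 90 % rate yields a much narrower interval with 1000 runs than with 10, which is the quantitative version of the point above.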

Current production intelligent driving functions require the vehicle speed to be within a certain range when changing lanes, typically a minimum of 45 km/h or 60 km/h. As algorithm capabilities improve, the requirements on vehicle speed, road curvature, lane width, and other conditions are gradually being relaxed.

The ability to predict danger underpins user safety and trust. Only when the system predicts risks in time and alerts users can users gradually come to trust the system and feel safe. Imagine that the user notices a vehicle in the adjacent lane approaching rapidly, making a lane change impossible, but the system has not identified it: how could the user trust this intelligent driving system?

The ability to predict danger during lane change mainly lies in the system’s recognition rate of danger sources, as well as judgment criteria for danger sources such as distance and relative velocity. The higher the recognition rate and the farther ahead the system can detect danger, the stronger the ability of danger prediction.
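A danger check based on distance and relative velocity might look like the following minimal sketch. The `TargetLaneVehicle` interface and the 10 m and 3 s thresholds are assumptions; a production system would also account for perception uncertainty, acceleration, and vehicles farther away.

```python
from dataclasses import dataclass


@dataclass
class TargetLaneVehicle:
    """A vehicle detected in the target lane (hypothetical interface)."""
    gap_m: float       # longitudinal distance to the ego vehicle, always >= 0
    speed_mps: float
    behind: bool       # True if it is behind the ego vehicle


def lane_change_risk(ego_speed_mps, vehicles, min_gap_m=10.0, min_ttc_s=3.0):
    """Return the first vehicle that makes the lane change unsafe, or None.

    A vehicle is a hazard if it is already too close, or if it is closing in
    fast enough that the time-to-collision drops below min_ttc_s.
    Thresholds are illustrative, not from any production system.
    """
    for v in vehicles:
        if v.gap_m < min_gap_m:
            return v
        # A rear vehicle closes in when faster; a front vehicle when slower.
        closing = v.speed_mps - ego_speed_mps if v.behind else ego_speed_mps - v.speed_mps
        if closing > 0 and v.gap_m / closing < min_ttc_s:
            return v
    return None


fast_rear = TargetLaneVehicle(gap_m=20.0, speed_mps=35.0, behind=True)
slow_rear = TargetLaneVehicle(gap_m=40.0, speed_mps=27.0, behind=True)
print(lane_change_risk(25.0, [slow_rear]))             # no hazard
print(lane_change_risk(25.0, [slow_rear, fast_rear]))  # rapidly approaching car
```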

Compliance with traffic rules is also indispensable, especially in lane change scenarios, where violations are more likely. Accurately distinguishing solid and dashed lane lines and changing lanes only where the markings permit are therefore important criteria for evaluating lane change compliance.
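Lane-line compliance can be sketched as a lookup on the recognized marking type, gated by recognition confidence. The rule encoded here (a marking may be crossed only from its dashed side) is a simplification that varies by jurisdiction, and the enum names and 0.9 confidence threshold are hypothetical.

```python
from enum import Enum


class LineType(Enum):
    """Lane marking between ego lane and target lane, as seen from ego lane."""
    DASHED = "dashed"
    SOLID = "solid"
    DOUBLE_SOLID = "double_solid"
    DASHED_SOLID = "dashed_solid"  # dashed on the ego side, solid on the far side
    SOLID_DASHED = "solid_dashed"  # solid on the ego side, dashed on the far side


# Simplified rule: a marking may be crossed only from a dashed side.
CROSSABLE_FROM_EGO_SIDE = {LineType.DASHED, LineType.DASHED_SOLID}


def lane_change_compliant(line: LineType, recognition_confidence: float,
                          min_confidence: float = 0.9) -> bool:
    """Allow the lane change only if the marking is confidently recognized
    and legally crossable; min_confidence is a hypothetical threshold."""
    if recognition_confidence < min_confidence:
        return False  # unsure whether the line is solid: do not change lanes
    return line in CROSSABLE_FROM_EGO_SIDE


print(lane_change_compliant(LineType.DASHED, 0.97))
print(lane_change_compliant(LineType.SOLID_DASHED, 0.97))
print(lane_change_compliant(LineType.DASHED, 0.60))
```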

Comfort is an eternal theme. In lane change scenarios, the system's decision time and completion time affect how users judge its capability, and vehicle state parameters during the lane change, such as the speed change strategy, acceleration and deceleration, yaw rate, and lateral acceleration, directly affect comfort.
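The trade-off between lane change completion time and lateral comfort can be illustrated with an assumed cosine lateral profile, y(t) = W/2 · (1 − cos(πt/T)). This is one simple textbook trajectory shape, not necessarily what any production system uses; W = 3.5 m is an assumed lane width.

```python
import math


def cosine_lane_change_peaks(lane_width_m, duration_s):
    """Peak lateral speed and acceleration for an assumed cosine lateral profile.

    y(t) = W/2 * (1 - cos(pi * t / T)) moves the car one lane width W in T s.
    Its derivatives peak at W/2 * (pi/T) and W/2 * (pi/T)^2 respectively.
    """
    omega = math.pi / duration_s
    peak_lat_speed = lane_width_m / 2.0 * omega
    peak_lat_accel = lane_width_m / 2.0 * omega ** 2
    return peak_lat_speed, peak_lat_accel


# Faster completion buys a quicker maneuver at the cost of higher lateral load.
for duration in (3.0, 5.0, 7.0):
    v_peak, a_peak = cosine_lane_change_peaks(3.5, duration)
    print(f"T = {duration:.0f} s: peak lateral speed {v_peak:.2f} m/s, "
          f"peak lateral accel {a_peak:.2f} m/s^2")
```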

The sense of control is an important factor in human-machine co-driving. For any function short of fully autonomous driving, the driver must retain a sense of control over the vehicle. In the lane change scenario, the main indicator of controllability is how the vehicle responds to driver inputs, such as turning the steering wheel or switching the turn signal back.

Intersection

Intersections are common and relatively complex scenes in urban driving. Static traffic elements such as lane lines, zebra crossings, arrows, and guide lines; dynamic participants such as vehicles, pedestrians, bicycles, and animals; and constantly changing traffic lights together form the classic urban intersection scene.

Vehicle behavior at intersections mainly includes stopping, going straight, turning, and making U-turns. The relevant user experience factors can therefore partly draw on those discussed for straight driving, curves, and car following. In addition, the ability to recognize traffic lights and drive automatically according to them is a key factor at intersections.

Ramp Scenes

Ramp scenes are unique scenarios for highways and urban interchanges. As the connecting part between different main roads, the experience in ramp scenes is an important aspect to evaluate the intelligent driving system.

The ramp scene can be subdivided into three sub-scenes: driving on the ramp, entering the ramp, and exiting the ramp.

Since most ramps are currently curved, the user experience factors and indicators for driving on a ramp can follow those of the curved-road scenario discussed earlier.

When entering and exiting a ramp, the key issues are the entry and exit strategies and the change in vehicle speed. For example, before entering a ramp, the vehicle must change to the right lane and slow down in advance, so the timing of the lane changes and deceleration is important. When exiting a ramp, how the vehicle speed changes and whether the vehicle can automatically accelerate to the speed limit of the road are important factors in the user experience.
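The timing of lane changes and deceleration before a ramp can be estimated with constant-deceleration kinematics, d = (v₁² − v₂²) / (2a). In this hypothetical model, the vehicle crosses each lane at main-road speed, taking an assumed 6 s per lane, then brakes at an assumed 1.5 m/s² to the ramp speed.

```python
def decel_distance_m(v_from_kph, v_to_kph, decel_mps2):
    """Distance needed to slow from v_from to v_to at constant deceleration."""
    v1, v2 = v_from_kph / 3.6, v_to_kph / 3.6
    if v2 >= v1:
        return 0.0
    return (v1 ** 2 - v2 ** 2) / (2.0 * decel_mps2)


def exit_preparation_distance_m(v_main_kph, v_ramp_kph, lanes_to_cross,
                                decel_mps2=1.5, lane_change_time_s=6.0):
    """Minimum distance before the ramp at which preparation must begin.

    Hypothetical model: cross each lane at main-road speed, taking
    lane_change_time_s per lane, then brake to the ramp speed.
    """
    lane_change_dist = lanes_to_cross * lane_change_time_s * v_main_kph / 3.6
    return lane_change_dist + decel_distance_m(v_main_kph, v_ramp_kph, decel_mps2)


print(f"{exit_preparation_distance_m(120.0, 60.0, lanes_to_cross=2):.0f} m")
```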

In addition, the success rate of entering and exiting the ramp and entering the main road is also an important indicator to evaluate system performance and user experience.

Parking Scene

The parking scene mainly occurs in parking lots, and is therefore simpler compared with driving scenes.

According to the complete process of parking, the parking scene includes automatic driving in the parking lot, searching for parking spaces, parking and exiting parking spaces, etc.

Driving inside the parking lot

Currently, parking lots can be mainly divided into the following four types: underground parking garage, parking building, open-air parking lot, and roadside temporary parking spaces. The infrastructure, road conditions, lighting conditions, etc. of different types of parking lots are different, so the performance of vehicles driving inside different parking lots will also vary.

Overall, when driving inside a parking lot, the main consideration is the vehicle’s trajectory planning ability, perception and positioning ability, and the ability to identify obstacles.

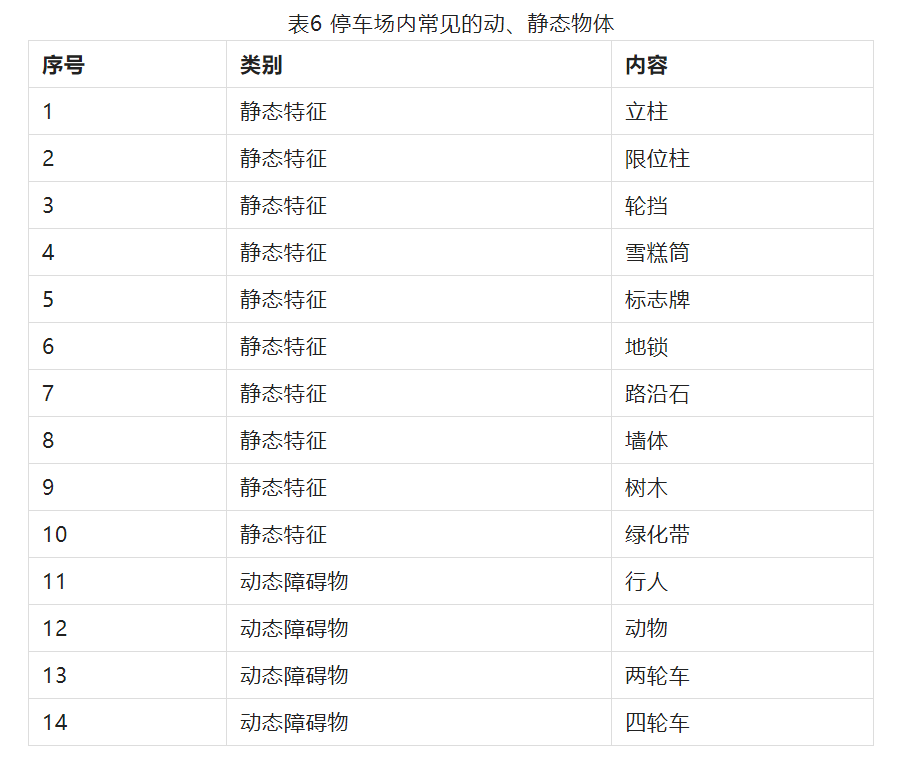

Table 6 summarizes the common static features and dynamic obstacles in the parking lot. The intelligent driving system needs to accurately identify these features and obstacles to achieve safe and efficient driving inside the parking lot.

The user experience factors and indicator items in automatic driving inside the parking lot are similar to those in low-speed driving scenes.

Search for Parking Spaces

The user experience of searching for parking spaces mainly depends on the vehicle’s ability to recognize parking spaces. The higher the accuracy of parking space recognition, the stronger the ability of the vehicle to recognize parking spaces, and the better the user experience.

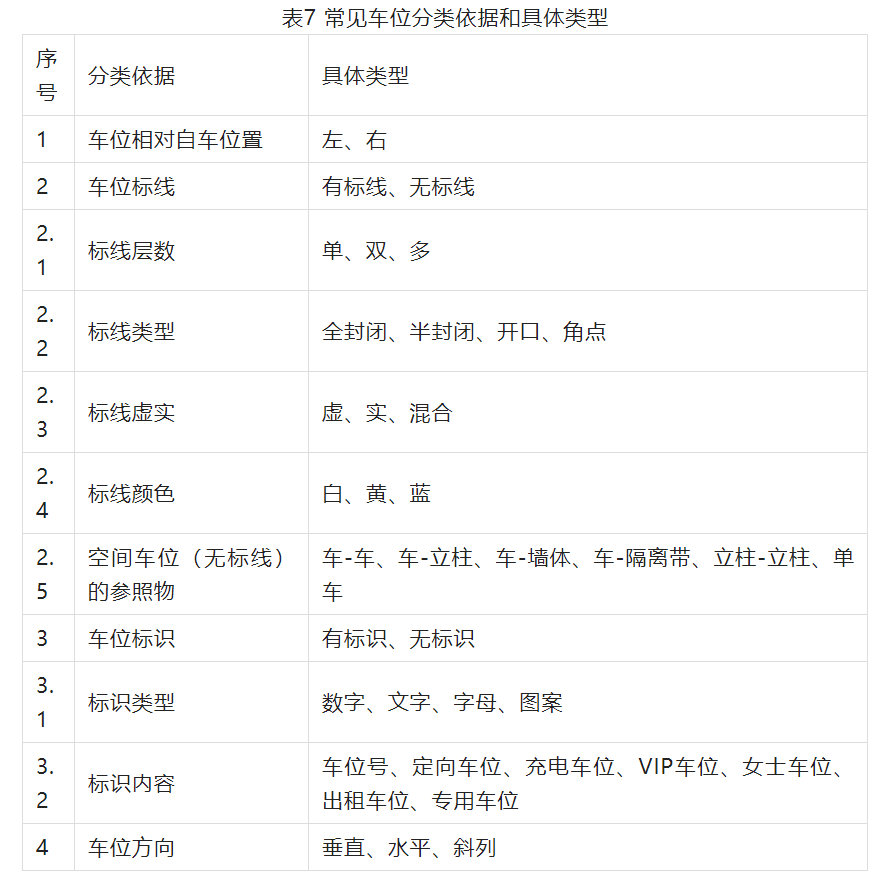

There are many types of parking spaces. By the presence of marking lines, they can be divided into marked and unmarked spaces; by orientation, into perpendicular, parallel, and diagonal spaces. Table 7 summarizes the common classification criteria and specific types of parking spaces.

It should be noted that the ability to search for parking spaces should also be evaluated based on statistical data from multiple tests, and a small sample size does not have universal significance.
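Statistics from multiple tests can be pooled into precision (how many reported spaces are real) and recall (how many real spaces were found). The sketch assumes each test drive has been ground-truthed with hypothetical space IDs; it micro-averages by summing counts across runs.

```python
def space_recognition_metrics(runs):
    """Micro-averaged precision and recall of parking-space recognition.

    runs: list of (true_ids, detected_ids) set pairs, one per test drive;
    the IDs are hypothetical labels assigned during ground-truthing.
    """
    tp = fp = fn = 0
    for truth, detected in runs:
        tp += len(truth & detected)   # spaces correctly found
        fp += len(detected - truth)   # phantom spaces reported
        fn += len(truth - detected)   # real spaces missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


runs = [
    ({"A", "B", "C"}, {"A", "B", "X"}),  # one miss, one false detection
    ({"D", "E"}, {"D", "E"}),            # perfect run
]
print(space_recognition_metrics(runs))
```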

Parking-in and Parking-out

Parking-in is the last step in the parking process and is also the initial application scenario of smart parking.

Once a suitable parking space is found, the intelligent driving system parks the vehicle automatically, handling lateral and longitudinal control and gear shifting on its own.

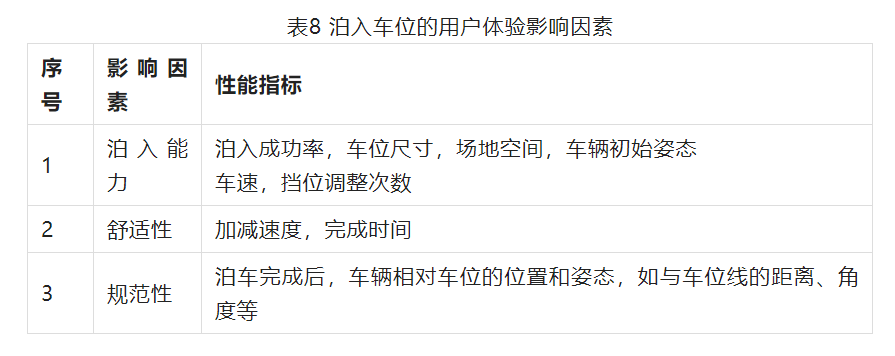

Parking ability is the primary factor in the parking experience. Its indicators include the success rate, the range of parking space sizes the system can handle, and the speed range, and they need to be evaluated together with vehicle state parameters and parking space parameters.

Comfort is also an important factor and directly affects the experience of an in-vehicle intelligent parking system. It is reflected by the acceleration and deceleration during parking and by the time the system takes to complete the maneuver.

The standardization of parking is another influencing factor. Neatly parked vehicles will increase users’ favorability and trust. Whether the parking is upright, whether the position is centered, and the distance from the parking space line or adjacent vehicles all reflect the standardization of the parking system.
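The standardization criteria (upright, centered, distance to the lines) can be computed from the final pose relative to the slot. A minimal sketch with a hypothetical pose representation and illustrative tolerances of 0.10 m and 1.5°.

```python
from dataclasses import dataclass


@dataclass
class ParkedPose:
    """Final pose relative to the slot (hypothetical representation)."""
    lateral_offset_m: float   # vehicle center minus slot center; + = towards right line
    heading_error_deg: float  # angle between vehicle axis and slot axis


def parking_standardization(pose, slot_width_m, vehicle_width_m,
                            max_offset_m=0.10, max_heading_deg=1.5):
    """Clearances to the slot lines plus pass/fail flags.

    Clearances ignore the small extra intrusion caused by heading error;
    the tolerances are illustrative, not from any standard.
    """
    margin = (slot_width_m - vehicle_width_m) / 2.0
    return {
        "left_clearance_m": margin + pose.lateral_offset_m,
        "right_clearance_m": margin - pose.lateral_offset_m,
        "upright": abs(pose.heading_error_deg) <= max_heading_deg,
        "centered": abs(pose.lateral_offset_m) <= max_offset_m,
    }


print(parking_standardization(ParkedPose(0.05, 0.8), slot_width_m=2.5, vehicle_width_m=1.9))
```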

Parking-out is the opposite process of parking-in, and its influencing factors are basically the same as parking-in scenarios.

Relationship Between Functions and Scenarios

The preceding sections explained the functional system and the scenario system of intelligent driving in detail. These two systems represent the development side and the user side, respectively, so analyzing how different functions relate to different scenarios and identifying their inherent connections is an important way to bridge the gap between the two sides.

Driving Function and Scenario

As explained earlier, the driving functions mainly include ACC, LCC, and ALC at L1, TJA at L2, and NOA at L3, with NOA further divided into highway NOA and urban NOA.

From the function descriptions, it is easy to see that ACC mainly controls the vehicle's longitudinal motion, while LCC mainly keeps the vehicle in the center of the lane. ACC and LCC are therefore applied mainly to in-lane driving, and their development should focus on the performance indicators of the in-lane driving scenario discussed earlier: ACC needs to consider all of them, while LCC focuses on lane-keeping performance and comfort.

ALC’s function is to change lanes, so it is applied to lane-changing scenarios. During the development of ALC, developers need to focus on the impact of user experience factors in the lane-changing scenario, such as lane-changing ability, comfort, compliance, and their corresponding performance indicators.

TJA is the superposition of ACC+LCC+ALC functions, so it needs to include the scenarios of driving on the same lane and changing lanes, including the corresponding user experience impact factors and performance indicators.

NOA is divided into highway NOA and urban NOA. Highway NOA covers the TJA scenarios plus the ramp scenario, and urban NOA covers the TJA scenarios plus the intersection scenario. NOA thus covers the most comprehensive range of scenarios, and a large number of user experience factors and performance indicators must be considered during its development, which makes developing a good NOA function considerably difficult.
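The mapping above can be expressed as set unions, which makes it easy to list every experience factor a given function must be tested against. The scenario and factor names are paraphrased from this article; the sketch maps scenarios only and does not capture the different emphasis within a scenario (for example, LCC focusing on lane keeping and comfort).

```python
# Experience factors per basic driving scenario, paraphrased from the text.
SCENARIO_FACTORS = {
    "in_lane": {"activation conditions", "comfort", "lane keeping",
                "curve capability", "following distance", "response",
                "cut-in recognition"},
    "lane_change": {"lane change ability", "danger prediction", "compliance",
                    "comfort", "controllability"},
    "ramp": {"entry/exit strategy", "speed change", "ramp success rate"},
    "intersection": {"traffic light recognition"},
}

# Function -> scenarios, following the mapping described in this section.
FUNCTION_SCENARIOS = {
    "ACC": {"in_lane"},
    "LCC": {"in_lane"},
    "ALC": {"lane_change"},
}
FUNCTION_SCENARIOS["TJA"] = (FUNCTION_SCENARIOS["ACC"] | FUNCTION_SCENARIOS["LCC"]
                             | FUNCTION_SCENARIOS["ALC"])
FUNCTION_SCENARIOS["Highway NOA"] = FUNCTION_SCENARIOS["TJA"] | {"ramp"}
FUNCTION_SCENARIOS["Urban NOA"] = FUNCTION_SCENARIOS["TJA"] | {"intersection"}


def factors_for(function):
    """Union of the experience factors a function's developers must track."""
    factors = set()
    for scenario in FUNCTION_SCENARIOS[function]:
        factors |= SCENARIO_FACTORS[scenario]
    return factors


for name in ("ACC", "TJA", "Highway NOA", "Urban NOA"):
    print(f"{name}: {len(factors_for(name))} factors")
```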

Of course, the scenarios involved in NOA function are very complicated. We only listed typical basic scenarios here. There are other scenarios that developers need to continuously explore and supplement, such as bridges, tunnels, unstructured roads, schools, etc., each with its unique characteristics. Based on the basic scenarios, continuously expanding and enriching the scenario library is a long-term and meaningful work for the development of intelligent driving, which is very helpful for function development and improving user experience.

Parking Function and Scenario

The parking functions include APA and RPA at L2, SS and HPA at L3, and AVP at L4.

APA and RPA work in the parking area to automatically park and unpark the vehicle near the parking space. The difference is that APA allows the driver to monitor and take control from inside the vehicle, while RPA allows the driver to monitor and take control remotely.

Therefore, APA and RPA apply to parking in and out of parking spaces, and during development, developers need to consider the factors that affect user experience, such as parking ability, comfort, and standardization.

SS and HPA work in the parking lot, including parking spaces and roads within the parking lot. SS is responsible for calling the car from the parking space to the designated location, while HPA is responsible for parking the car from the entrance of the parking lot to a specific parking space.

Thus, SS applies to unparking and driving within the parking lot, while HPA applies to driving within the parking lot, searching for parking spaces, and parking in. AVP, as the ultimate intelligent parking solution, covers the entire process from the owner leaving the car to the vehicle being parked, as well as the reverse process of summoning the car. Its application scenarios are the superposition of all the parking scenarios above.
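The parking mapping can be written the same way, with AVP defined as the union of all other functions' scenarios. The scenario identifiers are hypothetical labels for the parking scenes described in this article; the extra safety requirements of AVP are not modeled.

```python
# Parking scenarios from the text: driving in the lot, searching for a
# space, parking in, and parking out (unparking).
PARKING_FUNCTION_SCENARIOS = {
    "APA": {"park_in", "park_out"},
    "RPA": {"park_in", "park_out"},
    "SS": {"park_out", "lot_driving"},
    "HPA": {"lot_driving", "space_search", "park_in"},
}
# AVP covers every parking scenario served by the other functions.
PARKING_FUNCTION_SCENARIOS["AVP"] = set().union(*PARKING_FUNCTION_SCENARIOS.values())

# Which scenarios does each function add beyond APA's baseline?
baseline = PARKING_FUNCTION_SCENARIOS["APA"]
for name, scenarios in PARKING_FUNCTION_SCENARIOS.items():
    print(f"{name}: {sorted(scenarios)}; beyond APA: {sorted(scenarios - baseline)}")
```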

In addition, AVP must fully account for the user experience factors and performance indicators of every parking scenario. Furthermore, because AVP operates after the user has left the vehicle, high safety and robustness are imperative and require sufficient safety redundancy design.

The route from where the owner leaves the vehicle to the parking lot is not considered here, because it lies outside the parking lot and is uncertain.

The figure below shows the relationship between the parking function and the scenario.

In conclusion, this article has analyzed the current functional and scenario systems of intelligent driving and established an architecture linking the two. Considering functions, scenarios, and the performance indicators derived from function planning and application scenarios together helps break down the barriers between developers and users early in development, so that user experience is incorporated into the development process in step with function development.

Of course, functions keep iterating and scenarios keep being refined. During intelligent driving development, we need to continuously upgrade and extend these basic functions and scenarios so that product requirements truly stem from users, intelligent driving functions truly serve users, and the resulting intelligent driving solution achieves high user satisfaction.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.