Author: Su Qingtao

I have long been skeptical of Tesla’s “pure vision” route. My doubt was this: can algorithmic progress make up for the physical limits of camera performance? For example, if the visual algorithm is powerful enough, will the camera be able to measure distance? Will it be able to see at night?

The first doubt was dispelled in July 2021, when Tesla was reported to have developed “pure vision ranging” technology. The second, however, remained.

At one point I even reasoned as follows: if cameras are the eyes and visual algorithms are “the part of the brain that works with the eyes,” then saying “once the visual algorithm is powerful enough, lidar is unnecessary” amounts to saying “as long as my brain is smart enough, it doesn’t matter that my eyes are severely nearsighted.”

Recently, however, Musk mentioned a plan to “kill the ISP” in HW 4.0, which overturned my understanding. In an interview with Lex Fridman, Musk said that the raw data from all of Tesla’s cameras will no longer be processed by an ISP but will be fed directly into FSD Beta’s neural network (NN) inference, which, he said, will make the cameras far more capable.

With this topic in mind, I talked with a number of industry experts, including Luo Heng, head of BPU algorithms at Horizon; Liu Yu, CTO of Yuwenzhi; Wang Haowei, chief architect of Junlian Zhihang; Huang Yu of Zhitu Technology; and a co-founder of Cheyou Intelligent. I then realized that my earlier doubts had simply been “overthinking it.”

Progress in visual algorithms is indeed gradually pushing back the physical limits of cameras.

What Is an ISP?

ISP stands for Image Signal Processor, an important component of in-vehicle cameras. Its main job is to process the signal output by the front-end CMOS image sensor and translate the raw data into images the human eye can understand.

Simply put, the ISP is what lets drivers “see” scene details through the camera.

Autonomous driving companies likewise use the ISP mainly for white balance, dynamic range adjustment, filtering, and similar operations, tuned to the actual surroundings, in order to obtain the best-quality image: for example, adjusting exposure to follow changes in brightness, or adjusting focus for objects at different distances, so that the camera performs as close to the human eye as possible.
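To make those operations concrete, here is a minimal toy sketch of an ISP-style stage for a single pixel. Everything in it is an illustrative assumption rather than any vendor’s pipeline: a 12-bit linear sensor, the hypothetical white-balance gains `(2.0, 1.0, 1.5)`, a plain 2.2 gamma curve, and the hypothetical function name `toy_isp`. Real ISPs also demosaic, denoise and tone-map, which this sketch omits.

```python
"""Toy ISP stage for one RGB pixel: white balance, exposure gain, gamma, 8-bit output.

The 12-bit input range, the gains and the 2.2 gamma are illustrative
assumptions, not values from any real automotive ISP.
"""

RAW_MAX = 4095  # assumed 12-bit linear sensor output


def toy_isp(raw_rgb, wb_gains=(2.0, 1.0, 1.5), exposure_gain=1.0, gamma=2.2):
    """Map a linear 12-bit (R, G, B) triple to a display-ready 8-bit triple."""
    out = []
    for value, wb in zip(raw_rgb, wb_gains):
        # Normalize to [0, 1], then apply white-balance and exposure gains.
        linear = value / RAW_MAX * wb * exposure_gain
        # Clip to full scale: anything brighter is lost at this step.
        linear = min(max(linear, 0.0), 1.0)
        # Gamma-encode so the result looks natural to a human viewer.
        encoded = linear ** (1.0 / gamma)
        # Quantize to an 8-bit code.
        out.append(round(encoded * 255))
    return tuple(out)


if __name__ == "__main__":
    print(toy_isp((1000, 2000, 1300)))
    # Two different raw red values that both clip after gain become identical.
    print(toy_isp((3000, 0, 0)), toy_isp((4000, 0, 0)))
```

The clipping step shows one simple way raw information can be discarded for the sake of a viewable image: after gain, raw red values of 3000 and 4000 both come out as 255.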

Clearly, making the camera “as close to the human eye as possible” is not enough for autonomous driving: algorithms need the camera to keep working where the human eye also “fails,” such as under very strong or very weak light. To achieve this, some autonomous driving companies have had to customize ISPs that improve camera performance in strong light, weak light, and other interference conditions.

On April 8, 2020, Alibaba DAMO Academy announced that it had independently developed an ISP for vehicle-mounted cameras, built on its proprietary 3D denoising and image-enhancement algorithms, giving autonomous vehicles better “vision” at night so they can “see” more clearly.

According to road tests by DAMO Academy’s autonomous driving lab, with this ISP the vehicle camera’s object detection and recognition in the most challenging night scenes improved by more than 10% over mainstream industry processors, and markings that were previously blurred could now be recognized clearly.

Motivation and Feasibility of Abolishing ISP

The original purpose of an ISP, however, is to produce a “beautiful” image under changing external conditions, and the industry has not yet settled whether that is the image form autonomous driving actually needs. According to Elon Musk, neural networks do not need beautiful images; they need the raw data captured directly by the sensor, that is, raw photon counts.

In Musk’s view, whatever processing methods an ISP uses, some of the raw photon information is lost along the way, as light passes through the lens and is converted by the CMOS sensor into a signal and then into a viewable image.

On the difference between raw photon information that is lost and information that is preserved, Huang Yu, Chief Scientist of Zhitu, said: “When photons are converted into electrical signals, some noise is indeed suppressed, and on top of that the ISP does a great deal of processing on the original electrical signal.”

In the article “From Photon to Control – Tesla’s Technological Taste Becomes Heavier and Heavier,” the co-founder of Carzone draws a detailed analogy between how humans perceive information and how electronic imaging systems work, summarized as follows:

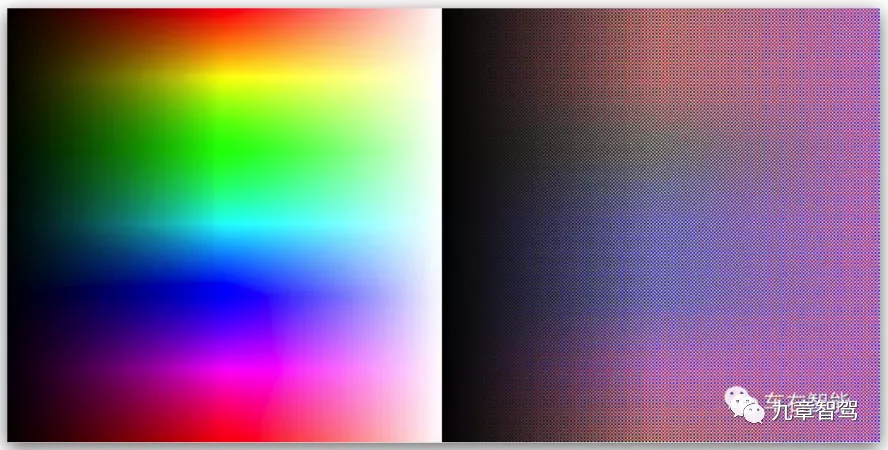

As the figure shows, the human visual system and an electronic imaging system follow the same logic. The color and pixel matrix captured by the retina is the more faithful representation of the objective external world, while the color humans actually perceive requires the participation of the brain (the equivalent of the ISP and higher-level back-end processing).

The left image shows a standard color chart with saturation and intensity gradients, while the right image shows the corresponding chart in raw colors. The comparison shows that imaging systems designed around human visual perception give us pleasing, subjectively appealing images that do not necessarily reflect the objective real world.

Musk believes that, to make images look “better” and more suitable “for human viewing,” the ISP’s post-processing alters or discards a lot of data that was originally useful. If the images are only meant for machines, that data could still be put to use, so omitting the post-processing step would increase the amount of usable information.

According to Liu Yu, CTO of Anyue Technology, Musk’s logic is:

- With richer raw data, the camera’s detection range may eventually exceed that of the human eye. When light is very dim or very bright, humans may see nothing (it is too dark or too bright), but the machine can still count photons and therefore output an image.

- The camera may resolve light intensity more finely: two points whose brightness or color differ too little for the human eye to tell apart may still be distinguishable to the machine.
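The second point can be illustrated with simple arithmetic. This is a minimal sketch, assuming a 12-bit linear sensor and a plain 2.2 gamma curve (both illustrative; real tone curves differ), with hypothetical function names `encode_8bit` and `surviving_levels`: squeezing 4,096 raw levels into an 8-bit output can keep at most 256 of them distinct, so neighboring raw values, especially in the highlights, end up with the same output code.

```python
"""Count how many distinct 12-bit raw levels survive an 8-bit, gamma-encoded output.

Assumes a 12-bit linear sensor and a simple 2.2 gamma curve; real ISPs use
more elaborate tone curves, so the exact numbers are illustrative only.
"""


def encode_8bit(raw, raw_max=4095, gamma=2.2):
    """Gamma-encode a linear raw value and quantize it to an 8-bit code."""
    return round((raw / raw_max) ** (1.0 / gamma) * 255)


def surviving_levels(raw_max=4095, gamma=2.2):
    """Number of distinct 8-bit codes produced from every possible raw value."""
    outputs = {encode_8bit(v, raw_max, gamma) for v in range(raw_max + 1)}
    return len(outputs)


if __name__ == "__main__":
    print(f"{surviving_levels()} distinct output codes from 4096 raw levels")
    # Two raw values one count apart in the highlights share an output code.
    print(encode_8bit(4000), encode_8bit(4001))
```

A network fed the raw values still sees 4000 and 4001 as different; after encoding, they are the same number.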

According to an AI engineer at one of the “Four Little Dragons” of Chinese AI, a good camera has a much larger dynamic range than the human eye (in a relatively static state); that is, the range from brightest to darkest that the camera can capture is wider than what the human eye can perceive. In extremely dark conditions, humans see nothing because almost no photons reach the eye, but the camera’s CMOS can still accumulate enough photons to see in the dark.
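Dynamic range is usually quoted as the ratio between the largest signal a pixel can hold (its full-well capacity) and its noise floor. The sketch below computes that ratio in decibels and photographic stops, plus the signal-to-noise ratio of a dim pixel where photon shot noise and read noise both matter. The sensor figures (10,000 electrons full well, 2 electrons read noise) are hypothetical round numbers, not specifications of any automotive camera.

```python
import math


def dynamic_range(full_well_e: float, read_noise_e: float) -> tuple[float, float]:
    """Return (decibels, stops) for the ratio full-well capacity / read-noise floor."""
    ratio = full_well_e / read_noise_e
    return 20 * math.log10(ratio), math.log2(ratio)


def snr(signal_e: float, read_noise_e: float) -> float:
    """SNR of a pixel that collected signal_e photoelectrons.

    Photon shot noise is Poisson, so its standard deviation is sqrt(signal_e);
    it adds in quadrature with the (assumed independent) read noise.
    """
    return signal_e / math.sqrt(signal_e + read_noise_e ** 2)


if __name__ == "__main__":
    db, stops = dynamic_range(10_000, 2)  # hypothetical sensor figures
    print(f"dynamic range: {db:.1f} dB, {stops:.1f} stops")
    # Around 100 photoelectrons in a dark scene still gives an SNR of roughly 10.
    print(f"SNR at 100 e-: {snr(100, 2):.1f}")
```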

Several experts interviewed by “Nine-Chapter Intelligent Driving” agreed with Musk’s logic.

Luo Heng, head of BPU algorithms at Horizon, explained: “Tesla’s data annotation currently comes in two types: manual annotation and automatic annotation by machine. Manual annotations are not based only on the information in the current image; they also carry human knowledge of the world, so here too the machine has a chance to exploit richer raw data. Automatic annotation, meanwhile, relies on hindsight observation and the consistency of a large number of geometric analyses. If raw data is used, the machine is quite likely to find more correlations and make more accurate predictions.”

Wang Haowei, chief architect of Joyson Intelligence, added: “Tesla stitches the raw image data together before it enters the DNN, so there is no need to post-process each camera’s perception results separately.”

Eliminating the ISP to improve the camera’s night-time recognition seems to run counter to Alibaba DAMO Academy’s strategy of developing its own ISP. Are the two strategies contradictory?

According to a visual algorithm expert at an autonomous driving company, the two companies actually want the same thing. In essence, both Alibaba DAMO Academy and Tesla hope to improve camera capability through a combination of chips and algorithms. The difference is that DAMO Academy processes and enhances the raw data with various algorithms so that the result is visually perceivable, whereas Tesla removes the processing steps meant to accommodate human visual perception and instead develops the data and capabilities needed to strengthen the camera across lighting environments.

Musk also said that skipping ISP processing could cut latency by about 13 milliseconds, since ISP processing adds 1.5 to 1.6 milliseconds of delay for each of the 8 cameras.
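The 13 ms figure is consistent with simply adding up the per-camera delays. That summation is an assumption implied by the numbers rather than something stated explicitly:

```latex
\Delta t_{\text{saved}} \approx N_{\text{cam}} \cdot t_{\text{ISP}}
  = 8 \times (1.5 \text{ to } 1.6)\,\text{ms}
  = 12.0 \text{ to } 12.8\,\text{ms} \approx 13\,\text{ms}
```

If the eight ISP pipelines instead ran fully in parallel, removing them would shorten end-to-end latency by roughly one camera’s 1.5 to 1.6 ms rather than by the sum.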

If Musk’s idea proves feasible, other chipmakers are likely to follow suit, and some are already doing so.

For example, Feng Yutao, General Manager of Ambarella China, said in a January interview that “CV3 fully supports feeding raw data directly into neural networks for processing if customers require it.”

The Physical Performance of Cameras Also Needs to Be Enhanced

Not everyone is completely convinced by Musk’s plan.

A VP of technology at a leading Robotaxi company said: “Tesla is not wrong, but I think the algorithms will be very difficult to develop and the development cycle may be very long. If we add a lidar, we can solve the 3D problem directly. Of course, we can also build the 3D model with pure vision, but that consumes a lot of computing power.”

The co-founder of Carzone AI considers Musk a “master of agitation” whose promotional style is “so exaggerated that you can’t help but feel a sense of awe for the technology.”

He said: “Some imaging experts believe that abandoning all ISP-level post-processing is unrealistic. Skipping steps such as intensity gain and color debayering would create many difficulties for downstream NN recognition tasks.”
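For reference, one common way to hand raw data to a neural network without debayering is to split the Bayer mosaic into four half-resolution color planes, subtracting the black level and normalizing along the way. The sketch below assumes an RGGB pattern, even image dimensions, and hypothetical black and white levels (64 and 4095); `pack_rggb` is an illustrative name, not Tesla’s or Ambarella’s documented method.

```python
"""Pack an RGGB Bayer mosaic into four half-resolution planes without demosaicing.

Sketch only: assumes an RGGB pattern, even height and width, and hypothetical
black/white levels. Input is a list of rows of raw integer values.
"""


def pack_rggb(mosaic, black_level=64, white_level=4095):
    h, w = len(mosaic), len(mosaic[0])
    if h % 2 or w % 2:
        raise ValueError("RGGB packing needs even height and width")
    scale = white_level - black_level
    # (row offset, column offset) of each color site within a 2x2 RGGB tile.
    offsets = {"R": (0, 0), "G1": (0, 1), "G2": (1, 0), "B": (1, 1)}
    planes = {name: [] for name in offsets}
    for name, (dy, dx) in offsets.items():
        for y in range(dy, h, 2):
            row = []
            for x in range(dx, w, 2):
                # Subtract the black level and normalize to [0, 1];
                # no color interpolation is done, unlike an ISP's debayer step.
                v = (mosaic[y][x] - black_level) / scale
                row.append(min(max(v, 0.0), 1.0))
            planes[name].append(row)
    return planes


if __name__ == "__main__":
    raw = [[100, 200, 300, 400], [500, 600, 700, 800]]
    print(pack_rggb(raw, black_level=0, white_level=1000))
```

The network then has to learn its own color interpolation instead of receiving an ISP’s demosaiced image, which is the trade-off the experts above are debating.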

A recent Carzone AI article raises several open questions. In what scenarios is feeding raw data that has not passed through the ISP directly into a neural network feasible? Does it work with Tesla’s current cameras, or does it require better visual sensors? Is it used in all of FSD Beta’s NN head tasks, or only in some of them? None of these has a definitive answer yet.

Let us return to the question I asked at the beginning: can improvements in visual algorithms break through the physical performance bottleneck of the cameras themselves?

A CEO of a Robotaxi company with a background in visual algorithms said: “Perceiving in backlight, or in the sudden glare when a vehicle exits a tunnel, is difficult for the human eye and impossible for a camera. In such cases, lidar is a must.”

According to Liu Yu, in theory, if cost were no object, it would be possible to build a camera whose performance surpasses the human eye, “but the low-cost cameras we currently use on our cars still seem far from reaching that level.”

This implies that solving camera perception in low- or high-light conditions cannot rely solely on better visual algorithms; it also requires improving the cameras’ physical performance.

For example, a camera that must detect targets at night cannot rely on visible-light imaging and has to be based on the principles of infrared thermal imaging (a night-vision camera).

An AI engineer from one of the “Four Little Dragons” believes that “photon to control” most likely means Tesla’s cameras, paired with the HW 4.0 chip, will be upgraded to multispectral cameras.

According to this engineer, driving cameras currently filter out non-visible light, yet objects emit light across a very broad spectrum, which could be used to further distinguish their characteristics. For example, a white truck and a white cloud are easy to tell apart in the infrared band, and for avoiding collisions with pedestrians or large animals, an infrared camera would be more effective, since warm-blooded animals emit readily distinguishable infrared light.
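The point about warm-blooded animals follows from Wien’s displacement law, which gives the wavelength at which a blackbody at temperature T emits most strongly: λ_max = b / T. A minimal sketch, treating a body at about 310 K (37 °C) as an ideal blackbody (an approximation): its emission peaks near 9 to 10 µm in the long-wave infrared, far beyond the roughly 1.1 µm limit of silicon CMOS sensors, which is why thermal cameras use different detectors such as microbolometers. The Sun’s surface (about 5,778 K) is included for comparison; the function name `peak_wavelength_um` is illustrative.

```python
# Wien's displacement constant b, in metre-kelvin (CODATA value).
WIEN_B = 2.897771955e-3


def peak_wavelength_um(temperature_k: float) -> float:
    """Wavelength (micrometres) at which an ideal blackbody at temperature_k emits most strongly."""
    return WIEN_B / temperature_k * 1e6


if __name__ == "__main__":
    for label, t in [("human body (~310 K)", 310.0), ("Sun's surface (~5778 K)", 5778.0)]:
        print(f"{label}: peak at {peak_wavelength_um(t):.2f} um")
```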

The “Car Intelligent” article also raises a question: will Tesla upgrade its camera hardware in line with the “photon to control” concept and introduce a true quantum camera, or keep the current cameras and simply bypass the ISP? The author also notes that if the camera hardware is upgraded, “Tesla will have to completely retrain its neural network algorithm from scratch because the input is so different.”

Moreover, however far camera technology advances, cameras may still be affected by dirt such as bird droppings and mud.

Lidar is an active sensor: it emits light and then receives the return. Its “pixels” are large, so ordinary dirt rarely blocks them completely. According to data from one lidar manufacturer, with dirt on the surface the lidar’s detection range drops by less than 15%, and the system automatically issues a warning when dirt is present. A camera, by contrast, is a passive sensor with very small pixels. A little dust can block dozens of pixels, which directly leaves the camera “blind” when its surface is dirty.

If this problem cannot be solved, isn’t it wishful thinking to expect better visual algorithms to eliminate the cost of lidar?

A few additional points:

1. How chipmakers design their products is only one side of the problem: if customers cannot make full use of raw data, they cannot bypass the ISP.

2. Even when both chipmakers and customers are able to bypass the ISP, most manufacturers will likely keep it for a considerable time. One key reason is that at L2, driving responsibility still lies with the human, and ISP-processed images shown on the screen make interaction easier and give drivers a sense of security.

3. Whether or not to bypass the ISP is really a continuation of the debate between the “pure vision camp” and the “lidar camp.” On this point, the view of the Robotaxi company’s technology VP quoted earlier is illuminating:

“In fact, the pure vision scheme is not about competing with the lidar scheme to see which is better. What really matters is how long it takes for pure-vision algorithm development to reach the level of the lidar scheme, and how long it takes for lidar costs to fall to a level similar to the pure vision scheme. In short, the question is whether the former’s technology advances faster or the latter’s cost falls faster.”

Of course, if the pure vision camp eventually needs to add sensors and the lidar camp needs to remove some, how much the algorithms will be affected, how long it will take to modify them, and what it will cost all remain to be seen.

Reference articles:

Musk’s latest interview: The hardest part of autonomous driving is establishing a vector space, and Tesla FSD may reach L4 by the end of the year | Alpha Storyteller

https://mp.weixin.qq.com/s/rSrN6FV3W4GRSSkfF9K_kg

Tesla chooses pure vision: camera ranging has matured, and lidar defects cannot be compensated

https://m.ithome.com/html/564840.htm

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.