Mobileye announced its next-generation chip at CES. Shortly before that, Intel revealed plans to spin off Mobileye and take it public.

I believe that, after one full development cycle, automotive vision is about to enter a new stage in which many new products and approaches will emerge. This case offers a useful lens on the technology and investment opportunities in this market segment.

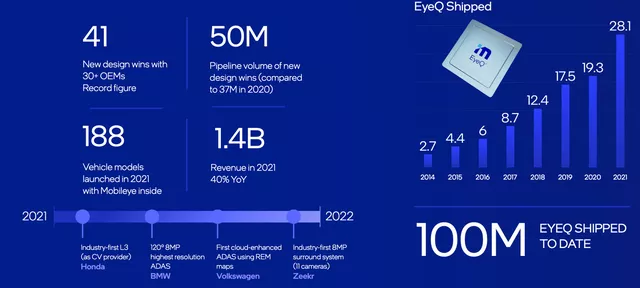

Note: Mobileye's shipment volume reached 28.1 million units in 2021, which is objective evidence of how quickly vision is penetrating the ADAS market.

Enhanced Perception

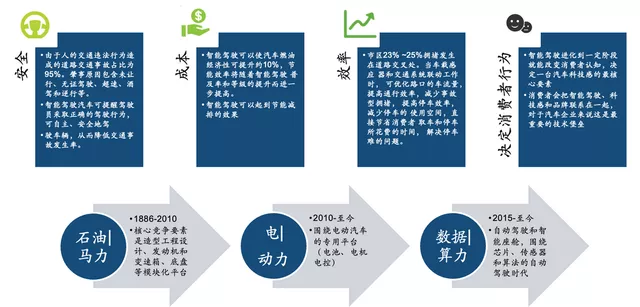

I really like the quote “Autonomous driving is the soul of the automotive industry.” Intelligent driving technology will change how people drive and will advance society as a whole in traffic safety, transportation costs, vehicle efficiency, and air pollution. This is an industrial revolution driven jointly by industry and the transportation sector.

For investors in autonomous driving, the main focus is the potential payoff: if the overall goal is achieved, it will completely overturn traditional notions of the value this technology creates for society and for automakers.

Note: On paper, the potential return is enormous. The market is so large that everyone can see it, which is why global venture capital and industrial capital have poured in. When those returns will actually materialize, however, remains unclear in the short term.

In China, this strategic high ground is highly valued by society and companies alike, and 2021 brought a major breakthrough: most automakers are stepping up their investment in cameras. As things stand, vision is the most certain perception pathway, and almost every automaker is building its main technology roadmap around vision-based perception.

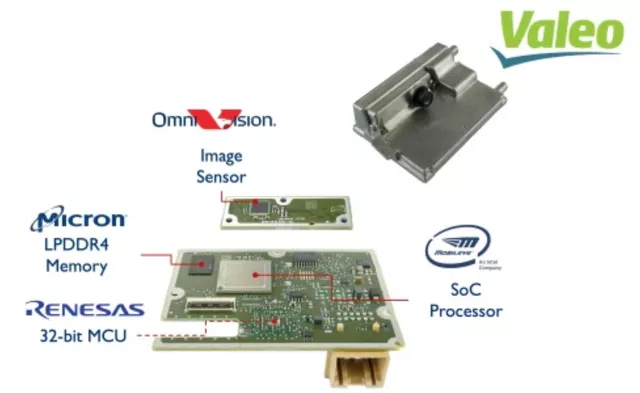

As shown in Figure 4, Volkswagen’s MEB platform architecture relies on Mobileye’s vision chips and algorithms, and the perception results are passed back to ICAS through Renesas chips (not yet deployed).

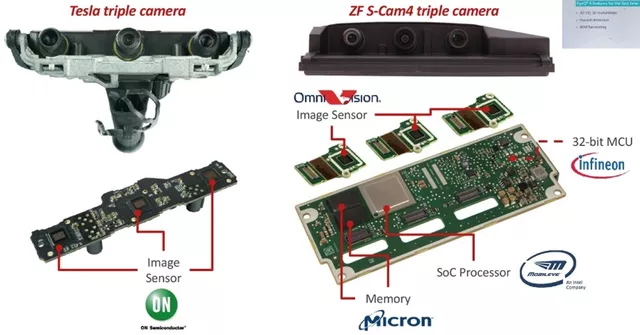

At the camera level, two fundamentally different approaches have emerged. The first processes distributed cameras, with automakers concentrating on perception-fusion algorithms. At its core, the question is whether automakers should have their own algorithm engineers handle integration in vision processing.

The second approach is Tesla’s, which differentiates itself in core areas. The logic is simple: Tesla’s procurement department sources cost-effective technology suppliers from around the world. In cameras, for instance, Tesla will source from LG Innotek, following Samsung Electronics’ earlier involvement. The two Korean camera-module makers readily secured contracts worth KRW 10 trillion and KRW 490 billion, a massive sum even before vision processing chips are counted.

Note: According to South Korean media reports, LG Innotek’s cameras will be used on Model 3 and Model Y vehicles for the North American and European markets, as well as on the upcoming Tesla Semi electric truck.

How the second half of the vision market will differentiate

Mobileye’s disclosures reveal a great deal. With the new generation of L3 systems, traditional automakers are also undergoing a fundamental shift from distributed camera processing to centralized processing.

Note: Mobileye is also developing 4D millimeter-wave radar chips, which is a very interesting shift.

# 2021: A Focus on Centralized Autonomous Driving Computing Platforms in the Automotive Industry


Major automakers are developing new systems on a centralized autonomous driving computing platform architecture. Such a platform has two main components: a heterogeneous distributed hardware architecture and an autonomous driving operating system.

Autonomous Driving Operating System

Built on the heterogeneous distributed hardware architecture, this software layer comprises system software and functional software. Its priorities are reliability, real-time performance, distributed elasticity, and high computing power, and it provides capabilities such as sensing, planning, control, network connectivity, scalability, and cloud control.

Heterogeneous Distributed Hardware Architecture

This architecture targets Level 3 and higher autonomous driving platforms, which must support many types and numbers of sensors. Existing single-chip solutions cannot meet the interface and computing-power requirements, so hardware designs typically use heterogeneous chips. This takes two forms: integrating chips of several architectures on a single board, and integrating units of several architectures on a single chip.

After systematic experimentation, major automakers have settled on their direction and are building high-performance systems, both through in-house development and by adopting premium high-performance hardware.

Following this trend, Mobileye also plans to use EyeQ Ultra to move from a distributed system to a centralized one. In my view, this approach fits the cost-driven model of traditional automakers better.

When the business model centers on selling different levels of autonomous driving as a service, overall hardware cost is no longer constrained by deployment, which leads to significant differences between approaches. Qualcomm’s entry has also given automakers more options for the main vision processing chip.

In summary, the era in which vision relied on low computing power and accumulated algorithms has become the “traditional” approach. The pace of technological evolution in cars is plain to see: the gap between the technology Mobileye showed in 2017 and what it shows now is enormous, and the ceiling is set by the string of companies following behind Eye.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.