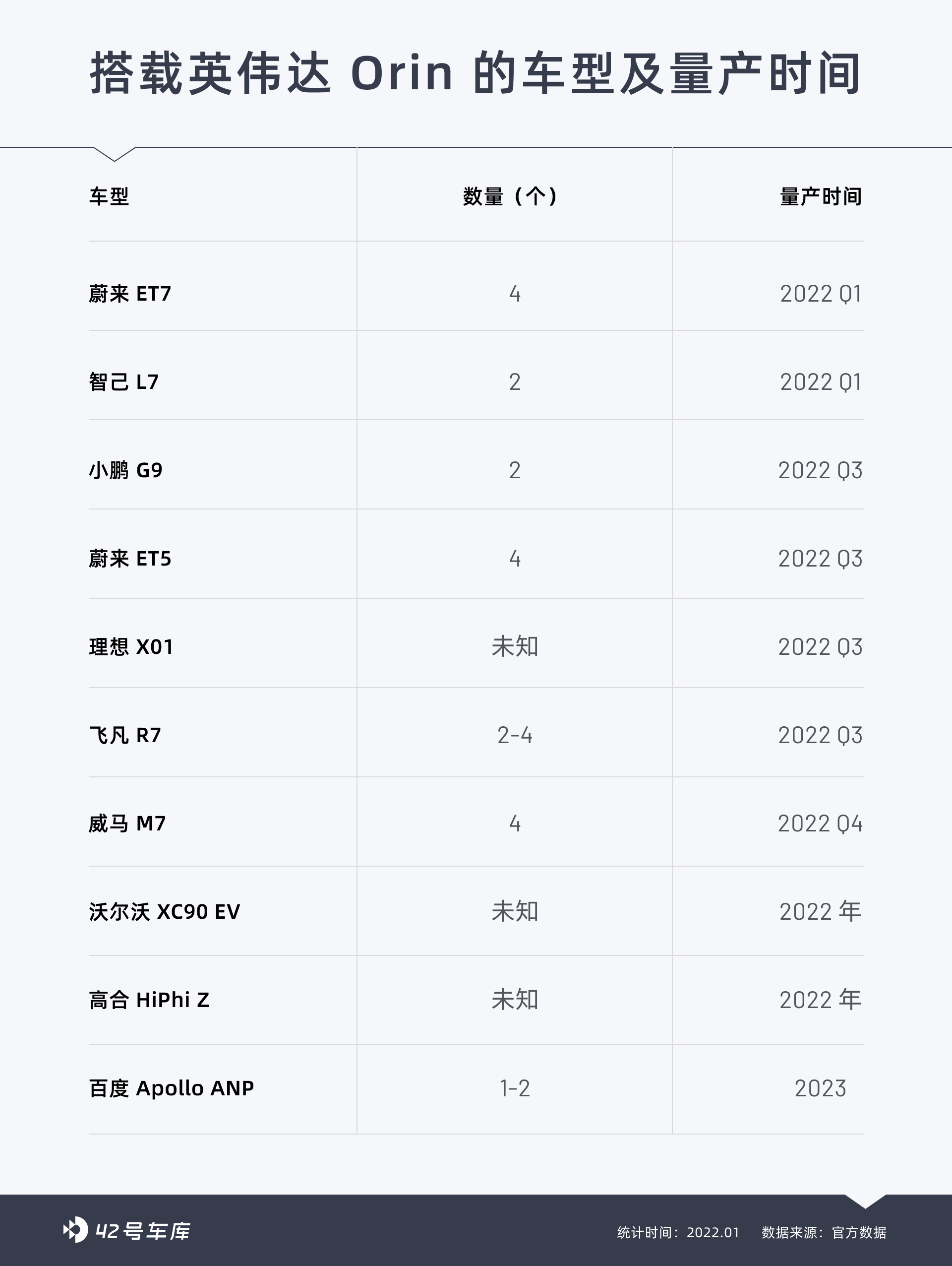

Criticism of Mobileye in China is growing louder. The core reason is that between 2020 and 2021, nearly all the major intelligent electric vehicle brands chose NVIDIA Orin as their autonomous driving chip.

Before 2019, Mobileye EyeQ4 dominated the entire industry. By 2020, only the extremely rare Xpeng P7 and BMW iX were equipped with Mobileye EyeQ5. However, Xpeng has announced that it will switch to another chip brand for its next generation of models, and BMW has clearly stated that it will build its autonomous driving on Qualcomm’s Snapdragon Ride platform by 2025.

There are two reasons why these automakers have turned to NVIDIA. First, Mobileye ships a black-box solution, so top automakers pursuing software breakthroughs have no room to work at the software level and have to turn to the more open NVIDIA or Horizon platforms.

Second, top automakers hope to achieve autonomous driving as soon as possible, so they install as much perception hardware as they can. Faced with the massive data this hardware generates, demand for chip computing power has naturally grown. However, Mobileye’s latest EyeQ5H chip offers only 24 TOPS, which struggles to meet these needs, so automakers have turned to NVIDIA or Horizon chips with more computing power.

But is it really true that Mobileye’s accumulation since 2007 is not worth mentioning? Can newly-emerging autonomous driving chips really replace Mobileye? Is Mobileye really not good enough?

After watching this year’s CES Mobileye release conference, I think it is still too early to draw conclusions.

It is undeniable that Mobileye has a leading accumulation of technology, but its commercial transformation has not kept pace with the times. NVIDIA met manufacturers’ needs at exactly the right moment. However, the algorithmic capability of vehicles using NVIDIA chips depends on the manufacturer’s own ability, and so far no automaker has shown real strength.

Therefore, what is more worth observing is whether the adjustment speed of Mobileye’s commercialization strategy is faster, or the speed of manufacturers’ self-developed algorithms is faster.

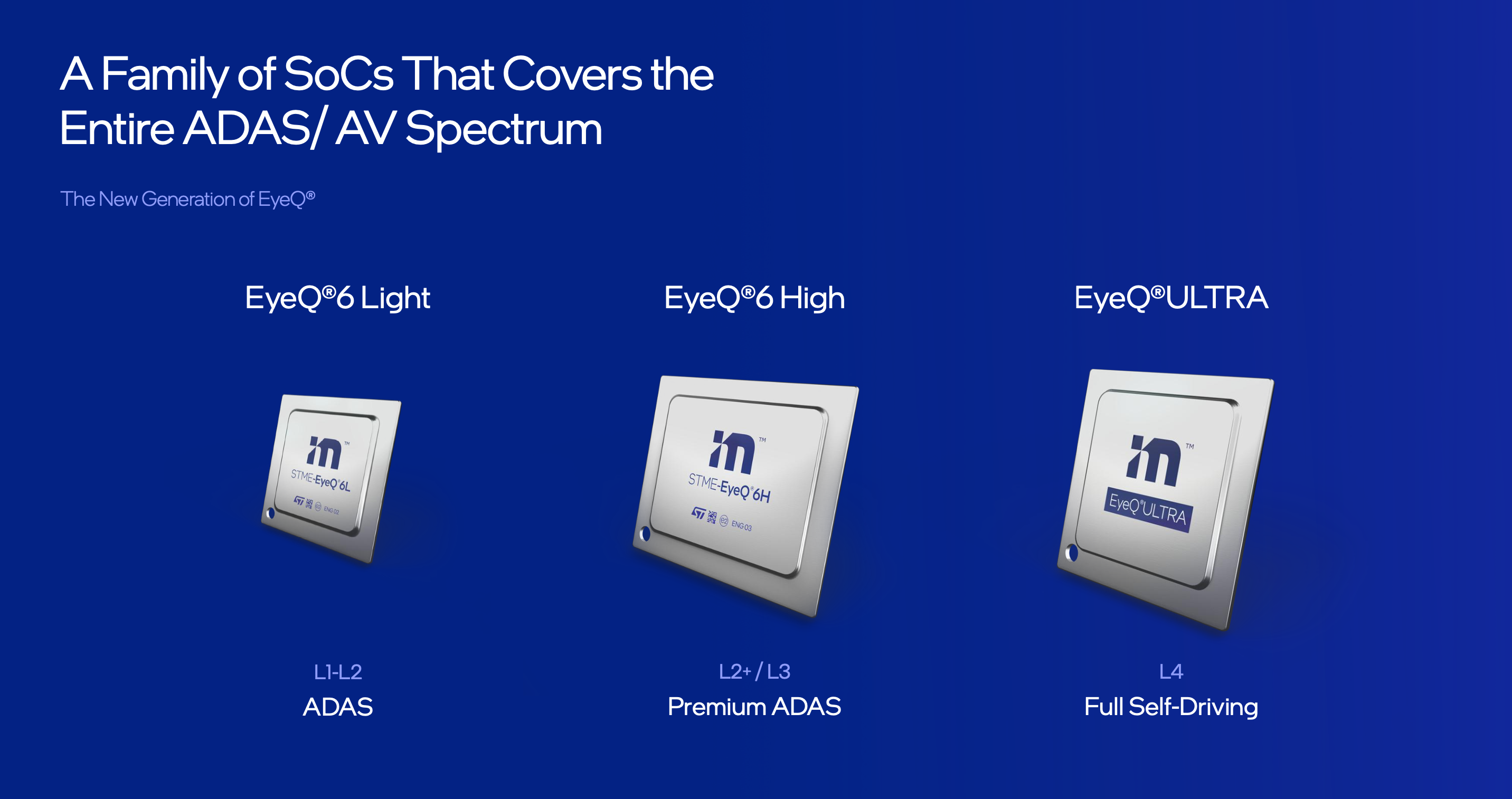

Autonomous driving chips have entered a stage of rapid growth, and the number of competitors has risen quickly, so competition is indeed fierce. Mobileye has lost orders from top automakers, but for the larger market of mid-tier automakers without algorithm capabilities, Mobileye is still the best choice.

At CES this year, Mobileye unveiled three chips and reported progress on its three strategic technologies.

After the press conference, we had the opportunity to interview Erez Dagan, Vice President of Products and Strategy Execution at Mobileye and Vice President of Intel. During the interview, many sharp questions were asked of Erez, such as:

“Because Mobileye uses a black-box technology route, there are doubts and hesitations in cooperation between domestic manufacturers and Mobileye. How does Mobileye view this phenomenon?”

“The computing power of the EyeQ Ultra chip is 176 TOPS and is not considered high compared to some industry competitors such as NVIDIA. How does Mobileye view the relationship between the computing power and autonomous driving at level L4 and above?”

But before discussing these blunt issues, let’s first review what Mobileye released at CES this year.

What Is the Level of These Three Chips?

At CES this year, Mobileye unveiled three chips: EyeQ Ultra, EyeQ6L, and EyeQ6H, which are the most high-profile aspects of CES this year. Let’s start with the most powerful EyeQ Ultra chip.

EyeQ Ultra

EyeQ Ultra delivers 176 TOPS of computing power. It has 12 RISC-V CPU cores with 24 threads in total and 64 accelerator cores, is built on a 5-nanometer process, and consumes less than 100W.

The accelerators come in four types: the first handles pure deep learning computation; the second is an FPGA-like accelerator called a CGRA (coarse-grained reconfigurable array); the third is a very long instruction word (VLIW) accelerator similar to a DSP; and the fourth is a multi-threaded CPU. Each type of core is responsible for different workloads.

Engineering samples of EyeQ Ultra are expected in the fourth quarter of 2023, with full production planned for 2025.

Mobileye defines this chip as “a single chip that can support level L4 autonomous driving.” But after these figures were released, two doubts were widely raised. One is whether 176 TOPS of computing power is enough for level 4 autonomous driving. After all, computing platforms with more than 1,000 TOPS are already installed on the NIO ET7 and ET5. The other is that advanced driver assistance will enter a period of rapid development from 2022, while Mobileye’s chip will not be mass-produced until 2025, which is too late.

In terms of timing, the schedules disclosed by the top car companies are indeed ahead of Mobileye’s. However, car companies may be overpromising: the lidar race began in early 2020, yet as of early 2022 only the XPeng P5 has reached mass production with lidar.

Regarding computing power, compared with the NVIDIA Orin chip delivered in 2022, EyeQ Ultra, which will not be delivered until 2025, does not have any advantages in terms of computing power data. But Mobileye CEO Shashua seems to have anticipated that everyone would say so and made an explanation at the press conference:

“Compared with the numbers of our competitors, 176 seems like a small number, probably only one-fifth of what our competitors claim to have in terms of computing power. But the key is not just computing power, but efficiency, which requires an in-depth understanding of the interaction between software and hardware, an understanding of what is core, and what algorithms to support the corresponding core.”

At the same time, Shashua gave the example of Zeekr’s SuperVision, which uses 11 eight-megapixel cameras. With 2 Mobileye EyeQ5 chips, the Zeekr 001 completes the full loop of perception, planning, and execution on only 48 TOPS of computing power.

On the debate over computing power, we also interviewed Erez Dagan, Vice President of Products and Strategy Execution at Mobileye and Vice President of Intel, and will return to the topic later.

EyeQ6H

EyeQ6H is an advanced version of EyeQ5H, with 34 TOPS of computing power and a 7-nanometer process. According to Mobileye, its computing power is nearly triple that of EyeQ5, while energy consumption increases by only 25%.

Currently, Mobileye achieves SuperVision through 2 EyeQ5 chips. The next generation of SuperVision will be achieved through 2 EyeQ6H chips, and even 1 EyeQ6H chip can achieve it.

EyeQ6H engineering samples are expected in Q4 2022, with mass production starting in 2024.

EyeQ6L

EyeQ6L can be understood as the low-end version of EyeQ6. It also uses a 7nm process, with 5 TOPS of computing power and power consumption of only 3 watts. Engineering samples were delivered half a year ago, and mass production is expected in 2023.
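Since Mobileye keeps steering the discussion from raw TOPS toward efficiency, a rough check of compute per watt may be useful. The sketch below uses only the TOPS and power figures quoted in this article; the dictionary and function names are illustrative, and because EyeQ Ultra is quoted at "less than 100W", its result is only a lower bound.

```python
# Figures as quoted in this article: (TOPS, watts).
# EyeQ Ultra is quoted as "less than 100W", so 100 W is an upper bound on power
# and the resulting efficiency is a lower bound.
QUOTED_SPECS = {
    "EyeQ Ultra": (176.0, 100.0),
    "EyeQ6L": (5.0, 3.0),
}


def tops_per_watt(tops: float, watts: float) -> float:
    """Compute efficiency as tera-operations per second per watt."""
    return tops / watts


for chip, (tops, watts) in QUOTED_SPECS.items():
    print(f"{chip}: {tops_per_watt(tops, watts):.2f} TOPS/W")
```

On these quoted numbers the two chips land in a similar efficiency range, which fits the picture of one architecture scaled across market segments, although TOPS/W is still a coarse metric.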

There are mixed reviews on this chip. Many netizens think it is a step backward because there is not much improvement in computing power.

Compared with the high-performance chips that have left a deep impression in everyone’s mind, seeing a chip released in 2022 with only 5 TOPS of computing power would indeed cause certain disappointment. Setting aside the statement of “not judging solely based on computing power,” a more compelling data point is that the order volume of EyeQ6L has exceeded 9 million.

In an interview at the press conference, Erez Dagan also said: “EyeQ6L has stronger computing power and lower power consumption. It is used to meet the needs of the basic ADAS segment. In this market, we need a highly integrated, very efficient, cost-effective, and low-power solution, and EyeQ6L undoubtedly meets market demand in these two aspects.

In addition, the product also considers the potential of additional cameras, such as driver monitoring systems or AEB. EyeQ6L, as a basic ADAS, was developed to meet different standards worldwide.”

Beyond Chips, This Is Where Mobileye’s Core Competitiveness Lies

In addition to the chips mentioned above, Mobileye also revealed the progress of its three-pillar strategy at the press conference.

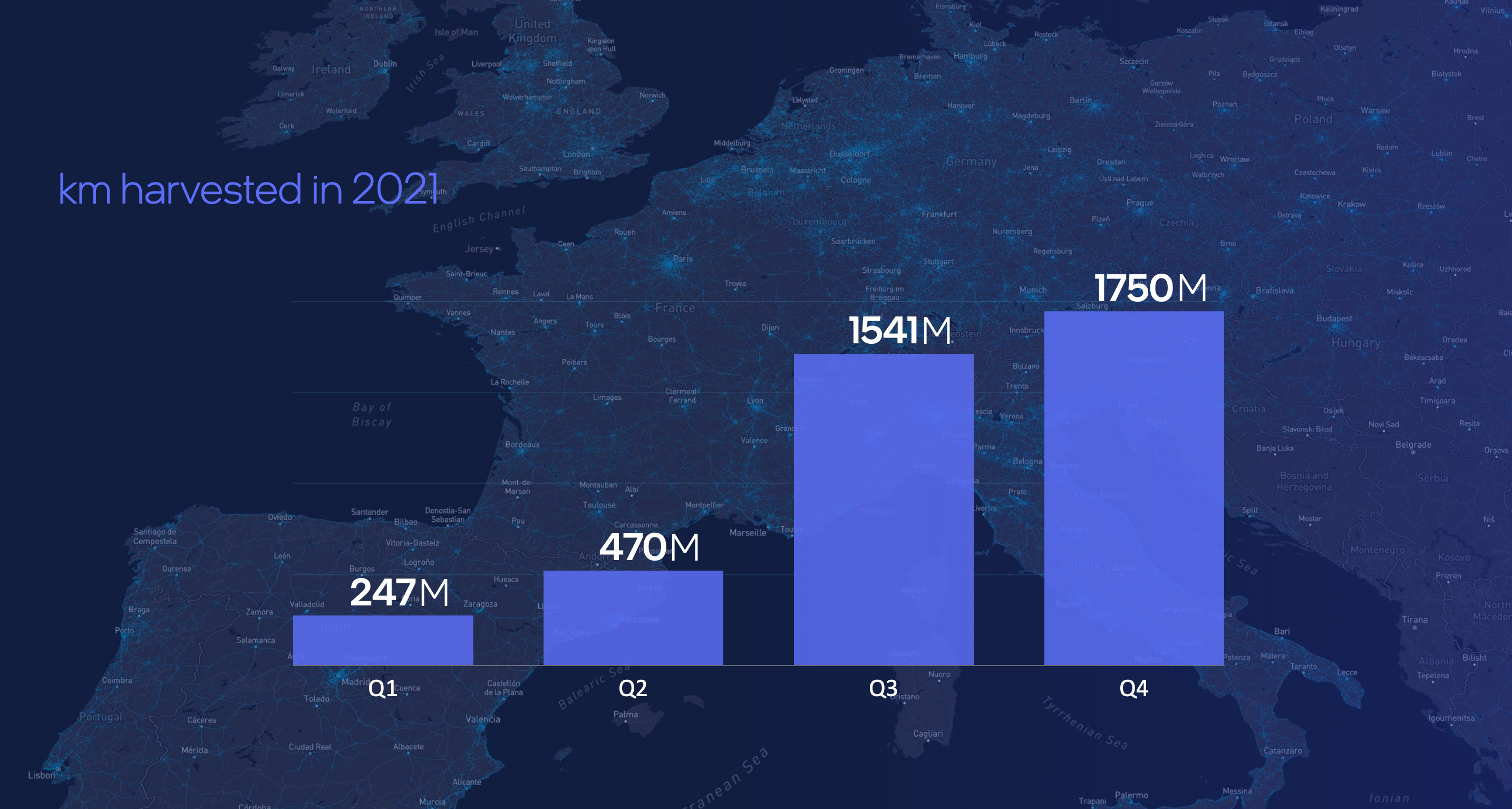

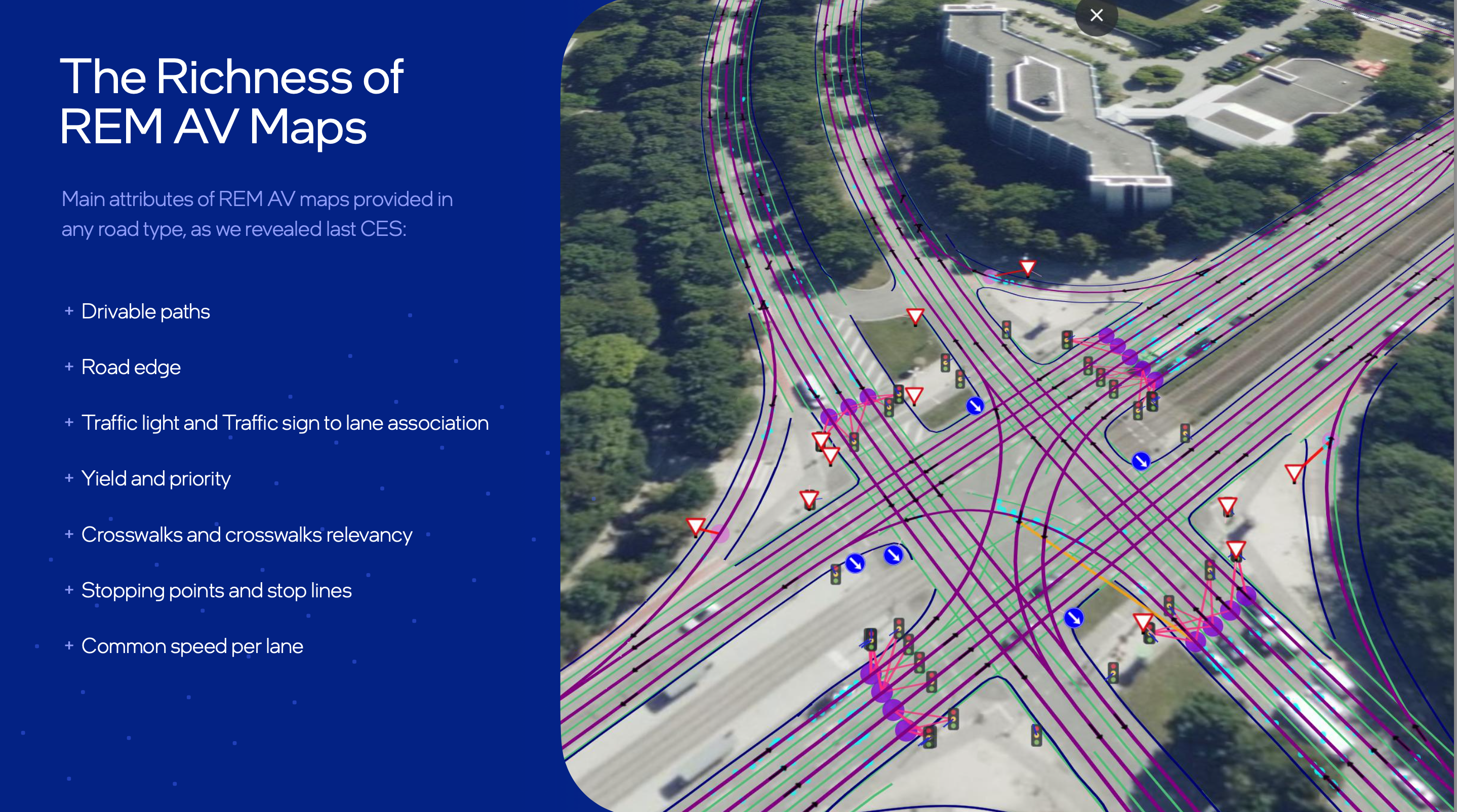

Crowdsourced map building technology REM

At this year’s CES, Mobileye announced that its existing crowdsourcing fleet collected a total of 4 billion kilometers of data during 2021 and currently collects 25 million kilometers per day, which means about 9 billion kilometers can be collected in 2022 at this rate.
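The annual projection follows directly from the daily rate. A minimal check, assuming the daily rate stays constant for all of 2022 (the function name is illustrative):

```python
# Figures quoted from Mobileye's CES presentation, as reported above.
KM_PER_DAY = 25_000_000
KM_COLLECTED_2021 = 4_000_000_000


def projected_annual_km(km_per_day: int, days: int = 365) -> int:
    """Distance harvested in a year if the daily rate stays constant."""
    return km_per_day * days


projected_2022 = projected_annual_km(KM_PER_DAY)
print(f"Projected 2022 harvest: {projected_2022 / 1e9:.1f} billion km")
print(f"Relative to 2021: {projected_2022 / KM_COLLECTED_2021:.1f}x")
```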

Compared with high-precision maps, REM maps are easier and faster to collect, cheaper, and fresher. Unfortunately, Mobileye did not provide detailed data at the launch event, and because of legal restrictions on Chinese roads, REM has not officially entered China. As a result, most consumers there cannot yet enjoy the advantages REM brings.

According to Garage 42, the launch date of the Zeekr 001’s navigation-assisted driving function is uncertain because REM is unavailable, and the compliance issues can only be resolved through Geely. Outside China, the highlight so far is that the Volkswagen ID.4 has implemented assisted driving on roads without lane markings based on REM maps.

In addition, Ford’s next-generation BlueCruise and the Zeekr equipped with Mobileye SuperVision will also use REM maps to achieve higher-level intelligent driving.

Radar with Imaging Capabilities

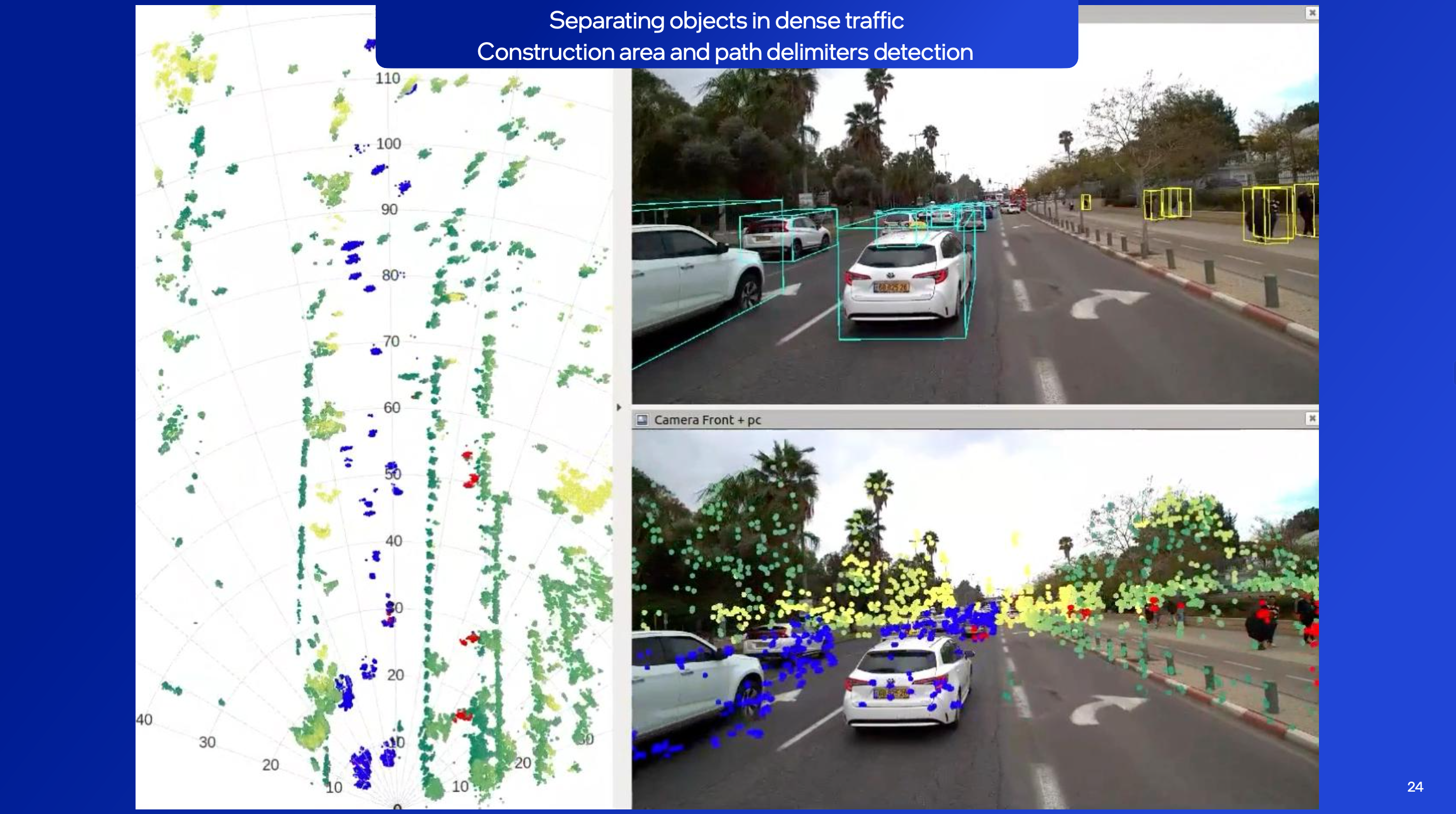

In the public’s understanding, Mobileye takes a purely visual approach similar to Tesla. This statement is not wrong, but it is not particularly rigorous. Mobileye adopts a visual-based approach, but it also has its own achievements in the fields of millimeter-wave radar and lidar.

Not All Millimeter-Wave Radars Are Created Equal

Currently, the mass-produced millimeter-wave radars on vehicles only have the ability to measure distance and speed, and they do not have the ability to image. This means that compared to vision cameras, millimeter-wave radars can only detect that there is something far away, but they do not know what this thing is. They also cannot produce models of the surrounding environment to provide more valuable information for intelligent driving, and their ability to operate in congested environments is very limited.

Shashua also candidly stated at the launch event: “Traditionally, this type of conventional radar as an independent sensor is basically meaningless.”

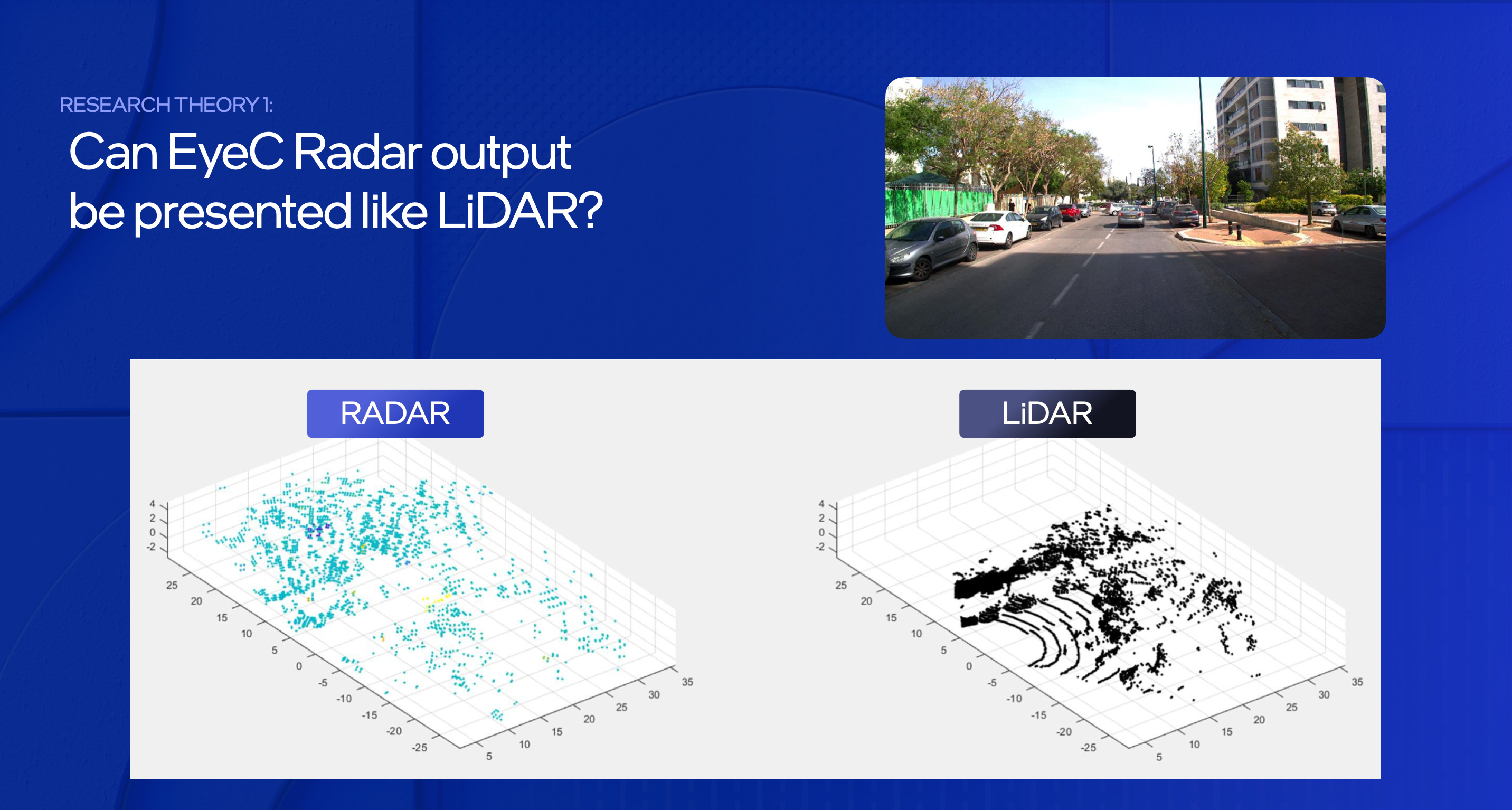

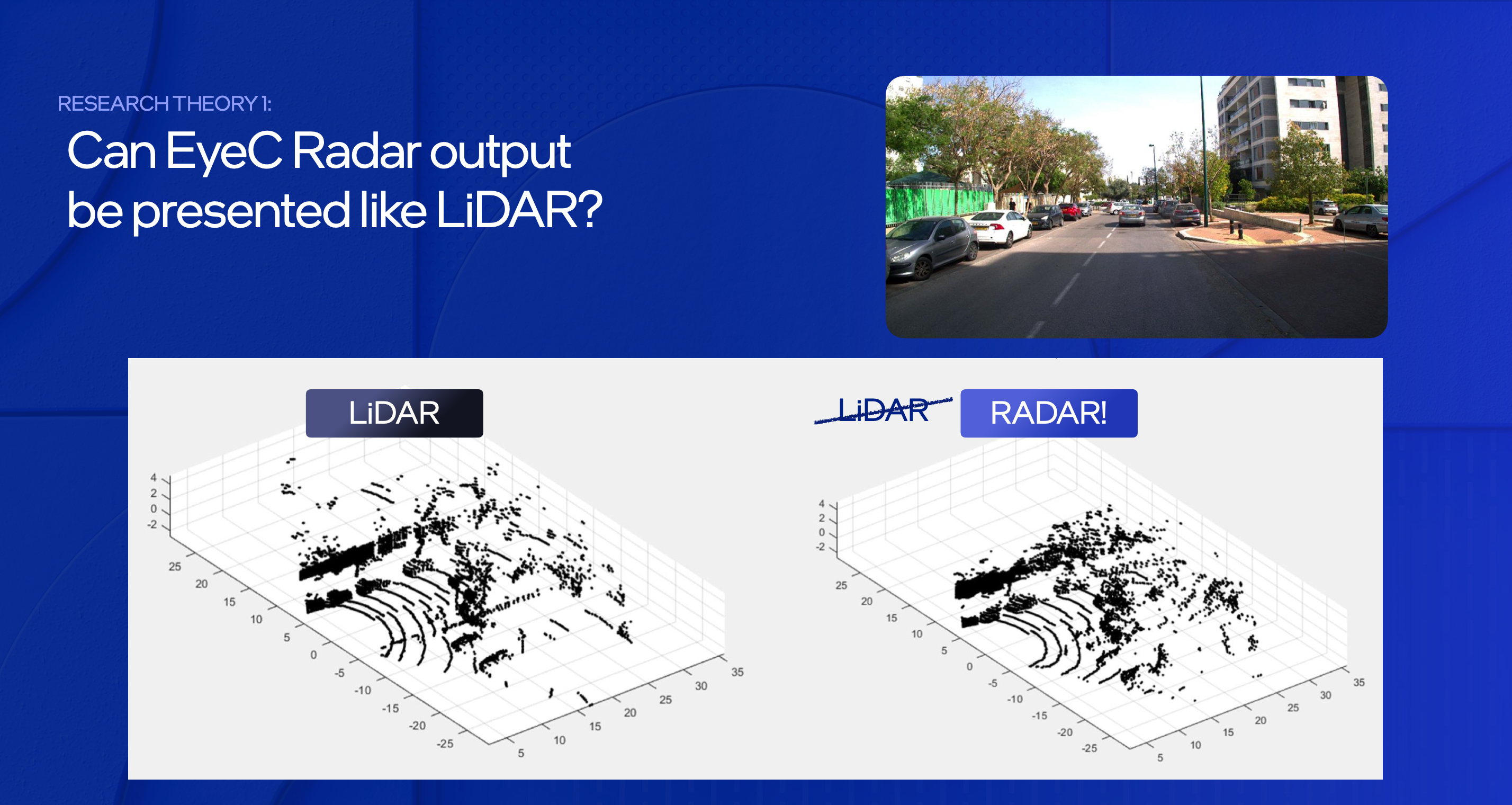

The millimeter-wave radar Mobileye has defined has imaging capabilities, similar to a 4D millimeter-wave radar, and information about it was first released at CES last year.

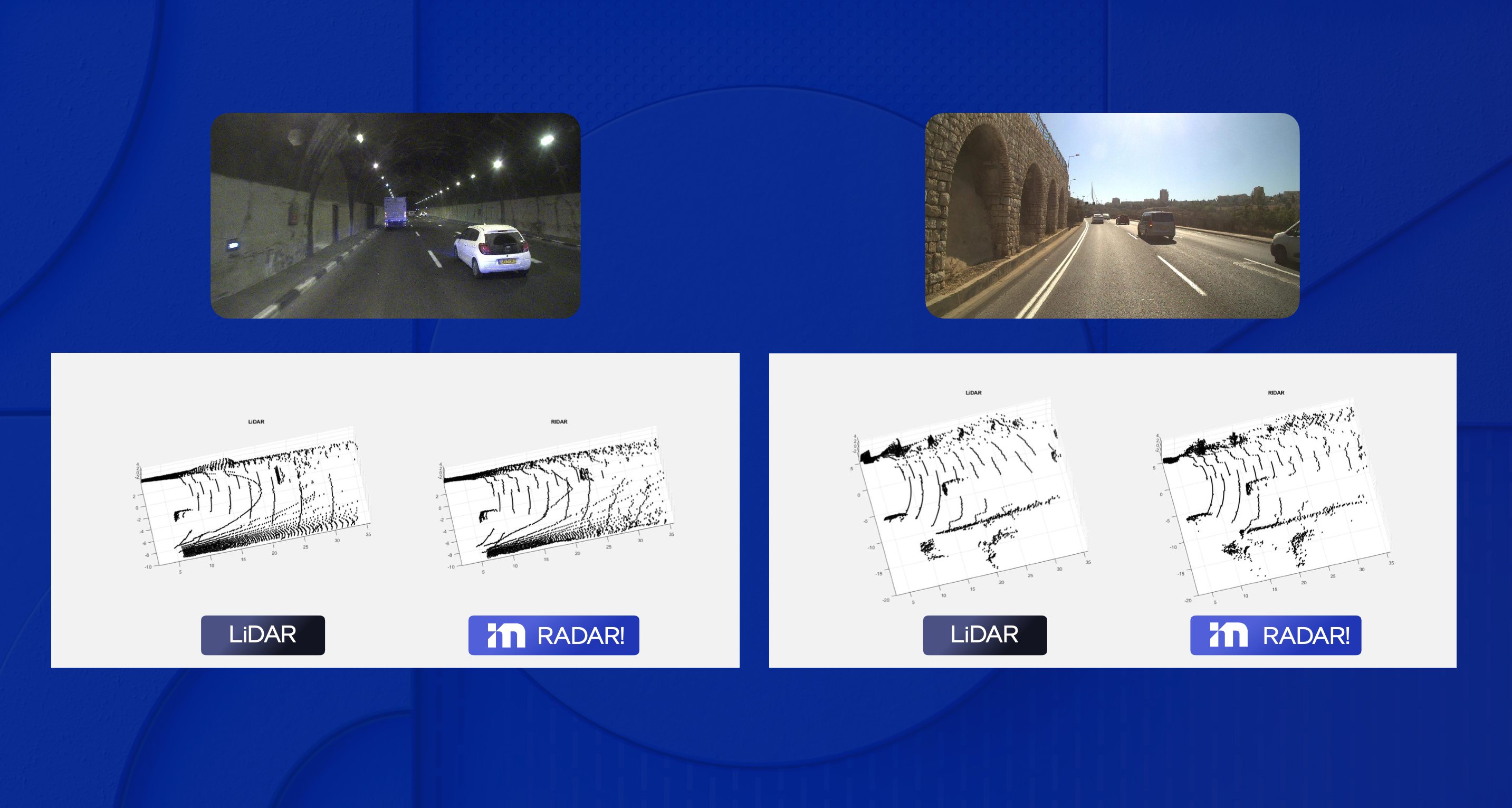

After processing with deep learning algorithms, Shashua says, the millimeter-wave radar’s perception output can achieve a lidar-like effect. The radar’s detection results were shown at CES this year, and judging from them, its precision in detecting and imaging the surrounding environment is already very close to that of lidar. Compared with lidar, millimeter-wave radar performs better in adverse weather and costs less.

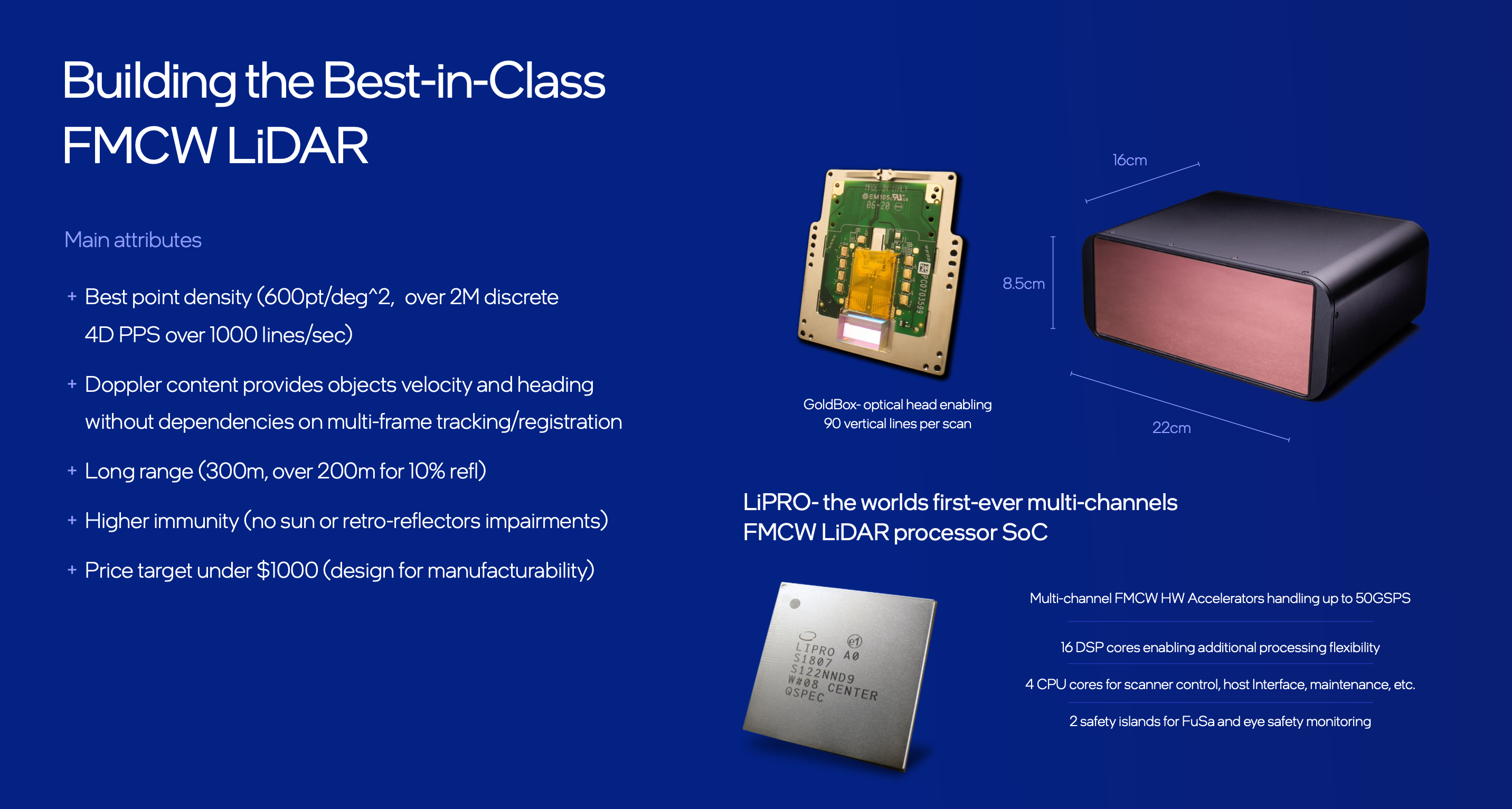

The FMCW lidar developed by Mobileye is different from ToF lidars

In addition to the imaging millimeter-wave radar described above, Mobileye set up a department last year to develop an FMCW lidar. Unlike most ToF lidars currently on the market, an FMCW lidar measures distance not from the round-trip time of a light pulse but from the frequency shift of the returned light. Its advantages are that it is not affected by other light sources and that each point in the point cloud carries velocity as well as distance, so it is better suited to tracking surrounding traffic participants. The disadvantages are higher technical difficulty and a higher mass-production price. Mobileye’s target price is less than $1,000.
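To make the frequency-based measurement concrete, here is a minimal sketch of the textbook triangular-chirp FMCW relations, not Mobileye’s implementation. The wavelength, chirp bandwidth, chirp duration, and the sign convention (positive velocity means the target is approaching) are all assumptions chosen for illustration. The forward model generates the two beat frequencies a target would produce, and the inverse recovers distance and speed from them.

```python
C = 299_792_458.0          # speed of light, m/s

# Assumed illustrative sensor parameters (not Mobileye specifications).
WAVELENGTH_M = 1550e-9     # assumed laser wavelength
CHIRP_BANDWIDTH_HZ = 1e9   # assumed frequency sweep per chirp
CHIRP_DURATION_S = 10e-6   # assumed duration of each up or down sweep
SLOPE_HZ_PER_S = CHIRP_BANDWIDTH_HZ / CHIRP_DURATION_S


def beat_frequencies(range_m, velocity_mps, slope=SLOPE_HZ_PER_S,
                     wavelength=WAVELENGTH_M):
    """Forward model: beat frequencies on the up- and down-chirp.

    Convention (assumed): velocity > 0 means the target is approaching.
    """
    f_range = 2.0 * range_m * slope / C          # shift caused by round-trip delay
    f_doppler = 2.0 * velocity_mps / wavelength  # shift caused by target motion
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down, slope=SLOPE_HZ_PER_S,
                       wavelength=WAVELENGTH_M):
    """Inverse: recover range and radial velocity from the two beat frequencies."""
    range_m = C * (f_up + f_down) / (4.0 * slope)
    velocity_mps = wavelength * (f_down - f_up) / 4.0
    return range_m, velocity_mps


f_up, f_down = beat_frequencies(range_m=100.0, velocity_mps=20.0)
recovered_range, recovered_velocity = range_and_velocity(f_up, f_down)
print(f"range = {recovered_range:.2f} m, velocity = {recovered_velocity:.2f} m/s")
```

The sum of the two beat frequencies isolates distance and their difference isolates velocity, which is why every FMCW return can carry a speed measurement that a ToF pulse cannot provide directly.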

With these two sensors complementing vision, the autonomous driving system achieves threefold redundancy. Moreover, unlike the sensor fusion that automakers build from lidar and millimeter-wave radar, Mobileye splits its sensors into two independent subsystems that are not connected to each other: one using only cameras, the other using only radar and lidar. Each has been used on its own to create an end-to-end driving experience. Mobileye hopes to improve robustness and achieve safety redundancy through the complementary use of these two subsystems.

The key to efficient use of computing power – RSS Responsibility Sensitive Safety principle

Both at the CES conference and in the interviews afterwards, Mobileye repeatedly emphasized the views of “not relying solely on computing power” and “efficiency and the combination of software and hardware are equally important.” To make the case convincing, Shashua also shared some technical details.

Here I also try to use concise words to share what I understand with everyone. If there is any deviation, please correct me.

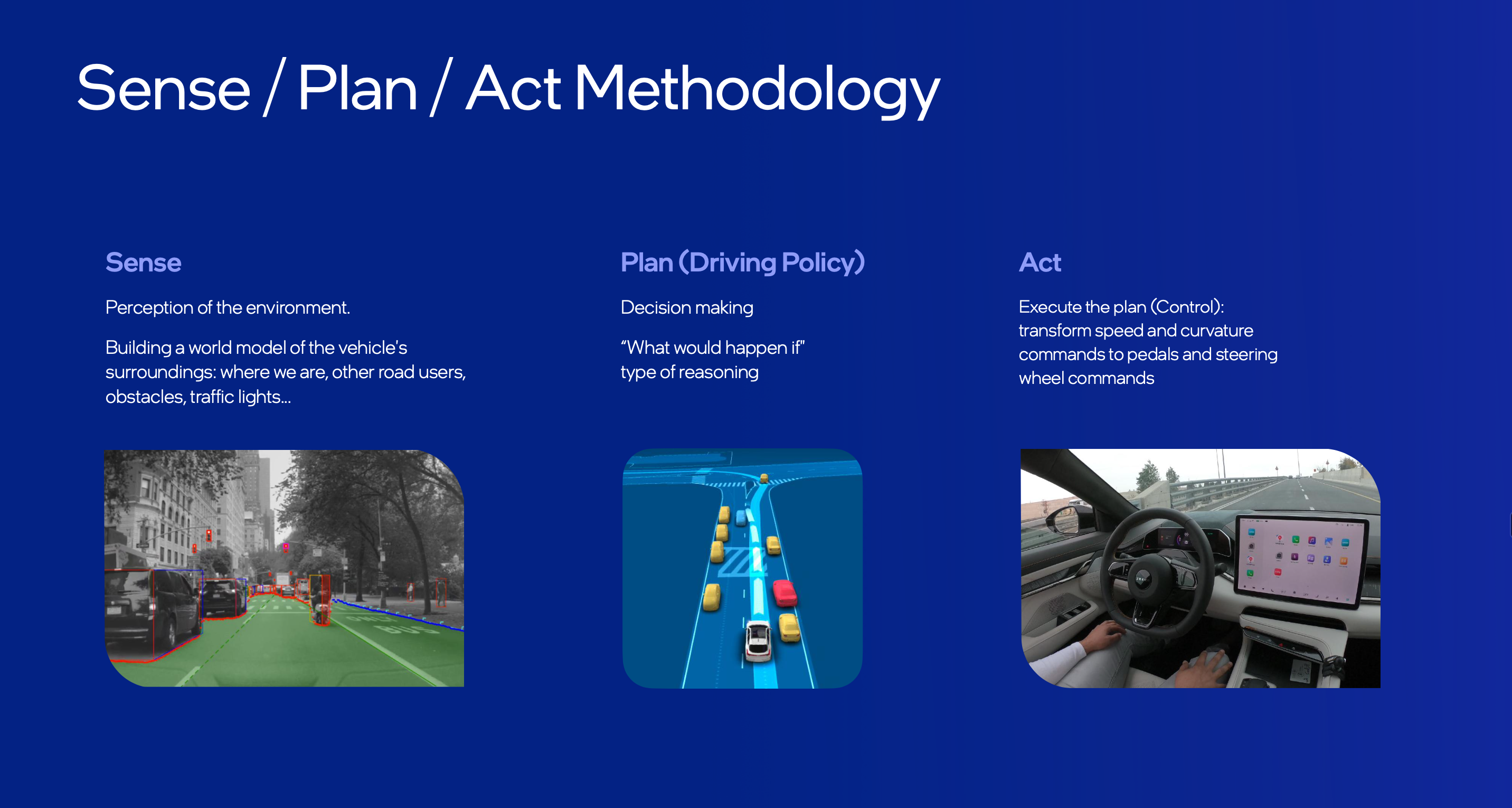

Achieving autonomous driving requires three steps: perception-decision-execution, which sounds as simple as stuffing an elephant into a refrigerator.

The most computationally intensive parts are the “perception” and “decision” stages.

On the perception side, the number of cameras keeps increasing and their resolution keeps improving in order to strengthen perception, and the data they generate is growing exponentially as well.

To use computing power more efficiently, Mobileye performs scene segmentation (NSS) on the visual perception output and prioritizes computation on road information instead of blindly processing the whole scene.

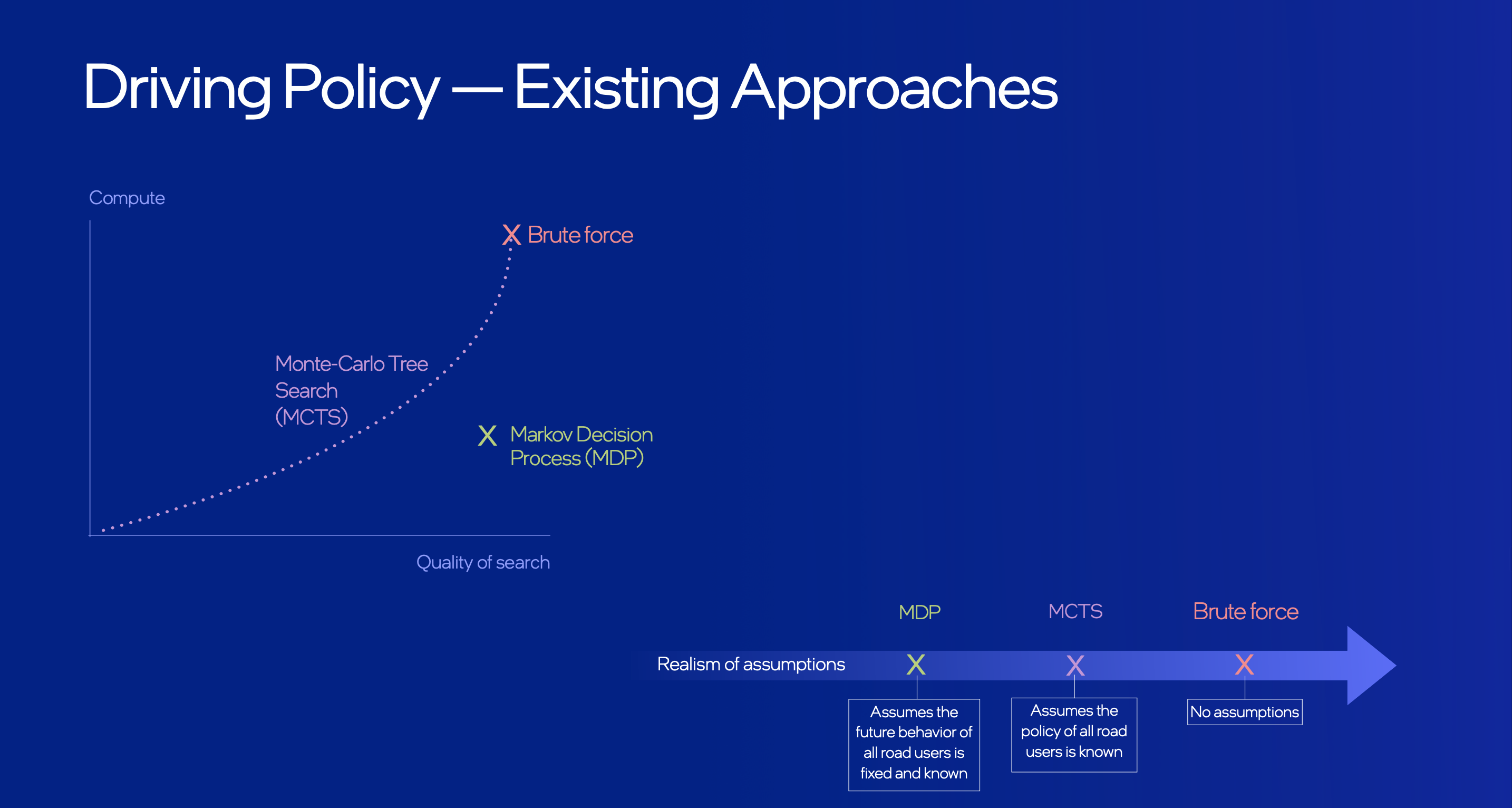

On the decision-making side, the most computationally intensive part is predicting the trajectories of surrounding traffic participants from the perception information and then deciding on a reasonable and safe route. However, once prediction is involved, computing demand grows exponentially as the prediction horizon lengthens.
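A toy count shows why the growth is exponential. The sketch below assumes a deliberately simplified discretization in which every traffic participant picks one of a few maneuvers at each time step. This is an illustration of the combinatorics only, not a description of Mobileye’s planner, and the function name and numbers are hypothetical.

```python
def joint_futures(options_per_step: int, agents: int, steps: int) -> int:
    """Number of distinct joint futures when each of `agents` participants
    independently picks one of `options_per_step` maneuvers at every step."""
    return (options_per_step ** agents) ** steps


# Assumed toy scenario: 4 surrounding participants, 3 maneuvers each per step.
for horizon in (1, 2, 4, 8):
    print(f"horizon={horizon} steps -> {joint_futures(3, 4, horizon):,} futures")
```

Doubling the horizon squares the number of futures to evaluate, so any rule that discards implausible branches early saves a disproportionate amount of computation.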

Here, Mobileye introduces the RSS (Responsibility Sensitive Safety) principle, which aims to ensure that a self-driving car never causes an accident itself by turning several common-sense notions of human driving into explicit, computable rules, including:

- What are dangerous situations?

- What is the correct response in dangerous situations?

- Who is responsible for the accident?

- Safety distance under different driving scenarios.
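The safe-distance item can be made concrete with the longitudinal rule from Mobileye’s published RSS formulation: the rear car must leave enough room to stop even if it accelerates during its response time and then brakes only gently while the front car brakes as hard as possible. The sketch below implements that formula; the parameter values in the example are assumptions for illustration, not calibrated numbers from Mobileye.

```python
def rss_min_following_distance(v_rear: float, v_front: float,
                               response_time: float, a_max_accel: float,
                               a_min_brake: float, a_max_brake: float) -> float:
    """Minimum safe longitudinal gap (meters) under the RSS rule.

    v_rear, v_front: speeds of the rear and front cars in m/s.
    response_time:   rear car's response time in seconds.
    a_max_accel:     worst-case acceleration of the rear car during the response time.
    a_min_brake:     minimum braking the rear car is guaranteed to apply afterwards.
    a_max_brake:     hardest braking the front car might apply.
    """
    # Rear car's speed after accelerating for the whole response time.
    v_rear_after = v_rear + response_time * a_max_accel
    distance = (
        v_rear * response_time
        + 0.5 * a_max_accel * response_time ** 2
        + v_rear_after ** 2 / (2.0 * a_min_brake)
        - v_front ** 2 / (2.0 * a_max_brake)
    )
    return max(0.0, distance)  # a negative result means any gap is safe


# Assumed example: both cars at 20 m/s (72 km/h), illustrative parameters.
gap = rss_min_following_distance(v_rear=20.0, v_front=20.0, response_time=1.0,
                                 a_max_accel=2.0, a_min_brake=4.0, a_max_brake=8.0)
print(f"minimum safe gap: {gap:.1f} m")
```

Because the rule is a closed-form check, the planner can test a candidate maneuver against it cheaply instead of simulating every possible behavior of the car ahead.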

Mobileye constrains its computation according to the driving policy above. Based on RSS, the system only computes plausible futures rather than all futures, which helps Mobileye use computing power more efficiently.

The three major technologies mentioned above will help Mobileye better address L3/L4 scenarios.

According to the information released at CES, Mobileye has already cooperated with Honda and Valeo at the L3 level, where it is responsible for visual perception.

At the L4 level, Mobileye’s Robotaxi will start road testing in mid-2022, and after being approved by the end of the year, it will conduct driverless testing in Germany and Israel, achieving true autonomous driving, with an expected cost of $150,000 per vehicle.

The L4 for consumers will be launched in 2024-2025. Unlike Robotaxi, the consumer L4 can drive in wider areas, but heavily relies on REM, and will retail for $10,000 with a cost estimate of less than $5,000.

In Mobileye’s view, Robotaxi and consumer L4 are not in conflict, and the earlier investment in Robotaxi can help Mobileye obtain valuable data, while the consumer L4 can achieve cost reduction through scale.

In conclusion, the above is all the information revealed by Mobileye at CES this year. It is undeniable that Mobileye’s years of accumulation still give it an absolute advantage in visual technology. From our test with EyeQ4 chips equipped on vehicles, they have shown decent basic abilities. When we communicated with ADAS leaders of car manufacturers, they also admitted that the quality of Mobileye’s results was very high.

However, due to the fact that the perception algorithm is a black box that cannot meet the needs of car manufacturers for self-developed algorithms, Mobileye has lost many orders from top car companies. Industry insiders have also revealed to us that compared to Mobileye, NVIDIA and Horizon Robotics provide better open development tools for car companies to realize algorithm self-development with higher communication efficiency.

Mobileye also stated that they will launch an open computing platform with Intel in January, and last October, their technical staff went to China to support the Zeekr SuperVision project. Intel in China also formed a 50-person team to support the development of Zeekr SuperVision.

Therefore, it is too early to say that Mobileye is no longer competitive. What is worth observing is whether Mobileye adjusts its commercialization thinking faster than automakers develop their own algorithms.

But it must also be considered that, as demand for autonomous driving chips grows, more and more suppliers can provide them, turning this market from a seller’s market in 2019 into a buyer’s market.

Finally, we still pay the highest respect to Mobileye, a company that pioneered the vision-based autonomous driving technology and is still dedicated to the development of the industry’s technology.

The following interview content was organized by Garage No. 42:

Mobileye’s Explanation on the Black Box

Question: On openness, many customers in the Chinese market have given feedback that Mobileye’s algorithms, chips, and other hardware are all black boxes. Is Mobileye aware of this issue? Will more work be done on EyeQ6 or EyeQ5 to allow OEMs to do more of their own development in the future?

Answer: I have answered this before, and now I’ll share more. Regarding the basic forward ADAS camera providing safety warning functions, through the close combination of software and SoC, it can lower verification costs, improve energy efficiency, and reduce cooling costs. Our customers really like this compact design for a forward-facing safety-assist ADAS camera. This is the forward-facing camera they want.

When it comes to multi-camera systems with centralized computing platforms, this is also what we set as a design goal when we designed EyeQ5 for these areas about three to four years ago, making it a programmable platform.

We now have four to five customers overseas who are developing software for the EyeQ5 chip with us, with OEM or Tier1 partners providing additional development space. This is the framework for EyeQ5 design. As defined, EyeQ5 can support third-party programming.

A few years ago, we also announced the support for the driver monitoring system on EyeQ, which is also part of our vision. On EyeQ6, we go further. EyeQ6H can support video playback, and visualization is achieved through GPU and ISP. We hope that these contents can meet the customized needs of OEMs and that we can cooperate with them, that is, support an external software layer defined by our partners.

In summary, basic ADAS is a very price-sensitive product, and our customers do not need to program on the EyeQ chip, such as EyeQ4 chip, they only need to provide a safety assist function behind the windshield.

These customers pursue the most economical, safe and low-power products that can provide functions. In the central computing architecture of multi-camera and multi-sensor systems, we fully meet the programming needs of partners for EyeQ.

Mobileye’s View on Computing Power

Question: At CES, the EyeQ Ultra chip was unveiled with 176 TOPS of computing power, which is not considered high compared to some industry competitors like NVIDIA. So, how does Mobileye view the relationship between computing power and Level 4+ autonomous driving?

Answer: I believe this reinforces Prof. Shashua’s speech. We are very frank that TOPS is a very insufficient indicator of computing power. The computational models we integrate into the EyeQ chip are highly complex and cannot be quantified by any single indicator. It is not only a matter of a high TOPS value: the workloads in this task differ in their mix of thread-level, instruction-level, and data-level parallelism, which makes the problem much more complex than simply optimizing neural networks.

So, firstly, this metric is invalid. The evidence is that we can run the entire SuperVision system on two EyeQ5 chips, with computing power, or TOPS, an order of magnitude lower than the figures our competitors discuss.

We co-designed the software and the hardware, achieving a compute density that TOPS does not capture. That density allows us to run a very broad task profile on two EyeQ5 chips: perceiving the whole environment with 11 cameras, localizing on a map, generating driving policy, and computing RSS on top of it.

The second point is also a key element that we need to remember and recognize. We have been engaged in autonomous driving product work for more than 20 years, and the energy consumption of computation is critical to the economics of the product.

EyeQ Ultra is designed to be powerful enough to match what we are running now on Mobileye Drive, an autonomous driving system based on 8 EyeQ5 chips, and to run the same computation at a power consumption of 100 watts. This is unprecedented, and in the world of electric vehicles it is a critical standard and a critical KPI, because we do not want computation to drain the battery.

About the planning of a domestic data center in China

Question: Mobileye announced at this year’s CES that it will establish a domestic data center in China and expand the number of Mobileye teams in China. Does this mean that REM, which is a crucial component of Mobileye’s visual solution, has a chance to land in China? If everything progresses normally, at what time point is it expected to land?

Answer: We are indeed building a strong and important team in China, and this has become a reality through our collaboration with Zeekr. This is a very important step for Mobileye, allowing us to work flexibly within such a large organization. We have deployed personnel from Intel, and also transferred technical personnel from Israel to China.

Regarding REM technology, we have already equipped Zeekr’s 001 model with SuperVision and crowdsourced mapping technology. REM will be used in China in a fully compliant and fully localized manner. This is very important for Mobileye and I look forward to REM’s launch and operation in China in the future.

Thoughts on Mobileye’s positioning of EyeQ6L product

Question: EyeQ6L is a successor to EyeQ4. Besides the volume, is it overall more powerful?

Answer: In terms of capabilities, the new EyeQ6 product is very well suited to market demand. EyeQ6 Light has stronger computing power and lower power consumption, which meets the needs of the basic ADAS market segment. In this market, we need an integrated and very efficient solution that is both cost-effective and power-efficient, and EyeQ6 Light undoubtedly meets both of these requirements.

We have increased its computing power, but it should be noted that measuring the index based solely on computing power is incorrect. Compared with its predecessor, EyeQ6 Light has 2.5 times the computing power and lower energy consumption, making it a very competitive product.

In addition, this product also considers the potential of additional cameras. Multiple cameras can be used as part of the basic ADAS, such as a driver monitoring system, or rear parking or AEB. EyeQ6 Light basic ADAS was born to meet different global standards.

EyeQ6 High was designed with improved visual display capabilities, which is an important feature that has been completely improved based on EyeQ5. In terms of computing power, EyeQ6 High has increased 2.5 times compared with the previous generation EyeQ5 High.

Mobileye’s thoughts on the competitive landscape of the chip market

Question: Both domestic and international chip markets now have a relatively clear structure. For example, NVIDIA has already obtained many orders in the high-end intelligent vehicle market, Huawei is also competing for market share in some domestic car companies, and Qualcomm has also won orders.

Answer: A very important point is that companies selling SoCs are very different from companies providing strong, validated solutions. As for the timing of the market, we agree with your view that we have seen a huge demand for EyeQ chips in 2024-25, and we may gradually announce relevant news in the future.

Our position is completely different, and I may want to emphasize the importance of what we are doing. OEMs that need solutions require a highly scalable solution, which means that a solution suitable for the low-end market should be accessible, and the solution should be adapted to the mid-range and high-end markets by adding additional content and functions. The verification problem is that people need scalable content and additional functionality. A complex and critical system, such as a driver assistance or an autonomous driving system, needs to be verified to run safely and strictly.

Industry insiders are aware that when you decompose the system to meet multiple requirements, only slight differences in verification can be made on enhanced or added systems, such as subsystems for radar and lidar, and from high-end assistive driving to consumer-level autonomous vehicles.

Utilizing our powerful forward-facing ADAS camera to its fullest extent and incorporating it as part of the SuperVision 360-degree view, the data verification required in this regard is the same. So, compared to other SoC companies that compete in terms of AI computing power or other performance indicators, our position is completely different. Their approaches cannot deliver or guarantee that scalable and economically viable solutions can be built on these SoCs.

Question: Now there are more and more Chinese chip companies that are developing self-driving chips, and more Chinese companies will emerge in this field in the next few years. With so many competitors, what are Mobileye’s strategies to sell to more OEM manufacturers, convince more car makers to use Mobileye’s products rather than those of Nvidia or Qualcomm, and how can we enter the market faster and gain more influence?

Answer: I mentioned this before, and I will make it clearer here. There are now many “moonshot” Robotaxi projects. They have a lot of sensors and computing platforms on their cars and drive in limited areas. This is completely different from mass-produced autonomous driving solutions for the general public.

The most important consideration for mass production is cost, followed by geographic scalability, which means the system can operate anywhere in the world and not just in certain fixed areas, as well as an overall scalable design from basic ADAS to consumer-grade AV solutions.

As I mentioned before, expandability means that the verification of the entire system must be very convenient. Our OEM partners will consider verification costs when designing. All of these factors will have a huge impact on the world of suppliers.

Some Robotaxi suppliers can only operate in fixed areas, which is completely different from the scaled consumer-grade AV. These are very important technological developments and market evolutions. To achieve mass production of consumer-grade AV, different skills, economic considerations, and deep understanding of delivering autonomous driving products are required.

Thoughts and plans regarding L4

Question: Regarding cooperation with OEMs, Mobileye has mentioned jointly launching L4 autonomous driving capability with Zeekr, but I see that ADAS on Zeekr’s first product, the Zeekr 001, is still delayed. Is the 2024 timeline for mass-production L4 too aggressive or optimistic?

Answer: It is not aggressive. Our progress to date has been incredible.

We produced all of our hardware products within a year. Remember, these systems operate in multiple locations around the world using the same number of EyeQ5 chips and provide very extensive functionality.

Many OEMs rely on the capabilities of our products, which is the best proof. This computing unit is very active, and the production process has been verified. Strengthening the system and defining it for specific vehicles involves other factors in production. We are moving forward vigorously, with tight, active, and successful arrangements for delivery time and functionality, just like our consumer-grade AV.

In this project, the hardware was designed in advance, and productization was faster than in the year-long SuperVision project we completed with Zeekr. The system itself is similar, and it is already operational. It runs on the same computing configuration of six EyeQ5 chips, and the available functions and their integration into vehicles are progressing quickly. Both Zeekr and we believe strongly in this revolutionary product and are excited to announce it.

Question: Previously, Mobileye mentioned a collaboration with NIO on the development of an autonomous electric vehicle and planned to launch an autonomous ride-hailing service in 2022. Does the collaboration with Zeekr on developing L4 capabilities conflict with Mobileye’s previous collaboration with NIO? Has Mobileye’s original plan for Robotaxi changed? Zeekr has also announced a partnership with Waymo One for an exclusive autonomous vehicle fleet. How does this affect Mobileye?

Answer: The relationship between Robotaxi and consumer-grade autonomous vehicles is not conflicting but complementary, and it is crucial to establish this relationship.

We can only afford to bring consumer-grade autonomous driving functionality today because of the strong economic design constraints that we have in our Robotaxi solution.

We are planning to launch our Robotaxi service, which will be based on the NIO ES8 model powered by our Mobileye Drive autonomous driving kit with eight EyeQ5 chips, in Tel Aviv and Munich in 2022.

In our collaboration with Zeekr on consumer-grade autonomous vehicles, we have migrated that capability to an electronic control unit (ECU) with six EyeQ5 chips. At a deep strategic level, there is close collaboration between us and Zeekr. Selling cars through any other channel is Zeekr’s prerogative, as they can meet other customers’ needs and sell their vehicles; this is the OEM’s role. We are proud and happy to collaborate with Zeekr on launching these new products and facilitating future potential purchases.

Question 2: At this CES, we specifically talked about L4 capabilities. Can you share your views on the development of the L4 market in China?

Answer: Firstly, the fact that we deployed fleets in various locations around the world demonstrates the geographical scalability of our solution, which means we can deploy autonomous driving technology using REM map technology almost anywhere.

This is critical from a data perspective, and it supports our view that only high-precision maps built through crowdsourcing will enable autonomous vehicles to truly operate in diverse locations.

In less than a month, we have deployed fleets in six cities worldwide, which is a strong testament to our capabilities. The content that we call AV maps goes far beyond simple geometric descriptions of roads, shapes, and boundaries.

It carries a large amount of semantic information, which makes it possible to deploy seamlessly and efficiently in new areas. Another core element, the second one mentioned by Professor Shashua in his CES keynote, is efficient computing, which allows us to achieve our goals.

Our SuperVision system, one of our L4 subsystems, is powered by two EyeQ5 chips. At the L4 stage, our Robotaxi is equipped with an autonomous driving system built on eight EyeQ5 chips, which we call the Mobileye Drive system, and it has received orders from numerous customers around the world.

By gradually expanding the operational design domain, our consumer-grade AV will also gradually come to fruition. Consumers will be able to begin transitioning to autonomous driving in more restricted environments such as highways or traffic congestion, and gradually gain a wider range of autonomous driving operation through OTA updates. With our strong partnership with Jidu Auto, we are able to deliver China’s first L4 consumer-grade vehicle and achieve this future vision.

We see that Mobileye’s system design set in 2017 has significantly reduced the cost of autonomous driving products for consumers. This is a significant piece of information, and all data points show that our design is feasible.

Mobileye’s considerations for driving styles in different regions

Question: There are significant differences in road conditions and traffic regulations in different countries. How does Mobileye ensure that enough road test data is collected in China to train software?

Answer: There are two aspects here. One is the data needed for computer vision algorithm training; the other is the data needed to build AV maps through crowdsourcing.

We have a very large database of over 200 PB, fed by multiple global projects, as Professor Shashua mentioned in his speech at CES this year. By working with OEM partners to develop a rich range of products and deploy projects globally, we continue to collect varied video data from all over the world.

The second aspect is the aggregation of AV maps. Our crowdsourcing method is designed from the bottom up to be very streamlined. The amount of data transmitted from the vehicle to the cloud in order to draw the map is very small.

To give you a rough idea, it is comparable to streaming two YouTube videos per year, and for that we collect all the useful information from production vehicles equipped with EyeQ chips. We are talking about several tens to a few hundred megabytes of data collected from a car every year, which is a very economical way of thinking and designing. The return on building this map is enormous.
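To put the per-car figure quoted above in perspective, the short Python sketch below converts an annual upload volume into an average data rate and a fleet-wide total. The 100 MB value is taken from the upper end of the range mentioned in the answer; the fleet size of one million cars is a purely hypothetical number chosen for illustration, not a figure from Mobileye.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 seconds (non-leap year)


def average_upload_rate_bytes_per_s(mb_per_year: float) -> float:
    """Average upload rate in bytes per second for a given yearly volume in MB."""
    return mb_per_year * 1_000_000 / SECONDS_PER_YEAR


def fleet_upload_tb_per_year(mb_per_year: float, fleet_size: int) -> float:
    """Total yearly upload of a fleet in TB (1 TB = 1,000,000 MB)."""
    return mb_per_year * fleet_size / 1_000_000


if __name__ == "__main__":
    per_car_mb = 100          # upper end of "tens or hundreds of megabytes"
    hypothetical_fleet = 1_000_000  # assumption for illustration only

    rate = average_upload_rate_bytes_per_s(per_car_mb)
    total = fleet_upload_tb_per_year(per_car_mb, hypothetical_fleet)
    print(f"Average rate per car: {rate:.2f} bytes/s")
    print(f"Fleet total: {total:.0f} TB/year")
```

Under these assumptions a single car averages only a few bytes per second, which is consistent with the answer’s point that the crowdsourcing channel is very economical.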

Now let’s talk about how machines understand driving. We don’t base this understanding on data training. Instead, we establish a very strict understanding of the geometry of the road, the boundaries of the road, and a very rich set of semantic cues that indicate how the machine should drive in that area.

An excellent example is the common speed in a given area, as well as the relationship between traffic lights and lanes or traffic signs. When deploying in multiple cities around the world, another important piece of semantics we have learned concerns intersections: how to maximize visibility and minimize accident risk when passing through one.

For drivers, passing through a familiar intersection is very simple. If the intersection is unfamiliar, however, they need to learn it. We aggregate this knowledge from human drivers through crowdsourcing, so any road is familiar to us, which is a very important property of our AV maps. Before hitting the road, we have already familiarized ourselves with and understood the roads, and that is what we do with the data. However, this data is not used to train the system’s understanding ability.
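As a rough illustration of what aggregating knowledge from many human drivers could look like, the sketch below derives a typical driving speed per road segment from crowdsourced observations. It is not Mobileye’s REM pipeline: the function name, the `(segment_id, speed_kmh)` input format, the use of a median, and the minimum sample threshold are all assumptions made for this example.

```python
from collections import defaultdict
from statistics import median


def aggregate_common_speed(observations, min_samples=3):
    """Return {segment_id: median speed in km/h} from crowdsourced samples.

    observations: iterable of (segment_id, speed_kmh) pairs (hypothetical format).
    Segments with fewer than min_samples observations are left out, so a
    single unusual drive does not define the map attribute.
    """
    by_segment = defaultdict(list)
    for segment_id, speed_kmh in observations:
        by_segment[segment_id].append(speed_kmh)

    return {
        segment_id: median(speeds)
        for segment_id, speeds in by_segment.items()
        if len(speeds) >= min_samples
    }


if __name__ == "__main__":
    samples = [
        ("segment_a", 48), ("segment_a", 52), ("segment_a", 50),
        ("segment_a", 90),  # one outlier barely shifts the median
        ("segment_b", 30),  # too few samples, excluded
    ]
    print(aggregate_common_speed(samples))
```

A median is used here because it is robust to the occasional outlier, and the resulting value is a map attribute computed by simple aggregation rather than by training a model, which matches the distinction drawn in the answer.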

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.