In 2019, Tesla officially launched NoA, its Navigate on Autopilot feature. Once the user sets a destination on the in-car map, the vehicle can automatically enter and exit ramps and overtake slower cars on supported road sections. Although this is still only a Level 2 function, it was a significant step beyond models offering only lane keeping and adaptive cruise control.

In 2020, NIO was the first of China’s new carmakers to launch a comparable feature, NOP. XPENG followed in January 2021, and Li Auto caught up by the end of that year. Across the global automotive industry, however, only these four new carmakers have shipped this advanced driver assistance function.

But starting from 2022, the situation may change.

Flexing Muscles First

In November 2021, Haomo, which grew out of the forward-looking intelligent driving division of Great Wall Motor’s technology center, officially pushed its NOH intelligent navigation assistance function to WEY Mocha users. This made Haomo the first autonomous driving AI company in China to launch such a function.

Just over a month after NOH reached SOP (start of production), Haomo announced a more capable function built on it: an urban version of NOH (NOH-U, also called City NOH).

This also made Haomo the third company to announce this feature after XPENG’s NGP and City NGP. To show how it performs, the company released a video of a real-road test.

11 km Capability Display

In the single-take video released by Haomo, the test car equipped with NOH-U traveled 11 kilometers, passing through:

- 24 intersections

- 27 pedestrian crossings

- 22 traffic lights

- 5 unprotected pedestrian crossings

- 2 roundabouts

The video lasted a total of 34 minutes, during which there was no manual takeover.

These numbers alone give a sense of how complex and difficult urban navigation-assisted driving is. For readers less familiar with this type of function, though, some explanation helps. Below we analyze how Haomo’s City NOH responds to the different scenarios it encounters on urban roads.

Shortly after setting off, the test car encountered a typical high-difficulty scenario – an unprotected left-turn intersection.

An unprotected left turn is a turn at an intersection with no dedicated green phase for left turns, so the left-turning vehicle and oncoming through traffic move at the same time. This requires precise perception of vehicles in all directions and simultaneous tracking of multiple traffic targets. On the planning and control side, the system also needs to run game-theoretic algorithms grounded in the traffic rules that apply to the scenario. Even for novice drivers this is not a simple intersection, let alone for a machine. Fortunately, traffic at the intersection in the video is light, so the vehicle passes through quickly once it confirms that no other vehicles are nearby.
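To make the decision problem concrete, here is a minimal sketch of a gap-acceptance check for an unprotected left turn. It is not the method used in the test vehicle, which the article does not describe; the class `OncomingVehicle`, the function names, and the `turn_time_s` and `margin_s` values are all illustrative assumptions. The idea is simply that the turn is allowed only if every tracked oncoming vehicle would reach the conflict point later than the time the turn needs, plus a safety margin.

```python
from dataclasses import dataclass


@dataclass
class OncomingVehicle:
    """Hypothetical tracked target approaching the conflict point."""
    distance_m: float  # distance from the vehicle to the conflict point
    speed_mps: float   # speed toward the conflict point (<= 0 means not approaching)


def time_to_conflict(vehicle: OncomingVehicle) -> float:
    """Seconds until the vehicle reaches the conflict point at constant speed."""
    if vehicle.speed_mps <= 0.0:
        return float("inf")  # stopped or moving away: never arrives
    return vehicle.distance_m / vehicle.speed_mps


def can_turn_left(oncoming, turn_time_s=4.0, margin_s=2.0) -> bool:
    """Accept the gap only if all oncoming vehicles arrive after turn + margin."""
    needed_s = turn_time_s + margin_s
    return all(time_to_conflict(v) > needed_s for v in oncoming)


if __name__ == "__main__":
    far = OncomingVehicle(distance_m=100.0, speed_mps=10.0)   # arrives in 10 s
    near = OncomingVehicle(distance_m=30.0, speed_mps=10.0)   # arrives in 3 s
    print(can_turn_left([far]))        # gap is large enough
    print(can_turn_left([far, near]))  # the near vehicle blocks the turn
```

A production planner would also model how other drivers react to the turning vehicle, which is where the game-theoretic element mentioned above comes in; this sketch assumes constant speeds and ignores that interaction.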

One thing worth mentioning in this scene is that the vehicle accurately identifies the traffic light before reaching the intersection and bases its next decision on the signal.

After passing through the intersection, the test vehicle happened to encounter a vehicle making a U-turn from the opposite lane. The test vehicle’s HMI (Human-Machine Interface) shows that it had already identified the slow-moving vehicle ahead on the left. However, since that vehicle had not yet entered the test vehicle’s lane, the test vehicle made no unnecessary avoidance or deceleration maneuvers.

But then the vehicle on the left suddenly accelerated and cut in directly ahead of the test vehicle. The test vehicle braked sharply to avoid it, demonstrating sound recognition of and response to cut-ins.

The vehicle moves into the rightmost lane early to prepare for the upcoming right turn. Judging by the steering wheel movement, the lane change is relatively smooth. The significance may not be obvious, but smooth maneuvers improve the user experience, both in how much passengers trust the machine and in how comfortable the ride feels.

This is the first unprotected right turn of the journey. In everyday driving a right turn is usually simpler than a left turn, but it still poses a challenge for the machine.

First, at the perception level, the vehicle needs to decelerate before turning. At this point, vehicles waiting in the lane to the left block its view, so the test vehicle cannot “see” the zebra crossing ahead on the left. It can only slow to a crawl, identify the other traffic participants on the crossing, predict their trajectories, and confirm that none of them conflicts with its own path before taking the next step.

At the same time, the vehicle cannot watch what is ahead while ignoring what is behind. It also needs to recognize other traffic participants approaching from the right rear, most likely two-wheelers. City NOH’s perception hardware provides 360-degree visual coverage, so it can recognize a vehicle at the right rear.

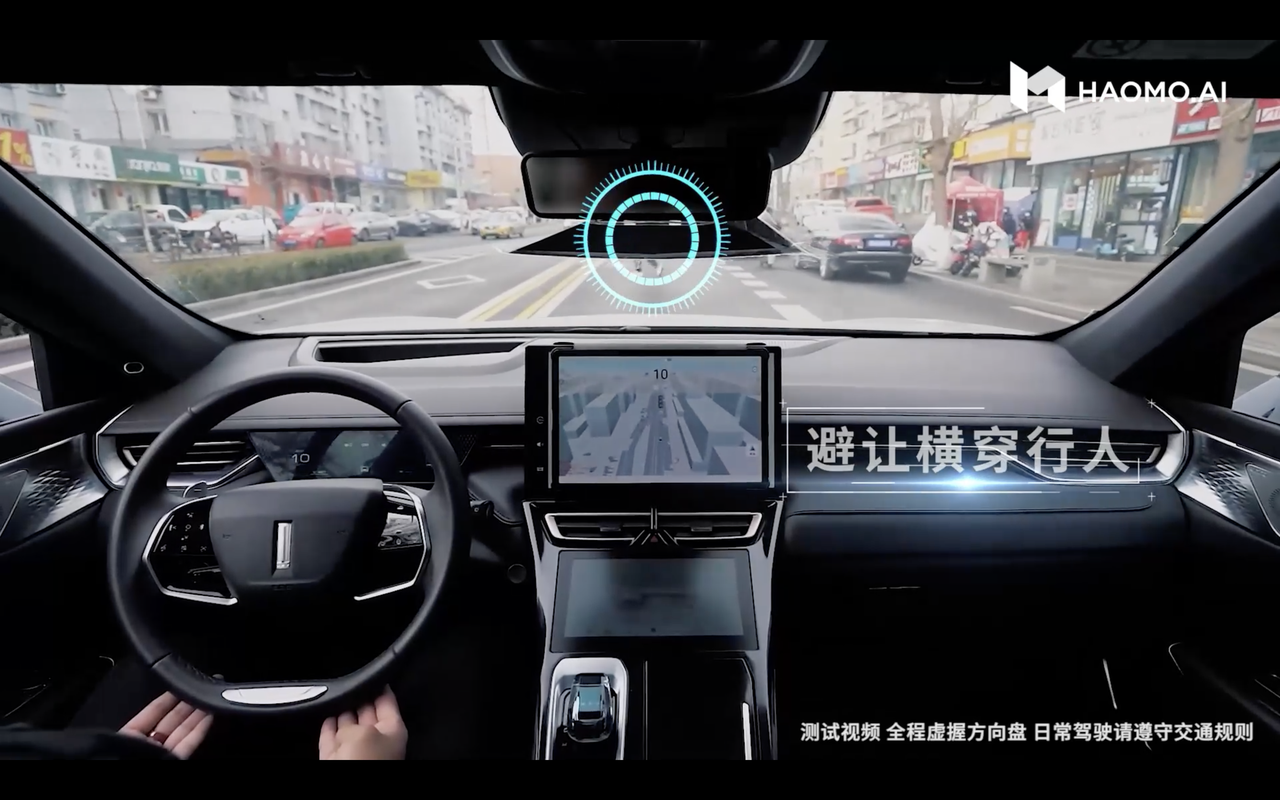

A pedestrian crossed the road in front of the vehicle, violating the traffic regulations. The test vehicle identified this in time, decelerated and yielded to the pedestrian.

Roundabouts are the scenario that most tests the system’s recognition, decision-making, and execution capabilities. Even human drivers need to pay attention to everything around them when passing through one. Moreover, closed roads (such as highways and viaducts) have essentially no roundabouts, so the data and experience gathered on such roads do not apply here.

Before entering the roundabout, the test vehicle changed lanes once to take the optimal route, then decelerated and yielded to a pedestrian on a pedestrian crossing. After it entered the roundabout, a two-wheeler suddenly cut in front of it. The test vehicle decelerated rapidly and avoided the two-wheeler.

Inside the roundabout, the vehicle chose the middle lane and stayed centered in it. At the second exit, the test vehicle turned on the right turn signal, changed lanes, and left the roundabout. Despite encountering several challenging cases in a row, Haomo’s City NOH handled the whole sequence very smoothly.

The vehicle passed through the roundabout again. This time, the test vehicle used the same strategy, which was to choose the middle lane to enter the roundabout and also maintained the middle lane in the roundabout. This time, the road condition was relatively simple compared to the previous roundabout, without the interference of other traffic participants, and the test vehicle’s passing speed was relatively fast.

Encountering another unprotected left turn, the test vehicle identified that there was a small car turning right in the opposite lane, but its trajectory had nothing to do with the test vehicle, so the passage was smooth again.

At this point, there happened to be a small car making a U-turn in the opposite lane. The test vehicle successfully identified it and decelerated. After the car drove to the right lane, the test vehicle accelerated and drove away.

Test Vehicle Successfully Identifies and Enters the Waiting Area Before a Left Turn

In this scenario, the system must be able to recognize lane markers and traffic signals and make decisions based on the environment. However, this is not an unprotected intersection, so passing through it is not particularly difficult.

Vehicle on Right Suddenly Accelerates in Front, System Immediately Slows Down to Yield

In urban scenes, “close-range lane changes” are relatively common, and this requires the system to accurately identify the surrounding environment and make timely decisions.

Open intersections also frequently appear in cities, with the most common being T-shaped intersections. Sometimes, this type of intersection does not have traffic lights, and human drivers usually choose to pass through them slowly. This is also the case for the test vehicle, which slows down and passes through the intersection slowly.

After a Right Turn in an Unmanned Aisle, the Test Is Completed With No Takeover by the Safety Officer

Viewers will draw their own conclusions from a test like this, but judged on difficulty alone, sustaining assisted driving on urban roads for this long requires a particularly deep accumulation of technical expertise.

This is because urban road conditions are completely different from closed roads.

The difficulty level increases exponentially, and considerable demands are placed on the vehicle’s perception and computing capabilities. So how did Haomo develop this technology so quickly? This brings us to Haomo AI DAY, where the company officially unveiled its data intelligence system, MANA (Xuehu).

MANA: The Triad of the Intelligent System

We all know that data is the foundation of autonomous driving. Haomo’s MANA is precisely such a data intelligence system, comprising perception intelligence, cognitive intelligence, and automatic verification.

On a closed road, the vehicle needs to identify lane lines and its immediate surroundings, but information such as speed limits, road curvature, and gradient is provided by high-precision maps. High-precision maps give the vehicle a “God’s-eye view” in highway navigation-assisted driving by supplying prior information. Many vision-only advocates call this “cheating” or using “plug-ins.”

Of course, Tesla is an exception. Among the models that offer navigation-assisted driving, only Tesla’s do not use high-precision maps. In terms of experience, vision-only solutions and systems supported by high-precision maps each have their own advantages and disadvantages.

On closed road sections, vehicles can rely heavily on high-precision maps, but relying on them on complex urban roads is unrealistic. First, maps cannot be collected and updated efficiently enough to keep up; second, the complexity and uncertainty of the real world are too high.

Therefore, establishing a powerful database and improving single-car intelligence are the key to solving these problems.

Perception Intelligence

For autonomous driving, the ability to perceive is very important, which is the foundation and premise of all work.

The Haomo City NOH test vehicle uses a sensor suite of cameras and LiDAR, with the cameras providing 360-degree visual coverage. For the camera images, City NOH uses a shared backbone network to compute base features and then splits into two branches. One branch performs multi-layer, feature-based target extraction and tracking, recognizing lane lines, stop lines, road edges, vehicles, and traffic signals. The other branch performs scene recognition.

For the point cloud data acquired by the LiDAR, City NOH uses the PointPillars algorithm, which is known for fast computation. It first reduces the point cloud to a pseudo-2D representation and then runs a feature extraction network to perform multitask 3D box detection and obstacle detection.
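The “pseudo-2D” step can be illustrated with a short sketch. The code below is a simplified illustration, not Haomo’s implementation: it groups LiDAR points into vertical columns (“pillars”) on an x-y grid and summarizes each pillar with two hand-picked values, point count and maximum height. The real PointPillars method learns per-pillar features with a small neural network instead; the function names, grid ranges, and cell size here are assumptions chosen for the example.

```python
from collections import defaultdict


def pillarize(points, cell_size=0.5, x_range=(0.0, 40.0), y_range=(-20.0, 20.0)):
    """Group (x, y, z) points into pillars keyed by (row, col) grid index."""
    pillars = defaultdict(list)
    for x, y, z in points:
        # Drop points outside the region covered by the grid.
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue
        col = int((x - x_range[0]) / cell_size)
        row = int((y - y_range[0]) / cell_size)
        pillars[(row, col)].append((x, y, z))
    return pillars


def pseudo_image(pillars, n_rows, n_cols):
    """Build two 2D channels: points per pillar and max height per pillar."""
    count = [[0] * n_cols for _ in range(n_rows)]
    max_z = [[0.0] * n_cols for _ in range(n_rows)]  # empty pillars stay at 0.0
    for (row, col), pts in pillars.items():
        count[row][col] = len(pts)
        max_z[row][col] = max(p[2] for p in pts)
    return count, max_z


if __name__ == "__main__":
    cloud = [(1.0, 0.0, 0.2), (1.1, 0.1, 1.5), (100.0, 0.0, 0.0)]
    pillars = pillarize(cloud)
    count, max_z = pseudo_image(pillars, n_rows=80, n_cols=80)
    print(sorted(pillars))               # grid cells that contain points
    print(count[40][2], max_z[40][2])    # summary of the occupied pillar
```

Because the result is a regular 2D grid, it can be processed with ordinary 2D convolutions, which is the main reason the approach is fast.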

In practice, a sensor may sometimes be obstructed and unable to work effectively. Haomo therefore fuses the two data sources, aiming to represent the real world in a tensor-space map.

Perception is objective, and its result is binary: right or wrong. Sensors and mapping networks only need to reflect the objective world, and the closer to reality, the better. On urban roads, where all kinds of situations arise, the only solution is therefore to eliminate corner cases with a huge amount of data.

Perception has an objective standard; cognition has to establish its own.

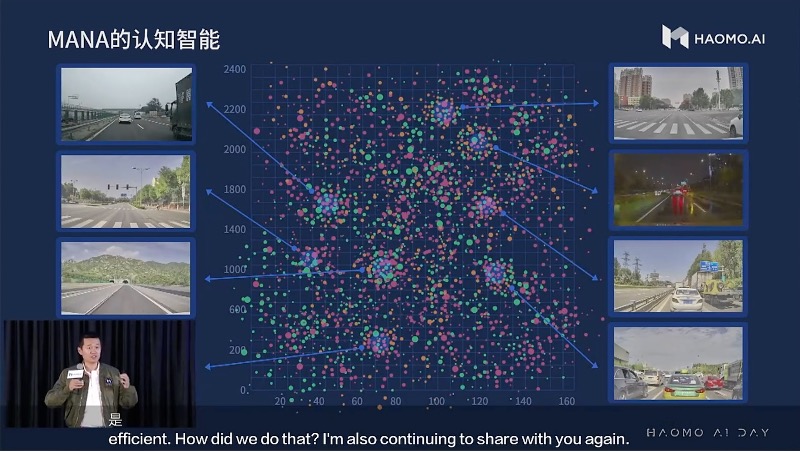

Cognitive Intelligence

Haomo works from the macro level down to the micro level, continuously breaking real-world problems down into the many details that affect driving behavior.

From a macro perspective, several factors characterize a driving scenario, including weather, road structure, traffic participants, traffic density, relative position, ego vehicle route, collision risk, and time to collision. Haomo mines and encodes these attributes from existing data, then clusters and classifies them to find more comfortable and efficient solutions.

At the micro level, Haomo focuses on refining and analyzing every detail of a driving scenario. For example, it divides a stop-and-go maneuver into four phases: steady-state following, deceleration, stopping, and starting. In each phase, the vehicle system’s response must be reasonable and comfortable.
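As an illustration of what splitting a maneuver into phases can look like, the sketch below labels each sample of a speed and acceleration trace with one of the four phases named above. The article does not say how the phases are actually detected, so the thresholds (`stop_speed`, `accel_eps`) and the rule-based logic are assumptions made for the example.

```python
from enum import Enum


class Phase(Enum):
    FOLLOWING = "steady-state following"
    DECELERATING = "deceleration"
    STOPPED = "stopping"
    STARTING = "starting"


def classify_phase(speed_mps, accel_mps2, prev, stop_speed=0.1, accel_eps=0.3):
    """Assign a phase from ego speed, acceleration, and the previous phase."""
    if speed_mps <= stop_speed and accel_mps2 <= accel_eps:
        return Phase.STOPPED  # essentially stationary and not pulling away
    if accel_mps2 < -accel_eps:
        return Phase.DECELERATING
    if accel_mps2 > accel_eps and prev in (Phase.STOPPED, Phase.STARTING):
        return Phase.STARTING  # accelerating away from a standstill
    return Phase.FOLLOWING


def segment(samples):
    """Label a sequence of (speed, acceleration) samples with phases."""
    phase = Phase.FOLLOWING
    labels = []
    for speed, accel in samples:
        phase = classify_phase(speed, accel, phase)
        labels.append(phase)
    return labels


if __name__ == "__main__":
    trace = [(10.0, 0.0), (6.0, -2.0), (0.0, 0.0), (1.0, 1.5), (3.0, 1.5), (10.0, 0.0)]
    for sample, phase in zip(trace, segment(trace)):
        print(sample, phase.value)
```

Once a trace is segmented this way, each phase can be evaluated separately, for example by checking deceleration smoothness in one phase and launch delay in another.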

Haomo’s driving research uses an end-to-end imitation learning approach that digitizes the scenario and derives specific vehicle actions using prior driving experience as guidance. Imitation learning requires larger data samples, because the rules are learned from the data.

Annotation and Validation

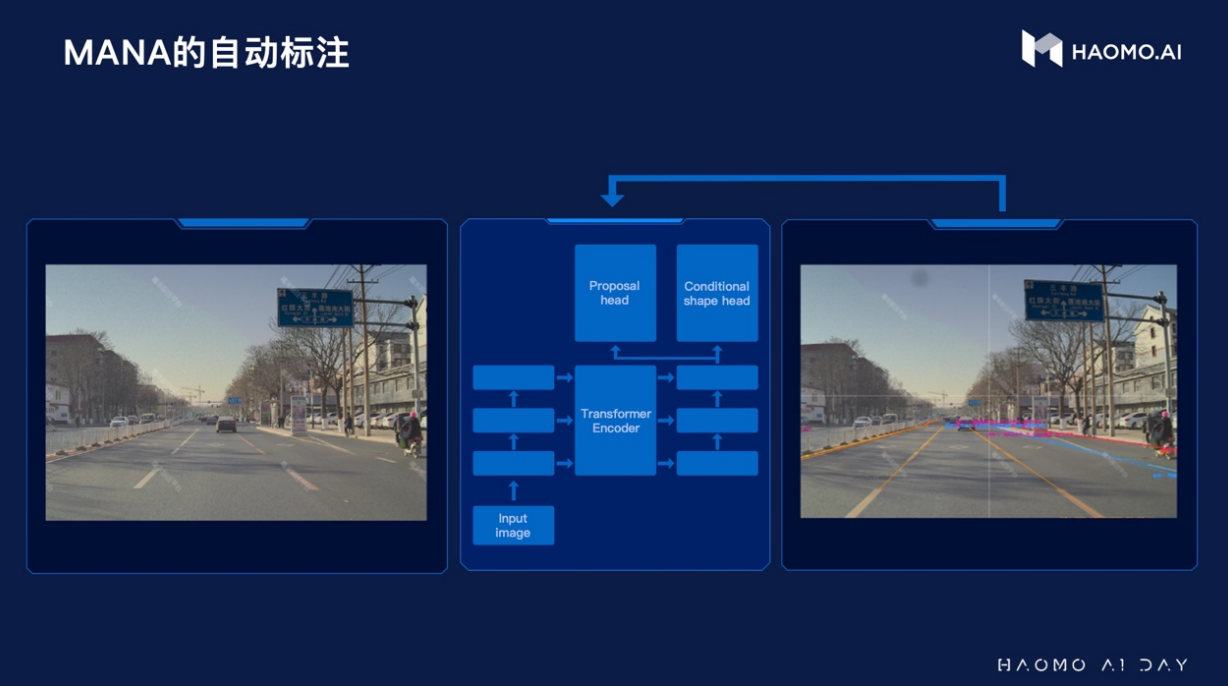

As the volume of data grows, annotation efficiency becomes an issue. Haomo employs an unsupervised automatic annotation method, which performs reasonably well but still has room for improvement.

Validation also has many aspects, such as generalization verification for perception.

Haomo first builds a 1:1 simulation of the scene from the collected footage, then changes environmental conditions such as lighting, rain, and fog. Finally, it verifies the perception results on the images generated under these different conditions.
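The verification loop described here can be sketched in a few lines. Everything in the example is a stand-in: the “image” is a small grid of brightness values, `toy_detector` is a hypothetical placeholder for a real perception model, and the lighting and fog functions are crude approximations of re-rendering a simulated scene. The point is only the structure: run perception on the original scene, run it again on each altered version, and measure how much the results agree.

```python
def adjust_lighting(image, gain):
    """Scale pixel brightness, clipping at 255."""
    return [[min(255.0, p * gain) for p in row] for row in image]


def add_fog(image, density, fog_level=200.0):
    """Blend every pixel toward a uniform fog brightness."""
    return [[(1.0 - density) * p + density * fog_level for p in row] for row in image]


def toy_detector(image, threshold=128.0):
    """Placeholder perception model: returns coordinates of bright pixels."""
    return {(r, c) for r, row in enumerate(image) for c, p in enumerate(row) if p > threshold}


def consistency(reference, candidate):
    """Intersection over union of two detection sets."""
    if not reference and not candidate:
        return 1.0
    return len(reference & candidate) / len(reference | candidate)


def verify(image, variants):
    """Compare detections on each altered image with the baseline detections."""
    baseline = toy_detector(image)
    return {name: consistency(baseline, toy_detector(fn(image)))
            for name, fn in variants.items()}


if __name__ == "__main__":
    scene = [[0.0, 200.0], [200.0, 0.0]]
    report = verify(scene, {
        "dusk": lambda img: adjust_lighting(img, 0.9),
        "night": lambda img: adjust_lighting(img, 0.5),
        "fog": lambda img: add_fog(img, 0.5),
    })
    for name, score in report.items():
        print(name, score)
```

A low score for one condition flags where the perception model generalizes poorly and where more data for that condition would be useful.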

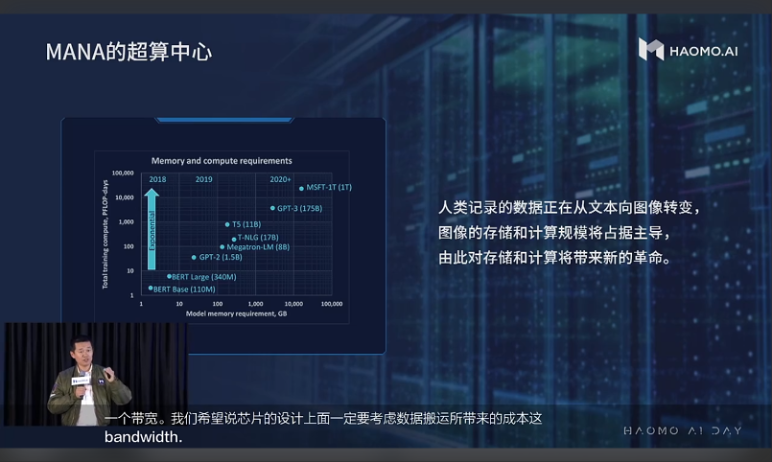

The types of data involved are also changing, which demands not only more computing power but also more efficient data storage and transfer. Haomo therefore announced at AI DAY that it is preparing to build its own supercomputing center.

Also at AI DAY, Haomo and Qualcomm jointly launched the ICU 3.0 domain controller, whose main chips are from Qualcomm (8540+9000) and deliver 360 TOPS of computing power. The safety redundancy chip is an Infineon TC397, which can provide degraded L1/L2 control as a fallback.

Backed by Great Wall, Facing the Entire Industry

To close the article, here is some further background on Haomo and its future development direction.

Haomo was incubated by Great Wall Motors, and its predecessor was Great Wall’s forward-looking intelligent driving department. Since the spin-off, the company has completed several rounds of financing, totaling tens of billions of RMB.

Great Wall Motors is not only a major shareholder of Haomo but also a major customer. Thanks to Great Wall’s large delivery volume, Haomo’s systems can go into production quickly. The Haomo NOH intelligent navigation-assisted driving system reached SOP only recently, and Haomo’s driver assistance systems have been installed on many models, such as the Haval M6, Tank 300 City Edition, Haval F7, WEY Latte, and WEY Macchiato.

It is estimated that Haomo NOH will be standard equipment on 34 Great Wall models in 2022, accounting for about 80% of the overall market. Over the next three years, the number of Great Wall passenger cars equipped with Haomo’s driver assistance systems is expected to exceed 1 million.

Unlike the new carmakers, Haomo has the backing of Great Wall Motors while remaining open to the entire industry, which lets it act as a supplier of safe, reliable factory-installed ADAS to other automakers. Its goal of 1 million installations is therefore not a pipe dream, and progress may exceed our expectations.

A tall tree grows from a tiny sprout. With its data-driven technology, Haomo aims to attract more automakers into its circle of partners. Will it finally become a “tall tree”?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.