The 19th Guangzhou International Automobile Exhibition concluded on November 28, 2021, as the final major show of the year for China’s domestic automobile market. The strong technology focus on site conveyed the idea of technology changing everyday life. According to official data, 54 vehicles made their global debut at the exhibition, 47 of them from domestic brands. Chinese automakers are drawing growing attention, and their brand effect has begun to emerge.

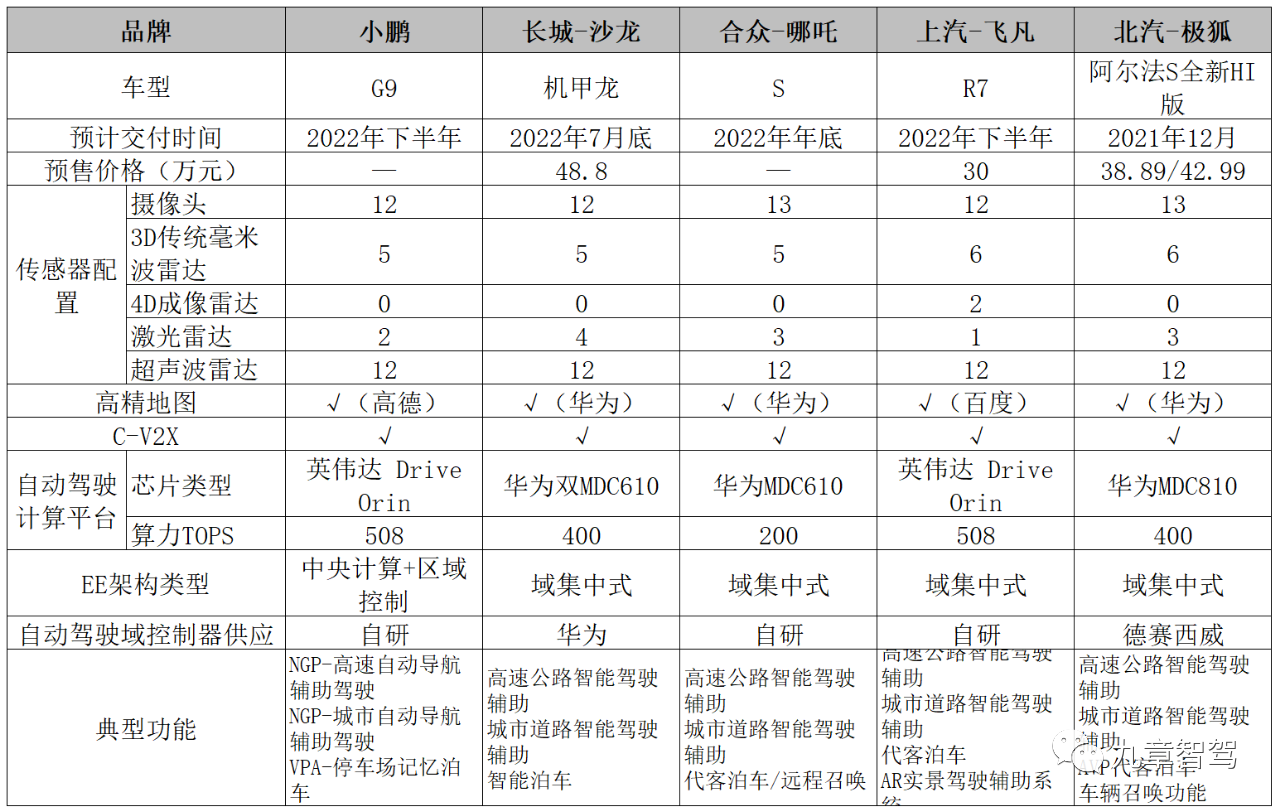

“Nine Chapters Intelligent Driving” lists five popular self-driving models from this exhibition: the XPeng G9, Salon Mecha Dragon, NETA S, Rising Auto R7, and the new ARCFOX Alpha S HI version.

Table: Self-driving configuration of the five models.

Table: LIDAR suppliers list.

XPeng G9 (planned delivery time: 2H 2022)

Self-driving perception system

Sensor solution: LIDAR *2 + mmWave radar *5 + 8 ADS cameras + 4 surround-view cameras + 12 ultrasonic sensors.

- LIDAR performance parameters (RoboSense RS-LiDAR-M1):

- Type: MEMS

- Detection distance: 150 meters @10% reflectivity

- FOV (H/V): 120°*25°

- Angular resolution: 0.2°*0.2°

- Laser source: 905 nm

- LIDAR location: one under each headlight

- Working temperature: -40°C~+65°C

- Arrangement of the 8 ADS cameras:

- Front cameras *3 (one 8-megapixel binocular camera, counted as two, + 1 long-focus monocular camera): front windshield

- Side cameras *4 (2.9 megapixels): side front view *2 (under the exterior rearview mirror bases) + side rear view *2 (fenders)

- Rear camera *1: Under the taillight
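As a rough illustration of what the LIDAR figures above imply, the sketch below estimates how many distinct beam directions fit in one frame by dividing each field-of-view axis by the angular resolution. It assumes a uniform scan grid, which the source does not state, and the function name `grid_samples` is hypothetical.

```python
def grid_samples(fov_h_deg, fov_v_deg, res_h_deg, res_v_deg):
    """Estimate (columns, rows, total) beam directions per frame.

    Assumes a uniform angular grid, which is a simplification and not
    a published property of any specific sensor.
    """
    columns = round(fov_h_deg / res_h_deg)
    rows = round(fov_v_deg / res_v_deg)
    return columns, rows, columns * rows


# RS-LiDAR-M1 figures quoted above: 120° x 25° FOV, 0.2° x 0.2° resolution
m1_columns, m1_rows, m1_total = grid_samples(120, 25, 0.2, 0.2)
print(m1_columns, m1_rows, m1_total)
```

Under this assumption the quoted figures work out to a 600 x 125 grid, or 75,000 directions per frame.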

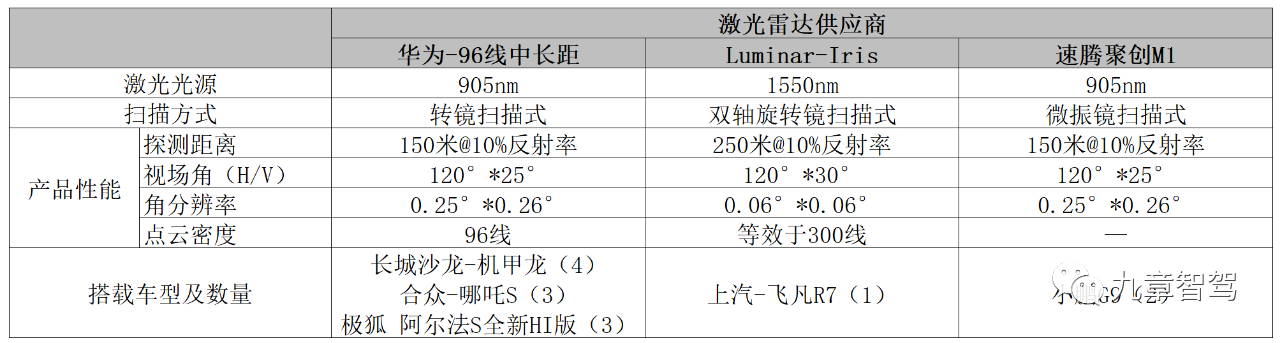

X-EEA3.0: Central computing + regional control

- High-fusion hardware architecture: “Central supercomputing + regional control”

- Hierarchical software platform architecture: system software platform, basic software platform, intelligent application platform

- Imperceptible OTA: a vehicle upgrade takes under 30 minutes, and the car can keep driving during the upgrade

- Self-diagnosis function: real-time detection of simple faults, cloud simulation prediction for complex faults

Automatic Driving Function and Application Scenario

XPeng claims that its self-developed XPILOT 4.0 system offers full-scenario intelligent driving, including city NGP, highway NGP, and VPA memory parking.

Great Wall Salon – Mecha Dragon (scheduled delivery: end of July 2022)

Automatic Driving Perception System

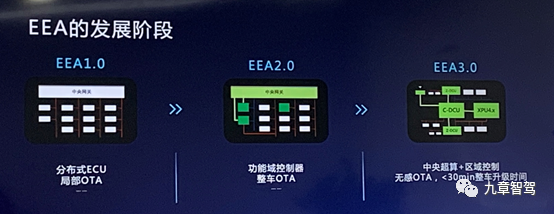

Sensor solution: 4 x LiDAR + 5 x mmWave radar + 7 x ADS camera + 4 x surround-view camera + 12 x ultrasonic radar

(1) Performance parameters of LiDAR (Huawei 96-line mid-to-long-range hybrid solid-state LiDAR)

- Type: Mirror-scanning hybrid solid-state LiDAR

- Detection distance: 150 meters @10% reflectivity

- FOV (H/V): 120°*25°

- Angular resolution: 0.25°*0.26°

- Laser source: 905 nm

- Effective detection distance for vehicles: over 200 m

- Effective detection distance for pedestrians: over 150 m

- Intelligent cleaning and automatic heating

LiDAR layout: 1 on each side of the front bumper, 1 below the front license plate, 1 directly below the rear bumper

(2) ADS camera parameters

- Camera resolution: 8 MP

- High dynamic range: HDR>130

- Detection distance for small targets such as traffic cones: >180 m

- Traffic light detection distance: 180 m

The entire perception solution comprises 38 sensing elements. In addition to those listed above, it includes DVR camera * 2 + DMS camera * 1 + OMS camera * 1 + a high-precision IMU.

Automatic Driving System Computing Platform: Huawei Dual MDC610 Solution

- Chip type: Huawei MDC610 *2

- NPU computing power: 400 TOPS

- CPU performance: 440 K DMIPS

- Interface capability: 16 cameras + 12 CANs + 8 automotive Ethernet (Auto-Eth) ports

- Dimensions: 300 × 200 × 85 mm

- Safety level: ASIL D

- Architecture: self-developed DaVinci architecture

Automatic Driving Functions and Application Scenarios

The Captain-Pilot intelligent driving system consists of three parts: intelligent navigation, intelligent parking, and intelligent connectivity. Some functions will be enabled gradually as regulations and supporting infrastructure allow.

(1) Intelligent Navigation: In urban scenarios, it can recognize and handle roundabout exits, special vehicles such as police cars and fire engines, small animals, hand carts, and similar objects, and it supports automatic stop-and-start at traffic lights, automatic lane changes, and overtaking. In highway scenarios, it can pass through toll stations automatically, identify and avoid obstacles and construction zones, and drive to a safe area automatically after a malfunction.

(2) Intelligent Parking: PAVP autonomous cross-floor parking + HAVP memory parking for fixed parking spaces, remote-control parking + APA fusion automatic parking.

(3) Intelligent Connectivity: Based on 5G-V2X, it supports cooperative yielding to special vehicles and cooperative warnings for abnormal vehicles ahead, blind-spot lane changes, vulnerable road users, and dangerous road conditions.

Hozon – NETA S (scheduled delivery: end of 2022)

Automatic Driving Perception System

Sensor solution: 3 LiDARs + 5 mmWave radars + 13 cameras + 12 ultrasonic radars.

LiDAR performance parameters (Huawei 96-line mid-to-long-range hybrid solid-state LiDAR)

- Type: Rotating-mirror scanning hybrid solid-state LiDAR

- Detection distance: 150 meters @10% reflectivity

- FOV (H/V): 120° * 25°

- Angular resolution: 0.25° * 0.26°

- Laser source: 905 nm

Automatic Driving System Computing Platform: Huawei MDC Computing Platform

- Chip type: Huawei MDC610

- Performance: 200 TOPS

- Interface capability: 16 cameras + 12 CANs + 8 automotive Ethernet (Auto-Eth) ports

- Dimensions: 300 × 200 × 85 mm

- Safety level: ASIL D

Automatic Driving Redundancy Design

- Perception redundancy: 5R11V2-6L coverage with no corner blind spots, assisted by high-precision maps

- Actuator redundancy: dual-backup battery, dual-backup brake, dual-backup steering

- Controller redundancy: dual controllers, dual-chip processing

Autonomous Driving Function and Application Scenarios

- Main functions: navigation-assisted driving on highways and urban roads, plus partially autonomous functions such as parking and remote summoning

- Application scenarios: highway, low-speed, and urban scenarios

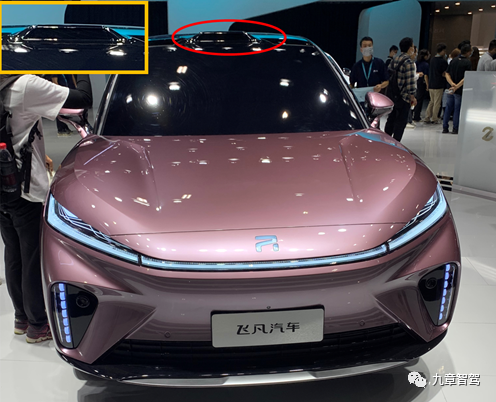

SAIC – Rising Auto R7 (planned delivery time: 2nd half of 2022)

Autonomous Driving Perception System

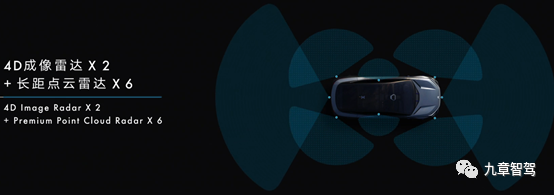

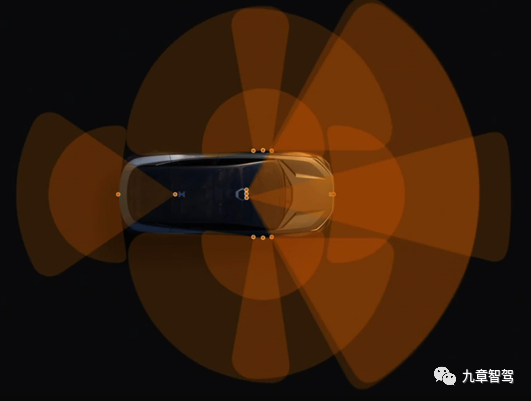

Sensor solution: LIDAR *1 + 4D imaging millimeter-wave radar *2 + traditional 3D millimeter-wave radar *6 + ADS camera *7 + surround-view camera *4 + ultrasonic radar *12

(1) LIDAR performance parameters (Luminar Iris)

- Type: dual-axis rotating mirror scanning hybrid solid-state LIDAR

- Maximum detection distance: 500 meters

- Detection distance: 250 meters @10% reflectivity

- Accuracy: 1 cm

- FOV (H/V): 120°*30°

- Angular resolution: 0.06°*0.06°

- Laser source: 1550 nm

- Point cloud density: equivalent to 300 lines
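To make the angular resolution figures more concrete, the sketch below converts them into the approximate gap between adjacent beams at each sensor's quoted 10%-reflectivity range. It uses the small-angle arc-length approximation, and the helper name `beam_spacing_m` is hypothetical; real scan patterns may be non-uniform.

```python
import math


def beam_spacing_m(range_m, angular_res_deg):
    """Arc length between adjacent beams at a given range (small-angle approximation)."""
    return range_m * math.radians(angular_res_deg)


# Figures quoted in this article
iris_spacing = beam_spacing_m(250, 0.06)    # Luminar Iris, 250 m @10% reflectivity
huawei_spacing = beam_spacing_m(150, 0.25)  # Huawei 96-line, horizontal resolution, 150 m

print(f"Iris:   {iris_spacing:.2f} m between adjacent beams at 250 m")
print(f"Huawei: {huawei_spacing:.2f} m between adjacent beams at 150 m")
```

On these numbers the finer 0.06° grid keeps adjacent beams roughly a quarter of a meter apart at 250 m, while a 0.25° grid already spreads them to about two thirds of a meter at 150 m.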

(2) 4D imaging radar (Continental PREMIUM); location: one at the front and one at the rear of the car

- Detection distance: 350 m

- Frequency band: 77 GHz

- FOV (horizontal detection angle): ±60°

- Operating mode: FMCW (Frequency Modulated Continuous Wave)

- Number of transmission/reception channels: 192 channels, providing thousands of data points per measurement cycle

- Able to accurately identify small and static objects: according to tests, vehicles using this technology can detect a can of cola as far away as 140 meters

- Road boundary detection and free space measurement
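For reference, the 77 GHz figure quoted above can be turned into a wavelength, which is where the term "millimeter-wave" comes from. This is a minimal sketch using the free-space relation wavelength = c / f; the function name is hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact, by definition of the metre


def wavelength_mm(frequency_hz):
    """Free-space wavelength in millimeters for a given frequency."""
    return SPEED_OF_LIGHT_M_S / frequency_hz * 1000


radar_wavelength = wavelength_mm(77e9)
print(f"{radar_wavelength:.2f} mm")
```

The result is a little under 4 mm.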

(3) Distribution of the 7 ADS cameras

Based on the vehicles displayed on site and the camera layout shown by R-TECH, the distribution is as follows:

- Front camera *2 (1 binocular + 1 telephoto monocular): front windshield

- Side cameras *4: side front view *2 (under the exterior rearview mirrors) + side rear view *2 (fenders)

- Rear camera *1: below the taillight

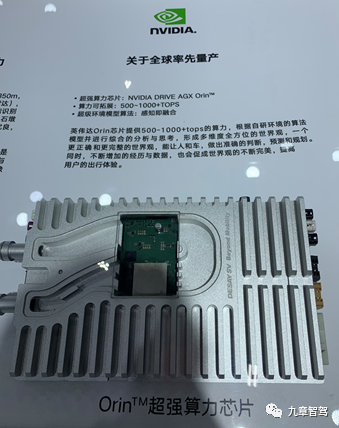

Automated driving system computing platform: NVIDIA Drive Orin

- High-performance computing chip: NVIDIA DRIVE AGX Orin™

- Computing power: 508 TOPS

- Expandable computing power: 500~1000 TOPS

- Super environment model algorithm: “Perception is fusion”

- Supported operating systems: Linux, QNX, and Android

Automated driving functions and application scenarios

1) Automated driving functions:

The R7 carries PP-CEM, a full-stack advanced intelligent driving solution developed in-house on the R-TECH technology platform, with two defining features: a six-fold fusion perception system and a “super brain”. According to official information, this solution targets all-weather, all-scenario automated driving.

2) Application scenarios:

Given the currently known configuration, which fuses multiple heterogeneous sensors such as LiDAR and 4D imaging radar and adds the high computing power of the NVIDIA Drive Orin platform, the R7 is expected to cover three major scenarios: highways, urban areas, and valet parking.

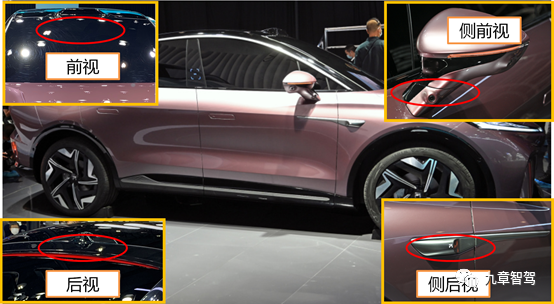

BAIC – ARCFOX α-S HI version (planned delivery time: December 2022)

Automated driving perception system

Sensor solution: LiDAR * 3 + millimeter-wave radar * 6 + ADS camera * 9 + surround-view camera * 4 + ultrasonic radar * 12 + in-car monitoring camera * 1

(1) LiDAR performance parameters (Huawei 96-line mid/long-range hybrid solid-state LiDAR)

- Type: Mirror-scanning hybrid solid-state LiDAR

- Detection distance: 150 m @10% reflectivity

- FOV (H/V): 120° * 25°

- Angular resolution: 0.25° * 0.26°

- Laser source: 905 nm

- Effective detection distance for vehicles: >200 m

- Effective detection distance for pedestrians: >150 m

(2) Layout of the 9 ADS cameras

- Front camera *4 (1 stereo camera + 1 telephoto camera + 1 wide-angle camera): on the front windshield

- Side camera *4: 2 side-front view cameras (under the side-view mirrors) + 2 side-rear view cameras (on the fenders)

- Rear camera *1: at the center of the rear wing

Self-driving system computing platform: Huawei MDC computing platform

- Chip type: Huawei MDC810

- Supports two levels of computing power: 400 TOPS/800 TOPS

- High integration: integrates combined inertial navigation and the ultrasonic radar ECU

- Flexible design: supports detachable liquid cooling and plug-in SSD

- External size: 300 × 171 × 35 mm

- Sensor configuration: supports up to 16 cameras + 8 lidars + 6 millimeter-wave radars + 12 ultrasonic radars

- High reliability: EMC Class5, IP67 dustproof and waterproof, supports -40°C~85°C environment temperature

Redundancy Design for Self-driving System

The IMC architecture of a fully redundant electric platform has four characteristics: super expansion, super intelligence, super interaction, and super evolution.

Redundancy design: steering, power, communication, braking, perception sensors

If the main system fails, it will immediately switch to the redundant system, for example:

a. Redundant braking: When the main braking system fails, the vehicle switches to the backup braking system within milliseconds to provide emergency braking.

b. Redundant power supply: When the main battery fails to provide power normally, the system will automatically switch to the backup battery, and provide emergency power supply for up to three minutes, giving the driver enough time to pull over, greatly reducing the probability of safety accidents.
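The switch-over behavior described above can be pictured with a small sketch. This is only an illustration of the primary/backup idea under simplifying assumptions; the class `RedundantPair`, the helper `backup_seconds_left`, and the health flags are hypothetical and do not represent the vehicle's actual control logic.

```python
from dataclasses import dataclass


@dataclass
class RedundantPair:
    """A primary unit with one backup; names are illustrative only."""
    name: str
    primary_ok: bool = True
    backup_ok: bool = True

    def active_unit(self):
        """Return which unit should serve requests right now."""
        if self.primary_ok:
            return "primary"
        if self.backup_ok:
            return "backup"
        return "none"


# "Up to three minutes" of emergency supply, per the text above
BACKUP_POWER_BUDGET_S = 3 * 60


def backup_seconds_left(seconds_on_backup):
    """Remaining emergency power time, clamped at zero."""
    return max(0, BACKUP_POWER_BUDGET_S - seconds_on_backup)


brakes = RedundantPair("braking")
brakes.primary_ok = False       # simulate a main braking failure
print(brakes.active_unit())     # the backup takes over

print(backup_seconds_left(45))  # seconds left for the driver to pull over
```

A real system would also monitor the backup continuously and alert the driver as soon as the primary fails, which this sketch leaves out.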

Autonomous driving features and applications

Intelligent driving assistance on highways, intelligent driving assistance on city roads, AVP valet parking, vehicle summoning function

Summary:

(1) The combination of cameras, millimeter-wave radar, and lidar has become the mainstream solution for self-driving vehicles. Judging from the perception schemes of the five vehicles, 5R12V is already the baseline configuration, and lidar has become essential perception equipment for advanced self-driving vehicles. As 4D imaging radar technology matures, there may be more choices for perception fusion solutions in the future.

(2) Looking at the lidar equipment of the five vehicles above, every model except the Rising Auto R7, which carries a single 1550 nm lidar, is equipped with at least two lidars to support higher levels of automated driving. In addition, 4D imaging radar has a detection range of 350 meters, which compensates for the shortcomings of traditional 3D millimeter-wave radar and, combined with lidar, can further improve driving safety.

(3) Localization and high computing power are becoming the trend for intelligent vehicles. At this year’s auto show, domestic brands made an impressive showing in the number of new cars and in the level of their software and hardware. Huawei’s self-developed MDC platform has been adopted in multiple domestic models, and its 200/400 TOPS of computing power is comparable to the 254 TOPS of the NVIDIA DRIVE Orin.

(4) Highway, urban, and parking scenarios have become the three main directions for deploying autonomous driving functions. In addition, in terms of intelligent interaction, the emergence of AR interaction will further improve the interactive experience of intelligent driving.

(5) Central computing + regional control is gradually reaching production at domestic automakers. In addition to XPeng’s newly announced EE architecture, we have learned that Great Wall Salon is developing the GEEP4.0 electronic and electrical architecture, also based on central computing and regional control, which is planned for implementation in 2022. The “Mountain and Sea Platform” (Shanhai Platform) released by NETA is also planned to deliver a new electronic and electrical architecture of “central computing + cloud supercomputing” in 2025.
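As a quick cross-check of the lidar observations in the summary, the sketch below tallies the lidar counts and laser wavelengths listed in each vehicle section. The figures are copied from this article; the dictionary layout and the shortened model labels are only illustrative.

```python
# (lidar count, laser wavelength in nm), copied from the vehicle sections above
lidar_setup = {
    "XPeng G9": (2, 905),
    "Great Wall Salon": (4, 905),
    "NETA S": (3, 905),
    "SAIC R7": (1, 1550),
    "ARCFOX α-S HI": (3, 905),
}

single_lidar = [name for name, (count, _) in lidar_setup.items() if count == 1]
uses_1550nm = [name for name, (_, nm) in lidar_setup.items() if nm == 1550]
total_lidars = sum(count for count, _ in lidar_setup.values())

print("Single-lidar models:", single_lidar)
print("1550 nm models:", uses_1550nm)
print("Lidars across all five models:", total_lidars)
```

The R7 is the only model that appears in both lists, and the five vehicles carry 13 lidars between them.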

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.