I just saw the mass-production models equipped with LIDAR at the Guangzhou Auto Show and couldn’t help but marvel at how quickly major domestic automakers are moving in R&D. With 2022 billed as the “year of LIDAR adoption,” it is not only new automakers such as NIO, XPeng, and Li Auto (collectively nicknamed “Wei Xiao Li”) that are pushing ahead; traditional automakers like GAC Aion and Changan have also announced mass-production models with LIDAR.

The addition of LIDAR improves the perception accuracy and environmental adaptability of intelligent vehicles. When faced with challenging scenarios such as changing light conditions, passing through narrow lanes, or encountering irregular or low obstacles, LIDAR can play a huge role in enhancing the intelligent driving experience and ensuring driving safety.

With so many models equipped with LIDAR, their layout and technical highlights differ significantly. How can we extract the critical indicators from the overwhelming technical specifications?

How to choose LIDAR?

First-generation LIDAR and its features

When it comes to LIDAR, many people will probably think of Robotaxi companies, whose self-driving cars carry a LIDAR on the roof, as shown below:

The left-hand LIDAR in the above image is a 64-line LIDAR produced by Velodyne. It scans by physically rotating inside its outer housing, which makes it a mechanical rotating LIDAR like the one in the diagram below. Because the rotating parts wear significantly, the accuracy of a mechanical rotating LIDAR degrades over time and its lifespan is relatively short. Robotaxi companies now hardly use this type of LIDAR.

Schematic diagram of mechanical rotating LIDAR principle

To solve the problems of a mechanical rotating LIDAR, LIDAR manufacturers have optimized its internal structure: instead of rotating the whole assembly, the unit scans by reflecting the laser off a rotating mirror inside it. This led to the rotating-mirror LIDAR, as shown in the diagram below:

Because rotating-mirror lidar technology developed early, there are already many mature vehicle-grade products on the market for automakers to choose from. Both the Innovusion lidar mounted on the NIO ET7 and the Livox lidar mounted on the XPeng P5 belong to the rotating-mirror category in their internal structure.

Whether it is a mechanical rotating lidar or a rotating-mirror lidar, a motor must drive a mechanical structure (the laser transmitter and receiver, or the laser reflector) to complete the laser scan. Because of this mechanical structure, the laser beam distribution and the point cloud acquisition frequency (10 frames per second) of a traditional motor-scanned lidar are generally fixed.

As an autonomous driving engineer, I have encountered some difficulties in using the first-generation lidar in practice.

Although many lidar manufacturers claim that the maximum detection distance can reach 150 meters, in reality, point clouds beyond 100 meters are difficult to annotate because they are too sparse, and in most cases, they are filtered out as noise by algorithms.

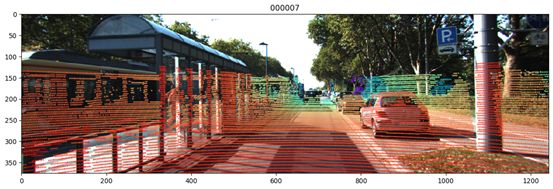

As shown in the figure, the lidar point cloud is projected onto the camera image through a coordinate transformation. The nearby car is still covered by a fairly dense set of points, while the points on the slightly farther car are barely visible.
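The projection mentioned here can be sketched as a rigid transform followed by a pinhole camera model. This is a minimal illustration, not the pipeline used to produce the figure: the function name `project_point`, the extrinsic rotation `R`, the zero translation, and the intrinsics (focal length 1000 px, principal point at 960, 540) are all hypothetical values chosen for the example.

```python
def project_point(point_lidar, rotation, translation, fx, fy, cx, cy):
    """Project one lidar point (x, y, z) into pixel coordinates (u, v).

    rotation is a 3x3 row-major matrix and translation a 3-vector that map
    lidar coordinates into the camera frame (z forward, x right, y down).
    Returns None when the point lies behind the camera.
    """
    # Rigid transform: p_cam = R * p_lidar + t
    x, y, z = (
        sum(rotation[i][j] * point_lidar[j] for j in range(3)) + translation[i]
        for i in range(3)
    )
    if z <= 0:
        return None
    # Pinhole model: scale by focal length, divide by depth, shift to image centre
    return (fx * x / z + cx, fy * y / z + cy)


# Assumed extrinsics: lidar axes (x forward, y left, z up) mapped onto
# camera axes (z forward, x right, y down), with both sensors co-located.
R = [[0, -1, 0],
     [0, 0, -1],
     [1, 0, 0]]
t = [0.0, 0.0, 0.0]

for p in [(20.0, 0.0, 0.0), (20.0, 2.0, 0.0), (-5.0, 0.0, 0.0)]:
    print(p, "->", project_point(p, R, t, 1000, 1000, 960, 540))
```

A point straight ahead lands on the principal point, a point to the left shifts toward smaller `u`, and a point behind the sensor is discarded. In a real system the extrinsics and intrinsics come from calibration.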

In addition, it can be seen that there is a gap between adjacent beams, and the farther the distance, the larger that gap becomes. If a low or irregular target (such as a stone or a fallen traffic cone) happens to sit between two beams, it is difficult to detect it stably even while the vehicle is moving. Such targets are also hard to perceive with vision, which can easily create safety hazards for the driver, and this is a typical source of corner cases in the R&D stage.
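The growth of the gap with distance follows from simple geometry: for a fixed angular spacing between beams, the spacing on a vertical surface is roughly the distance times the tangent of that angle. The sketch below assumes a vertical resolution of 0.4°, a hypothetical value chosen only for illustration (the article does not give a figure for first-generation units), and `beam_gap_m` is a name invented for this example.

```python
import math


def beam_gap_m(distance_m, vertical_resolution_deg):
    """Vertical spacing between two adjacent beams on an upright surface."""
    return distance_m * math.tan(math.radians(vertical_resolution_deg))


ASSUMED_RESOLUTION_DEG = 0.4  # hypothetical, not taken from the article

for d in (20, 50, 100, 150):
    gap = beam_gap_m(d, ASSUMED_RESOLUTION_DEG)
    print(f"{d:>4} m: {gap:.2f} m between adjacent beams")
```

Under this assumption the gap is about 0.7 m at 100 m, which is taller than a typical traffic cone, so such an object can fall entirely between two beams.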

How does the second-generation lidar break through the dilemma?

Faced with the above difficulties, lidar manufacturers have launched the second-generation lidar.

At this year’s Guangzhou Auto Show, I noticed that most automakers still choose the first-generation lidar, with mass production scheduled for next year. Models equipped with the second-generation lidar are still uncommon. One example is the AION LX Plus released by GAC Aion, which carries a second-generation lidar, RoboSense’s M1.

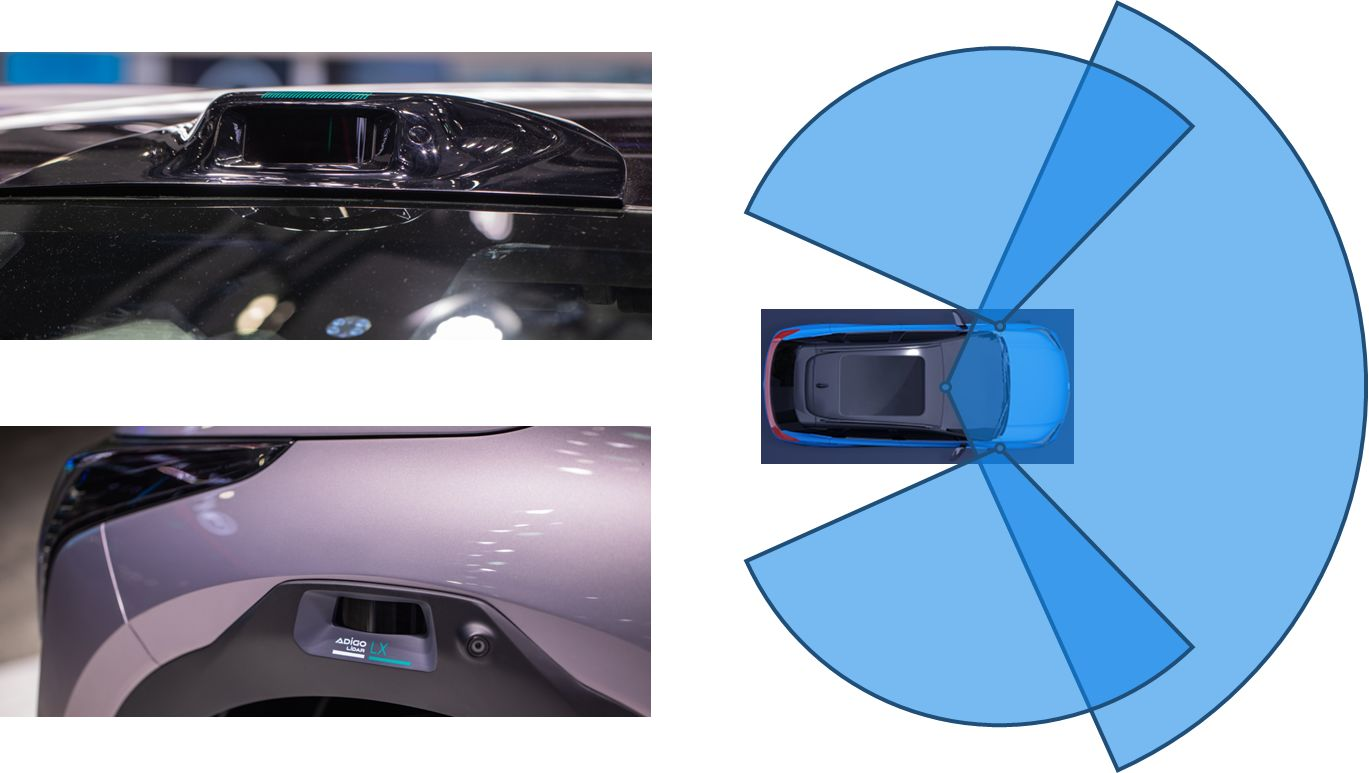

Whereas many models have laser perception only in the forward direction, the GAC AION LX Plus mounts one lidar on the roof, in the same module as the camera, and combines it with two blind-spot lidars near the front wheels to achieve a 300° laser perception range, as shown in the diagram below:

The biggest difference between the second-generation lidar and the first-generation lidar is that it abandons the original mechanical rotating-mirror structure and uses a high-precision MEMS micro-mirror to steer the light path and complete the laser scan, similar to the diagram below:

Schematic diagram of a MEMS micro-mirror reflecting the laser

The MEMS micro-mirror design lets users change the region and frequency of the scan themselves, and thereby the vertical resolution and the frame rate, which is very helpful for handling corner cases in intelligent driving.

Breakthrough in high-speed scenarios: adjustable vertical resolution

Compared to the traditional mechanical rotating-mirror structure, the MEMS micro-mirror is more flexible, and its reflection angle can be adjusted through programming. The second-generation lidar can therefore change the distribution of its laser beams and freely adjust the angular range and vertical resolution of the Region of Interest (ROI) to suit different driving scenarios.

As shown in the diagram below, the scanning method on the left spaces the laser beams evenly. In autonomous driving, little scanning is needed for the sky or the nearby ground (the upper and lower parts of the point cloud); the real ROI is the road. The mode on the right, which can raise the beam density in a specific area (the road surface), suits an autonomous driving system better, because the ROI differs between high-speed and low-speed driving.
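The difference between the two scanning patterns can be sketched by generating the elevation angle of each scan line. This is an illustration only, not RoboSense's interface: `beam_elevations`, the ±12.5° field of view, the ±2° ROI, and the 0.5° and 0.1° steps are assumptions made for the example, and the sketch ignores any fixed point budget a real sensor may have.

```python
def beam_elevations(fov_deg, roi_deg, coarse_step_deg, fine_step_deg):
    """Return scan-line elevation angles, fine inside the ROI, coarse outside.

    fov_deg and roi_deg are (min, max) tuples in degrees.
    """
    scale = 100  # work in hundredths of a degree to avoid float drift
    lo, hi = (round(a * scale) for a in fov_deg)
    roi_lo, roi_hi = (round(a * scale) for a in roi_deg)
    coarse = round(coarse_step_deg * scale)
    fine = round(fine_step_deg * scale)

    angles = []
    angle = lo
    while angle <= hi:
        angles.append(angle / scale)
        # Take a small step while inside the ROI, a large one otherwise
        angle += fine if roi_lo <= angle < roi_hi else coarse
    return angles


def count_in(angles, lo_deg, hi_deg):
    """Number of scan lines whose elevation falls inside [lo_deg, hi_deg]."""
    return sum(lo_deg <= a <= hi_deg for a in angles)


# Evenly spaced pattern: an empty ROI means every step is coarse
uniform = beam_elevations((-12.5, 12.5), (0, 0), 0.5, 0.5)
# ROI pattern: denser lines in the band around the horizon
focused = beam_elevations((-12.5, 12.5), (-2, 2), 0.5, 0.1)

print("uniform:", len(uniform), "lines,", count_in(uniform, -2, 2), "in ROI")
print("focused:", len(focused), "lines,", count_in(focused, -2, 2), "in ROI")
```

With these assumed values the ROI band receives several times more scan lines in the focused pattern, while the sky and near-ground regions keep the coarse spacing.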

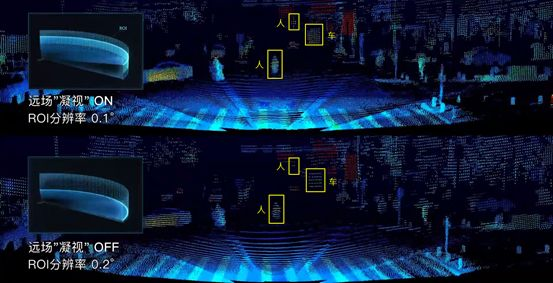

The diagram below shows the point clouds of the same targets in the same scene before and after changing the ROI vertical resolution. After the resolution is adjusted to 0.1° (upper part of the image), there are noticeably more points on the vehicles and pedestrians ahead than before the adjustment (lower part of the image).

Adjusting the ROI vertical resolution plays a crucial role in perceiving targets in high-speed scenarios. It is especially helpful for distant cars and for static obstacles such as traffic cones, dropped tires, and the like. A longer perception distance at high speed gives the planning and control systems more time to complete a lane change in advance, thereby improving the overall autonomous driving experience.
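A rough way to see why a finer vertical resolution puts more points on a target is to count how many scan lines fit within the angle the target subtends. The 0.1° figure comes from the text; the 0.2° "before" value, the 1.7 m pedestrian height, and the 100 m distance are assumptions for illustration, as is the function name `scan_lines_on_target`.

```python
import math


def scan_lines_on_target(target_height_m, distance_m, vertical_resolution_deg):
    """Approximate number of scan lines that land on an upright target."""
    angular_height_deg = math.degrees(math.atan(target_height_m / distance_m))
    return int(angular_height_deg / vertical_resolution_deg)


# Assumed scenario: a 1.7 m pedestrian standing 100 m ahead
for resolution_deg in (0.2, 0.1):
    n = scan_lines_on_target(1.7, 100, resolution_deg)
    print(f"{resolution_deg}° resolution: about {n} scan lines on the pedestrian")
```

Halving the angular step roughly doubles the number of lines that hit the target, which makes distant objects easier to separate from noise.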

Breaking through city driving scenarios with high frame-rate mode

Apart from adjusting the vertical resolution in high-speed scenarios, the second-generation LiDAR sensor can utilize its high frame-rate data mode to enhance the autonomous driving experience in low-speed scenarios, particularly city driving scenarios. These scenarios involve numerous e-bikes passing by and cars cutting in quickly, which could result in severe collisions if left unaddressed.

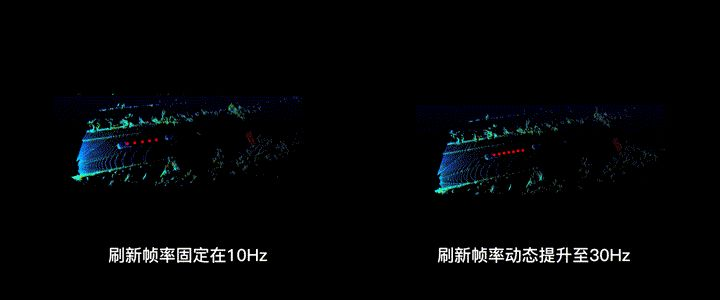

Most first-generation LiDAR sensors operate at a fixed frame rate of 10 Hz (scanning ten times per second). In contrast, the second-generation LiDAR sensor can achieve a maximum frame rate of 30 Hz (scanning thirty times per second), thus significantly reducing the perception latency and providing much more timely perception information. The following animation demonstrates the data volume difference between the 10 Hz and 30 Hz sampling rates for the same scenario.
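The latency difference can be made concrete with a little arithmetic: the time between frames is the inverse of the frame rate, and the distance a vehicle covers in that time follows from its speed. The 60 km/h city speed below is an assumed example value, and the function names are invented for this sketch.

```python
def frame_period_ms(frame_rate_hz):
    """Time between two consecutive point cloud frames, in milliseconds."""
    return 1000.0 / frame_rate_hz


def distance_between_frames_m(speed_kmh, frame_rate_hz):
    """Distance travelled at speed_kmh during one frame period, in metres."""
    return speed_kmh / 3.6 / frame_rate_hz


ASSUMED_SPEED_KMH = 60  # hypothetical city driving speed

for hz in (10, 30):
    period = frame_period_ms(hz)
    travelled = distance_between_frames_m(ASSUMED_SPEED_KMH, hz)
    print(f"{hz} Hz: {period:.1f} ms per frame, {travelled:.2f} m travelled between frames")
```

At 10 Hz a new frame arrives every 100 ms; at 30 Hz the wait drops to about 33 ms, so the scene changes far less between two updates.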

LiDAR sensors can accurately detect a target’s position but cannot directly measure its speed. Speed is estimated from the change in position across multiple frames and the time interval between them. A higher point cloud frame rate therefore yields more timely and accurate speed estimates, faster recognition of subtle movements by other cars (e.g., cutting in) and of unexpected situations (e.g., a pedestrian suddenly stepping out from behind an obstruction), and an overall improvement in autonomous driving capability in city driving scenarios.
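The multi-frame speed estimate described above can be sketched as a finite difference between consecutive positions of a tracked target. This is a simplified illustration: production trackers typically add filtering, and the track below (a car drifting sideways at roughly 1 m/s while holding a 10 m gap) is made-up data. The name `estimate_velocity` is hypothetical.

```python
def estimate_velocity(track, frame_rate_hz):
    """Estimate (vx, vy) in m/s between each pair of consecutive frames.

    track is a list of (x, y) target positions in metres, one per frame.
    """
    dt = 1.0 / frame_rate_hz
    return [
        ((x1 - x0) / dt, (y1 - y0) / dt)
        for (x0, y0), (x1, y1) in zip(track, track[1:])
    ]


# Made-up track at 10 Hz: lateral offset shrinks by 0.1 m per frame
track_10hz = [(10.0, 3.0), (10.0, 2.9), (10.0, 2.8)]

for vx, vy in estimate_velocity(track_10hz, 10):
    print(f"vx = {vx:.2f} m/s, vy = {vy:.2f} m/s")
```

The first estimate only exists once the second frame has arrived, so at 30 Hz a cut-in can be noticed after about 33 ms instead of 100 ms.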

Innovations such as variable vertical resolution and high frame-rate mode effectively overcome the shortcomings of fixed point cloud format and fixed frame rate of first-generation LiDAR sensors, enabling autonomous driving systems to autonomously choose modes in both high-speed and city driving scenarios, thereby enhancing the overall driving experience.

Conclusion

Recently, many people online have claimed that domestic smart cars are just “piling up hardware,” mocking domestic brands for using it to hide a lack of software capability. While I don’t entirely agree with this view, I firmly believe that “the hardware of an autonomous car determines the upper limit of its autonomous driving capability.” If a model’s hardware cannot, even in theory, cope with certain corner cases, then for safety reasons it should be supplemented with hardware that can. Doing otherwise is irresponsible towards consumers.

By the same token, when choosing a LiDAR, one should opt for the more advanced technology with the higher ceiling. By selecting the second-generation LiDAR, the GAC Aion AION LX Plus has set a good example for intelligent driving in China.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.