GTC 2021 Arrives as Scheduled: Jensen Huang Delivers Keynote Speech

Yesterday afternoon, the “Leather Jacket Lord” Jensen Huang gave a high-energy, one-and-a-half-hour keynote speech at GTC 2021 (GPU Technology Conference). He introduced several new technologies that are expected to bring change to trillion-dollar industries. The main technical topics were AI platforms, conversational AI, digital twins, and autonomous driving. In short, the keynote was a call to embrace the Metaverse wholeheartedly.

Omniverse Avatar Platform

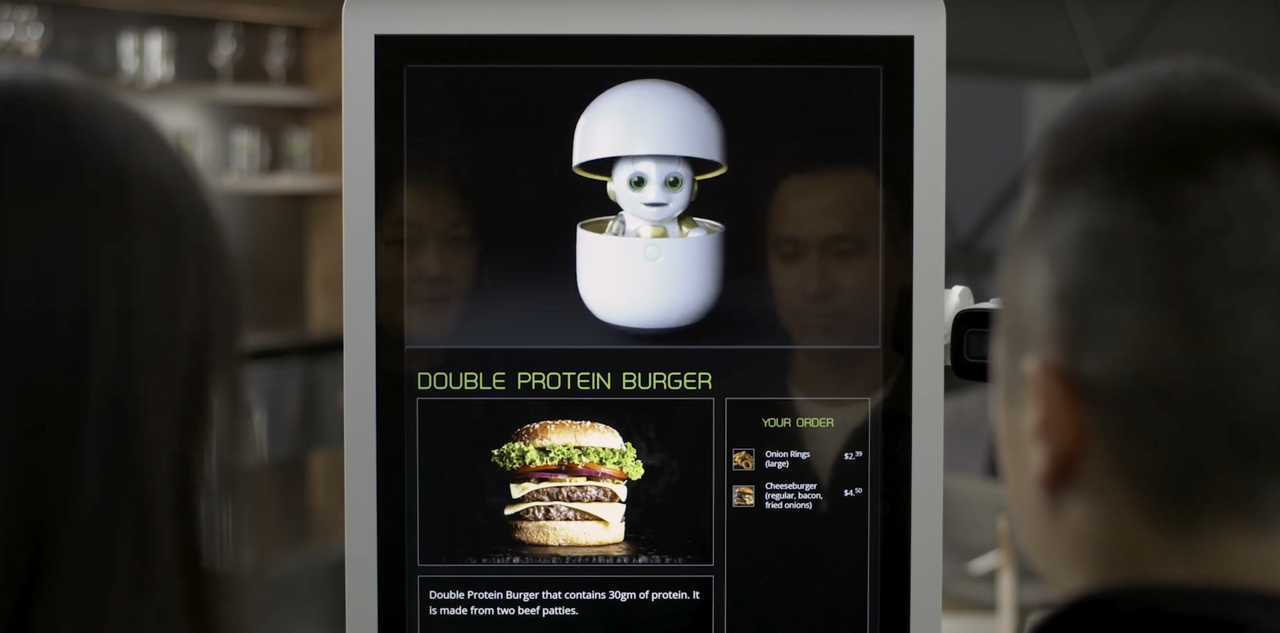

During the speech, Huang also presented a cute version of himself: Toy-Jensen (which Huang himself calls Toy-Me).

This cute version of Huang not only looks like the real Huang, but also speaks in a voice synthesized from his own. It is rendered with real-time ray tracing to ensure high-quality animation, and it can even make eye contact with the person it is talking to.

Toy-Jensen can also hold in-depth conversations. During the demonstration, it answered questions on climate, astronomy, and biology, and it had an answer even when the questions were tricky. This is exactly the kind of assistant that Omniverse Avatar, an all-round virtual AI assistant platform, is being launched to enable. In the next stage, AI assistants will become more human-like, with more warmth and vitality.

Omniverse Avatar empowers developers to create and customize AI assistants faster. These AI assistants can help users handle billions of customer service interactions, including restaurant ordering, banking transactions, and online appointments.

Omniverse Avatar’s use cases also include Project Tokkio for customer support and Project Maxine for video conferencing.

Project Tokkio targets scenarios such as taking orders in restaurants. Its virtual avatars can interact with multiple customers, understand their intentions, and proactively make recommendations.

At last year’s GTC, Huang introduced Maxine. A year later, NVIDIA has improved Project Maxine for customer service and video conferencing. With the Riva speech AI SDK, Maxine can transcribe and translate people’s speech in real time while preserving their tone and voice.

Omniverse Enables 3D Facial Animations with Voice Simulation of Any Virtual Avatar

Omniverse can drive the 3D facial animation of any virtual avatar from voice, in any language. On a video call, it gives the other side the impression that the speaker is maintaining eye contact even when they are looking at a script or meeting notes. Maxine animates the speaker’s facial expressions and movements from synthesized speech, even when the speaker is in a noisy environment such as a coffee shop.

Omniverse Avatar combines technologies such as graphics, computer vision, and speech recognition, all of which are NVIDIA’s own. Its natural language understanding is based on the Megatron 530B large language model, which lets an avatar understand and generate human language. It also includes a recommendation engine from NVIDIA Merlin and perception capabilities from NVIDIA Metropolis.

These technologies will also be applied to intelligent cars in the future. With Project Tokkio on board, a car will have a more intuitive and user-friendly AI assistant that can interact with passengers while proactively recommending better driving modes and travel routes.

Hyperion 8 Enables Faster Realization of Autonomous Driving

According to Jensen Huang, the majority of new electric vehicles will have true autonomous driving capabilities by 2024. NVIDIA is developing an end-to-end process for building autonomous vehicles, together with a full-stack in-vehicle autonomous driving system and a global cloud map.

NVIDIA Drive is a full-stack, open autonomous driving platform, and Hyperion 8 is NVIDIA’s latest complete hardware and software architecture. Its sensor configuration comprises 12 cameras, 9 millimeter-wave radars, 12 ultrasonic sensors, and a front-facing LiDAR, backed by two Orin chips.
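To make the configuration easier to compare against other platforms, the counts above can be written down as a small data structure. This is only an illustrative sketch: the `SensorSuite` class and its field names are hypothetical and are not part of any NVIDIA API; the numbers are the ones quoted in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorSuite:
    """Hypothetical container for the hardware counts quoted in the article."""
    cameras: int
    mmwave_radars: int
    ultrasonic_sensors: int
    front_lidars: int
    orin_chips: int  # compute hardware, not a sensor

    def sensor_count(self) -> int:
        # Total number of sensing devices; compute chips are excluded.
        return (self.cameras + self.mmwave_radars
                + self.ultrasonic_sensors + self.front_lidars)


# Counts taken from the article's description of Hyperion 8.
HYPERION_8 = SensorSuite(cameras=12, mmwave_radars=9,
                         ultrasonic_sensors=12, front_lidars=1, orin_chips=2)

print(HYPERION_8.sensor_count())  # 34
```

Under these assumptions, the suite adds up to 34 sensing devices feeding the two Orin chips.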

Huang suggests that developing autonomous vehicles is really a process of turning cars into robots. The critical pillars of machine learning development are synthetic data generation and validation through Omniverse’s Drive Sim, and real-time robotics workflows built on Orin robotics chips.

In autonomous driving development, converting sensor data into a virtual-world representation that computers can use is a crucial step, and it is the first goal Huang mentioned. With the help of visual images and high-precision maps, Hyperion 8 can handle obstacle avoidance, localization, environment perception, and path planning, and ultimately bring the vehicle to its destination.

Moreover, the architecture not only provides high-fidelity perception, but also has redundancy and fault-tolerance mechanisms. The hardware also has enough computing power and programmability to accommodate software improvements throughout the vehicle’s lifecycle.

NVIDIA collects petabytes of road data from all over the world and has about 3,000 well-trained labelers to turn it into training data. However, synthetic data generation is still the cornerstone of NVIDIA’s data strategy. Drive Sim Replicator is a synthetic data generator built on the Omniverse platform. It is used to bring up the AI labeling tools and models before Hyperion 8 is built and data is collected. Replicator can also produce ground-truth labels in ways that humans cannot.

NVIDIA has also established a material library for LiDAR and is building one for millimeter-wave radar. All of this work serves multi-sensor fusion in autonomous driving.

Throughout this high-energy segment, Huang emphasized that NVIDIA’s autonomous driving architecture, Hyperion 8, is built on data and multi-sensor fusion, and that NVIDIA obtains accurate beam reflections through physical simulation. In addition, high-precision maps give the autonomous driving solution an extra God’s-eye view and additional redundancy.

Talking about concepts alone won’t cut it. During the speech, Huang also showed a road test video of a Mercedes-Benz S-Class equipped with the Hyperion 8 architecture. The test route was around NVIDIA headquarters. In this road test, the car handled lane changes, yielding to pedestrians at crossings, intersections, roundabouts, and cloverleaf interchanges one after another.

The road test covered both closed roads and open urban roads, and each situation was handled very naturally, almost indistinguishable from human driving. In addition, the driver kept their hands off the wheel throughout the journey and only watched the road ahead.

According to Huang, autonomous driving can not only change the way we travel and improve safety. Once Maxine is integrated into the vehicle, it can also completely change the way we interact with cars. Maxine is like a personal butler, and it can show passengers what the car sees, building trust between humans and machines.

Nowadays, the focus of assisted driving is shifting from closed roads to open city roads. The uncertainty of open roads is the biggest hidden danger for assisted driving, and there are too many long-tail scenarios that require continuous data collection, optimization, and iteration. Huawei ADS and XPeng NGP have inspired us to think about the future of assisted driving on open roads, and now NVIDIA Hyperion 8 feeds that imagination as well.

Huang’s One More Thing…

NVIDIA’s virtual world simulation engine, Omniverse, ran through the entire keynote speech. Robots, autonomous driving fleets, warehouses, factories, and even entire cities will be created, trained, and operated in Omniverse digital twins.

Unmanned warehousing and logistics could save logistics companies billions of dollars every year. Delivery routing is a hard problem: there are 87 billion ways to deliver 14 pizzas, so even for Domino’s, delivering pizzas within 30 minutes is no easy task. NVIDIA aims to cut costs and improve efficiency in warehousing and logistics, and to provide the best solutions to such delivery problems.
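The article does not say where the figure of 87 billion comes from. One plausible reading, offered here as an assumption, is that it counts the possible orders in which a single driver could visit 14 delivery stops, which is 14 factorial. The helper name `delivery_orderings` below is purely illustrative.

```python
import math


def delivery_orderings(stops: int) -> int:
    """Number of distinct orders in which one driver can visit `stops` addresses."""
    return math.factorial(stops)


ways = delivery_orderings(14)
print(f"{ways:,}")  # 87,178,291,200
```

Under that assumption the count is about 87.2 billion, which matches the number quoted in the keynote and shows why checking every route by brute force quickly becomes impractical.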

With the help of Omniverse, autonomous vehicles in warehouses and factories can be simulated and validated on a large scale. NVIDIA will also build a digital twin to simulate and predict climate change, named E-2, or Earth-2. Earth-2 is a digital twin of the Earth that will run in Omniverse at a million times the speed. All of the technologies NVIDIA has invented so far are essential for realizing Earth-2.

Huang’s speech reached its climax at the very end, when he said: “I can’t think of anything more magnificent and more important than this.”

To be honest, I didn’t fully understand this keynote, and the only takeaway I have is the widely repeated saying that technology benefits humanity. This is in line with the keynote’s easter egg.

Q: What kind of people are the greatest?

Toy-Jensen: The greatest people are those who treat others well!


This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.