Author: Mr. Yu

Shanghai, September 27, 2021, clear, windless, slightly stuffy.

At the press conference launching the MINIEYE I-CS (In-Cabin Sensing) production solution, Mr. Yu met Liu Guoqing, founder and CEO of MINIEYE, and Yang Yihong, head of the cabin business unit. Although the event was short, the density of the information released, Liu Guoqing’s charm, and Yang Yihong’s command of the subject left a deep impression on Mr. Yu.

MINIEYE was founded by a group of PhDs who returned from overseas, specializes in ADAS products, and has already reached Series D financing. For the company, the I-CS (In-Cabin Sensing) solution marks a shift from “outside the car” to “inside the cabin”.

It is understood that the solution analyzes occupants’ intentions and behaviors by visually monitoring, tracking, and identifying human features such as head orientation, facial expression, line of sight, gestures, and body posture, in order to provide a safe and intelligent driving and cabin experience.

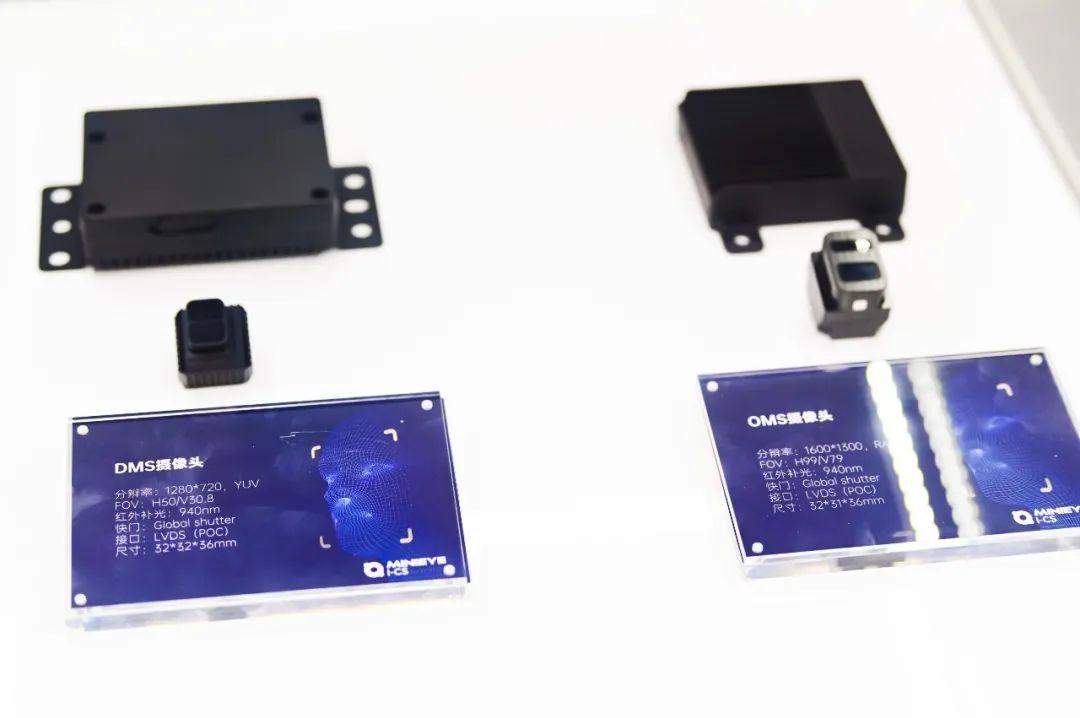

Products built on this solution can offer reliable in-cabin sensing technology and supporting services for intelligent cars, in the form of driver monitoring systems (DMS), occupant monitoring systems (OMS), and object recognition and human-vehicle interaction systems, to ensure the safety and comfort of the cabin.

By the time the cabin sensing solution was officially released, I-CS had already been fitted to more than 30 car models, and installations in commercial vehicles had reached 100,000.

After repeated exploration and validation, MINIEYE has concluded that the key to an excellent intelligent-cabin user experience “lies in seeing needs and making proactive decisions.”

Currently, the I-CS solution is specially designed for five major application scenarios to provide proactive services: seamless entry, safe takeover, fatigue monitoring, child care, and multi-person entertainment.

Let’s take a look at them one by one.

Seamless Entry

In terms of user experience, we believe that “seamless entry” may be a more fitting term.

I-CS uses seamless identity recognition and liveness detection, with both 2D and 3D camera solutions. The system recognizes and verifies the user’s identity as soon as the user approaches the vehicle (sensor), without requiring any manual instruction. It then automatically opens the car door and logs the user in to the cabin system.

This is a bit like Face ID on a phone: pick up the phone and, without any further action, it unlocks once it recognizes the user.

I-CS also cross-verifies identity using both RGB and IR images to improve recognition efficiency and security. In other words, the trick of defeating security algorithms with photos or moving images, popular in short online videos, does not work here.

Safe Takeover

I-CS offers two vision solutions. By monitoring the driver’s line of sight, it determines whether the gaze falls within one of seven gaze areas, including the road ahead, the center console, the AI assistant, and the rearview mirror. By analyzing the driver’s attention in this way, it helps ensure that the driver can take over from autonomous driving mode at critical moments.
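
The gaze-area check described above can be sketched as a lookup from estimated gaze angles to cabin regions. This is only an illustration under stated assumptions: the article names four of the seven areas and gives no geometry, so the angle ranges, the function names, and the attention ratio below are all hypothetical.

```python
from typing import Dict, Optional, Sequence, Tuple

# Hypothetical gaze areas as (yaw_min, yaw_max, pitch_min, pitch_max) in degrees.
# Yaw is positive toward the passenger side, pitch is positive upward.
# All bounds are invented for illustration; the article gives none.
GAZE_AREAS: Dict[str, Tuple[float, float, float, float]] = {
    "road_ahead": (-15.0, 15.0, -10.0, 10.0),
    "center_console": (20.0, 45.0, -40.0, -15.0),
    "rearview_mirror": (20.0, 40.0, 10.0, 25.0),
}


def classify_gaze(yaw: float, pitch: float) -> Optional[str]:
    """Return the first gaze area containing the direction, or None."""
    for name, (y0, y1, p0, p1) in GAZE_AREAS.items():
        if y0 <= yaw <= y1 and p0 <= pitch <= p1:
            return name
    return None


def ready_for_takeover(samples: Sequence[Tuple[float, float]],
                       min_ratio: float = 0.7) -> bool:
    """Treat the driver as attentive if enough recent samples are on the road."""
    if not samples:
        return False
    on_road = sum(1 for yaw, pitch in samples
                  if classify_gaze(yaw, pitch) == "road_ahead")
    return on_road / len(samples) >= min_ratio
```

A production system would presumably work from calibrated 3D gaze rays rather than fixed angle boxes; the boxes simply make the idea of “gaze areas” concrete.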

Fatigue Monitoring

First, some background.

Under the EU’s new General Safety Regulation, Reg. (EU) 2019/2144, a Driver Drowsiness and Attention Warning (DDAW) system becomes mandatory for newly type-approved vehicles in categories M and N. When drowsy driving is detected, the system must issue a warning directly to the driver, with the aim of improving road safety.

Let’s look at I-CS now.

Yang Yihong stated that, for individual driving scenarios, I-CS has built a robust, DDAW-compliant fatigue monitoring system, and that the company is confident of passing EU DDAW testing in 2022.

The system monitors the driver’s head posture, degree of eyelid opening, gaze direction, blink frequency, and other indicators in real time, and quantitatively grades the degree of fatigue based on different behavioral characteristics.

I-CS can warn or terminate dangerous driving behaviors in a timely manner based on the driver’s different levels of fatigue, reducing the incidence of accidents.
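
As a rough illustration of what “quantitatively grading” fatigue can mean, the sketch below uses PERCLOS, a commonly cited drowsiness measure (the share of time the eyes are nearly closed). This is a swapped-in technique, not MINIEYE’s method: the article does not describe the I-CS algorithm, and the closure threshold and level cut-offs here are invented.

```python
from typing import Sequence


def perclos(eye_openness: Sequence[float], closed_below: float = 0.2) -> float:
    """Fraction of frames in which the eye is (nearly) closed.

    eye_openness holds per-frame eyelid opening from 0.0 (shut) to 1.0 (open).
    The 0.2 closure threshold is an illustrative assumption.
    """
    if not eye_openness:
        raise ValueError("need at least one frame")
    closed = sum(1 for value in eye_openness if value < closed_below)
    return closed / len(eye_openness)


def fatigue_level(eye_openness: Sequence[float]) -> int:
    """Map PERCLOS to a coarse level: 0 alert, 1 mild, 2 severe.

    The cut-offs are hypothetical, chosen only to show the grading step.
    """
    score = perclos(eye_openness)
    if score >= 0.30:
        return 2
    if score >= 0.15:
        return 1
    return 0
```

A single eyelid signal is only a starting point; the indicators listed above (head posture, gaze direction, blink frequency) would have to be combined before any warning decision.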

This can be regarded as MINIEYE’s reasonable prediction of China’s autonomous driving-related policies and technical requirements. If the prediction comes true, MINIEYE’s I-CS cabin solution will have a significant advantage in the industry.

Child Care

Mr. Yu believes that this will be one of the scenarios that attracts industry attention.

I-CS accurately distinguishes adults from children in the car by combining facial features, body proportions, and height information.

Once a child is recognized, the system activates its child care functions and monitors the child’s facial expression, body movements, and seat belt in real time. It reports what it sees to the parents in the front row, reminding them to take any necessary measures.

Yang Yihong specifically mentioned that in actual operation, I-CS will implement a multi-stage reminder plan.

When the system detects that a child has been left in the car, it prompts the driver to return through measures such as releasing the door locks, voice calls, and text messages. If there is no response for a long time, the system automatically raises an alarm to protect the child.
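
The multi-stage reminder plan can be sketched as a timed escalation ladder. The article lists the kinds of action but not their order or timing, so the delays and action names below are hypothetical.

```python
from typing import List, Tuple

# Hypothetical escalation ladder: (seconds without a response, action).
# Order and delays are invented; the article only lists the kinds of action.
ESCALATION_STEPS: List[Tuple[float, str]] = [
    (0, "release_door_locks"),
    (30, "voice_call"),
    (60, "text_message"),
    (180, "raise_alarm"),
]


def actions_due(seconds_unanswered: float) -> List[str]:
    """Return every action whose delay has elapsed, in escalation order."""
    return [action for delay, action in ESCALATION_STEPS
            if seconds_unanswered >= delay]
```

For example, `actions_due(45)` returns the first two steps, and the alarm only appears once the driver has failed to respond for the full final delay.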

Given how often children are forgotten and trapped in cars by their parents, it is better to build such solutions into products as early as possible than to keep criticizing those responsible, so that these tragedies stop recurring.

Multi-Person Entertainment

I-CS supports several natural body-language interaction methods, such as passengers’ eye contact, gestures, and head movements, enabling interaction by multiple people in multiple seats. In this scenario, intelligent cabin functions are no longer concentrated in the front row near the center console; every passenger in the cabin can clearly feel them.

Forgive Mr. Yu for his limited knowledge. He can only think of the absurd scene in the video where the front-row passenger stared at the car screen and flailed their arms playing “Fruit Ninja.”

Jokes aside, we believe that car manufacturers’ product managers and game development teams will put these capabilities to better use and give users a richer active entertainment experience.

Summary

We can see that the product direction of I-CS is not to forcibly reinforce and repackage the cabin visual perception solutions common in the industry, but to strengthen the “seamless” feel of its intelligent services by adopting interaction methods that cost little to learn and come close to people’s natural body language.

From another perspective, humans grasp the concept of “fatigue” intuitively, but AI does not. A vision system cannot make a crude quantitative judgment based only on simple objective facts such as how many times the driver’s eyelids open and close or how many times the driver yawns.

MINIEYE believes that technology without productization is just a “castle in the air”.

While upgrading its scenario-based experience, the I-CS cabin sensing solution has also accumulated extensive productization experience and has been verified in mass production. Through a one-stop “product + tools” service, MINIEYE supports the whole productization process, from algorithm design and function development through verification testing to final mass-production delivery.

During the design phase, MINIEYE proposed the underlying interactive logic of Intuitive Body Language, incorporating the concept of “human-vehicle interaction should be as natural and fluent as human-human communication” into the I-CS solution and fully utilizing intuitive body movements to form a natural user interface.

The benefits of this approach are straightforward. First, it effectively reduces the user’s learning cost of interaction. Second, it helps to create a real cabin interaction experience.

To meet the differing requirements of OEMs and Tier 1 suppliers, MINIEYE provides flexible, encapsulated software solutions. Its full-stack adaptability supports a variety of camera modules and mounting positions, such as the steering column, instrument panel, central control screen, A-pillar, and rearview mirror. With the patented Mini-Cali self-calibration system, I-CS automatically converts the camera coordinate system to the cabin coordinate system, even though the camera position varies from cabin to cabin.
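
Converting from a camera coordinate system to a cabin coordinate system is commonly expressed as a rigid transform, p_cabin = R · p_camera + t. The sketch below shows only that final step, assuming a calibration procedure has already produced a rotation `R` and a translation `t`; how Mini-Cali estimates them is not described in the article, and all names and values here are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def yaw_rotation(degrees: float) -> List[List[float]]:
    """Rotation matrix for a rotation about the vertical (z) axis."""
    a = math.radians(degrees)
    return [
        [math.cos(a), -math.sin(a), 0.0],
        [math.sin(a), math.cos(a), 0.0],
        [0.0, 0.0, 1.0],
    ]


def camera_to_cabin(point: Vector, rotation: Sequence[Vector],
                    translation: Vector) -> List[float]:
    """Apply p_cabin = R * p_camera + t to one 3D point."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


# Example with made-up values: a camera rotated 90 degrees about the
# vertical axis and mounted 0.5 m forward and 1.0 m above the cabin origin.
R = yaw_rotation(90.0)
t = [0.5, 0.0, 1.0]
gaze_target_cabin = camera_to_cabin([1.0, 0.0, 0.0], R, t)
```

Once every camera reports in the same cabin frame, downstream logic such as gaze areas no longer depends on where a particular camera is mounted.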

The MiniDNN accelerator, developed in-house, is deeply customized for I-CS algorithm design: the neural network models that I-CS commonly uses are optimized to approach theoretical peak performance. Using memory access and data layout techniques tailored to the ARM architecture, it reduces RAM access and improves cache hit rates.

Yang Yihong said that this self-developed technology is more friendly to the allocation of resources and computing power in integrated cabin solutions and lays a good foundation for future OTA upgrades.

Building on a mature R&D system, MINIEYE has established a complete in-house development toolchain and a semi-automatic data annotation platform. Multiple ground-truth systems and visual testing tools provide solid technical support for mass production and acceptance testing of I-CS.

Regarding data security, Yang Yihong said that MINIEYE attaches great importance to protecting user privacy. Whether locally or in the cloud, I-CS does not store photos. Instead, it converts the captured user images into 512-dimensional feature vectors to protect data security and user privacy.
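
To show how identity can be checked without keeping photos, the sketch below compares two feature vectors by cosine similarity, a common way to match face embeddings. Whether I-CS matches its vectors this way is an assumption; the function names and the 0.6 threshold are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("vectors must be non-zero")
    return dot / norm


def same_person(enrolled: Sequence[float], probe: Sequence[float],
                threshold: float = 0.6) -> bool:
    """Compare a stored vector with a fresh one; the threshold is illustrative."""
    return cosine_similarity(enrolled, probe) >= threshold
```

Only the enrolled vector needs to be stored; the camera frame that produced the probe vector can be discarded as soon as the vector is computed.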

>Regarding the balance between recognition accuracy and trigger sensitivity in the DMS and OMS systems, we will utilize various technical and product planning methods to accurately identify patterns such as eye tracking, locating dozens of facial features, and recognizing various gestures and postures under different lighting conditions. However, accuracy is only the first step. In fact, the definitions of sensitivity and accuracy cannot fully capture the overall experience. To achieve innovation in the final experience, we must collaborate deeply with our client team’s product managers and maintain an open attitude in our product delivery. We hope to offer the most competitive and accurate underlying technology modules to define and improve the product together with our customers.

As for the research on post-processing, MINIEYE’s cockpit team is divided into two major research and development groups. One is the deep learning group, which is responsible for providing accurate algorithmic structures. The other is the system group, which is called the post-processing team or the operation logic design team in other companies. Since external information detection can generally be quantified, we usually pursue accurate judgments and let the system make relevant decisions. However, it is crucial to emphasize that for the in-cabin environment, the core focus is not on accuracy but on the user experience. This means that the technology and product in the cabin need to be deeply integrated on both sides to achieve success and overcome any obstacles towards further product and market development. Therefore, our team has done a lot of post-processing logic-related work for each single feature, including defining the granularity of action start and stop commands, such as natural nodding and gesture strength. We have spent a lot of time and effort studying the natural movements of the human body in order to achieve a good user experience.

The second question: I noticed some information about multimodal perception and interaction today. Can you tell us whether MINIEYE has any related plans for the future?

As for the layout plans related to multi-modal perception and interaction, we will continue to focus on the development of our capabilities and explore new cutting-edge technologies, while also paying attention to the market and customer demand. We will maintain close communication with our customers while keeping an eye on the latest trends and development in the industry, so as to provide better products and services.

In the multimodal part, we have been working on some related technical reserves, including iterations and refinements for some scenarios. If everything goes well, we will disclose the relevant information before the end of this year.

Also, as we mentioned earlier, we still need to “fight together”. We have good partners in the field of speech, and we will explore whether we can create better experiences from a technical perspective together.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.