Introduction

Autonomous vehicles use technology to drive themselves, with the aim of reducing driver fatigue and improving driving safety.

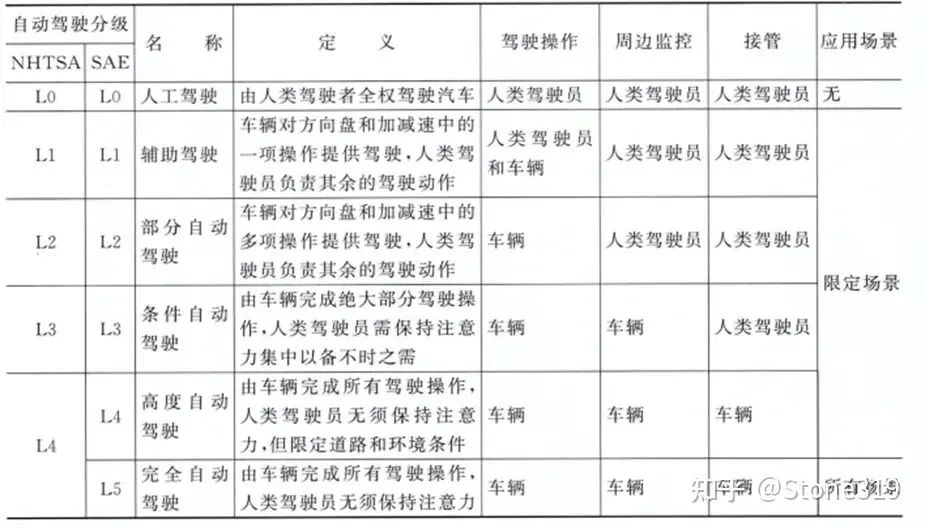

Autonomous driving vehicles can be categorized into five levels based on the degree of automation: assisted driving, partial automation, conditional automation, high automation, and full automation.

Fully automated driving frees up the time people spend driving, turning the car into a "third space" for work and leisure during travel, alongside home and office.

To achieve autonomous driving, the vehicle needs to answer three basic questions: where it is, where it is going, and how to get there. Answering these questions involves multiple fields and technologies, including hardware platforms, software algorithms, interaction, and safety. This article briefly outlines the relevant technologies.

System Architecture

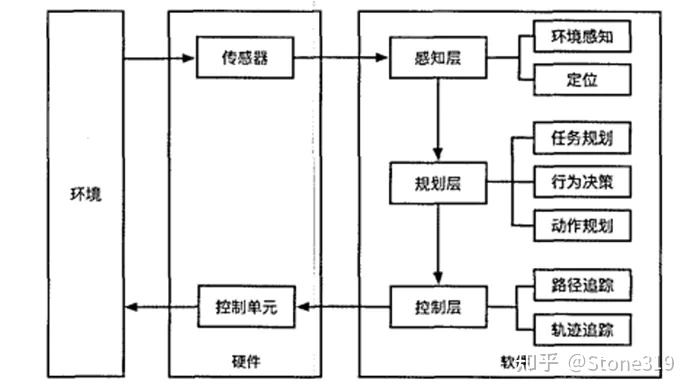

Any control system is inseparable from three parts: input, control, and output. Autonomous driving systems are no exception.

An autonomous vehicle senses its surroundings through sensors and passes the data to the computing platform, which determines the vehicle's position and constructs a driving situation map. Based on this map, the system makes behavior decisions and plans a path, then precisely controls the chassis actuators to carry out the planned motion.

On the hardware side, the autonomous driving system includes sensors that collect the various types of input information, a computing platform that processes this input and plans and controls vehicle motion, and chassis actuators that carry out steering, braking, and acceleration.

On the software side, it includes a perception layer that processes sensor data, a planning layer that plans and decides vehicle motion, and a control layer that precisely controls the actuators. The software runs mainly on the computing platform, with some perception processing done inside intelligent sensors. Because the volume of data and the demand for computing power keep growing, there is a trend toward moving some data processing to cloud servers.

Hardware Platform

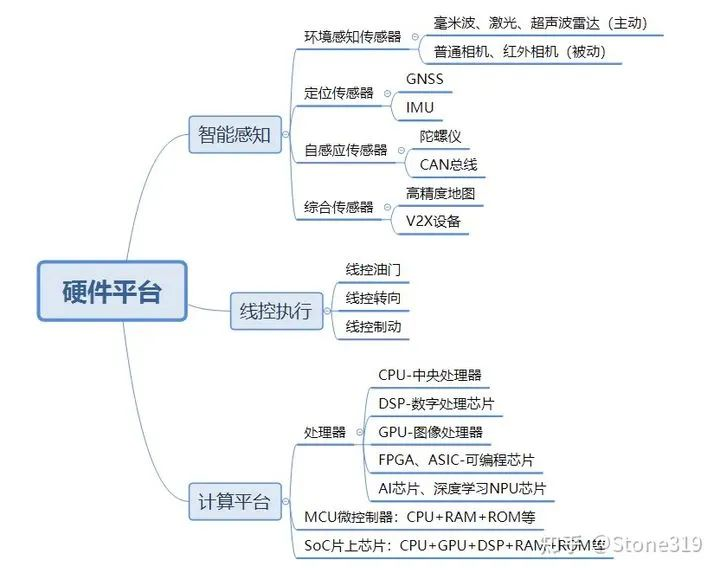

The hardware platform for autonomous driving systems mainly includes three parts: intelligent perception, drive-by-wire actuation, and the computing platform. These correspond to the input, output, and control of a general control system.

Intelligent perception includes sensors for environment perception, such as millimeter-wave radar, LiDAR, ultrasonic sensors, and cameras; positioning sensors that determine the vehicle's position, such as Global Navigation Satellite System (GNSS) receivers and the inertial measurement unit (IMU); and vehicle-state sensors and interfaces that provide vehicle body data, such as gyroscopes and the vehicle's Controller Area Network (CAN) bus.

In a broad sense, high-precision navigation maps and V2X devices that communicate with other equipment are also part of the perception hardware.

The computing platform is responsible for processing perception data, making planning decisions, and controlling the actuators. Because of the large data volumes and the complexity of the algorithms, specialized processors are used, such as GPUs for image and parallel processing, DSPs for digital signal processing, FPGAs for reprogrammable logic, and dedicated AI chips.

Drive-by-wire actuation carries out the vehicle's motion under the control of the computing platform. Its actuators include the steering mechanism, the brake system, and the powertrain.

Software Platform

In addition to the underlying drivers and the real-time operating system, the software platform mainly consists of the perception, planning, and control layers. The perception, localization, planning, and control algorithms are the core of the technology.

Perception Algorithms

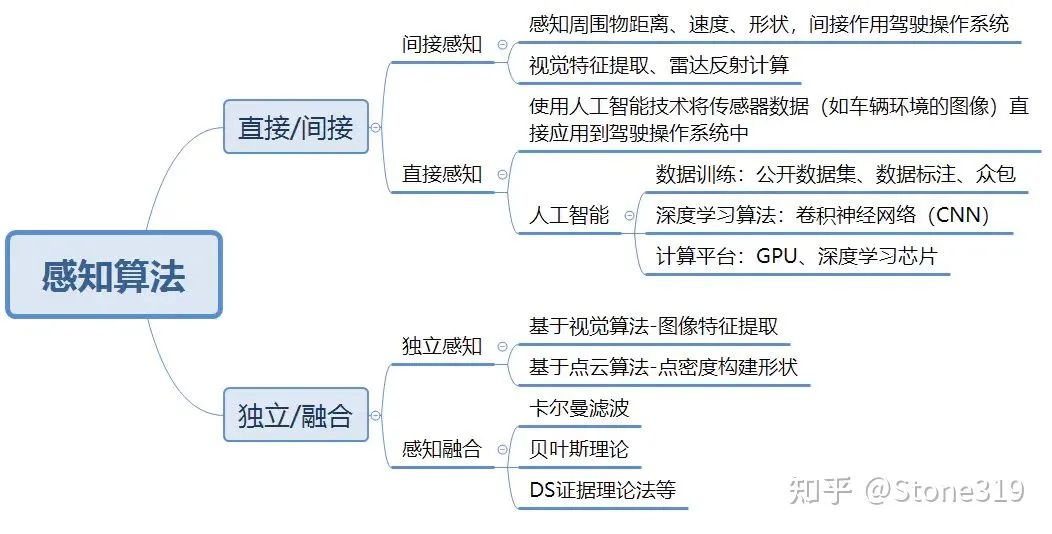

The purpose of perception is to inform the autonomous driving system of the vehicle’s surroundings, which areas can be traveled, and which areas have obstacles.

The method in wide use today is indirect perception: the system measures the distance, speed, shape, and other properties of surrounding objects, constructs a driving situation map from them, and then plans and controls the driving operations based on that map.

With the development of artificial intelligence, one future trend is to use deep learning to learn a mapping from sensor data (such as images of the vehicle's environment) directly to driving operations, so that the sensor data drives the vehicle controls without an intermediate map.

Perception sensors differ greatly in their strengths and weaknesses. Millimeter-wave radar, for example, is good at measuring the motion state of target objects, while a camera makes it easier to extract the shape of target objects for classification. Fusing data from several sensors exploits their respective strengths and improves the redundancy, accuracy, and timeliness of perception. Sensor fusion is widely used, with typical approaches including the Kalman filter and Bayesian methods.
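As a rough illustration of how a Kalman filter can fuse two sensors, the sketch below applies the scalar Kalman update to a single quantity, the distance to a target. The sensor readings, variances, and names (`Estimate`, `kalman_update`) are hypothetical and chosen only for this example; a production system would track a multi-dimensional state with a motion model.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    mean: float      # estimated distance to the target, in metres
    variance: float  # uncertainty of the estimate, in metres squared


def kalman_update(prior: Estimate, measurement: float, meas_variance: float) -> Estimate:
    """Fuse one measurement into the prior estimate (scalar Kalman update)."""
    # The gain weights the measurement by how much more certain it is than the prior.
    gain = prior.variance / (prior.variance + meas_variance)
    mean = prior.mean + gain * (measurement - prior.mean)
    variance = (1.0 - gain) * prior.variance
    return Estimate(mean, variance)


# Hypothetical values: a vague prior, a precise radar range, a noisier camera range.
prior = Estimate(mean=20.0, variance=4.0)
after_radar = kalman_update(prior, measurement=21.0, meas_variance=0.25)
fused = kalman_update(after_radar, measurement=19.0, meas_variance=1.0)

print(f"fused distance: {fused.mean:.2f} m, variance: {fused.variance:.3f} m^2")
```

The fused variance ends up smaller than that of either sensor alone, which is the sense in which fusion improves accuracy.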

Localization Algorithms

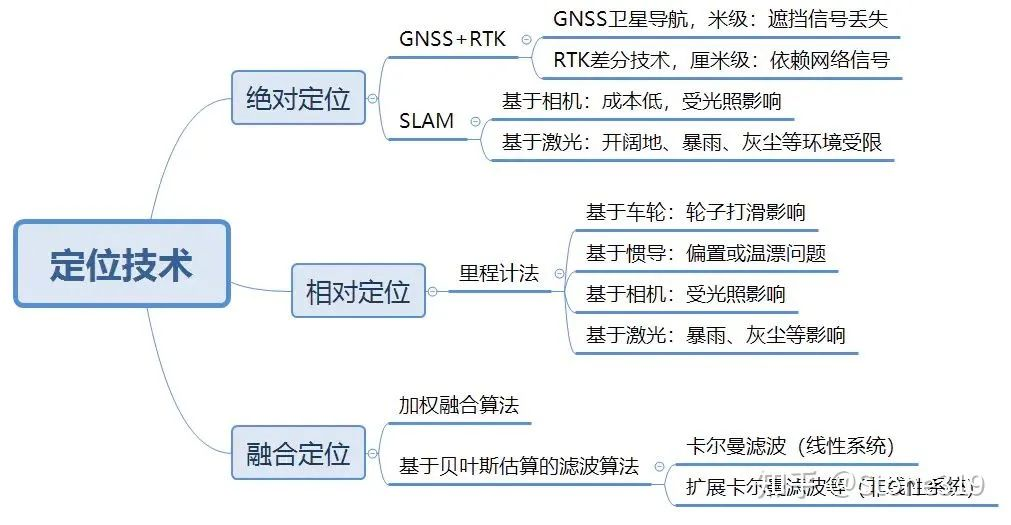

The purpose of localization is to tell the autonomous vehicle where it currently is. Only with precise localization can it determine where to go and how to get there.

Global navigation satellite systems (GNSS), such as GPS and BeiDou, can tell the vehicle its position on Earth, but their accuracy is generally at the meter level, which falls short of the centimeter-level accuracy that autonomous driving requires. Real-time kinematic (RTK) differential positioning is generally needed to reach centimeter-level accuracy.

GNSS accuracy degrades sharply when the signal is blocked, for example on roads flanked by tall buildings or dense trees, in tunnels, and in underground or indoor parking lots. In these cases, relative positioning is generally used to assist localization for short periods: odometry (dead reckoning) estimates the vehicle's motion from the IMU, and in some systems from wheel speed, camera, or LiDAR data.

SLAM (simultaneous localization and mapping) is widely used for indoor robot localization. It is a positioning method based on matching feature points in sensor data, and is usually divided into camera-based SLAM and LiDAR-based SLAM.

When applied to cars, camera-based SLAM is easily affected by lighting conditions, while LiDAR-based SLAM is easily affected by environmental factors such as heavy rain and dust. LiDAR-based SLAM is also limited in open spaces, where there may be no distinctive feature points to match.
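The relative positioning by odometry mentioned above can be sketched as dead reckoning: starting from the last trusted position, the pose is advanced step by step from measured speed and yaw rate. This is a minimal sketch under simplifying assumptions (planar motion, forward-Euler integration, noise-free inputs); the `Pose` type, function names, and sample values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres, in a local map frame
    y: float        # metres
    heading: float  # radians, counter-clockwise from the x axis


def dead_reckon(pose: Pose, speed: float, yaw_rate: float, dt: float) -> Pose:
    """Advance the pose by one time step using forward-Euler integration."""
    return Pose(
        x=pose.x + speed * math.cos(pose.heading) * dt,
        y=pose.y + speed * math.sin(pose.heading) * dt,
        heading=pose.heading + yaw_rate * dt,
    )


def integrate(pose: Pose, samples, dt: float) -> Pose:
    """Apply a sequence of (speed, yaw_rate) samples, e.g. while GNSS is blocked."""
    for speed, yaw_rate in samples:
        pose = dead_reckon(pose, speed, yaw_rate, dt)
    return pose


# Hypothetical tunnel segment: 10 m/s straight ahead for 1 s, sampled at 10 Hz.
last_fix = Pose(x=0.0, y=0.0, heading=0.0)
estimate = integrate(last_fix, [(10.0, 0.0)] * 10, dt=0.1)
print(estimate)
```

Because each step adds a little sensor error, the estimate drifts over time, which is why this kind of positioning is only suitable for short gaps.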

Navigation and Control Algorithms

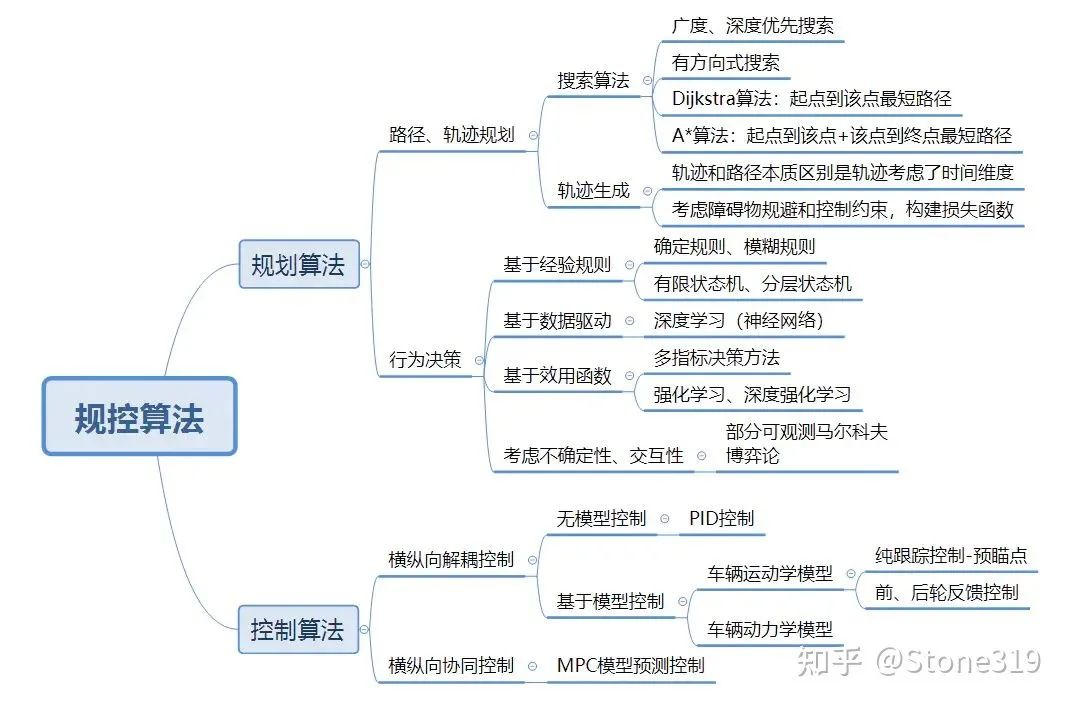

The purposes of navigation and control are to perform global planning of vehicle movements (e.g. from location A to location B), determine behavior decisions (e.g. overtaking or lane changing), perform local planning (e.g. conduct obstacle avoidance), and precisely control the vehicle to travel along the planned trajectory.

Global path planning and local trajectory planning both amount to searching for the best path. A common search algorithm is A*, which expands nodes in order of the cost already incurred from the start node plus an estimate of the remaining cost to the goal. A path and a trajectory differ in essence: a trajectory also takes the time dimension into account. Trajectory planning therefore handles obstacle avoidance and control constraints, constructs a cost function, and selects the optimal trajectory.
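To make the A* search concrete, here is a minimal sketch on a small occupancy grid. The grid layout, the 4-connected moves with unit cost, and the Manhattan-distance heuristic are assumptions made for this example; real planners typically search road graphs or lattices with richer cost terms.

```python
import heapq


def a_star(grid, start, goal):
    """Return a shortest list of (row, col) cells from start to goal, or None.

    grid is a list of equal-length strings in which '#' marks a blocked cell.
    """
    def heuristic(cell):
        # Manhattan distance never overestimates the cost of 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f = g + h, g, cell)
    best_cost = {start: 0}
    came_from = {}

    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route to this cell was found
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if not (0 <= r < len(grid) and 0 <= c < len(grid[0])) or grid[r][c] == "#":
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


# Hypothetical map: two blocked cells in the middle row.
grid = [
    "....",
    ".##.",
    "....",
]
path = a_star(grid, start=(0, 0), goal=(2, 3))
print(path)
```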

The most commonly used control algorithm is classical PID control. For lateral control of the vehicle, a simplified bicycle model is generally adopted, and a preview point on the path ahead is tracked with a simple geometric method such as pure pursuit, much as a human driver looks ahead while driving.
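The two ideas above can be sketched in a few lines: a PID controller for speed, and a preview-point steering law on the bicycle model. The steering law shown is pure pursuit, which is one common preview-point method and is an assumption here rather than something the article specifies; the gains, wheelbase, and the simplified "acceleration equals controller output" plant are likewise hypothetical.

```python
import math


class PID:
    """Textbook PID controller operating on an error signal."""

    def __init__(self, kp: float, ki: float, kd: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error: float, dt: float) -> float:
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def pure_pursuit_steering(x, y, heading, preview_point, wheelbase):
    """Front-wheel steering angle (rad) that arcs the rear axle through the preview point."""
    dx, dy = preview_point[0] - x, preview_point[1] - y
    lookahead = math.hypot(dx, dy)
    alpha = math.atan2(dy, dx) - heading  # bearing of the preview point in the vehicle frame
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)


# Longitudinal control: track 10 m/s, treating the PID output as acceleration.
pid = PID(kp=1.0, ki=0.5, kd=0.0)
speed, dt = 0.0, 0.1
for _ in range(200):
    speed += pid.step(10.0 - speed, dt) * dt

# Lateral control: a preview point ahead and to the left gives a left (positive) steer.
steer = pure_pursuit_steering(0.0, 0.0, 0.0, preview_point=(5.0, 5.0), wheelbase=2.7)
print(f"speed: {speed:.2f} m/s, steering: {math.degrees(steer):.1f} deg")
```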

To achieve more accurate and more robust control, more detailed vehicle kinematic models are also used, as are coordinated lateral and longitudinal control algorithms such as model predictive control (MPC).

Interaction Technology

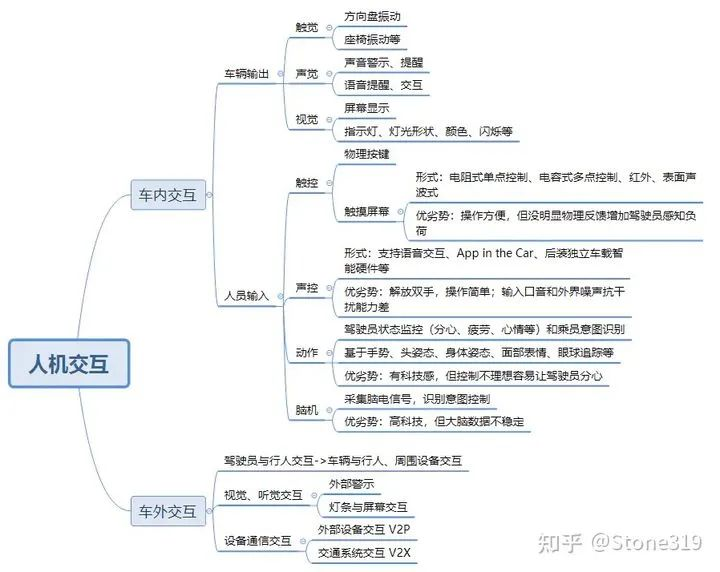

In traditional car interaction, drivers input driving intentions to the vehicle through the accelerator, brake pedal, gear shift, and other physical buttons or touch screens to control the vehicle systems. Outside the car, drivers interact with pedestrians through gestures or horns.

Currently, voice and gesture interaction for in-vehicle operation is becoming increasingly popular. To keep drivers from relying too heavily on automation before full autonomy is achieved, driver status monitoring is also widely used.

In the future, as autonomous driving and interaction technology improve, people will not need to pay as much attention to conditions outside and can make better use of their time inside the car. Occupants will interact with the vehicle through gestures, head posture, or even brainwaves.

Outside the car, self-driving vehicles will directly interact with pedestrians through external alerting, light bars, and screens, even interacting with pedestrians through mobile devices, or communicating with traffic systems through V2X devices.

Safety Technology

One of the main objectives of developing autonomous driving technology is to improve traffic safety and prevent accidents. Safety technology for the autonomous driving system is therefore particularly important.

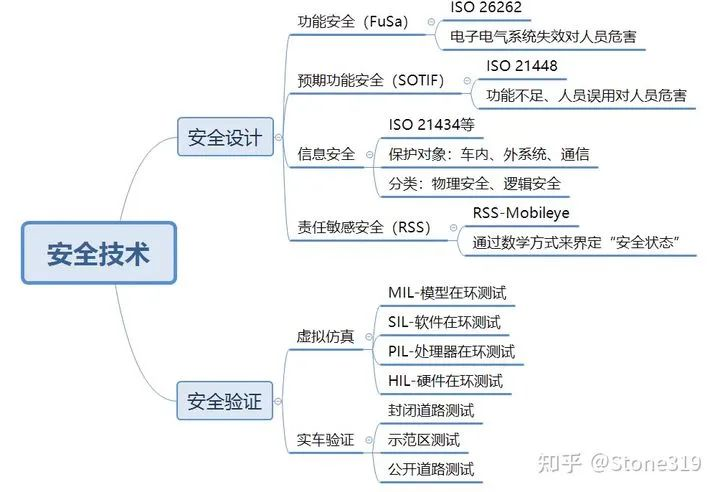

Safety technology has two dimensions. One is safety design, which ensures that safety is fully considered during the design and development of the autonomous driving system. The other is safety verification, which uses a range of verification techniques and methods to confirm that the system is reliable.

Safety design generally includes: functional safety design, which avoids harm to people caused by failures of electrical and electronic systems; safety of the intended functionality (SOTIF) design, which avoids harm caused by functional insufficiencies or misuse; cybersecurity design, which protects the physical and logical security of in-car and external communication by preventing intrusion into and attacks on the system; and responsibility-sensitive safety (RSS) design, which defines the "safe state" mathematically.
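As an example of defining a safe state mathematically, the sketch below computes the minimum safe following distance from the published RSS model: the rear vehicle is assumed to accelerate at its maximum for the whole response time and then brake only gently, while the front vehicle brakes as hard as possible. The function name and all parameter values are hypothetical and are not calibrated recommendations.

```python
def rss_min_gap(v_rear, v_front, response_time, a_max_accel, b_min_brake, b_max_brake):
    """Minimum safe longitudinal gap in metres between two cars in the same lane.

    Speeds are in m/s, accelerations in m/s^2 (all given as positive magnitudes).
    """
    # Worst case: the rear car keeps accelerating until its response time has elapsed.
    v_rear_after = v_rear + response_time * a_max_accel
    gap = (
        v_rear * response_time
        + 0.5 * a_max_accel * response_time ** 2
        + v_rear_after ** 2 / (2.0 * b_min_brake)  # rear car brakes gently
        - v_front ** 2 / (2.0 * b_max_brake)       # front car brakes as hard as it can
    )
    return max(gap, 0.0)


# Hypothetical scenario: both cars at 20 m/s with a 1 s response time.
gap = rss_min_gap(
    v_rear=20.0, v_front=20.0, response_time=1.0,
    a_max_accel=2.0, b_min_brake=4.0, b_max_brake=8.0,
)
print(f"minimum safe gap: {gap:.1f} m")
```

A planner built on this rule would treat any gap below the returned value as unsafe and respond, for example by braking.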

Safety verification generally includes: virtual simulation and real vehicle verification. Virtual simulation includes different types of verification and simulation such as model-in-the-loop testing (MIL), software-in-the-loop testing (SIL), processor-in-the-loop testing (PIL), and hardware-in-the-loop testing (HIL). Real vehicle verification can be divided into closed-road testing, demonstration zone testing and open-road testing.

Compared with traditional vehicles, the verification workload for autonomous vehicles is vastly larger. Relying on real-vehicle testing alone is practical in terms of neither time nor cost, so sufficient simulation testing is crucial.

In Conclusion

The technologies described above are viewed mainly from the perspective of single-vehicle intelligence. Intelligent vehicles are a major focus of future development, and their direction includes both automation and connectivity.

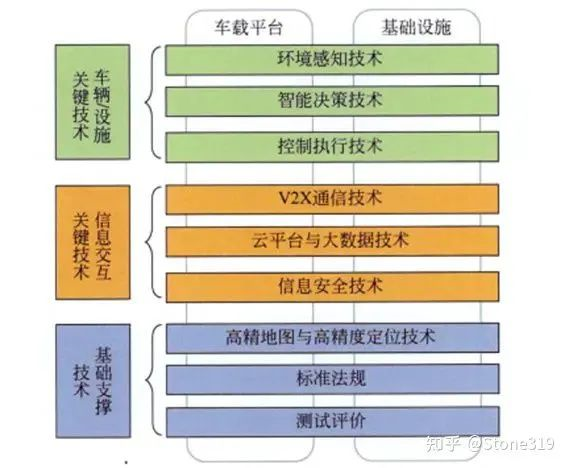

The key technologies of intelligent vehicles can be summarized as “three horizontals” and “two verticals”.

The “three horizontals” refer to vehicle/equipment technology, information interaction technology and basic support technology; the “two verticals” refer to on-board platforms and infrastructure construction.

Vehicle/equipment technology includes environmental perception technology, intelligent decision-making technology and control execution technology, etc.

Information interaction technology includes V2X communication technology, cloud platform and big data technology, information security technology, etc.

Basic support technology includes high-precision maps and high-precision positioning, standards and regulations, testing and evaluation technology, etc.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.