Layman’s Explanation of Tesla AI Day

Author: Winslow

After reading a translated article about “Cheyou Zhineng” (smart driving) from reddit, I was inspired to translate the original English text myself and add my own perspective.

This reddit article offers a clear interpretation of Tesla’s AI Day, explaining step by step how Tesla’s products connect to the underlying technologies. The article uses many technical terms; I researched them and worked my understanding of them into the translation. If you spot any omissions or mistakes, corrections and discussion are welcome.

The original author, @cosmacelf, is a technical writer on reddit. The title and link to the original text, “Layman’s Explanation of Tesla AI Day,” can be found here: https://www.reddit.com/r/teslamotors/comments/pcgz6d/laymansexplanationofteslaai_day

Main Text:

Now, on to the translated main text.

Tesla designed its Autopilot system (hereinafter the “AP system”) to work in any location and scenario. This is very different from the approach of many other carmakers, such as Ford, GM, and Mercedes, whose autonomous driving technology depends on pre-built high-precision maps and can therefore be activated only in limited areas (that is, on roads covered by those maps).

Tesla’s AP system uses 8 cameras around the vehicle to perceive the driving environment in real time. Its visual perception and vehicle control system combines a neural network trained with the backpropagation algorithm and complex algorithms written in C++.

This “neural network trained with the backpropagation algorithm” is no different from the neural networks widely used across artificial intelligence, for example in voice assistants (Amazon’s Alexa, Apple’s Siri), movie recommendation systems (Netflix recommendations), and facial recognition (Apple’s Face ID).
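To make “trained with the backpropagation algorithm” concrete, here is a minimal sketch in plain Python. It is not Tesla’s code: the tiny 2-2-1 network, the XOR data, the learning rate, and the function name `train_tiny_network` are all assumptions chosen for illustration. It shows the general idea only: run the network forward, measure the error, push that error backward through the layers with the chain rule, and nudge every parameter a little.

```python
import math
import random


def train_tiny_network(epochs=2000, lr=0.1, seed=0):
    """Train a toy 2-2-1 network on XOR; return (initial_loss, final_loss)."""
    rng = random.Random(seed)
    # Four labelled samples: (inputs, expected output).
    data = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
            ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]
    hidden = 2
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Hidden layer with tanh activation, then a linear output.
        h = [math.tanh(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j])
             for j in range(hidden)]
        out = sum(w2[j] * h[j] for j in range(hidden)) + b2
        return h, out

    def mean_loss():
        return sum(0.5 * (forward(x)[1] - y) ** 2 for x, y in data) / len(data)

    initial = mean_loss()
    n = len(data)
    for _ in range(epochs):
        gw1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gw2 = [0.0] * hidden
        gb2 = 0.0
        for x, y in data:
            h, out = forward(x)
            d_out = out - y  # derivative of the loss w.r.t. the output
            gb2 += d_out
            for j in range(hidden):
                gw2[j] += d_out * h[j]
                # Chain rule: propagate the error back through tanh.
                d_h = d_out * w2[j] * (1.0 - h[j] ** 2)
                gw1[j][0] += d_h * x[0]
                gw1[j][1] += d_h * x[1]
                gb1[j] += d_h
        # Gradient descent step on every parameter.
        for j in range(hidden):
            w2[j] -= lr * gw2[j] / n
            b1[j] -= lr * gb1[j] / n
            w1[j][0] -= lr * gw1[j][0] / n
            w1[j][1] -= lr * gw1[j][1] / n
        b2 -= lr * gb2 / n
    return initial, mean_loss()
```

Calling `initial, final = train_tiny_network()` and printing both values should show the error shrinking as training proceeds. Production networks follow the same loop, only with millions of parameters and far more data.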

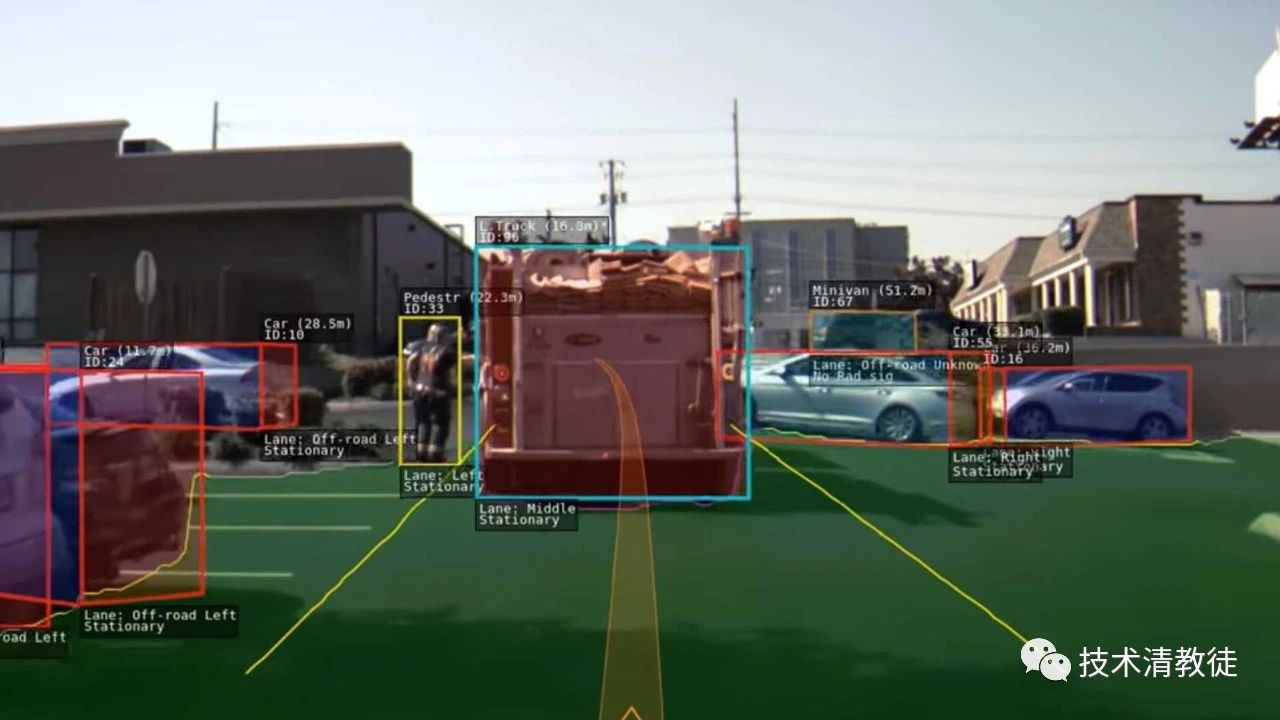

These neural networks resemble the workings of our brain in some ways and differ in others. To train a neural network, people feed it millions of processed samples that tell it explicitly what it needs to recognize and learn. For example, training a network to recognize image content requires hundreds of thousands of annotated images up front, each identifying one or more objects. To train a network to handle complex scenes, engineers need to manually draw boxes around these objects and label them, as shown below:

Once millions of annotated images are available, training can begin. Training a neural network is computationally intensive and often requires a GPU cluster. Even on a huge GPU cluster, a training run can still take several days, which illustrates the enormous computing power needed to train a sufficiently useful neural network.

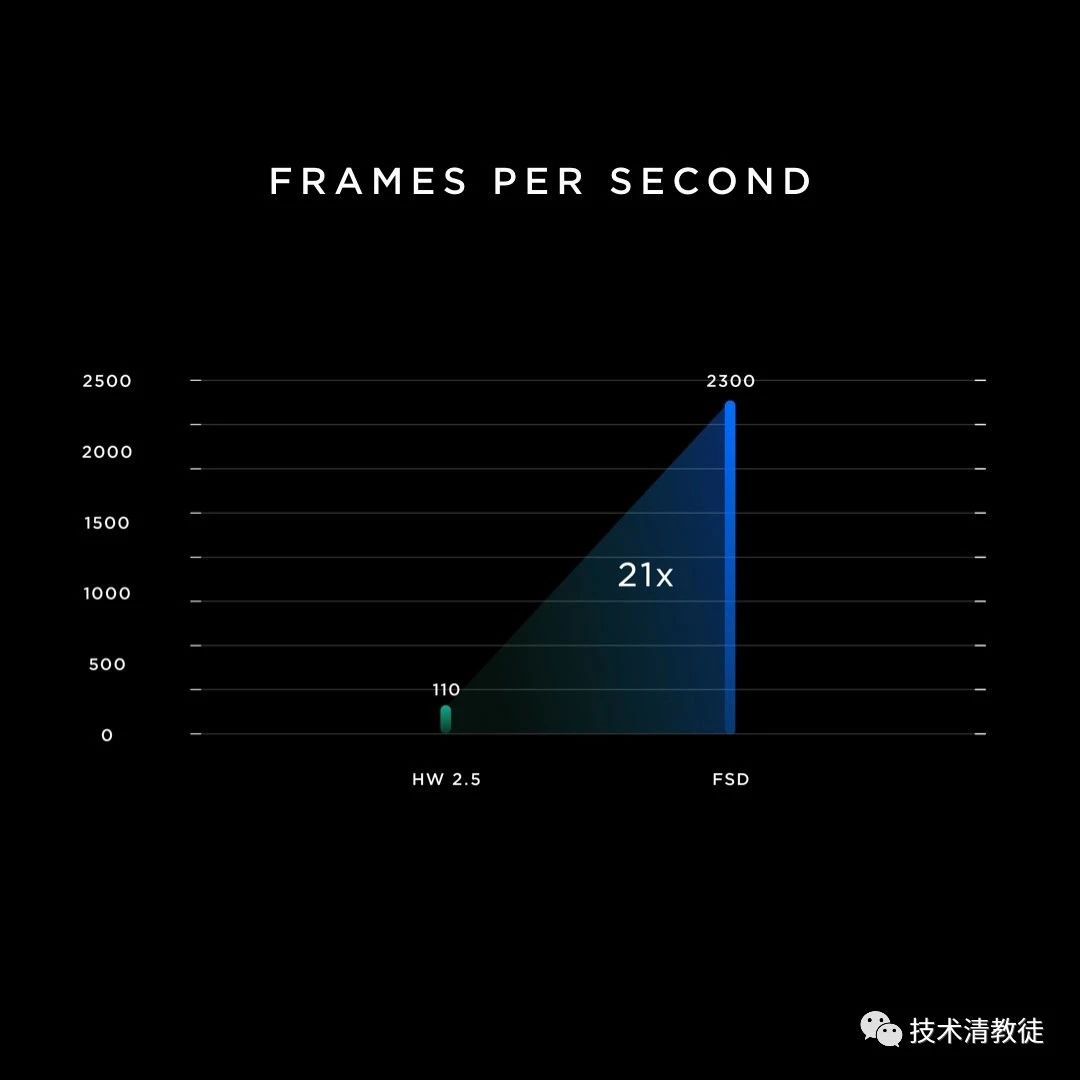
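An annotated training image of the kind described above can be pictured as an image plus a list of labelled boxes. The sketch below is one possible representation, not a real dataset format: the class names, field names, file name, image size, and coordinates are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BoundingBox:
    label: str   # what the annotator says is inside the box, e.g. "car"
    x_min: int   # pixel coordinates of the box corners
    y_min: int
    x_max: int
    y_max: int

    def area(self) -> int:
        return max(0, self.x_max - self.x_min) * max(0, self.y_max - self.y_min)


@dataclass
class AnnotatedImage:
    image_path: str
    width: int
    height: int
    boxes: List[BoundingBox]

    def labels(self) -> List[str]:
        """Distinct object labels present in this image, sorted."""
        return sorted({box.label for box in self.boxes})

    def is_valid(self) -> bool:
        """True if every box has positive area and lies inside the image."""
        return all(
            0 <= b.x_min < b.x_max <= self.width
            and 0 <= b.y_min < b.y_max <= self.height
            for b in self.boxes
        )


# One hand-labelled frame (all values invented for illustration).
sample = AnnotatedImage(
    image_path="frame_000123.png",
    width=1280,
    height=960,
    boxes=[
        BoundingBox("car", 400, 500, 700, 720),
        BoundingBox("pedestrian", 900, 480, 960, 640),
    ],
)
```

A training set is then simply a very large list of such records, which is why manual labelling is so labor-intensive.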

Training produces a neural network with millions of internal parameters, all generated automatically during the training process. This network can then be loaded onto a smaller inference processor for scene analysis or other designated tasks. Analyzing a scene and identifying the content of each image frame typically takes only a few milliseconds. Tesla has developed its own AI inference chip, and each Tesla vehicle on the road is equipped with two of them.

Tesla vehicles produced after 2020 are equipped with Hardware 3.0, the company’s first self-developed chip for automated driving, which can process 2,300 frames per second. The previous generation, Hardware 2.5, is far slower at only 100 frames per second.

Elon Musk once said that every user of the AP system is helping Tesla train its neural network. This statement is only partially correct. A vehicle running the AP system does not learn anything new; it simply uses the neural network it has already downloaded to analyze its surroundings. What actually helps training is that, when Tesla needs videos of specific scenarios, vehicles can save a 10-second video clip and upload it to Tesla’s supercomputer center.
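The frame rates quoted above translate into a time budget per frame with simple arithmetic. The sketch below uses only the two figures from the text; splitting the throughput evenly across the 8 cameras is an assumption made purely for illustration, and the function names are made up.

```python
HW3_FPS = 2300   # frames per second, figure quoted in the text
HW25_FPS = 100   # frames per second, figure quoted in the text


def per_frame_budget_ms(frames_per_second: float) -> float:
    """Average time available to process one frame, in milliseconds."""
    return 1000.0 / frames_per_second


def per_camera_fps(total_fps: float, cameras: int = 8) -> float:
    """Frames per second per camera, ASSUMING an even split across cameras."""
    return total_fps / cameras


hw3_ms = per_frame_budget_ms(HW3_FPS)     # roughly 0.43 ms per frame
hw25_ms = per_frame_budget_ms(HW25_FPS)   # 10 ms per frame
speedup = HW3_FPS / HW25_FPS              # 23x
```

Under that even-split assumption, the quoted figures work out to about 287.5 frames per second per camera on Hardware 3.0 versus 12.5 on Hardware 2.5.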

For example, suppose Tesla engineers want to improve how the system perceives and predicts lane changes and sudden cut-ins by other vehicles. They write a request that is sent to every Tesla on the road worldwide, asking each vehicle to save a video clip of about 10 seconds whenever it encounters such a scenario and upload it to Tesla’s supercomputing center. Within a few days, Tesla might collect 10,000 such videos. Because every clip was captured precisely because it contains a lane change, the clips are effectively “automatically labeled”. Tesla can then use them to train neural networks. The network finds and learns the cues in the video by itself, such as another vehicle turning on its turn signal, starting to drift, or angling its front end slightly. This is the advantage of giving neural networks labeled data: they learn to recognize patterns, in effect writing the program themselves, with little or no human intervention.
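The collection request described above can be sketched as a trigger plus a rolling video buffer. This is a simplified illustration, not Tesla’s software: the `Campaign` and `ClipRecorder` classes, the `neighbor_lateral_offset_m` signal, the 0.5 m threshold, and the 10 frames per second rate are all hypothetical. The clip here contains only footage leading up to the trigger, which is another simplification.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List

Frame = Dict[str, float]  # hypothetical per-frame signals from the car


@dataclass
class Campaign:
    label: str                        # label attached to every saved clip
    trigger: Callable[[Frame], bool]  # condition the engineers asked for
    clip_frames: int                  # clip length, in frames


@dataclass
class Clip:
    label: str
    frames: List[Frame]


@dataclass
class ClipRecorder:
    campaign: Campaign
    clips: List[Clip] = field(default_factory=list)
    buffer: Deque[Frame] = field(init=False)

    def __post_init__(self) -> None:
        # Rolling buffer that always holds the most recent frames.
        self.buffer = deque(maxlen=self.campaign.clip_frames)

    def on_frame(self, frame: Frame) -> None:
        self.buffer.append(frame)
        full = len(self.buffer) == self.buffer.maxlen
        if full and self.campaign.trigger(frame):
            # The clip is "automatically labeled": the trigger that saved
            # it already says what it contains.
            self.clips.append(Clip(self.campaign.label, list(self.buffer)))
            self.buffer.clear()  # avoid saving overlapping clips


def simulate_drive() -> List[Clip]:
    """Feed 15 s of invented signals (10 fps) through the recorder."""
    campaign = Campaign(
        label="cut_in",
        trigger=lambda f: f["neighbor_lateral_offset_m"] < 0.5,
        clip_frames=100,  # about 10 seconds at the assumed 10 fps
    )
    recorder = ClipRecorder(campaign)
    for i in range(150):
        offset = 3.0 if i < 120 else 0.3  # neighbor cuts in at t = 12 s
        recorder.on_frame({"t": i / 10.0, "neighbor_lateral_offset_m": offset})
    return recorder.clips
```

In this simulated drive, `simulate_drive()` returns a single clip labelled `cut_in`, ready to be uploaded as a training sample without any human annotation.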

After retraining, Tesla deploys the new neural network to all vehicles and uses shadow mode to observe how accurately it predicts other vehicles’ lane changes. While shadow mode is running, if another vehicle changes lanes without the network having predicted it, or the network predicts a lane change that never happens, the corresponding video clip is marked “inconsistent”. These “inconsistent” clips are uploaded to Tesla’s supercomputing center, and a new neural network is trained on them. Tesla then deploys the updated network to the vehicles and continues to observe prediction accuracy in shadow mode. The cycle repeats until the network predicts other vehicles’ behavior accurately enough, at which point that version is released as a formal OTA firmware update.

When Tesla claims that the size of its fleet is the real secret weapon that sets it apart from its competitors, it is not just boasting. Tesla can call on more than one million vehicles worldwide, along with the AI chips inside them, to help train its neural networks. In that sense, Tesla has the world’s largest AI supercomputer cluster to date.
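The shadow-mode comparison described above boils down to checking each prediction against what actually happened. The sketch below is an illustration under stated assumptions: the record fields, the category names `missed` and `false_alarm`, and the 0.99 release threshold are all hypothetical, not values from Tesla.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ShadowObservation:
    clip_id: str
    predicted_lane_change: bool  # what the network predicted
    actual_lane_change: bool     # what the other vehicle really did


def classify(obs: ShadowObservation) -> str:
    if obs.predicted_lane_change == obs.actual_lane_change:
        return "consistent"
    if obs.actual_lane_change:
        return "missed"       # lane change happened but was not predicted
    return "false_alarm"      # lane change was predicted but never happened


def select_for_upload(observations: List[ShadowObservation]) -> List[ShadowObservation]:
    """Only the "inconsistent" clips are sent back for retraining."""
    return [o for o in observations if classify(o) != "consistent"]


def accuracy(observations: List[ShadowObservation]) -> float:
    if not observations:
        return 0.0
    hits = sum(classify(o) == "consistent" for o in observations)
    return hits / len(observations)


def ready_for_release(observations: List[ShadowObservation],
                      threshold: float = 0.99) -> bool:
    """Hypothetical release gate: stop iterating once accuracy is high enough."""
    return accuracy(observations) >= threshold


observations = [
    ShadowObservation("a", True, True),
    ShadowObservation("b", False, True),   # missed
    ShadowObservation("c", True, False),   # false alarm
    ShadowObservation("d", False, False),
]
to_upload = select_for_upload(observations)  # clips "b" and "c"
```

Each retraining round should shrink the upload list; once `ready_for_release` holds, the loop ends and the network can ship in an OTA update.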

In the following section, we will specifically explain how Tesla’s neural network works.

(To be continued)

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.