Author: Michelin

Recently, “assisted driving” and “automatic driving” have become sensitive terms.

Are the names accurate? Are the functions reliable? Is the marketing appropriate? These controversies arise because we are in the era of “human-machine co-driving.”

What is human-machine co-driving?

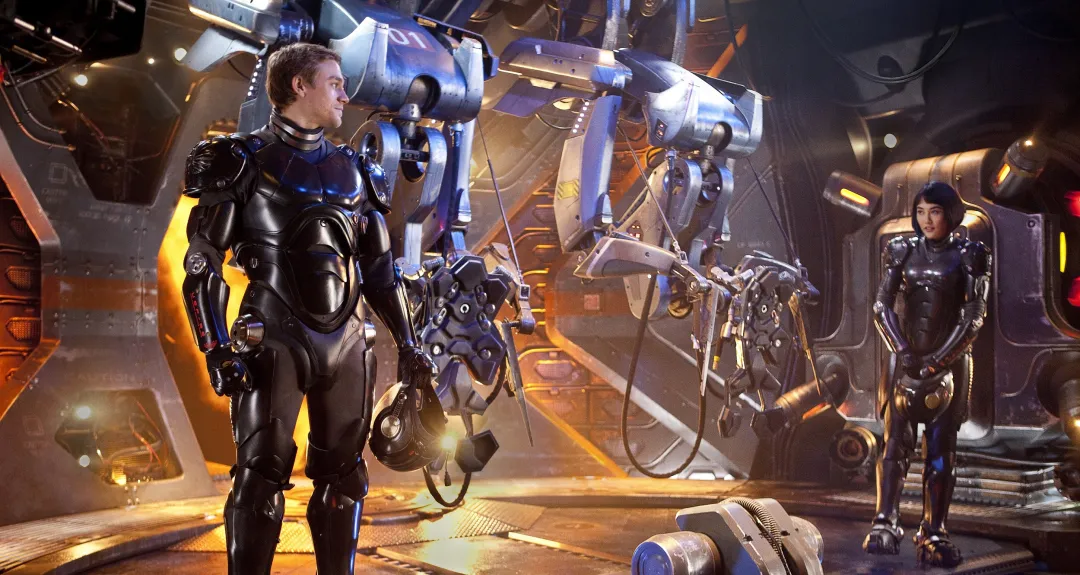

In the traditional automotive era, drivers had 100% control of the vehicle. With the advancement of intelligent technology, the system has begun to take over driving in some scenarios, while humans remain responsible for observing the environment and taking back control when danger arises. During this period, humans and the system are like partners piloting the same mecha. Until fully automated driving arrives and we can hand control over completely to the system “driver,” we have no choice but to face human-machine co-driving.

In “Pacific Rim,” each mecha is piloted jointly by two people, and the partners must slowly build tacit understanding, coordinated movements, and even a shared mind by spending time and training together.

Cars have slowly evolved from cold tools into intelligent partners for their drivers. As with any new partner, the two must pass through initial unfamiliarity and gradual adaptation before a tacit understanding develops.

Throughout this process, we need to adapt to various situations: when the machine encounters something it cannot handle, the driver needs to take control promptly. The smoother and more seamless the handover of driving control, the better the driving experience. After all, a one-second delay on a busy highway can have serious consequences.

Tacit understanding between people can be built through long-term interaction and adaptation, but the tacit understanding between people and systems cannot be achieved simply by spending more time driving. More importantly, it requires technology to build a bridge of communication between the system and the human so that each can perceive the other.

The cockpit, which is in close contact with both the human and the system, provides this bridge.

Human-machine co-driving also has its own “Maslow’s needs”

People communicate with one another through speech, text, actions, and expressions. Communication between humans and systems works the same way. In the cockpit, people use their eyes to read indicator lights, the instrument panel, and on-screen prompts, use their ears to hear warning sounds, and rely on the car’s warning systems to detect what is not easily noticed from the driver’s seat. At the same time, the driver controls the assisted driving system through the buttons, touch screens, steering wheel, and brake pedal in the cockpit.

Faced with complex and diverse data and information, which information is key? Which is the most important?

When talking about human needs, we often mention Maslow’s hierarchy of needs:

Human needs are like a pyramid, ranging from physiological needs to self-actualization. Higher levels of need emerge once the more basic ones are met. Once fed and clothed and in no immediate danger, individuals begin to seek social needs such as love, friendship, and family; when emotional needs are fulfilled, the need for recognition and respect from others arises…

Similarly, in human-vehicle interaction, various needs similar to Maslow’s hierarchy of needs exist.

The most basic physiological need in human-vehicle interaction is driving, i.e., how people and the vehicle system work together to operate the car. If this need is not satisfied, it can hardly be called human-vehicle interaction; it’s just a person driving by themselves.

Once the car is in motion, the most critical need for human, vehicle, and system is safety.

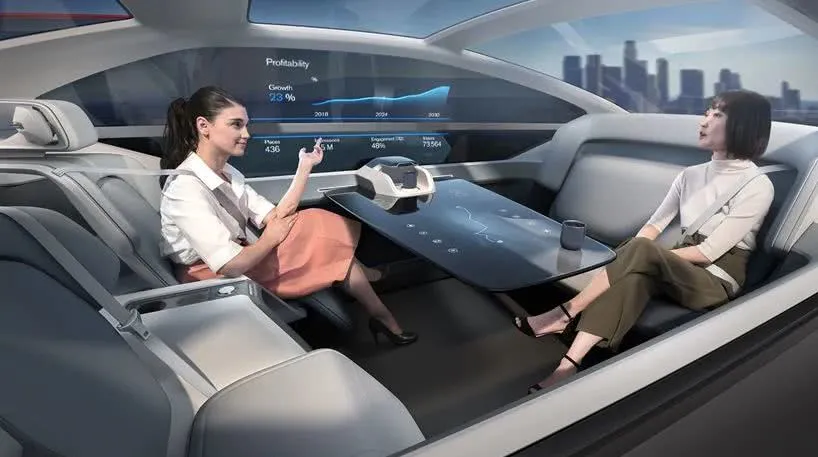

With safety needs met, our inherent laziness takes over, and we hope that the autonomous driving system can operate more efficiently and help us save time and energy, until it can drive the car entirely for us. Meanwhile, our hands and eyes, having been liberated by the driving system, begin to seek pleasure and anticipate a pleasant travel experience in the cabin. This is the need for emotional satisfaction.

These different stages of needs are reflected in the cabin’s functions.

Any form of driving that does not prioritize safety is rogue behavior

Safety is a fundamental need shared by human, vehicle, and system, and it is a critical element that runs through the whole process of human-vehicle interaction. Regardless of whether we pursue speed and excitement, or attentive service and experience, they are all based on safety.

As partners in human-machine co-driving, while we worry, “Is the assisted driving function reliable?”, the vehicle system may be asking the same question of us: “Is the driver reliable? Is he or she ready to take over at any time?”

Therefore, in the complex exchange of data between people and the system, safety-related interaction is given top priority and has become the main focus for automakers.
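One way to picture “safety first” in that flow of information is a priority queue. The sketch below is only an illustration, not any automaker’s implementation: the `Need` levels borrow the hierarchy described above, while the `CabinMessageQueue` class, the ordering of the levels below safety, and the sample messages are all assumptions.

```python
import heapq
from enum import IntEnum


class Need(IntEnum):
    """Hypothetical priority levels; a lower value is delivered first."""
    SAFETY = 0
    DRIVING = 1
    EFFICIENCY = 2
    EMOTION = 3


class CabinMessageQueue:
    """Assumed helper that hands messages to the driver in order of need."""

    def __init__(self):
        self._heap = []
        self._count = 0  # tie-breaker: first in, first out within one level

    def push(self, need, text):
        heapq.heappush(self._heap, (int(need), self._count, text))
        self._count += 1

    def pop(self):
        _, _, text = heapq.heappop(self._heap)
        return text

    def __len__(self):
        return len(self._heap)


queue = CabinMessageQueue()
queue.push(Need.EMOTION, "New playlist ready")
queue.push(Need.SAFETY, "Take over now")
queue.push(Need.EFFICIENCY, "Faster route found")

delivered = [queue.pop() for _ in range(len(queue))]
print(delivered)
# ['Take over now', 'Faster route found', 'New playlist ready']
```

Whatever order the messages arrive in, the safety prompt is the first one the driver sees.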

Basic Version: Keep the Driver’s Hands on the Wheel

Let’s take a look at how automakers currently address these issues. When you first turn on the XPeng NGP system, it forces you to watch a 4-minute “teaching video,” followed by a quiz similar to the driver’s license test. The video and the quiz together ensure that users learn how to use NGP. Beyond instructional videos, car companies also use more forceful measures such as steering wheel warnings: sensors on the steering wheel ensure that the driver keeps their hands on it while the “Assisted Driving” function is enabled.

Of course, the same steering wheel warning can reflect different strategies at different car companies. The HOD (hands-off detection) in GAC Aion’s ADiGO 3.0 uses a capacitive sensor in the steering wheel that is sensitive enough for a light touch to register. The system also tolerates brief hands-off moments, sounding an alarm only a few seconds after the driver’s hands leave the wheel.

In contrast, XPeng NGP’s steering wheel warning is more “conservative.” The steering wheel uses a torque sensor, and the driver must apply some steering force for their presence to register. Even while using the assisted driving function, the driver must stay in a driving posture, which makes it easy to take over at any time. In terms of user experience, though, this mode can be a bit “tiring.”
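The difference between the two strategies is easy to see in a toy model. This is a rough sketch under stated assumptions, not either company’s actual logic: the `WheelSample` type, the 3-second grace period, and the 0.3 N·m torque threshold are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class WheelSample:
    """One hypothetical steering wheel reading."""
    t: float          # timestamp in seconds
    touching: bool    # capacitive sensor reports skin contact
    torque_nm: float  # steering torque applied by the driver


def capacitive_alarm(samples, grace_s=3.0):
    """Alarm once the hands have been off the wheel for more than grace_s."""
    hands_off_since = None
    for s in samples:
        if s.touching:
            hands_off_since = None  # any light touch resets the timer
        elif hands_off_since is None:
            hands_off_since = s.t
        elif s.t - hands_off_since > grace_s:
            return True
    return False


def torque_alarm(samples, min_torque_nm=0.3, grace_s=3.0):
    """Alarm once no steering force has been felt for more than grace_s."""
    idle_since = None
    for s in samples:
        if abs(s.torque_nm) >= min_torque_nm:
            idle_since = None  # only real steering force resets the timer
        elif idle_since is None:
            idle_since = s.t
        elif s.t - idle_since > grace_s:
            return True
    return False


# A driver resting a hand lightly on the wheel for five seconds without steering.
resting = [WheelSample(t=float(i), touching=True, torque_nm=0.0) for i in range(6)]
print(capacitive_alarm(resting))  # False
print(torque_alarm(resting))      # True
```

The same relaxed grip passes the capacitive check but trips the torque check, which is why the torque approach feels more tiring.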

Advanced Version: Whose Little Eyes Aren’t Watching the Road?

Steering wheel warnings can only ensure that the driver’s hands are on the wheel. Whether the driver is looking down at a phone or watching the vehicle changing lanes ahead, they cannot tell. This is where the cabin’s “core eye” comes in, integrating automated driving more closely with the cabin.

At CES 2020, BMW unveiled the BMW i Interaction EASE intelligent cabin. It uses an eye-tracking system to follow the passenger’s line of sight and recognize the object the passenger is looking at, so the car knows where the user’s gaze falls.

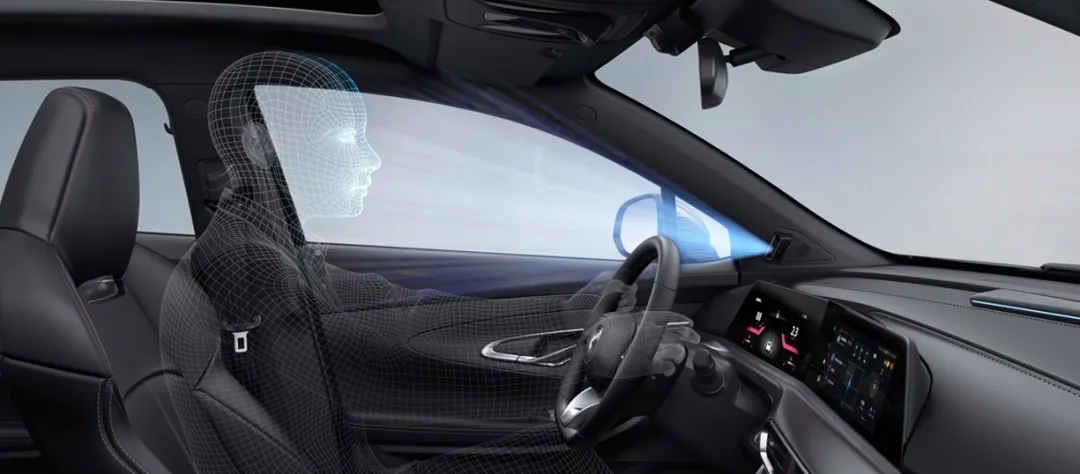

In a concept cabin, the eye-tracking function may sound cool but distant, yet the technologies behind it, such as sensors, AI, 5G, and cloud computing, are already used in cabins. Combined, these technologies make up the cabin’s DMS (driver monitoring system).

By collecting information on the driver’s facial expressions, movements, and gestures through cockpit cameras, infrared sensors, and other sensors, the driver monitoring system (DMS) can determine whether the driver is distracted, fatigued, sleepy, or even unable to drive. DMS was initially used to address fatigued driving, and commercial vehicles in China, a “high-risk zone” for fatigued driving, must be equipped with it. When combined with advanced driver assistance systems (ADAS) and autonomous driving (AD), however, DMS clearly achieves a “1+1>2” effect.


When assisted driving is turned on, a relaxed driver may be distracted by a phone or the scenery, or may grow tired and drowsy, and may not react in time when a sudden situation requires them to take control. Here, DMS can use visual monitoring to check that the driver remains focused.

Drivers may try to trick steering wheel sensors by using a mineral water bottle instead of their hands, but it is difficult to hide their gaze and expressions.

For example, Geely’s Xingyue activates DMS when the vehicle’s speed exceeds 50 km/h. It captures facial expressions through cameras and issues warnings, such as sounds and steering wheel vibrations, when the driver yawns, closes their eyes, or dozes off. Guangzhou Automobile Group’s EA midsize electric SUV captures driver behavior through a camera on the steering wheel and sounds an alarm when the driver smokes or falls asleep. The NIO ES6 and ES8 also integrate DMS cameras in their rearview mirrors.
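The speed-gated behavior described above boils down to a simple rule. Here is a minimal sketch of it; the 50 km/h threshold and the two warning types come from the example above, while the function name, the event labels, and the idea of evaluating one camera frame at a time are assumptions for illustration.

```python
DMS_MIN_SPEED_KMH = 50  # activation threshold from the example above

# Hypothetical labels a camera pipeline might emit for one frame.
DROWSY_EVENTS = {"yawn", "eyes_closed", "dozing"}


def dms_warnings(speed_kmh, events):
    """Return the warnings to issue for one camera frame."""
    if speed_kmh <= DMS_MIN_SPEED_KMH:
        return []  # monitoring is not active at or below the threshold
    if DROWSY_EVENTS & set(events):
        return ["sound", "steering_wheel_vibration"]
    return []


print(dms_warnings(80, ["yawn"]))  # ['sound', 'steering_wheel_vibration']
print(dms_warnings(30, ["yawn"]))  # []
```

A real system would track events over time rather than frame by frame, but the gate itself is this simple: below the threshold speed, even a yawn triggers nothing.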

At present, however, DMS cameras are mainly used for face ID and fatigue monitoring. Accurately detecting subtle facial expressions and eye movements remains difficult.

Ultimate Version: Independent Thinking and Prevention

Whether it is the basic version’s hands-off detection or the advanced version’s eye and motion capture that alerts drowsy drivers, both warn users only after a danger signal has appeared, leaving them to notice and handle it. This is like “treating the head when the head aches and the foot when the foot hurts.”

How do we prevent danger before it happens?

This requires the cabin to have some “independent thinking” ability.

Many technology start-ups focus on drivers’ micro-expressions. For example, Israeli sensor company Cipia, using computer vision and AI, has found that subtle facial changes appear when people are only slightly sleepy, when they are daydreaming, and before they yawn or start to doze off. That means the system can know you’re tired before you start to yawn or nod off.

Imagine this: the system turns on the car’s fragrance diffuser and releases a “meditation” scent, plays some lively rock music, and adds steering wheel vibration and flashing lights to keep you alert.

Even if you wear sunglasses, it’s hard to escape the “all-seeing eye” of the infrared sensor and camera.

Of course, this ability to read expressions and gestures rests on a more proactive cabin. It can not only gather the user’s expressions and movements along with external road conditions, but also combine the data in advance, work out the logic connecting them, and draw conclusions about potential danger. The system can even make the judgment itself and activate “stay alert” mode for you.

Don’t underestimate this small step; it takes much more than an increase in the system’s “IQ.” In moving from the “baby” mode of handing anything it cannot process over to the driver to processing information and making decisions by itself, the system begins to learn to think independently. It is also at this stage that the human-machine co-driving system evolves from a tool into an assistant, and even into the user’s friend.

You might ask: what if the safety warning system is foolproof, but someone turns on Tesla’s AP system and goes to sleep in the back seat?

For those who won’t play by the rules, systems like General Motors’ Super Cruise can disengage and apply automatic braking after three warnings, handing the driver a straight “red card.”

## Human-Machine Co-driving: Tool, Assistant, and Friend

People often say, “Laziness drives technological progress,” and the same is true for human-machine co-driving.

When the basic need for safety is met, just as when people are fed and warm, the instinct of “laziness” begins to awaken: if you can lie down, you won’t sit; if you can speak, you won’t lift a finger; if one command will do, you won’t want to say more…

Over the past two years, car companies’ voice systems have gradually evolved into intelligent voice assistants that are not only smart but also offer “personified,” thoughtful service, as a human assistant would.

BMW described this scenario when announcing BMW Natural Interaction:

Infrared light sensors in the cabin can accurately capture finger movements. Point your index finger at a window, and it opens or closes according to the direction your finger slides. This ability to manipulate objects remotely also extends outside the car: point at a place outside and ask, “How is this restaurant rated?” and the system can provide information about it. It is a bit like “grabbing objects from afar” or the “Six-Meridian Divine Sword” of martial arts novels.

To meet people’s need for spoken interaction, voice interaction has evolved from single-round to multi-round exchanges in which the system keeps track of context as it answers. Perhaps once the system can integrate more information and remember users’ daily habits, interaction will become simple again: you just say “I’m hungry,” and it prepares a list of restaurants you like. Once you choose one, the system plans the route, finds a parking space, and even books a table for you by phone.

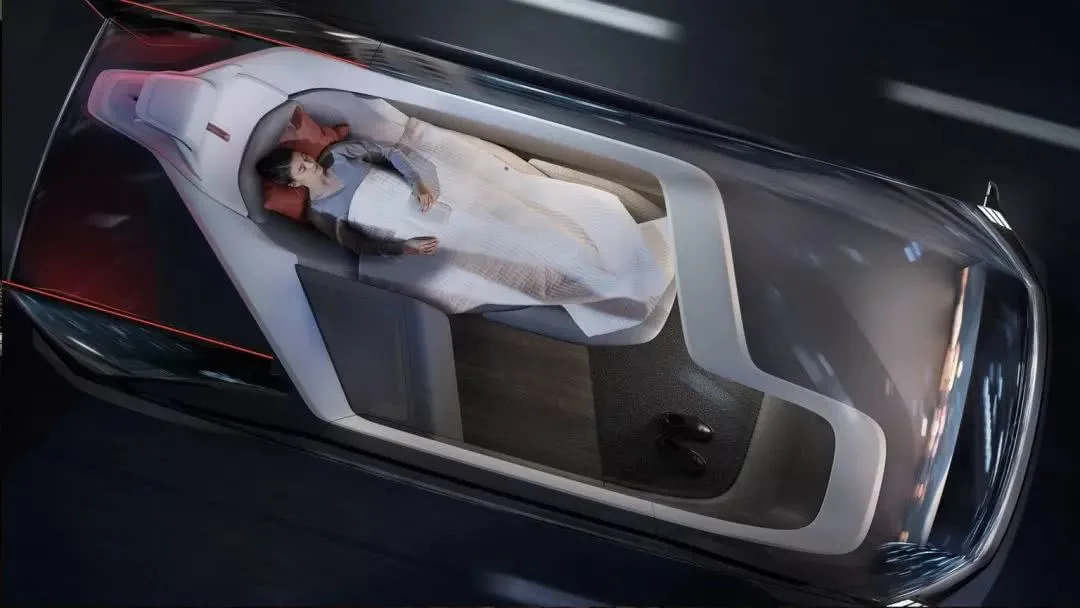

One day, the system may even proactively anticipate the user’s needs and push services automatically. When you enter the cabin, the system has already prepared several recommended modes based on past habits and data. You only need to tap once to choose “Sleeping Cabin” or “Business Cabin.”

Or, can Musk just send a Tesla Bot to help you drive?

Finally

When we hear “autonomous driving,” we often picture a wise and powerful Transformer. However, the autonomous driving systems evolving today are far from that powerful.

During its evolution, we need to go through a long “human-machine co-driving” stage. At this stage, the system is like a new game account that starts with zero skills and levels up gradually, and we have to adapt to it step by step. At the beginning, humans must tolerate, adapt to, and comply with its habits; as the system grows stronger, it provides more and more driving assistance, frees up more time and space for the driver, and offers more considerate service and emotional companionship. When the system can one day drive the vehicle entirely by itself, will we no longer need the “human-machine co-driving” stage?

Not necessarily, as humans still tend to hold decision-making power in their own hands.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.