Author: LYNX

It has been two years since Tesla’s “Autonomy Day” in 2019.

On that day, Tesla CEO Elon Musk made countless optimistic predictions about the future of autonomous driving technology. The most famous of them was that by mid-2020, there would be one million Tesla autonomous vehicles on the road and people could “even sleep in the car while driving.”

Now, over two years later, the completely rewritten FSD system is still in internal testing in the United States. At Tesla’s AI Day held on August 19, Musk did not mention his ambitious goals from that day, but instead shifted his focus to a more sci-fi target: creating robots.

The most exciting moment of Tesla AI Day was the robot’s debut, staged for the audience like a Japanese tokusatsu show. After a long stretch of sleep-inducing FSD development details and Dojo supercomputer chip specs, Musk abruptly changed the subject: “With such powerful sensors and data processing chips, we may develop a prototype like this next year.”

Then an actor dressed in black and white appeared on the stage, spinning and dancing wildly. Standing next to him, Musk joked, “This dancing guy is clearly not a real robot, but Tesla Bot will be.”

Musk himself did not go into much detail about the robot. He only briefly outlined its characteristics in a few slides:

In terms of “physical ability,” the Tesla Bot is about 1.73 meters tall, weighs about 56.7 kilograms, can carry up to 20 kilograms of weight, and can lift up to 4.5 kilograms with a single arm. It can run at a speed of 8 kilometers per hour.

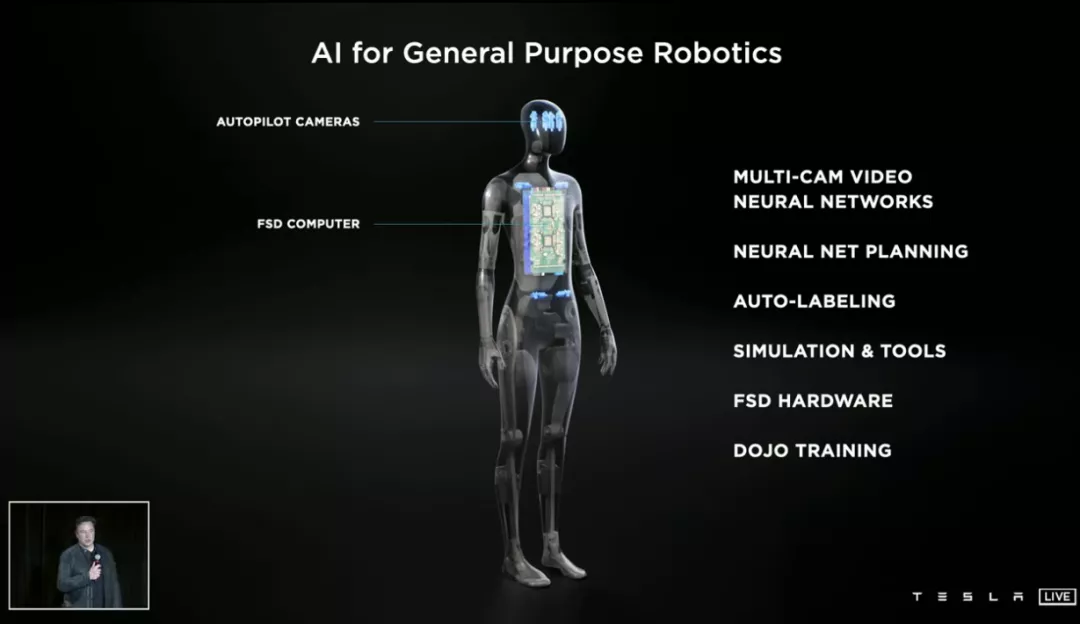

In terms of “appearance,” the robot clearly has human-like limbs. Its body is made of lightweight materials and driven by 40 electromechanical actuators, and its head even has a “face”: a display screen that shows information.

Take Tesla Bot apart and you find all sorts of Tesla technology packed inside: Autopilot cameras serve as its “eyes,” the FSD chip forms its “brain,” and neural network algorithms, the data simulation system and Dojo’s supercomputing power make up its “soul.”

Musk said that the prototype of Tesla Bot will be launched as early as next year.

As for why it can be done and why it should be done, Musk explained that “Tesla already has almost all the parts and technologies necessary to manufacture humanoid robots, and Tesla vehicles themselves are like robots on wheels.” The goal of creating Tesla Bot is to “serve people, be friendly to people, and free them from dangerous, repetitive, and boring work.”

“At the very least, it should be able to handle simple tasks like going to the supermarket to buy groceries,” Musk said.

As soon as Tesla Bot was unveiled, headlines once again declared “Musk confirms himself as the modern Iron Man,” but deep down I suspect we all have a few doubts: can this thing really be built next year? Or is Musk just making grandiose promises again?

Musk’s own words at the event offer some clues. He candidly admitted that “it may not work,” and immediately added that he hoped Tesla Bot would spark enthusiasm and encourage people to join Tesla.

It should be noted that Tesla AI Day is actually a series of technical talks held in California, and its more important purpose is to recruit machine learning talent.

Well, before Tesla makes robots, the more important work is probably to recruit people.

As mentioned at the beginning of the article, Tesla Bot was the most refreshing part of Tesla AI Day, yet it took up less than 20 minutes of the two-and-a-half-hour event.

The real substance of the event still revolved around the development of Tesla’s FSD autonomous driving technology, its neural network training practices, and the computing power of the Dojo supercomputer chip.

And the reason why the most eye-catching Tesla Bot was introduced first…

In the spirit of speaking plainly so that everyone can follow, let’s start with the event’s most important piece, the D1 chip, and try to explain what actually lies behind the vague praise that Tesla is “awesome.”

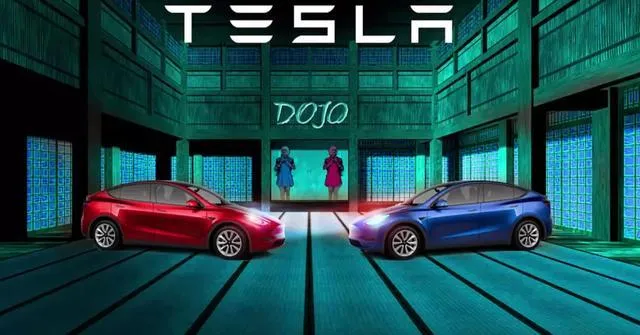

Those familiar with Tesla and autonomous driving will have heard of the Dojo supercomputer, but for the many consumers who don’t follow technology, the name may be completely unfamiliar.

Dojo, named after the Japanese word “dōjō” (a hall for martial arts training), is a supercomputer used to train Tesla’s autonomous driving capabilities. Data from over one million Tesla cars is gathered in this “dōjō,” helping Tesla’s FSD autonomous driving technology keep improving.

Still feeling confused? Let’s use three things for comparison:

The first one is from the movie “The Matrix.” Lying in the real world with a plug in the back of his head, the protagonist Neo has combat training programs uploaded straight into his brain. He then opens his eyes and says the famous line to Morpheus: “I know kung fu.”

The second one is from the British show “Sherlock.” Whenever Sherlock Holmes (played by Benedict Cumberbatch) faces a problem, he retreats into his “mind palace.”

The third one brings us back to reality. After AlphaGo defeated the world’s top human Go players, it moved on to “self-learning”: playing Go against itself to reach an even higher level.

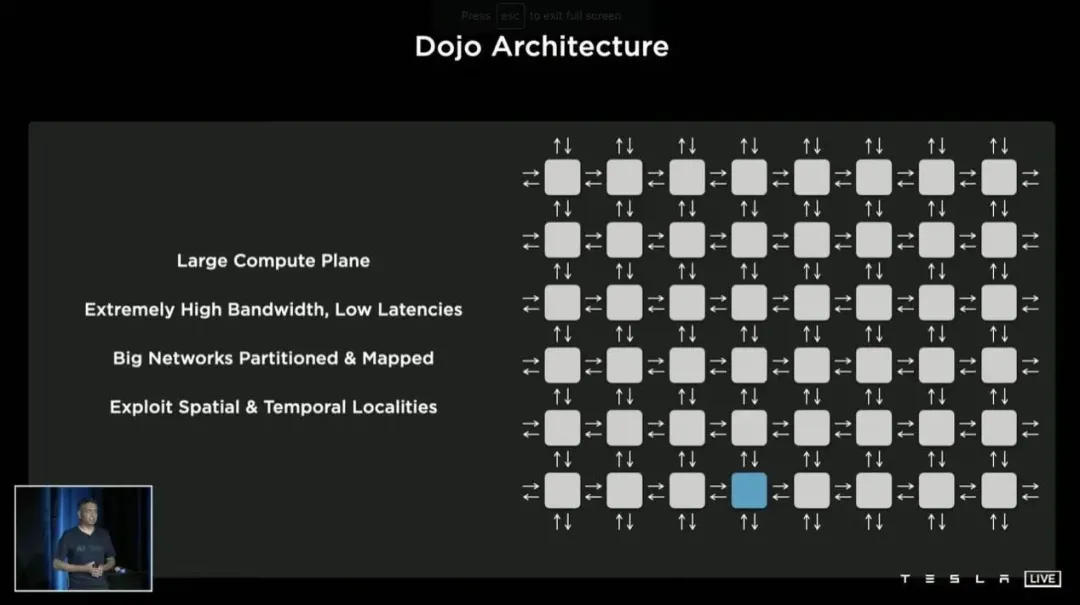

The logic of Tesla’s Dojo supercomputer is similar to these three cases. It collects the massive amount of road data produced by Tesla’s vehicles and feeds it into an AI “brain,” which simulates all kinds of traffic situations in its “mind” to exercise and optimize its autonomous driving capabilities.

This is also the underlying logic behind Musk’s insistence on “visual recognition” rather than “lidar + high-precision maps” for autonomous driving. The goal is for vehicles to “learn to drive by themselves,” not to “drive automatically” by following map routes and lidar feedback.

That is achieved by another fancy term: “neural network calculation”.

Well, before we formally introduce the D1 chip, we run into another hurdle: what exactly is “neural network calculation”?

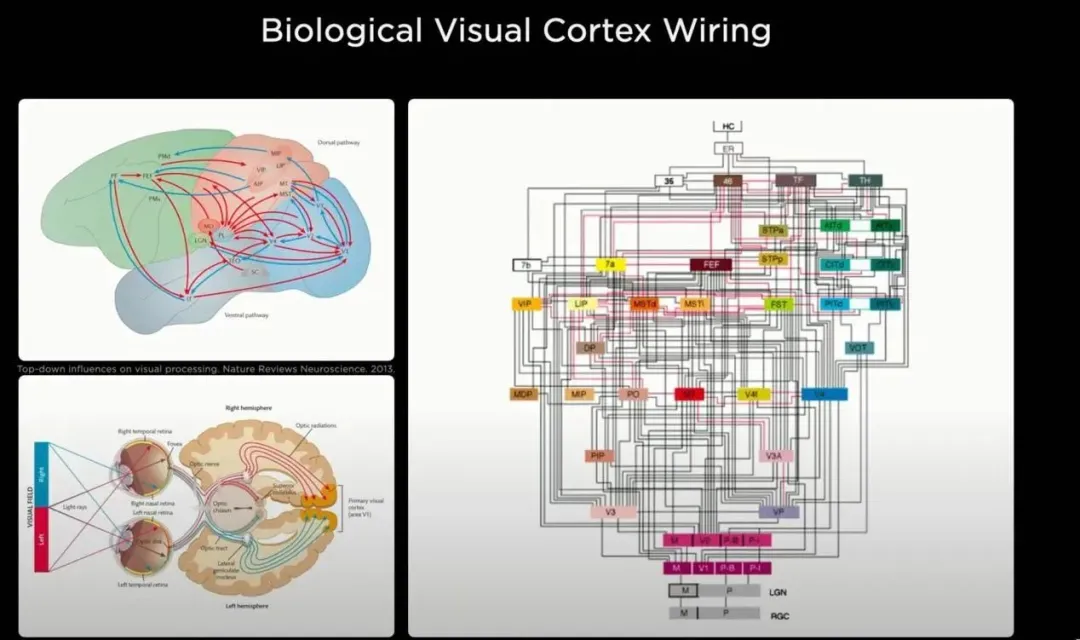

In plain language, we can think of the 8 cameras on a Tesla as the car’s eyes. They collect the video and image data that let the car “see” the surrounding traffic.

The problem is that although the “eyes” can see, the car may not be “intelligent” enough to understand what it sees. It might not be able to tell whether an object is a traffic cone or a construction vehicle, for example. For that, it needs neurons and a brain.

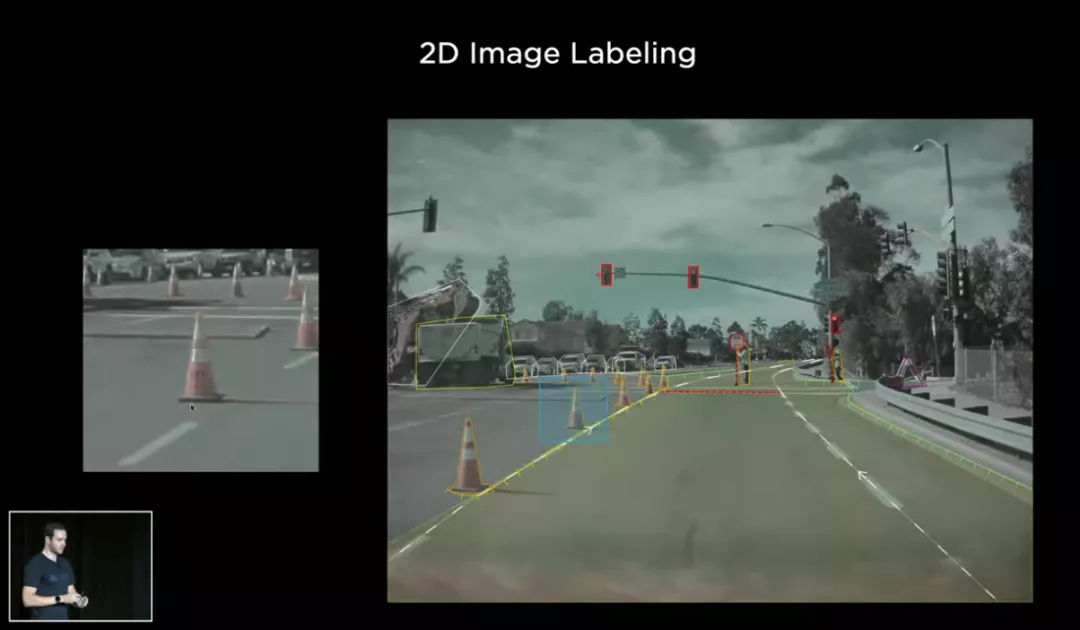

This brings us to another term in the field of artificial intelligence: “supervised learning” – the data needs to be manually labeled before it is given to the algorithm for training.

The most basic step is to have people manually outline objects that commonly appear in driving videos, such as motor vehicles, non-motor vehicles, pedestrians and traffic lights.
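To make “supervised learning” more concrete, here is a minimal Python sketch. It is not Tesla’s code. A single artificial neuron (logistic regression) learns to tell “vehicle” from “pedestrian” using nothing but a handful of hand-labeled examples. The features (object height and width in meters), the labels and all the numbers are invented for illustration.

```python
import math

# Hypothetical hand-labeled training data: ((height_m, width_m), label)
# label 1 = "vehicle", label 0 = "pedestrian". All values are invented.
LABELED_DATA = [
    ((1.5, 1.8), 1),
    ((1.6, 2.0), 1),
    ((3.0, 2.5), 1),
    ((1.7, 0.5), 0),
    ((1.8, 0.6), 0),
    ((1.2, 0.4), 0),
]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(weights, bias, features) -> float:
    """Return the neuron's estimated probability that the object is a vehicle."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)


def train(data, epochs=5000, lr=0.1):
    """Fit one neuron (logistic regression) with stochastic gradient descent on log-loss."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # For log-loss, the gradient with respect to the neuron's input z is (prediction - label).
            error = predict(weights, bias, features) - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


weights, bias = train(LABELED_DATA)

# Usage: classify two objects that were never labeled.
for obj in [(1.55, 1.9), (1.75, 0.55)]:
    print(obj, "vehicle" if predict(weights, bias, obj) > 0.5 else "pedestrian")
```

The neuron only learns what the labels teach it. That is why so much human labeling work sits underneath “intelligent” systems like this.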

As an aside, Xinhua News Agency once reported that Beijing alone has more than 100 companies specializing in data labeling, and that more than 10 million people across China work in data labeling. This is the main reason why AI, long associated with high-end, cutting-edge technology, is ironically mocked as a “digital Foxconn” or a “labor-intensive industry.”

Tesla’s approach combines manual and machine annotation. On the one hand, it has developed HydraNets, a multi-task neural network that can handle object detection, traffic sign recognition and lane prediction at the same time. On the other, it has assembled a team of more than 1,000 data labelers to carry out the labeling and analysis that everything else is built on.
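The “multi-task” idea behind HydraNets is that one shared backbone computes features once and several task-specific “heads” reuse them. The toy Python sketch below shows only that structure. The backbone function, the head weights and the numbers are hypothetical stand-ins, not Tesla’s architecture.

```python
from typing import Callable, Dict, List, Tuple

Features = List[float]


def shared_backbone(pixels: List[float]) -> Features:
    """Hypothetical stand-in for the shared feature extractor (the hydra's 'body').
    A real backbone is a deep neural network; this one just computes
    the mean, variance and maximum of the input values."""
    n = len(pixels)
    mean = sum(pixels) / n
    variance = sum((p - mean) ** 2 for p in pixels) / n
    return [mean, variance, max(pixels)]


def linear_head(weights: List[float], bias: float) -> Callable[[Features], float]:
    """Build one task-specific 'head': a linear layer on top of the shared features."""
    def head(features: Features) -> float:
        return sum(w * f for w, f in zip(weights, features)) + bias
    return head


# Hypothetical heads with made-up weights; in a trained network these are learned.
HEADS: Dict[str, Callable[[Features], float]] = {
    "object_detection": linear_head([1.0, 0.5, 0.0], -0.2),
    "traffic_sign_recognition": linear_head([0.0, 1.0, 1.0], 0.1),
    "lane_prediction": linear_head([-0.5, 0.0, 2.0], 0.0),
}


def hydra_forward(pixels: List[float]) -> Tuple[Features, Dict[str, float]]:
    """Run the backbone once, then let every head read the same features."""
    features = shared_backbone(pixels)
    outputs = {task: head(features) for task, head in HEADS.items()}
    return features, outputs


features, outputs = hydra_forward([0.0, 1.0])
print(features)
print(outputs)
```

This design lets the expensive backbone run only once per image, however many heads are attached. Adding a new task means adding a new head, not a new network.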

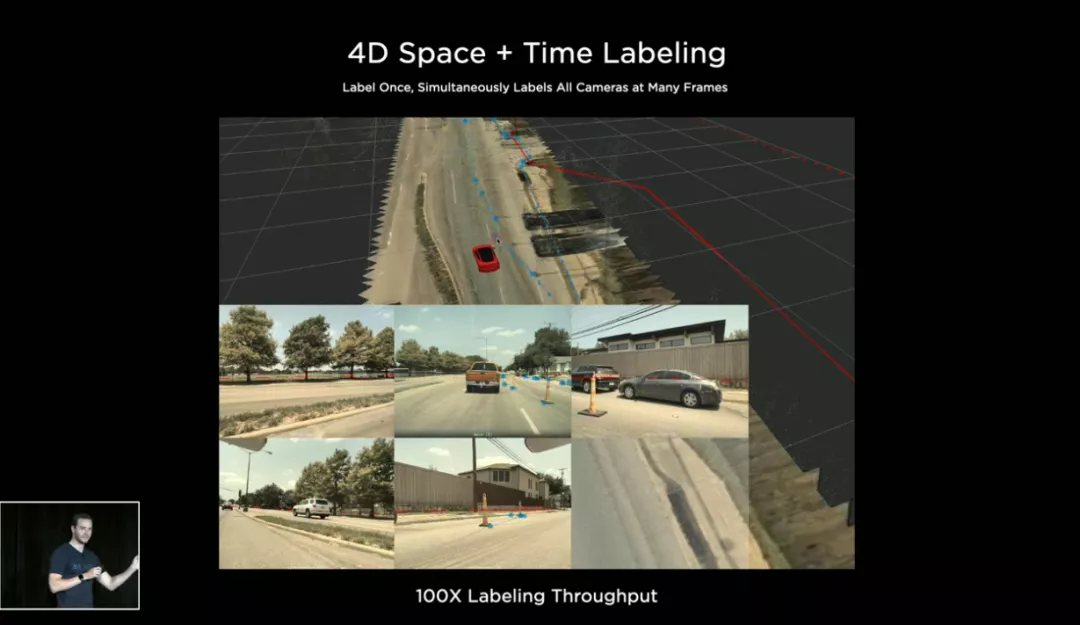

This work has already paid off. Labeling accuracy has improved greatly, and labeling has evolved from 2D images to 4D (3D space plus time). An object labeled once in one camera’s view can even be carried over to the other cameras automatically.
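One way to picture “label once, transfer to other cameras” is to store the label as a point in 3D space around the car. That point can then be projected into every camera with a simple pinhole camera model. This is only a sketch under stated assumptions, not a description of Tesla’s actual pipeline. It assumes that all cameras sit at the same spot on the car and differ only in which way they face, that there is no lens distortion, and that the camera names and intrinsics are made up.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]  # vehicle frame: x forward, y left, z up (meters)


@dataclass
class Camera:
    name: str               # hypothetical camera name
    yaw_deg: float          # direction the camera faces; 0 = straight ahead, positive = to the left
    focal_px: float         # focal length in pixels (made-up value)
    width_px: int
    height_px: int
    mount_height_m: float   # assumption: cameras share one x/y position and differ only in yaw

    def project(self, point: Point3D) -> Optional[Tuple[float, float]]:
        """Pinhole projection of a vehicle-frame point; None if this camera cannot see it."""
        x, y, z = point
        yaw = math.radians(self.yaw_deg)
        # Rotate the point into the camera's own frame.
        forward = x * math.cos(yaw) + y * math.sin(yaw)
        left = -x * math.sin(yaw) + y * math.cos(yaw)
        up = z - self.mount_height_m
        if forward <= 0:
            return None  # behind the camera
        # Pixel u grows to the right, v grows downward; principal point at the image center.
        u = self.width_px / 2 - self.focal_px * left / forward
        v = self.height_px / 2 - self.focal_px * up / forward
        if 0 <= u < self.width_px and 0 <= v < self.height_px:
            return (u, v)
        return None  # outside this camera's field of view


def propagate_label(point: Point3D, cameras: Sequence[Camera]) -> Dict[str, Tuple[float, float]]:
    """Turn one 3D label into 2D labels for every camera that can see it."""
    labels = {}
    for cam in cameras:
        uv = cam.project(point)
        if uv is not None:
            labels[cam.name] = uv
    return labels


CAMERAS = [
    Camera("front", 0.0, 1000.0, 1280, 960, 1.4),
    Camera("front_left", 45.0, 1000.0, 1280, 960, 1.4),
    Camera("front_right", -45.0, 1000.0, 1280, 960, 1.4),
    Camera("rear", 180.0, 1000.0, 1280, 960, 1.4),
]

# A traffic cone labeled once: 10 m ahead, 5 m to the left, 0.5 m above the ground.
print(propagate_label((10.0, 5.0, 0.5), CAMERAS))
```

In this toy setup, the single label shows up in the front and front-left cameras. The right and rear cameras correctly report that they cannot see it.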

Currently, there are nearly one million Tesla cars on the road worldwide, generating over 200 million hours of video data each month. With such a massive amount of data, Tesla is now able to simulate real-world scenarios in its system.

The above image, which looks like a video game scene, is actually not designed for people to play with, but rather to simulate various driving situations and train Tesla cars.

According to Tesla, their labeling and simulation system can simulate up to 371 million data points and scenarios.

So, how does Tesla handle all this data and use the simulated world to train its self-driving system?

It is the Dojo supercomputer that makes this possible.

The biggest news from AI Day was not Dojo’s official deployment (Musk said that would come next year, so who knows whether it will be delayed). It was the unveiling of Dojo’s D1 chip, the foundation of its immense computing power.

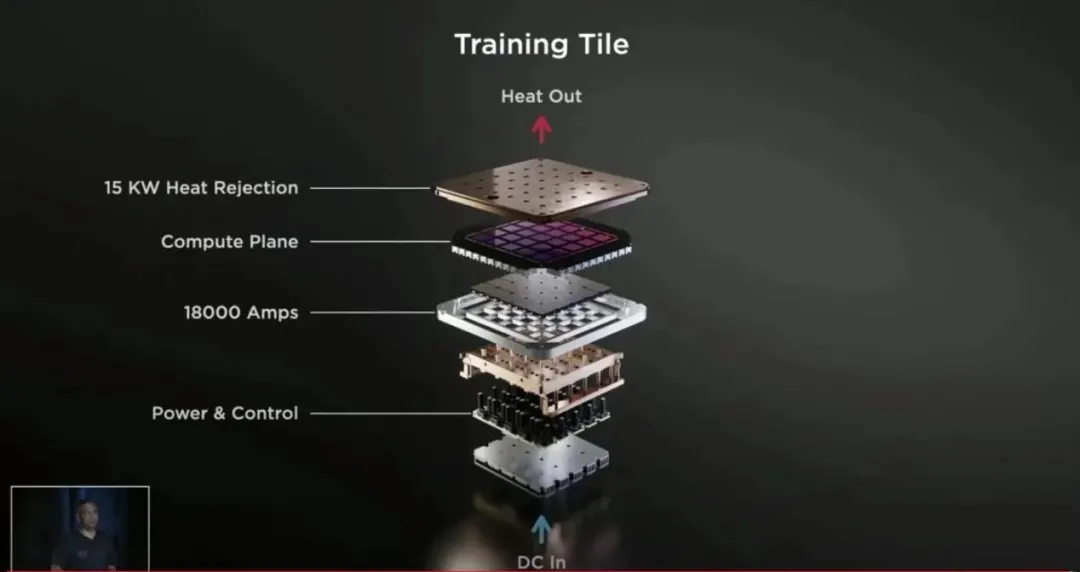

Rather than wading through a pile of dry numbers and parameters for the D1 chip, let’s look at how Dojo’s computing power is put together. Its core unit closely resembles the structure of an electric vehicle battery pack, as shown in the “training module” image below.

The basic building block of Dojo’s computing power is the D1 chip. Twenty-five D1 chips are assembled into a small training module, which delivers 9 PFLOPS (9 quadrillion floating-point operations per second) of computing power.

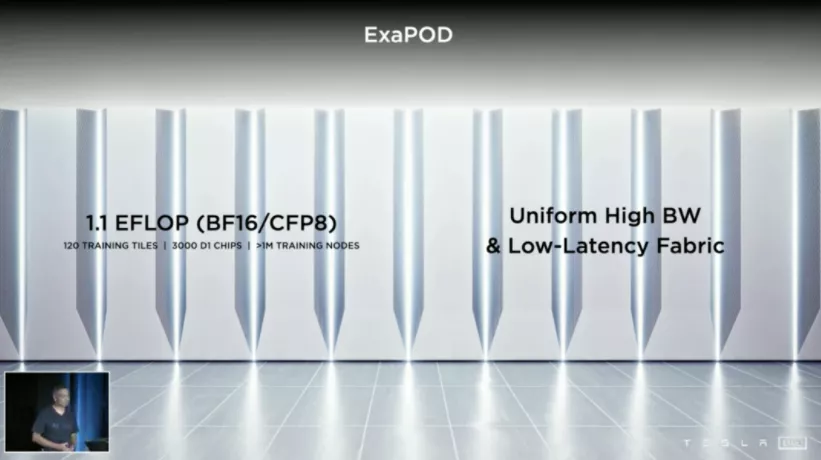

Finally, 120 training modules are interconnected and housed in several cabinets, combining 3,000 D1 chips into the Dojo ExaPOD. The ExaPOD delivers about 1.1 exaFLOPS of computing power (more than 10 to the 18th power floating-point operations per second).
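As a quick sanity check, the arithmetic can be worked through using only the figures given for a training module (25 D1 chips, 9 PFLOPS) and the 120 modules in an ExaPOD. The per-chip figure below is derived from those numbers, not quoted from a spec sheet.

```python
PETA = 10**15
EXA = 10**18

CHIPS_PER_MODULE = 25         # D1 chips per training module
MODULE_FLOPS = 9 * PETA       # 9 PFLOPS per training module
MODULES_PER_EXAPOD = 120      # training modules in one ExaPOD

chips = CHIPS_PER_MODULE * MODULES_PER_EXAPOD     # total D1 chips in an ExaPOD
flops_per_chip = MODULE_FLOPS / CHIPS_PER_MODULE  # implied throughput of one D1 chip
exapod_flops = MODULE_FLOPS * MODULES_PER_EXAPOD  # total ExaPOD throughput

print(f"D1 chips in an ExaPOD: {chips}")
print(f"Implied per-chip throughput: {flops_per_chip / 10**12:.0f} TFLOPS")
print(f"ExaPOD throughput: {exapod_flops / EXA:.2f} EFLOPS")
```

This gives 3,000 chips, roughly 360 TFLOPS per chip and about 1.08 exaFLOPS in total. That is on the order of 10 to the 18th power operations per second, which is where the “Exa” in ExaPOD comes from.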

With all that computing power, Tesla claims Dojo delivers 1.3 times the performance per watt of today’s strongest supercomputers, while taking up only one-fifth of the footprint.

This is in line with Tesla’s design philosophy of “best AI training performance, larger and more complex neural networks, and energy cost optimization.”

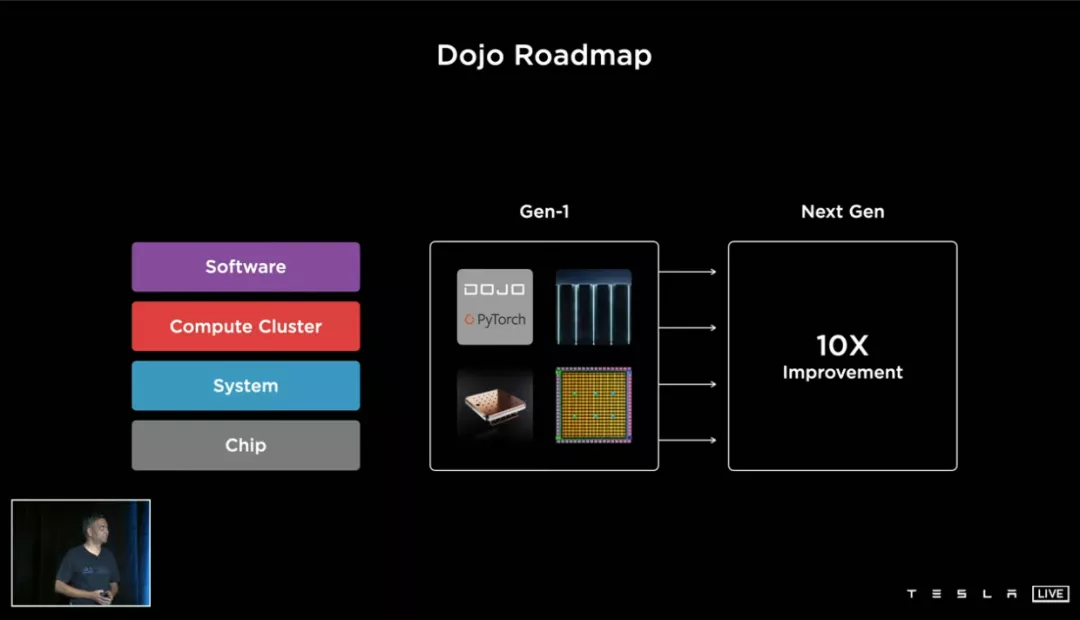

Therefore, Tesla proudly states that Dojo is the fastest AI training computer in the world, and this is just the beginning. The next generation of Dojo will be 10 times more powerful.

One question remains as we wrap up. Consumer graphics cards are already hard to find, and cryptocurrency prices around the world have been driven up in part by Musk himself. Given that, I still doubt whether such advanced supercomputer chips can be produced efficiently at scale next year.

Anyway, let’s close out Tesla AI Day with this picture of Ang Lee.

You know, compared with Tesla’s supercomputer and Musk’s robots, what really caught my attention was a headline online a few days ago: “Tencent invests another 50 billion yuan to promote common prosperity.”

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.