Author: Dian Che Sen

The accident in which a young entrepreneur died while driving a NIO vehicle continues to draw close attention from all walks of life. In the past few days, Lin Wenqin’s friends, his business partners, and the referrer who recommended NIO vehicles to him have expressed strong dissatisfaction with NIO’s handling of the matter and called on CEO Li Bin to stop deceiving users.

Regarding the accident, NIO founder Li Bin stated that he was deeply saddened by the death of such an excellent young entrepreneur and expressed his condolences. At the same time, he also stated that no further information about the accident will be released until the investigation results are finally confirmed.

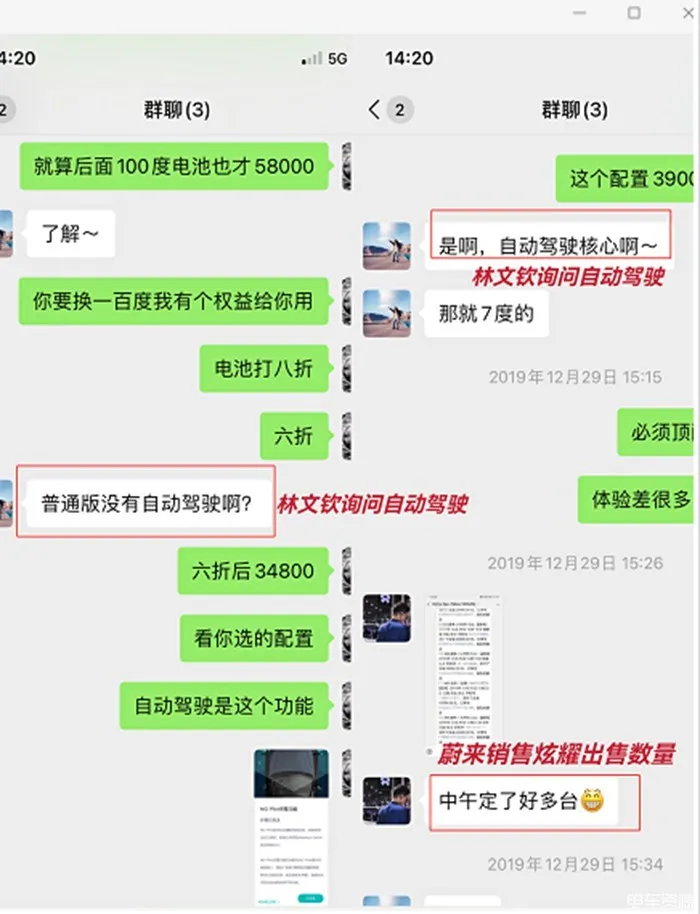

On August 17th, Lin Wenqin’s friend spoke up again, stating that “Lin Wenqin believed that automatic driving is what sets electric vehicles apart from gasoline vehicles, and automatic driving was the core reason he bought this car.” He said: “I was one of the first NIO users. At that time, NIO claimed that automatic driving was safer than human driving, and the car was equipped with cameras and LiDARs, which sounded very high-end. I trusted NIO very much, so I recommended it to Lin Wenqin.”

The chat records provided by Lin Wenqin’s friend show that, before Lin purchased the car, the sales consultant brought Lin Weiwei and Lin Wenqin into a three-person group chat. During the conversation, Lin Wenqin repeatedly asked the NIO sales staff about “automatic driving,” but the sales staff did not answer directly and instead boasted about that day’s sales.

As of the time of writing, the cause of the accident is still under investigation, and the findings announced by the police will be authoritative.

However, the discussion about “assisted driving” has become a hot topic in the industry.

The question is especially pressing for owners of cars with similar functions, such as NIO’s NOP (Navigate on Pilot), Tesla’s NOA (Navigate on Autopilot), and XPeng Motors’ NGP (Navigation Guided Pilot): how should they correctly understand assisted driving and use it safely?

Let’s start with the recent “most controversial” NIO NOP

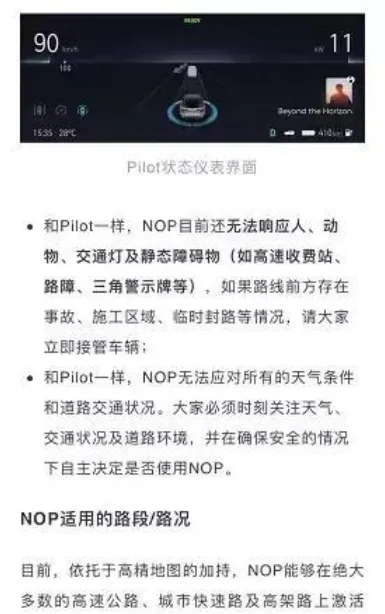

“NOP” stands for Navigate On Pilot. According to NIO Automobile’s description, NOP integrates the on-board navigation and NIO Pilot automatic driving functions, allowing the vehicle to automatically cruise along the path planned by high-precision map navigation under specific conditions.

Like Tesla’s NOA, this function can “realize automatic cruising from point A to point B, including automatic on and off ramps, overtaking, lane changing, and cruising.”

But it should be emphasized that it is just an intelligent driving assistance function, not “automatic driving.” Drivers need to pay attention to the road conditions and be ready to take over the car at any time.

The NIO owner’s manual carries the same reminder.

Let’s talk about Tesla’s “most confident” NOA

Tesla has always aimed at creating the leading advanced driving assistance system, or more straightforwardly, releasing fully autonomous driving technology.

NOA is a function in Tesla’s fully autonomous driving upgrade package. It can make the car automatically enter and exit highway ramps or interchanges and overtake slow-moving vehicles.

Compared with traditional adaptive cruise control, the distinguishing feature of NOA is that it can independently judge when to enter and exit the highway and when to overtake, and it can change lanes and overtake automatically without human intervention. It is worth noting that this feature can only be activated once the vehicle is on the highway.

In fact, Tesla is the most aggressive in promoting this feature. It always emphasizes “Autopilot automated driving” and “FSD fully automated driving”, giving users the illusion that a Tesla can drive itself completely. There is only an inconspicuous disclaimer on the Tesla official website saying: “The currently available (Autopilot) function requires active driver monitoring, and the vehicle has not achieved full autonomous driving.”

Recently, the US National Highway Traffic Safety Administration (NHTSA) announced that it will investigate accidents involving Tesla vehicles with automatic driving assistance functions, involving a total of 765,000 Tesla cars produced after 2014. These accidents caused a total of 17 injuries and 1 death.

In recent years, the agency has reportedly sent multiple crash investigation teams to review Tesla collisions. It said that most of the incidents occurred after dark and that, in many of them, the Tesla vehicles failed to recognize traffic control measures at the scene, such as flashing emergency vehicle lights, flares, illuminated arrow boards, and cones.

Finally, it’s the “more conservative” XPeng NGP

XPeng’s NGP is an assisted driving function built on the XPeng XPILOT 3.0 system and the navigation route. Once the driver sets a navigation route, the vehicle can largely handle navigation-assisted driving from point A to point B. During this time the driver remains the main controller of the vehicle, although in practice many actions are completed by NGP. At present, the function is only available on urban expressways and highways in certain specific areas.

In the eyes of XPeng Motors, NGP is not a fully automatic driving technology; after all, it cannot replace the driver in complex traffic and changing scenes. The purpose of this “assisted driving” function is to help drivers complete the journey more easily and safely, not to replace them.

It is worth emphasizing that before using XPeng NGP, the driver must obtain an “XPeng version driver’s license”. After downloading the app and logging in, the driver has to watch a safety video under the app’s supervision and pass a safety test in the mobile app before first use. Only after both steps are completed does the system unlock the NGP function.

Compared with a small footnote or a paper-based teaching material, XPeng Motors’ “mandatory” requirements may be more meaningful.

How to avoid being a “guinea pig” for assisted driving

First of all, we must understand that from the perspective of automatic driving technology, there are still many issues that have not been solved at this stage. Even if 90% of the problems have been solved technically, the remaining 10% of the problems may never be completely resolved, and various new road conditions and traffic emergencies may occur at any time.

In other words, people keep solving new problems and getting closer to perfection, but they can never reach it completely. Take extreme situations such as rush-hour congestion in major cities or rear-end collisions on highways during the National Day holiday: at present, every mass-produced assisted driving system still has to rely on the driver’s active intervention.

If the driver places too much trust in assisted driving and relaxes his vigilance, an accident will occur as soon as the car encounters one of these one-in-ten-thousand new situations.

Most drivers’ overconfidence in assisted driving cannot be separated from car manufacturers’ “exaggerated propaganda”. Phrases such as “the first L3 level autonomous driving vehicle” and “the world’s first mass-produced autonomous driving vehicle” can be seen on promotional posters. Car manufacturers never miss a chance to boast.

This irresponsible hype plants in the minds of drivers who use assisted driving the subconscious idea that “this car can do anything.”

Consumers buy cars for transportation or to enjoy life, not to become “experts”. The terms L1, L2, L2.5, and L2++ only confuse drivers. What assisted driving functions can be achieved should be conveyed to drivers in plain language, rather than irresponsible “selling points”.

Respecting the limits of current assisted driving therefore means “no reliance, no letting go, no distractions” on the driver’s part. This presupposes that car manufacturers promote their assisted driving rationally, so that drivers clearly understand what the vehicle’s assisted driving functions can and cannot do.

But when they run into a driver who pursues the “highest quality of life” like the one below, even car manufacturers may be scared.

Recently, a video of a Li Auto ONE owner lying down while driving caused a lot of discussion among netizens. The video, uploaded by the owner, shows that after turning on the vehicle’s assisted driving at high speed, the owner reclined the seat, took both hands off the steering wheel, and lay back to enjoy “sunbathing”. The vehicle was traveling fast on the expressway at the time, and a voice prompt can still be heard in the video saying “100km/h speed limit, current speed is 100 km/h”.

It is no longer an isolated case for drivers to relax after turning on assisted driving: holding the steering wheel with one hand, looking down at their phones, or otherwise letting their attention wander.

Cui Dongshu, Secretary General of the China Passenger Car Association, said that the objective reason for consumers’ incorrect understanding is a lack of effective education. Lacking professional knowledge, many consumers are not sufficiently aware of the problems and risks that arise in use. Car manufacturers should periodically compile the potential problems of the assisted driving functions currently installed in their vehicles and communicate them, so that consumers can understand and avoid the risks in time.

At the same time, Li Xiang, Zhou Hongyi, and Shen Hui have recently spoken out about the general public’s understanding of assisted driving, saying that “governing the promotion of assisted driving is imminent”.

Zhuyulong, a senior industry engineer, said that car manufacturers have a responsibility to monitor drivers’ attention in L2 level systems. They can use a DMS (driver monitoring system, which includes fatigue warnings) to monitor the driver’s state at all times and correct unsafe behavior promptly.

Final Words

We can regard “autonomous driving” as a trend of the future. However, relying on actual lives to find the bugs in a software system during its development is obviously unreasonable. No life should be sacrificed for “autonomous driving”.

Meanwhile, we urge every driver who chooses a car equipped with assisted driving not to entrust their life to a machine. To some extent, a machine is less likely to make an error than a human being. However, assisted driving today is far from being as omnipotent as you think!

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.