Since Autopilot is our example for asking whether autonomous driving features are worth paying for, let’s first review Autopilot’s “futures,” that is, the features sold today on the promise of later delivery.

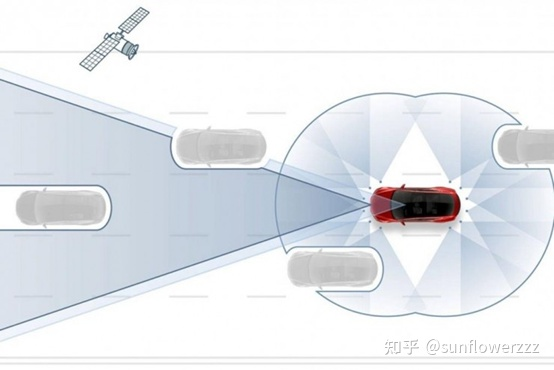

In October 2014, Tesla released the all-wheel-drive version of the Model S and announced that all Tesla vehicles produced thereafter would be equipped with hardware for autonomous driving. The initial Autopilot hardware included a front-facing camera, 12 ultrasonic sensors, a front-facing millimeter-wave radar, and a rear-facing backup camera.

In October 2015, Tesla pushed the V7.0 software to the Model S as an OTA update, launching automatic lane keeping, automatic lane changing, and automatic parking. All of these functions require the driver to be ready to take over at any time, yet Tesla’s marketing still labeled them “autonomous driving.”

In August 2016, the V8.0 OTA update changed the system logic of Autopilot 1.0 from relying mainly on the single front-facing camera to relying mainly on the single millimeter-wave radar. The update came three months after the well-known highway accident involving a Model S with Autopilot turned on.

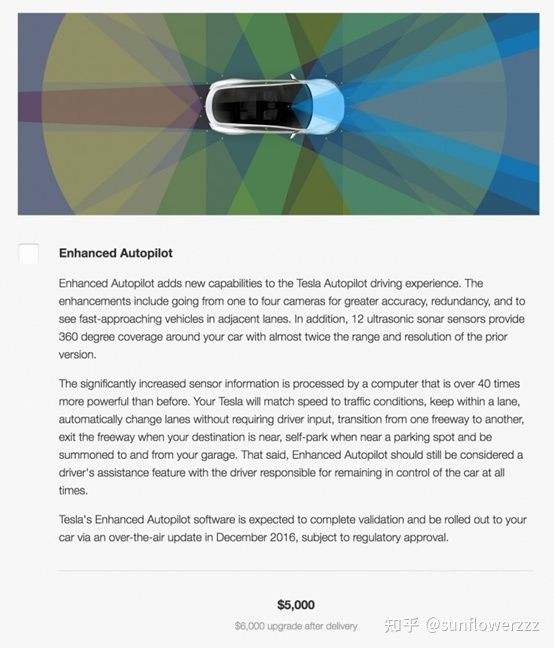

In October 2016, Tesla introduced the Autopilot 2.0 hardware and dropped Mobileye, the supplier at the core of the 1.0 hardware, entirely (the relationship had been strained since the earlier accident). In its place came a platform Tesla had developed in-house, with NVIDIA chips as the basis of the Autopilot 2.0 computing platform and perception hardware consisting of 8 cameras, 1 millimeter-wave radar, and 12 ultrasonic sensors.

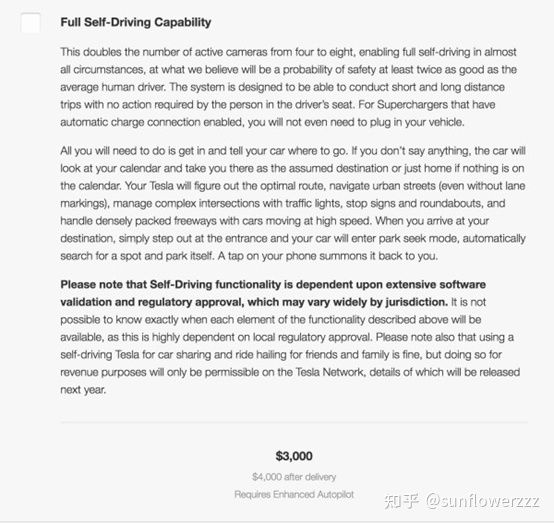

Alongside the release of Autopilot 2.0, Tesla introduced its biggest Autopilot “futures” to date: Full Self-Driving Capability (FSD), offered as an optional package. After laying out its vision of autonomous driving, the FSD package description added a wry caveat: “It is not possible to know exactly when each element of the functionality described above will be available, as this is highly dependent on local regulatory approval”…

Tesla’s dream of self-driving taxis did not stop at the description in the “futures” package. At the “Autonomy Investor Day” event in April 2019, Tesla CEO Elon Musk announced a self-developed FSD chip and said Robotaxis would begin deployment at the end of 2019, with a goal of one million vehicles deployed by the end of 2020.

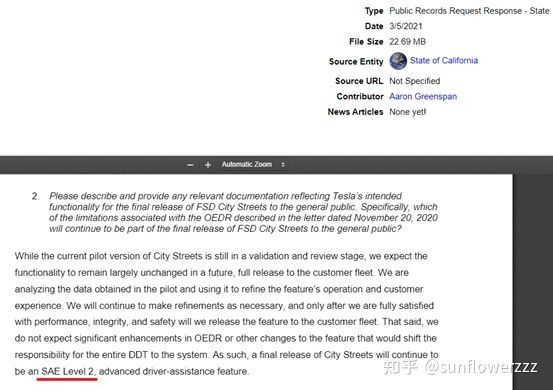

However, in an email exchange between Tesla officials and California DMV officials, the current Autopilot and FSD functionalities were rated as SAE Level 2, which means the driver must still be ready to take over at any time. Whether it is the “autonomous driving” released in 2014, the optional package with the function of “fully automatic driving” added in 2017, or the Robotaxi service set to launch at the end of 2019, every one of them still requires a driver who can take over at any moment. “Futures” remains the right word for it.

In contrast to Tesla’s technical route, numerous new cars equipped with lidar have appeared one after another this year. Lidar is a step beyond millimeter-wave radar, yet Tesla remains a staunch supporter of a vision-based solution and has even abandoned millimeter-wave radar altogether. Vehicles like the NIO ET7 and Xpeng P5, on the other hand, fuse high-line-count lidar with vision and millimeter-wave radar perception, combined with high-precision maps, to accomplish autonomous driving.

So which approach is superior? Can the latecomers following the second approach achieve the high-level autonomous driving that Tesla “cannot yet achieve” with its purely visual solution?

Tesla’s claim has merit: human driving relies on vision alone. If a deep neural network computer were intelligent enough to solve corner cases through massive data training and machine learning, and could reproduce human perception, judgment, and decision-making from high-resolution, high-accuracy camera images, then no other sensor information would be required.

But why be dogmatic and rely solely on AI algorithms to extract distance and speed from visual images? Would it not be safer and more reliable to complement the information extracted from images with distance measurement, where lidar excels, and dynamic object detection, where millimeter-wave radar excels?
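To make the complementarity argument concrete, here is a minimal sketch of one textbook way to combine two distance readings: an inverse-variance weighted average. It is not how Tesla, NIO, Xpeng, or IM Auto fuse their sensors; the `Estimate` class, the `fuse` function, and all of the noise figures are assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # measured distance to the object, in metres
    variance: float  # assumed noise variance of that measurement


def fuse(estimates):
    """Combine independent estimates by inverse-variance weighting.

    Less noisy sensors get proportionally larger weights, and the
    fused variance is never larger than the best single input.
    """
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    value = sum(w * e.value for w, e in zip(weights, estimates)) / total
    return Estimate(value, 1.0 / total)


# Hypothetical readings: the camera depth estimate is assumed noisy,
# the lidar range is assumed precise.
camera = Estimate(value=52.0, variance=9.0)
lidar = Estimate(value=50.0, variance=0.04)

fused = fuse([camera, lidar])
print(f"fused distance: {fused.value:.2f} m, variance: {fused.variance:.4f}")
```

Under these assumed numbers the fused distance sits very close to the lidar reading, and its variance is lower than that of either sensor alone, which is the sense in which an extra sensor adds safety margin rather than replacing vision.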

I recently came across a popular demo video of mixed urban and highway driving. It features a test vehicle running the IM AD smart driving system of the L7, IM Auto’s first product, on the same hardware the production L7 will adopt: an Nvidia Xavier chip and a vision perception scheme. Watching the test vehicle drive itself on public roads in Suzhou, through the city and along suburban highways, without any human intervention is impressive. The model also reserves hardware interfaces for upgrading to the more powerful Nvidia Orin chip and a lidar perception scheme, a good example of blending the strengths of both approaches.

The video shows the test vehicle handling the following scenarios:

Driving through intersections while multiple cars change lanes, predicting their trajectories and when they will move out of the way;

Detecting a car approaching from behind during a lane change, returning to the original lane to avoid it, and dynamically planning the next step;

Detecting a truck approaching from the side and merging into the lane, then quickly decelerating to give way;

Automatically passing slow cars on urban expressways;

Continuing without slowing down or braking when the car in front changes lanes and the speed difference is sufficient;

Changing from the rightmost lane to the middle lane without slowing down while a vehicle in the leftmost lane also moves into the middle lane.

In these scenarios, the IM L7 test vehicle shows strong perception and control capabilities with IM AD, and its real-road performance is remarkable. That is with the vision perception scheme alone. Given the IM L7’s promised upgrade path, including software and hardware compatibility with lidar, chip replacement, and lidar installation, the final complete version is expected to perform even better.

For ordinary people, advanced driving assistance and, ultimately, autonomous driving free up time and energy while traveling. Being dogmatic about a single technology route is not advisable. Making better use of technology, combining the strengths of each approach to offset the weaknesses of the others, and creating a better user experience is the more worthwhile choice.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.