Author: Mr. Shangu

The intelligentization of cars is sweeping the world with unstoppable force. Countless resources, talents, and technology giants are entering this race, fundamentally changing the ecosystem and competitive landscape of intelligent cars. Intelligent cars have moved beyond simply providing automated driving functions: product innovation and iteration are now driven by user services and commercial value, and the industry as a whole presents a dazzling, almost bewildering profusion of approaches. This is a huge challenge for traditional automotive supply chains and R&D personnel, but an exciting one for innovators.

This change differs from those of the past. Fifty years ago, cars epitomized the mechanical age; 20 years ago, they epitomized the electronic age. Today, their defining form is software: it is through software that the intelligent car becomes an engineering reality.

The trend of multi-domain integration of intelligent cars

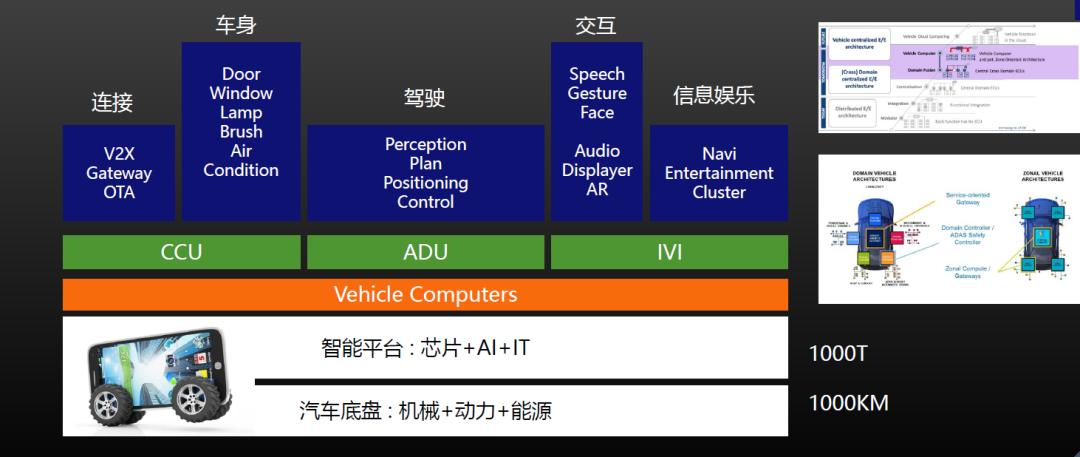

Multi-domain integration is the main technical trend in high-performance computing platforms for intelligent cars. It will bring many challenges to automotive R&D at the system, software, and semiconductor chip levels.

Accelerating the deployment of autonomous driving

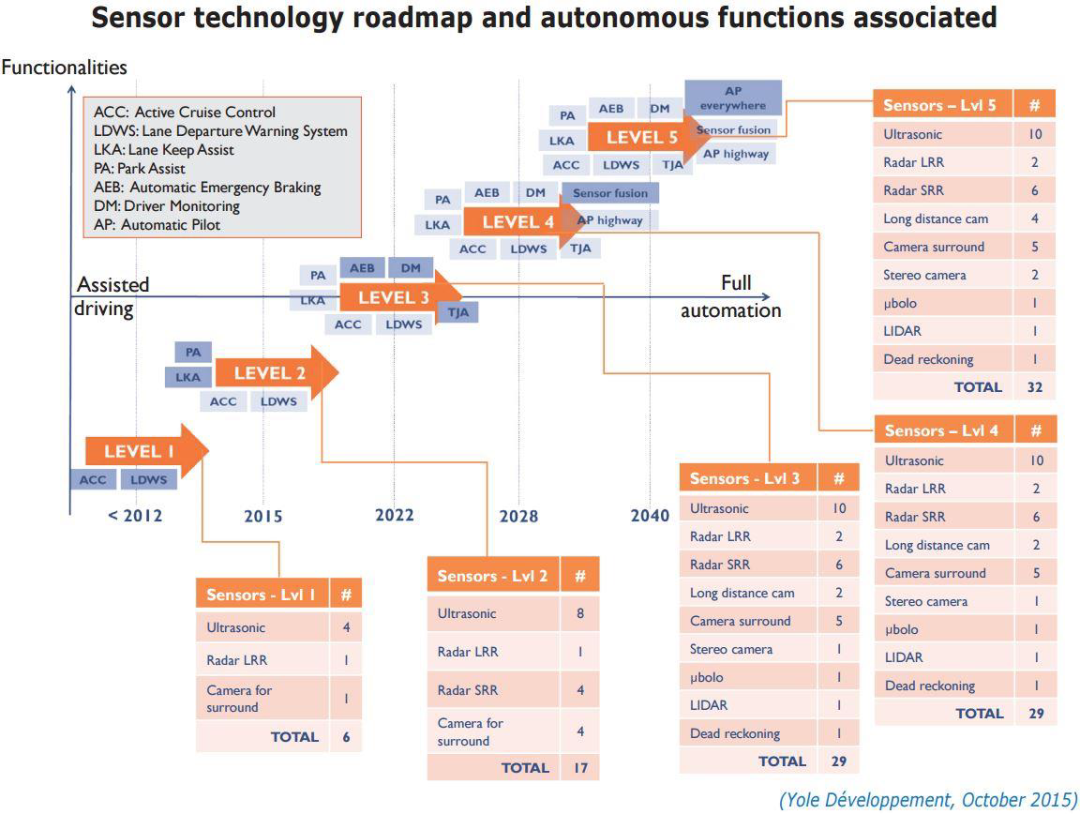

In the early days, the emphasis was on intelligence at the sensor level, for L1 and L2 functions. Now, people talk more about the intelligence of the vehicle as a whole. Many newly launched cars advertise their overall electronic and electrical architecture, their number of cameras and radars, and the improved perception capability of the whole vehicle. They also highlight the computing platform, chips, OTA (over-the-air) updates, and so on, raising the overall level of vehicle intelligence through pre-installed hardware.

Beyond the intelligence of the car itself, vehicle-road coordination is also a main technological route promoted for China’s intelligent connected vehicles. The technologies it gives rise to, such as V2X roadside equipment, communication and synchronization for vehicle-road-cloud coordination, and edge computing, require not only smart cars but also smart roads.

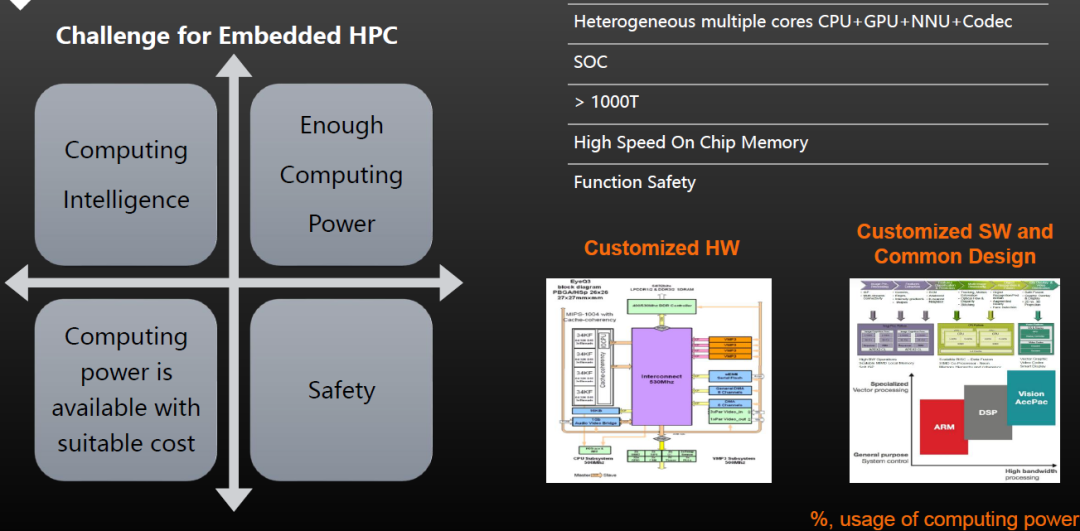

As intelligent technologies spread, computing power, including AI computing and MCUs, will become the industry’s core focus. An important concept in any discussion of computing power is “multi-core heterogeneous” computing: we need to work out which tasks run on which hardware platforms and what kind of computation each one performs.

Reshaping the automotive industry with software

The trend toward software-defined cars is becoming increasingly clear in the automotive industry. Domain controllers and central computing platforms have centralized computing, and the business model of profiting from integrated software and hardware is gradually giving way to software-hardware separation. Even with this separation, the software still runs to millions of lines of code, and L4 systems reach billions of lines. Now a further concept, software-software separation, has emerged. These concepts pose new challenges to the whole-vehicle electronic and electrical architecture and to the capabilities of car manufacturers and suppliers.

Opportunities in the Midst of Change

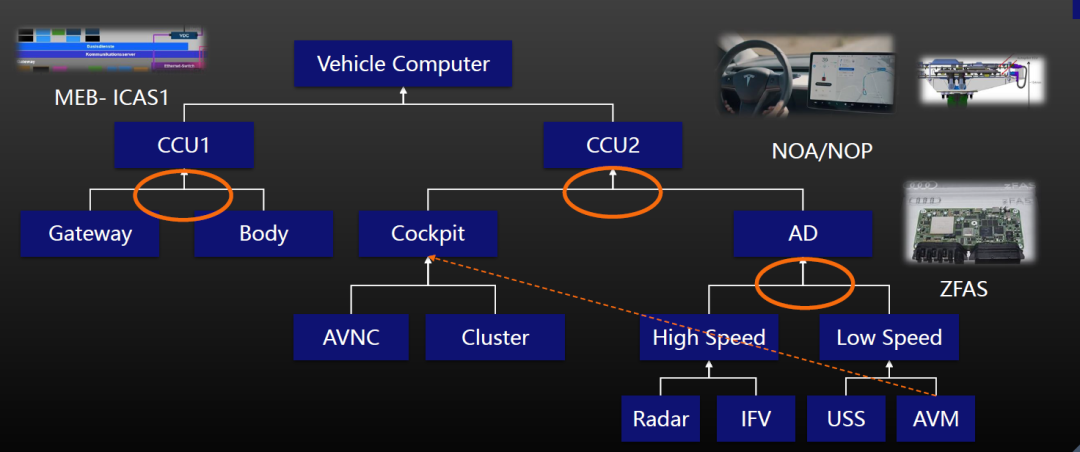

Pioneers in the industry offer some insights. The figure above shows the zFAS system on the Audi A8: with this high-performance intelligent driving controller, the A8 became the first mass-production vehicle with L3 functionality. The controller combines high-speed driving and low-speed parking control in a single unit. Later, Tesla’s NOA system combined the intelligent driving and intelligent cockpit controllers in one controller box, solving the problem of high-bandwidth data transmission and communication between them. The Volkswagen MEB platform likewise consolidates traditional ECU modules. Over the past decade, many global car manufacturers have integrated ECUs with different functions to achieve cross-domain integration.

In the past, a car’s product strength meant safety, comfort, and performance in a means of transportation. What comes to mind today when we think of the product strength of an intelligent car? Its definition has moved beyond the boundaries of a traditional means of transport and now emphasizes enhancing the user experience through intelligent features. Cars will evolve into mobile intelligent platforms that offer a range of functions and services by subscription.

From this perspective, automobile functionalities will be redefined, and the entire electronic and electrical architecture will undergo significant changes.

Tesla’s impact on the intelligent car industry is significant. Tesla has led in both technology and business models, developing its software and hardware as an integrated solution. It offered software through licenses and demonstrated the value of software developers. Tesla also pioneered SaaS (software as a service) in the automotive industry.

Software Development Challenges in High-performance Computing Platforms for Autonomous Driving

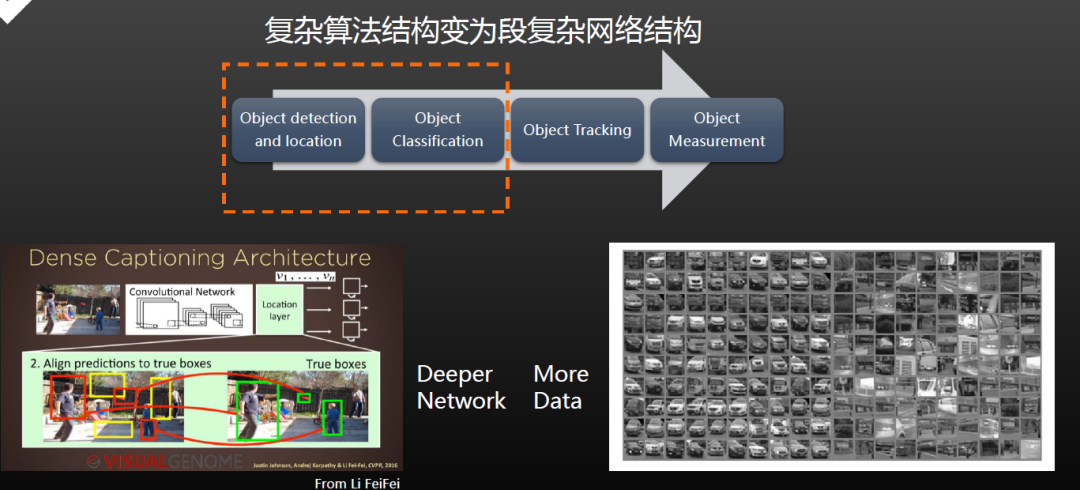

Challenges in Autonomous Driving Perception and Algorithms

Of the three major technology modules of autonomous driving (perception, planning/decision-making, and control), perception is the most complex and the least predictable. Perception for intelligent driving is judged by five major indicators: functional completeness, performance, availability, reliability, and feasibility. At the current stage, the focus of perception has shifted from controlling the false-alarm rate, as in the early days, to avoiding missed detections, so as to maximize driving safety. This shift poses challenges for software algorithms, safety architecture, and sensors.
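The trade-off between false alarms and missed detections can be made concrete with two standard metrics. The sketch below compares a hypothetical strict detector tuning with a permissive one; the counts are invented for illustration, and the step of matching detections to ground truth (by overlap or distance) that a real evaluation needs is omitted.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    """Aggregated outcomes of a detector over an evaluation set."""
    true_positives: int   # real objects that were detected
    false_positives: int  # detections with no real object behind them (false alarms)
    false_negatives: int  # real objects that were not detected (missed detections)
    frames: int           # number of evaluated frames


def miss_rate(c: DetectionCounts) -> float:
    """Fraction of real objects the detector failed to report (1 - recall)."""
    total_objects = c.true_positives + c.false_negatives
    return c.false_negatives / total_objects if total_objects else 0.0


def false_alarms_per_frame(c: DetectionCounts) -> float:
    """Average number of spurious detections per frame."""
    return c.false_positives / c.frames if c.frames else 0.0


# Hypothetical numbers: a strict confidence threshold vs. a permissive one.
strict = DetectionCounts(true_positives=900, false_positives=10, false_negatives=100, frames=1000)
permissive = DetectionCounts(true_positives=990, false_positives=80, false_negatives=10, frames=1000)

for name, counts in (("strict", strict), ("permissive", permissive)):
    print(f"{name}: miss rate {miss_rate(counts):.3f}, "
          f"false alarms/frame {false_alarms_per_frame(counts):.3f}")
```

In this made-up example, the permissive tuning cuts the miss rate tenfold at the cost of eight times as many false alarms, which is the direction a safety-first priority on avoiding missed detections pushes toward.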

Analyzing autonomous driving perception from top to bottom shows that the requirements on false detections and missed detections grow stricter as functions become more complex. Designers need a redundant engineering solution. For example, trajectory tracking can supplement the perception system’s detections, so that an object missed in one frame is still carried forward by its track; this addresses the problem at the system level.
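As a minimal sketch of how tracking can cover for missed detections, the 1-D alpha-beta tracker below predicts an object’s position with a constant-velocity model and keeps the track alive on prediction alone when a frame has no detection. The alpha-beta filter is a simple stand-in chosen for brevity (production systems more often use Kalman filters), and the gains, the miss limit, and the `AlphaBetaTrack` name are all illustrative assumptions.

```python
from typing import List, Optional


class AlphaBetaTrack:
    """1-D constant-velocity alpha-beta tracker (illustrative, not a production design)."""

    def __init__(self, x0: float, v0: float = 0.0, alpha: float = 0.85,
                 beta: float = 0.005, max_misses: int = 3):
        self.x = x0                   # estimated position (m)
        self.v = v0                   # estimated velocity (m/s)
        self.alpha = alpha            # position correction gain (assumed value)
        self.beta = beta              # velocity correction gain (assumed value)
        self.misses = 0               # consecutive frames without a detection
        self.max_misses = max_misses  # drop the track after more misses than this

    @property
    def alive(self) -> bool:
        return self.misses <= self.max_misses

    def step(self, z: Optional[float], dt: float) -> float:
        """Advance one frame; z is the detected position, or None if the detection was missed."""
        x_pred = self.x + self.v * dt  # constant-velocity prediction
        if z is None:
            # Missed detection: coast on the prediction instead of losing the object.
            self.x = x_pred
            self.misses += 1
        else:
            residual = z - x_pred
            self.x = x_pred + self.alpha * residual
            self.v = self.v + (self.beta / dt) * residual
            self.misses = 0
        return self.x


# An object moving at 10 m/s, sampled every 0.1 s; the third and fourth frames have no detection.
track = AlphaBetaTrack(x0=0.0, v0=10.0)
detections: List[Optional[float]] = [1.0, 2.0, None, None, 5.0, 6.0]
estimates = [track.step(z, dt=0.1) for z in detections]
print(estimates, track.alive)
```

The two empty frames are filled with predicted positions, so downstream planning still sees the object; the miss limit keeps a track from coasting indefinitely on an object that has really left the scene.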

Despite this, better algorithms, safer architecture, and more reasonable sensor arrangements are still needed.

Different levels of autonomous driving require more and more vehicle sensors to deliver different intelligent experiences. Cameras are a prime example. Three years ago, people were still debating how many cameras should be installed outside the car; now they are debating how many belong inside it. Tesla installed an interior camera in its early models. Some solutions use one camera to monitor the driver and another, wide-angle camera to monitor the other passengers. This creates significant challenges for computing and sensor placement.

Multiple sensors also raise information security issues, as well as the problem of fusing data from different sensors. Moreover, fusion at different levels brings different technical challenges.

A more advanced approach starts from the millimeter-wave radar’s ADC (analog-to-digital converter) output. The transmitted and reflected waves are sampled and processed, the resulting detections are clustered and then tracked, and finally target output is produced. Nowadays, all processing after ADC sampling is usually placed in the central brain. The post-processing of data from all onboard sensors can then be computed in one place, preserving the highest fidelity at the cost of the highest computational complexity.
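To make the first step of that chain concrete, the sketch below estimates a single target’s range from one chirp of simulated FMCW radar ADC samples. Mixing the reflected wave with the transmitted one yields a beat frequency proportional to range, R = c * f_b / (2 * S), where S is the chirp slope, and a DFT locates that frequency. It assumes complex (I/Q) samples, one stationary target, no noise or windowing, and illustrative parameter values; clustering and tracking, the later stages described above, are omitted. A direct O(N²) DFT keeps the example free of third-party libraries; real pipelines use an FFT.

```python
import cmath
import math
from typing import List

C = 3.0e8  # speed of light (m/s), rounded


def synth_beat_signal(range_m: float, slope_hz_per_s: float,
                      fs_hz: float, n: int) -> List[complex]:
    """Simulated I/Q ADC samples of the beat signal for one target at range_m."""
    f_beat = 2.0 * range_m * slope_hz_per_s / C
    return [cmath.exp(2j * math.pi * f_beat * i / fs_hz) for i in range(n)]


def dft_magnitudes(x: List[complex]) -> List[float]:
    """Magnitude spectrum via a direct DFT (O(N^2); fine for a small sketch)."""
    n = len(x)
    return [abs(sum(x[i] * cmath.exp(-2j * math.pi * k * i / n) for i in range(n)))
            for k in range(n)]


def estimate_range(samples: List[complex], slope_hz_per_s: float, fs_hz: float) -> float:
    """Pick the strongest positive-frequency bin and convert it back to range."""
    n = len(samples)
    mags = dft_magnitudes(samples)
    # Bins 0..N/2-1 hold positive beat frequencies, which correspond to real ranges.
    k_peak = max(range(n // 2), key=lambda k: mags[k])
    f_beat = k_peak * fs_hz / n
    return C * f_beat / (2.0 * slope_hz_per_s)


# Illustrative parameters: 30 MHz/us chirp slope, 10 MHz ADC sampling, 256 samples per chirp.
SLOPE = 30e12
FS = 10e6
N = 256
samples = synth_beat_signal(19.53125, SLOPE, FS, N)  # range chosen to fall exactly on a bin
print(f"estimated range: {estimate_range(samples, SLOPE, FS):.3f} m")
```

With these parameters one range bin spans c * fs / (2 * S * N) ≈ 0.195 m, so a target lying between bins is reported at roughly the nearest bin unless interpolation is added.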

Challenges in Calculation and Communication

Chips are becoming more powerful, letting developers use more complex networks. These networks are growing not only deeper but also more varied in type. In practice, an SoC (system on chip) commonly contains only two NPUs (neural processing units), yet customers may want to run four or even six deep learning models for different types of detection. Total computing power is sufficient in this case; the problem to solve is the “reasonable allocation of computing power.” How should six models be assigned to two computing units?
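One simple heuristic for the two-NPU, six-model question is longest-processing-time-first (LPT) scheduling: sort the models by per-frame cost and give each one to the currently least-loaded NPU. The sketch below assumes each model’s cost is a known, fixed number of milliseconds per frame and that any model can run on either NPU; the model names and costs are hypothetical, and a real allocator would also weigh memory, frame rates, and priorities.

```python
import heapq
from typing import Dict, List, Tuple


def assign_models(model_costs: Dict[str, float], num_npus: int) -> List[List[str]]:
    """Greedy LPT assignment of models to NPUs, balancing the total per-frame cost."""
    # Min-heap of (current load in ms, NPU index): the least-loaded NPU pops first.
    heap: List[Tuple[float, int]] = [(0.0, i) for i in range(num_npus)]
    heapq.heapify(heap)
    assignment: List[List[str]] = [[] for _ in range(num_npus)]
    # Placing the most expensive models first tightens the balance of the greedy result.
    for name, cost in sorted(model_costs.items(), key=lambda kv: kv[1], reverse=True):
        load, npu = heapq.heappop(heap)
        assignment[npu].append(name)
        heapq.heappush(heap, (load + cost, npu))
    return assignment


# Hypothetical per-frame inference costs in milliseconds.
costs = {"vehicle": 12.0, "pedestrian": 10.0, "freespace": 9.0,
         "lane": 8.0, "traffic_sign": 5.0, "traffic_light": 4.0}
plan = assign_models(costs, num_npus=2)
for npu, models in enumerate(plan):
    print(f"NPU{npu}: {models} -> {sum(costs[m] for m in models):.1f} ms")
```

Here the six models happen to split evenly at 24 ms per NPU. LPT does not guarantee an optimal split in general, but it is a common, cheap starting point.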

Driven by the demands of many sensors and AI, dedicated chips are becoming more common. Well-known chip makers such as Horizon, Cambricon, and Black Sesame, as well as some automotive companies, are researching or have already launched their own self-developed SoCs. This is a huge challenge for developers, because each chip has different underlying drivers and software. Moving to a new platform means rebuilding earlier work from scratch, so all previous effort is wasted. How, then, can past development assets be preserved? In the MCU era, the usual practice was to isolate the software layers from the hardware, so that the application layer was insulated from hardware changes.
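The MCU-era idea of isolating applications from hardware carries over to AI chips as an abstraction layer: application code targets one interface, and each chip gets its own backend. The sketch below shows the pattern only. `InferenceBackend`, `ChipABackend`, `ChipBBackend`, and `detect_objects` are hypothetical names, and the backend bodies are placeholders standing in for calls into each vendor’s SDK, which are not shown.

```python
from abc import ABC, abstractmethod
from typing import List


class InferenceBackend(ABC):
    """Chip-agnostic interface that application code depends on (hypothetical)."""

    @abstractmethod
    def load_model(self, model_name: str) -> None:
        ...

    @abstractmethod
    def infer(self, inputs: List[float]) -> List[float]:
        ...


class ChipABackend(InferenceBackend):
    """Placeholder backend; a real one would wrap chip A's vendor runtime."""

    def __init__(self) -> None:
        self.model = ""

    def load_model(self, model_name: str) -> None:
        self.model = f"chipA/{model_name}"

    def infer(self, inputs: List[float]) -> List[float]:
        return [max(0.0, x) for x in inputs]  # stand-in computation


class ChipBBackend(InferenceBackend):
    """Placeholder backend; a real one would wrap chip B's vendor runtime."""

    def __init__(self) -> None:
        self.model = ""

    def load_model(self, model_name: str) -> None:
        self.model = f"chipB/{model_name}"

    def infer(self, inputs: List[float]) -> List[float]:
        return [x if x > 0.0 else 0.0 for x in inputs]  # same result, different "implementation"


def detect_objects(backend: InferenceBackend, frame: List[float]) -> List[float]:
    """Application-layer logic: unchanged whichever chip the backend targets."""
    backend.load_model("detector")
    return backend.infer(frame)


frame = [0.5, -1.0, 2.0]
print(detect_objects(ChipABackend(), frame), detect_objects(ChipBBackend(), frame))
```

Porting to a new chip then means writing one new backend, while `detect_objects` and everything above it stays as it is.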

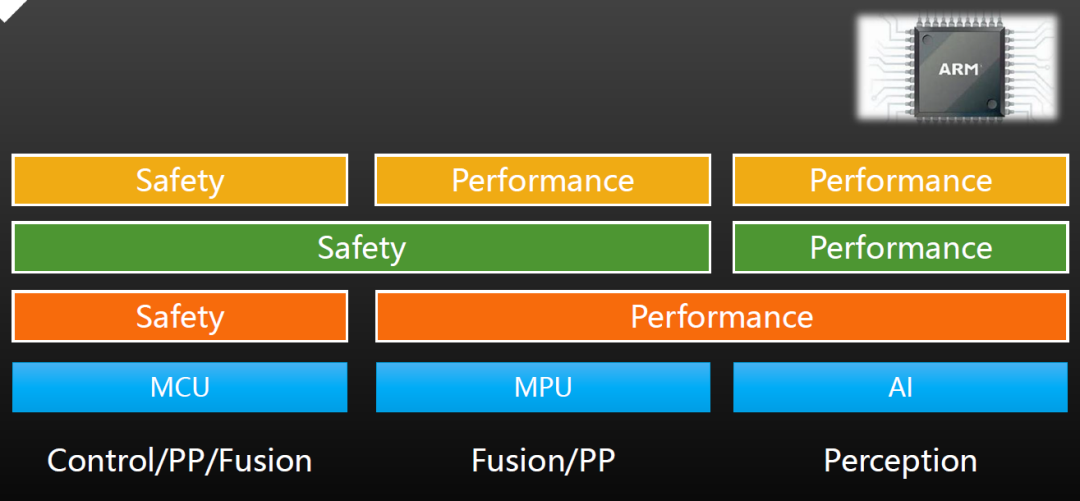

Today, however, the hardware is no longer just an MCU. It falls into three major categories according to function or computing requirements.

The first is the safety processor, which handles safety functions such as diagnostics, functional safety, and real-time control.

The second is the performance processor, which performs heavy mathematical computation: differentiation, integration, trigonometric functions, differential equations, and linear algebra. These computations are used in scenarios such as camera coordinate conversion, path planning, and environmental modeling. The performance processor is therefore something new compared with traditional MCUs.
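Camera coordinate conversion is a typical performance-processor workload. The sketch below moves a point from the vehicle frame into the camera frame and projects it with the standard pinhole model, u = fx * X / Z + cx and v = fy * Y / Z + cy. It assumes an ideal, distortion-free camera looking straight ahead along the vehicle’s x-axis, with no rotation other than the axis permutation; the mounting position and intrinsics are hypothetical values.

```python
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def vehicle_to_camera(p_vehicle: Vec3, cam_pos: Vec3) -> Vec3:
    """Vehicle frame (x forward, y left, z up) -> camera frame (X right, Y down, Z forward).

    Assumes the camera looks straight ahead with no pitch, roll, or yaw.
    """
    dx = p_vehicle[0] - cam_pos[0]
    dy = p_vehicle[1] - cam_pos[1]
    dz = p_vehicle[2] - cam_pos[2]
    return (-dy, -dz, dx)


def project_pinhole(p_cam: Vec3, fx: float, fy: float,
                    cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Ideal pinhole projection to pixel coordinates; None if the point is behind the camera."""
    X, Y, Z = p_cam
    if Z <= 0.0:
        return None
    return (fx * X / Z + cx, fy * Y / Z + cy)


# Hypothetical setup: camera 2.0 m ahead of the vehicle origin and 1.5 m above the ground,
# 1280x720 image with a 1000-pixel focal length.
cam = (2.0, 0.0, 1.5)
point_on_road = (22.0, -1.0, 0.0)  # 20 m ahead of the camera, 1 m to the right, on the ground
print(project_pinhole(vehicle_to_camera(point_on_road, cam),
                      fx=1000.0, fy=1000.0, cx=640.0, cy=360.0))  # (690.0, 435.0)
```

A production pipeline would add the full extrinsic rotation and a lens-distortion model, which multiplies the trigonometric and linear-algebra work per point.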

The third is the AI processor, the processor behind the “TOPS” (trillions of operations per second) figures everyone talks about. Vendors generally provide customized tools for it. Ideally, a deep learning model produces exactly the same results on the AI processor as on the server, but guaranteeing the traceability, consistency, and reliability of AI along the way is a huge challenge. Because numerical precision and the deployed form of the model differ between the target board and the server, it takes a great deal of know-how to make the results match.
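One common source of board-versus-server differences is quantization: many AI processors run models in int8 rather than floating point. The sketch below, assuming symmetric per-tensor int8 quantization (one common scheme among several) and invented weights and inputs, computes the same dot product both ways and checks the gap against a hypothetical 1% acceptance tolerance. Real consistency checks apply the same idea to whole-model outputs.

```python
from typing import List, Tuple


def quantize_symmetric(values: List[float], num_bits: int = 8) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: value ~= q * scale, with q in [-qmax, qmax]."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dot_float(a: List[float], b: List[float]) -> float:
    """Floating-point reference result, standing in for the server-side run."""
    return sum(x * y for x, y in zip(a, b))


def dot_int8(a: List[float], b: List[float]) -> float:
    """Quantize both operands, accumulate in integers, then dequantize."""
    qa, sa = quantize_symmetric(a)
    qb, sb = quantize_symmetric(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * sa * sb


def within_tolerance(reference: float, candidate: float, rel_tol: float) -> bool:
    return abs(reference - candidate) <= rel_tol * max(abs(reference), 1e-12)


weights = [0.5, -1.2, 0.33, 0.8]  # hypothetical values
inputs = [1.0, 0.25, -0.6, 2.0]
ref = dot_float(weights, inputs)
q8 = dot_int8(weights, inputs)
print(f"float: {ref:.5f}  int8: {q8:.5f}  ok: {within_tolerance(ref, q8, rel_tol=0.01)}")
```

The two results are close but not identical, which is why deployment flows need an explicit, traceable acceptance criterion rather than an expectation of bit-exact agreement.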

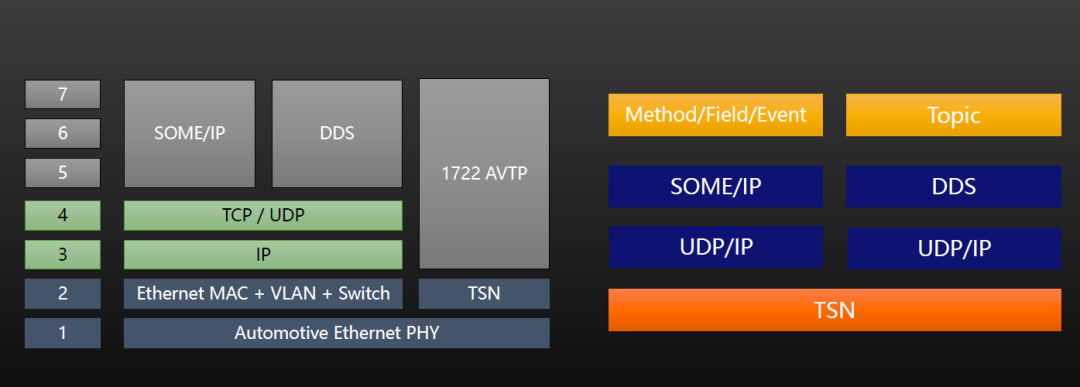

The growing number of sensors generates ever more data. The figure shows the typical seven-layer OSI (Open Systems Interconnection) model. Our understanding is that deterministic communication must be guaranteed at the bottom layers, the middle layers support an SOA (service-oriented architecture) using DDS or SOME/IP, and the top application layer parses the data. Note that adopting SOME/IP or TSN (Time-Sensitive Networking) alone does not solve intelligent car communication; it takes a three-dimensional, system-level solution spanning all the layers.
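In the middle layers, a SOME/IP message carries a fixed 16-byte big-endian header in front of its payload: Message ID (Service ID and Method ID), Length, Request ID (Client ID and Session ID), protocol version, interface version, message type, and return code. The sketch below encodes and decodes that header with Python’s `struct` module. The service and method IDs and the float payload are hypothetical, payload serialization beyond a single value is not covered, and the transport underneath (UDP or TCP over Ethernet, optionally with TSN) is out of scope.

```python
import struct
from typing import Dict, Tuple

# Service ID, Method ID, Length, Client ID, Session ID,
# Protocol Version, Interface Version, Message Type, Return Code.
SOMEIP_HEADER = struct.Struct(">HHIHHBBBB")  # 16 bytes, network byte order

PROTOCOL_VERSION = 0x01
MSG_REQUEST = 0x00
MSG_NOTIFICATION = 0x02
MSG_RESPONSE = 0x80
E_OK = 0x00


def encode_someip(service_id: int, method_id: int, client_id: int, session_id: int,
                  interface_version: int, message_type: int, payload: bytes,
                  return_code: int = E_OK) -> bytes:
    # Length covers everything after the Length field: 8 more header bytes + payload.
    length = 8 + len(payload)
    header = SOMEIP_HEADER.pack(service_id, method_id, length, client_id, session_id,
                                PROTOCOL_VERSION, interface_version, message_type, return_code)
    return header + payload


def decode_someip(message: bytes) -> Tuple[Dict[str, int], bytes]:
    fields = SOMEIP_HEADER.unpack_from(message, 0)
    names = ("service_id", "method_id", "length", "client_id", "session_id",
             "protocol_version", "interface_version", "message_type", "return_code")
    header = dict(zip(names, fields))
    payload = message[SOMEIP_HEADER.size:8 + header["length"]]  # Length is counted from byte 8
    return header, payload


# Hypothetical event: service 0x1234 publishes vehicle speed (float32, m/s) as event 0x8001.
msg = encode_someip(0x1234, 0x8001, client_id=0x0000, session_id=0x0001,
                    interface_version=1, message_type=MSG_NOTIFICATION,
                    payload=struct.pack(">f", 12.5))
header, payload = decode_someip(msg)
print(len(msg), header["length"], struct.unpack(">f", payload)[0])
```

The header alone says nothing about timing; that guarantee has to come from the layers below, which is why the text calls for a solution spanning all layers.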

Development Challenges

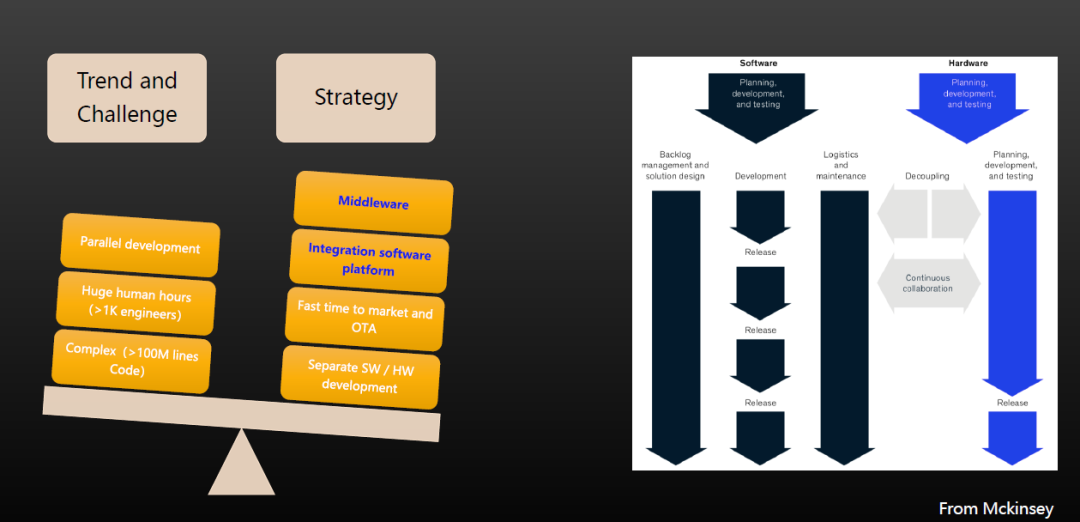

Today, consumers are accustomed to the smartphone industry’s yearly product iterations. Traditional car development cycles run two to three years, which is a huge challenge for the automotive industry. To shorten the cycle to 12 or even nine months, teams must develop in parallel. On paper, 12 engineer-months of work look the same whether one engineer spends 12 months on it or 12 engineers finish it in one month. In reality, one person developing and integrating a complex system is completely different, in difficulty and process, from splitting it into 12 subsystems, developing them in parallel, and then integrating them. Development managers need to think about how tools and methods can help them adapt to rapid product iteration.

To cope with parallel development involving huge numbers of work hours and people, the overall strategies include:

- Parallel development of hardware and software;

- Rapid market launch and iterative upgrades;

- An integration platform;

- Appropriate middleware to stitch the pieces together.

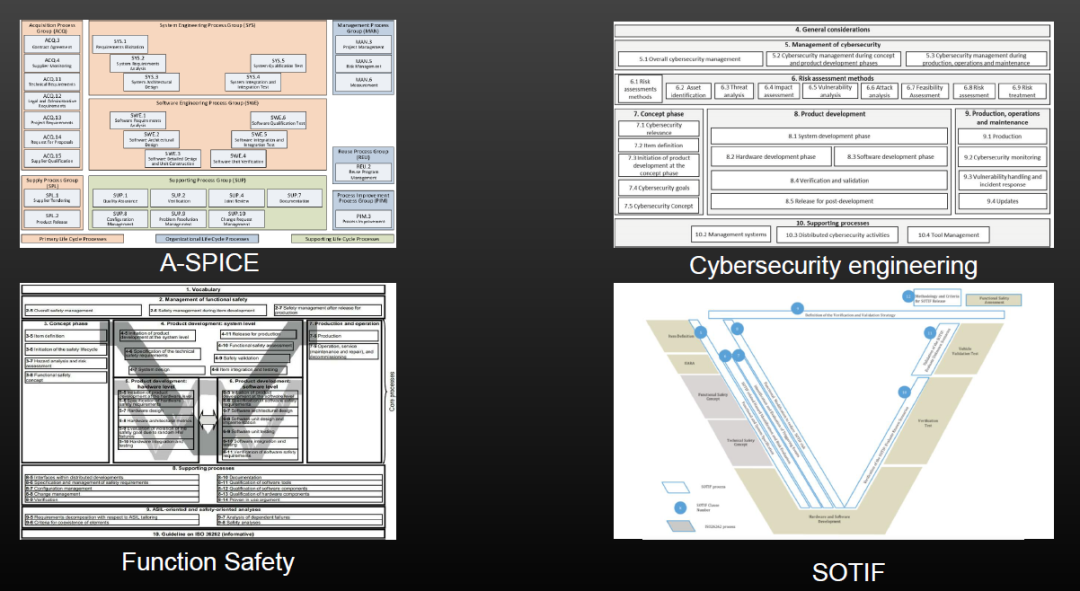

Safety and Security Challenges

Safety is the bottom line for every automotive product, and for intelligent cars it extends to functional safety, safety of the intended functionality (SOTIF), and information and network security. Among software engineers, some use open-source Linux, some use commercial QNX, and some provide software services and security; in every case, what we are really pursuing is a software engineering process. Software written by 10 people is completely different from software written by 1,000 people in organizational structure, tooling, and development practice.

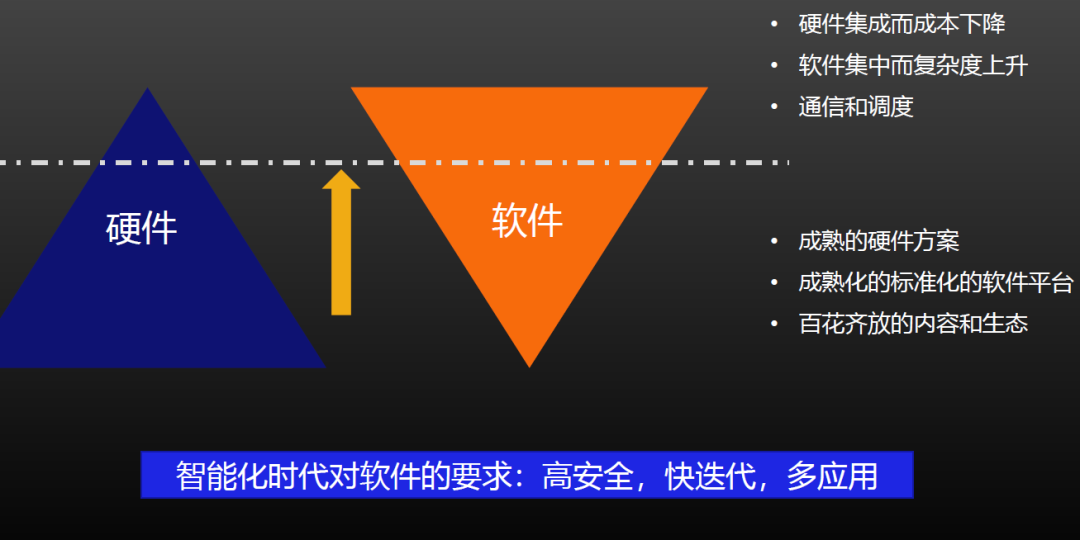

In the intelligentization of the automotive industry, hardware integration has reduced costs but increased the volume and complexity of software. Software development for intelligent driving requires mature hardware solutions and standardized software platforms. Even more crucial is an open mindset that welcomes a diverse ecosystem.

The requirements for software in the intelligent era can be summarized as high security, fast iteration, and support for multiple applications. Technologically, they translate into:

- Efficient and trustworthy AI computation

- High-bandwidth real-time communication

- High-security software design

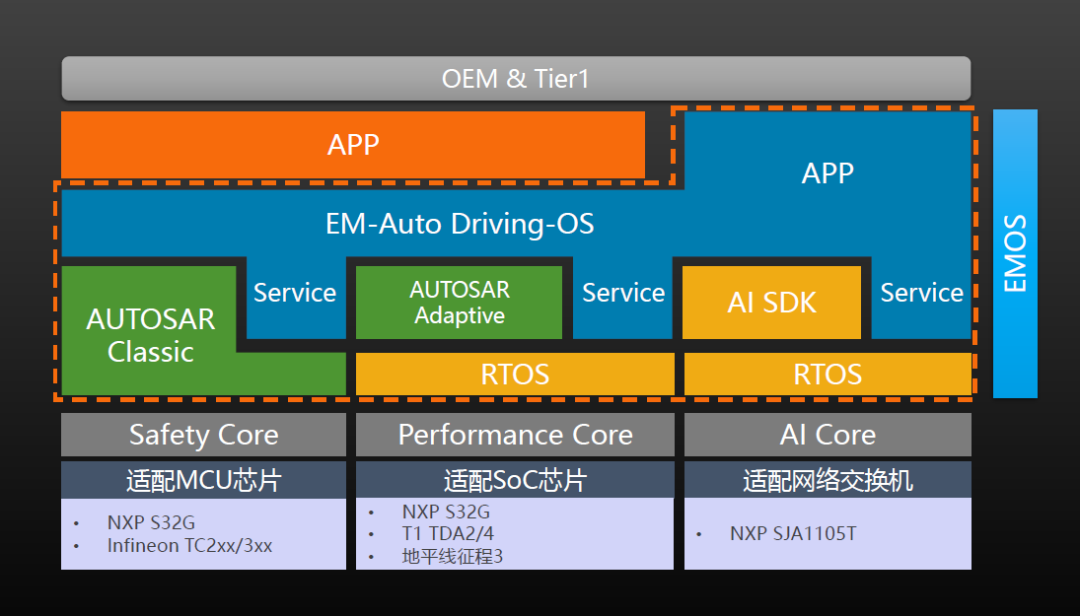

EMOS: Practical High-Performance Computing Software Platform

EMOS, a high-performance computing software platform, is one of Horizon Robotics’ core products. It offers efficient and trustworthy AI computation, high-security software design, and real-time communication. EMOS is built on deterministic communication and scheduling, which ensure adaptability across chips and let application-layer developers make full use of the available computing power. Its middleware functions are defined around intelligent driving application scenarios.
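Deterministic scheduling is often realized as a time-triggered plan computed offline: each periodic task is released at fixed offsets, and the whole pattern repeats every hyperperiod (the least common multiple of the periods), so timing is identical in every cycle. The sketch below builds such a release table. It is a generic illustration, not EMOS’s scheduler; the task names and periods are hypothetical, simultaneous releases are ordered shortest period first (rate-monotonic priority), and execution times and their feasibility checks are left out. It needs Python 3.9+ for `math.lcm`.

```python
import math
from functools import reduce
from typing import Dict, List, Tuple


def hyperperiod(periods_ms: List[int]) -> int:
    """Least common multiple of all task periods: the length of one repeating cycle."""
    return reduce(math.lcm, periods_ms)


def build_release_table(tasks: Dict[str, int]) -> List[Tuple[int, str]]:
    """Offline table of (release time in ms, task name) covering one hyperperiod."""
    h = hyperperiod(list(tasks.values()))
    table = [(t, name) for name, period in tasks.items() for t in range(0, h, period)]
    # Releases at the same instant: shorter period (higher rate-monotonic priority) first.
    table.sort(key=lambda entry: (entry[0], tasks[entry[1]], entry[1]))
    return table


# Hypothetical periodic tasks (period in ms).
tasks = {"vehicle_control": 10, "sensor_fusion": 20, "camera_perception": 50}
table = build_release_table(tasks)
print(hyperperiod(list(tasks.values())), len(table))
print(table[:5])
```

Because the table is fixed before the system starts, the same task runs at the same offset in every 100 ms cycle, which is what makes end-to-end timing analyzable.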

EMOS is a heterogeneous multi-core, cross-chip software platform that manufacturers can use for their own hardware R&D and application-layer software development. In addition, the EMOS database can help developers shorten the autonomous driving development cycle.

In summary, it has the following two main features:

1. Horizon Robotics maintains a unified software platform

- Based on mature operating systems: Linux, QNX, VxWorks

- Based on mature AUTOSAR (CP/AP) basic support services

- Provide customized support for autonomous driving functions

- Provide system applications

- Provide innovative applications

2. Manufacturers can develop their own platforms or use it directly

- Independent hardware design and development (providing reference designs)

- Independent development of customized software platforms for their own brands

- Form their own application store ecology

- Support a wide range of third-party application ecosystem development

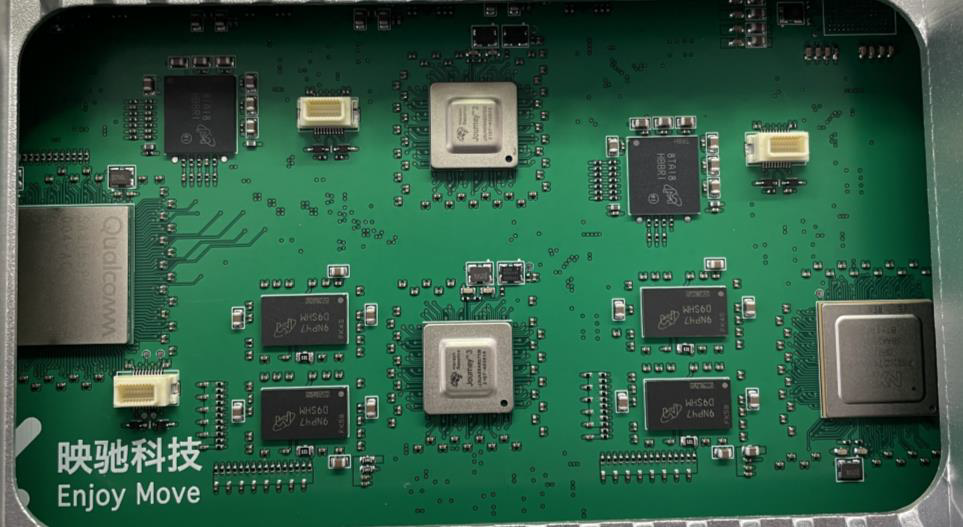

The intelligent high-performance computing platform shown above explores the convergence of three domains: intelligent driving, intelligent cockpit, and intelligent gateway. The cockpit uses Qualcomm’s SA8155P, intelligent driving uses two Horizon Journey 3 processors, and the gateway uses NXP’s S32G; together they form a high-performance computing platform spanning all three domains. EMOS deploys the TSN communication protocol stack on each chip, and the chips are interconnected over gigabit Ethernet. A reference application built on the software platform currently targets mainly L1 to L3 high- and low-speed autonomous driving. Building on this, the team is further exploring the computing and communication requirements of intelligent driving, and which characteristics and functions a software platform should provide to support it.
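The article does not say which TSN mechanisms EMOS uses. One widely used example is the IEEE 802.1Qbv time-aware shaper, in which each port repeats a gate control list that opens and closes per-traffic-class transmission gates on a fixed cycle. The sketch below looks up which gates are open at a given time; the 1 ms cycle, the 200 µs window reserved for traffic class 7, and the `open_gates` and `can_transmit` helpers are hypothetical, and guard bands and clock synchronization (handled by IEEE 802.1AS in real networks) are omitted.

```python
from typing import List, Set, Tuple

# One gate control list entry: (duration in ns, traffic classes whose gates are open).
GateControlList = List[Tuple[int, Set[int]]]


def open_gates(gcl: GateControlList, t_ns: int, base_time_ns: int = 0) -> Set[int]:
    """Return the traffic classes allowed to transmit at time t_ns."""
    cycle_ns = sum(duration for duration, _ in gcl)
    offset = (t_ns - base_time_ns) % cycle_ns  # position within the repeating cycle
    for duration, gates in gcl:
        if offset < duration:
            return gates
        offset -= duration
    raise RuntimeError("unreachable: offset always falls inside the cycle")


def can_transmit(gcl: GateControlList, traffic_class: int, t_ns: int) -> bool:
    return traffic_class in open_gates(gcl, t_ns)


# Hypothetical 1 ms cycle: 200 us reserved for time-critical class 7,
# then 800 us shared by classes 0-6.
gcl: GateControlList = [(200_000, {7}), (800_000, set(range(7)))]
print(can_transmit(gcl, 7, 150_000), can_transmit(gcl, 0, 150_000), can_transmit(gcl, 0, 500_000))
```

Because class 7 owns its window in every cycle, its frames do not queue behind bulk traffic (apart from frames already in flight, which real deployments handle with guard bands), which is the kind of bottom-layer determinism the platform relies on.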

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.