Automated Driving and DMS Technology

Author: Sun Li

Automated driving technology has become a focus of development and promotion for automakers. They keep “packaging” their driver-assistance technologies. Tesla, for example, named its system Full Self-Driving (FSD) and has tried to instill that concept in consumers. In reality, however, FSD is still a system that assists the driver.

In this fervent “marketing battle”, automakers keep “tempting” drivers to use automated driving functions. While using them, drivers may well take their hands off the steering wheel or look away from the road, which creates serious safety hazards.

Whatever automakers’ marketing claims, the latest definitions from China’s Ministry of Industry and Information Technology and from SAE International place current automated driving at Level 2 (L2). Under those definitions, human drivers remain responsible for driving. As automakers continue to promote automated driving, they must use technical means to supervise and check human driving behavior, so that vehicles always remain under human control.

Is there a technology that can “supervise” human driving behavior and prevent human drivers from relinquishing control of the vehicle due to over-reliance on automated driving technology?

It is against this backdrop that driver monitoring systems (DMS) have returned to the public eye.

What is DMS?

DMS is not a new technology. It was developed 20 years ago to address driver fatigue, distraction, and other dangerous driving behaviors, such as using a phone or eating while driving.

DMS stands for Driver Monitoring System. It detects driver fatigue, distraction, and other dangerous behaviors while driving. When it detects that the driver’s attention has drifted from the driving task, the system promptly alerts the driver.

Because DMS can save lives, laws and regulations have strongly promoted its adoption.

China’s national regulation mandating DMS in commercial vehicles is reportedly already under study. It is expected to be announced by the end of this year at the earliest. In Europe, the 2025 roadmap of the European New Car Assessment Programme (Euro NCAP) requires all new cars to be equipped with DMS from July 2022.

Mandatory installation rules are driving the adoption of DMS. French consulting firm Yole predicts that by 2025 the in-vehicle sensing segment will reach $2.6 billion, with growth rates of up to 63%. DMS will account for 73% of that revenue, and the Chinese DMS market will reach 8.2 billion yuan. According to research data from ZUSI Automotive Research, 2.37 million commercial vehicles in China were required to be equipped with DMS in the first half of 2020.

The commercial vehicle market places few demands on the user experience of DMS; it only needs to meet regulations. The passenger vehicle market, by contrast, has high user-experience requirements, so manufacturers must strike a balance between practicality and cost. This is why passenger-car makers have been more cautious about adopting DMS. Nevertheless, with the spread of assisted driving, the DMS market has grown explosively in recent years.

According to the GaoGong Intelligent Automotive Research Institute, 136,500 new passenger cars registered for insurance in China in 2020 were equipped with vision-based DMS. This figure covers joint-venture and domestic brands. In 2019 the figure was fewer than 10,000, so the number grew more than thirteenfold. Even so, DMS penetration has only just exceeded 10% and is still concentrated mainly in mid-to-high-end models.

DMS Technology Development

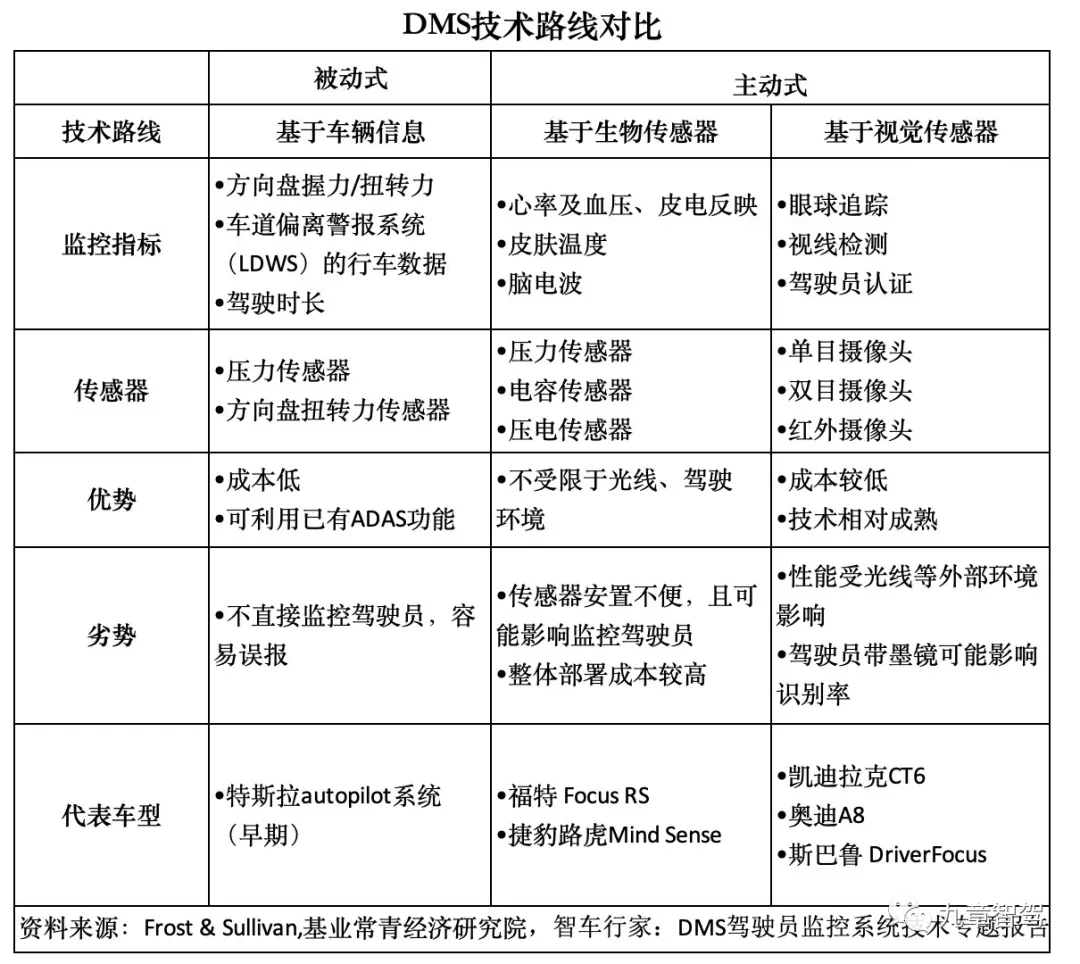

Early DMS mostly used passive technology. Passive DMS infers the driver’s state indirectly from vehicle behavior, using steering-wheel and torque sensors to detect unstable steering movement, lane departure, or erratic speed changes. These early systems relied on complex models and were expensive, so they were mostly installed in luxury models.

Besides cost, passive DMS has a high false alarm rate and a low level of intelligence, and it cannot be linked with intelligent cabins. It is also easy to fool. Some early Tesla owners, for example, wedged mineral water bottles or other objects into the steering wheel to deceive the DMS.
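The sketch below shows why a torque-only "hands on" check is easy to spoof. It assumes the check simply asks whether the absolute steering torque exceeds a threshold somewhere in a recent window. The function name and the 0.3 N·m threshold are hypothetical and are not taken from any product.

```python
def hands_on_detected(torque_nm: list[float], threshold_nm: float = 0.3) -> bool:
    """Naive passive check: report 'hands on' if any recent torque sample exceeds the threshold."""
    # torque_nm: steering torque samples from the last few seconds, in N·m (hypothetical input)
    # threshold_nm: hypothetical detection threshold
    return any(abs(t) > threshold_nm for t in torque_nm)


driver_corrections = [0.05, 0.42, -0.20, 0.10]  # small, varying inputs from real hands
hands_off = [0.00, 0.01, -0.02, 0.00]           # nobody touching the wheel
bottle_wedged = [0.50, 0.50, 0.50, 0.50]        # constant load from an object on the rim

print(hands_on_detected(driver_corrections))  # True
print(hands_on_detected(hands_off))           # False
print(hands_on_detected(bottle_wedged))       # True: the static weight passes as a hand
```

The check only looks at torque magnitude. A static weight therefore cannot be told apart from a resting hand, which is exactly the weakness the water-bottle trick exploits.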

Because of these defects, some automakers have tried to monitor drivers with biosensors. One example is capacitance sensors placed on the steering wheel or seat belt, which collect physiological data from which the driver’s current state is inferred.

The theoretical basis for this approach is that physiological indicators change when the body is fatigued or in an abnormal state. Heart rate, blood pressure, skin conductance, skin temperature, and brain waves all differ from their normal values. However, deploying biosensors is costly, and sensors that must touch or be worn by the driver give a poor user experience. Detection accuracy is also limited by how the sensor makes contact. As a result, this technology has not been widely adopted.

The industry urgently needed a new technology that is non-contact and can intelligently assess the driver’s behavior.

The rapid development of AI vision technology made such a technology possible. Active visual DMS combines infrared illumination and cameras with face recognition. It is non-contact, low-cost, and highly intelligent, and it quickly became the mainstream technical route.

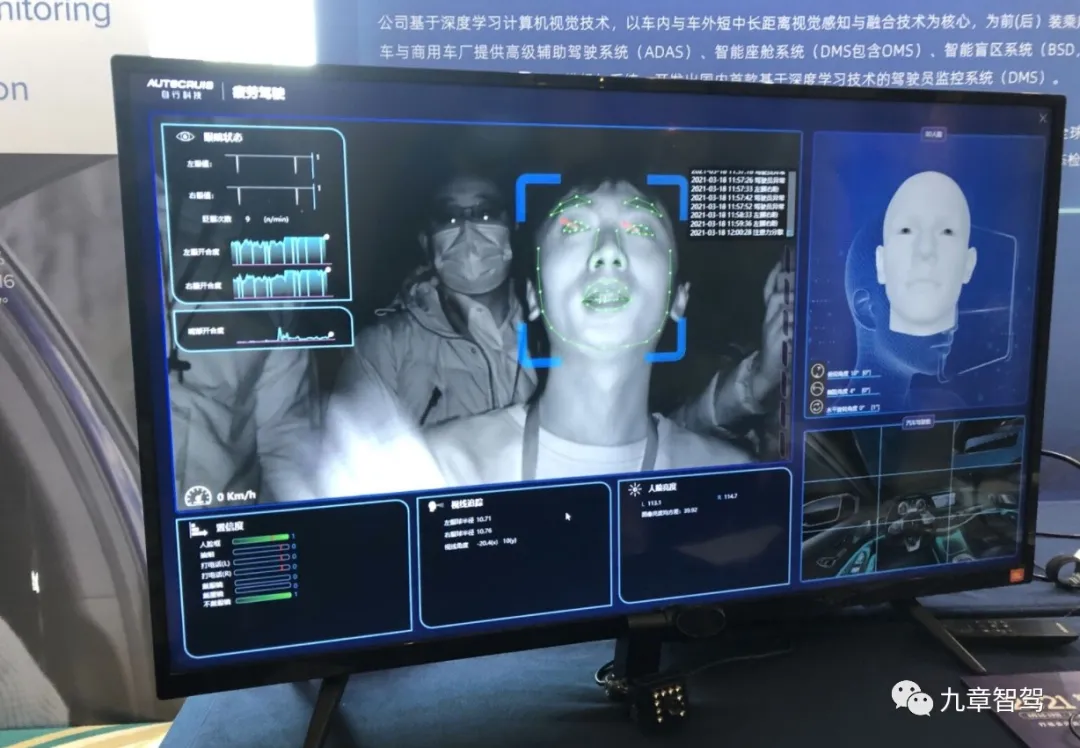

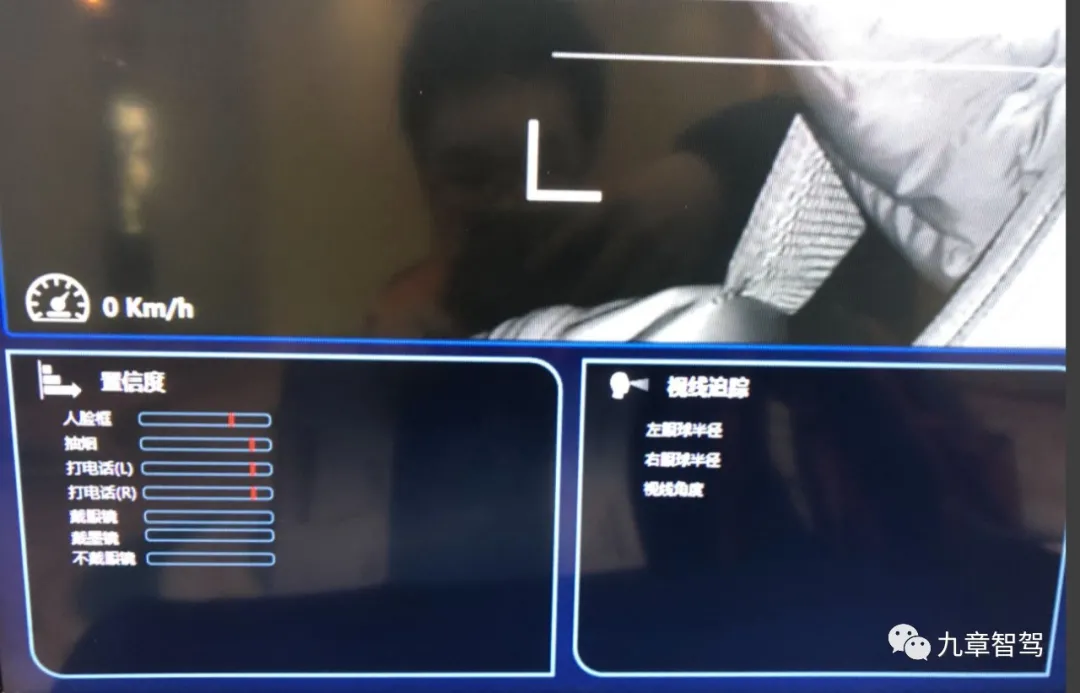

This approach uses optical and infrared cameras mounted on the steering wheel, instrument panel, or A-pillar to capture images or video of the driver. From this footage it extracts several kinds of information:

- eye state, such as eyelid closure detection, eye tracking, and gaze tracking;
- head pose, such as head position detection and head pose tracking;
- behaviors such as yawning, making phone calls, and smoking.

Deep learning algorithms then analyze this information to determine the driver’s current state.

Infrared cameras resist interference from ambient light and still deliver high-resolution images in dim conditions. This lets them cope with the large brightness changes that occur in the cabin.

Infrared light passes through most sunglass lenses, including polarized ones. To the DMS image sensor the lenses are effectively transparent, so the eyes, eyelids, blinks, and gaze vector can still be tracked normally.

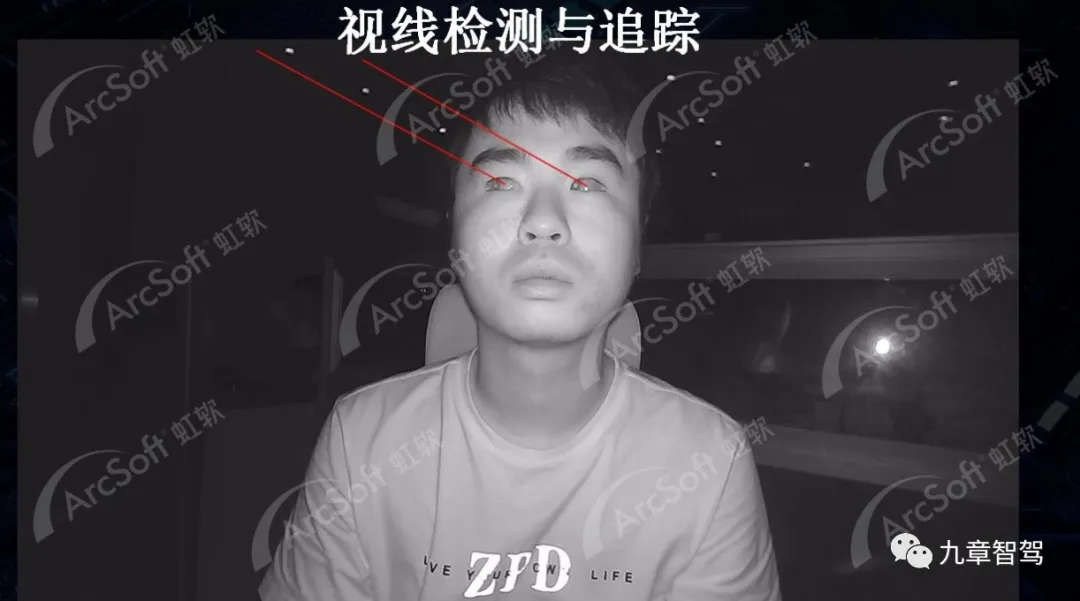

Eye detection and tracking are key to determining whether the driver is fatigued, drowsy, or distracted, and they are a core element of DMS technology. This gives infrared cameras a strong competitive advantage in the DMS field.

An infrared camera consists of an infrared light emitter, a lens that collects the light reflected from the subject, an image sensor, and other components. It generally uses 940 nm near-infrared light, which the human eye cannot perceive, so it is not affected by insufficient ambient light. Such cameras generally need a resolution of 1 to 2 MP, a field of view (FoV) above 40°, and a frame rate of 30 to 60 fps.
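A rough illustration of why the 30 to 60 fps range matters: the sketch counts how many frames fall within a blink. It assumes a blink lasts roughly 100 to 400 ms, which is a commonly cited range and not a figure from this article.

```python
def frames_during(duration_ms: int, fps: int) -> int:
    """Approximate number of frames captured during an event lasting duration_ms."""
    # Integer arithmetic avoids floating-point rounding at exact frame boundaries.
    return duration_ms * fps // 1000


for fps in (30, 60):
    short_blink = frames_during(100, fps)
    long_blink = frames_during(400, fps)
    print(f"{fps} fps: {short_blink} to {long_blink} frames per blink")
```

At 30 fps a short blink spans only about three frames. That leaves little margin to resolve the closing and reopening phases that eye-closure metrics depend on.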

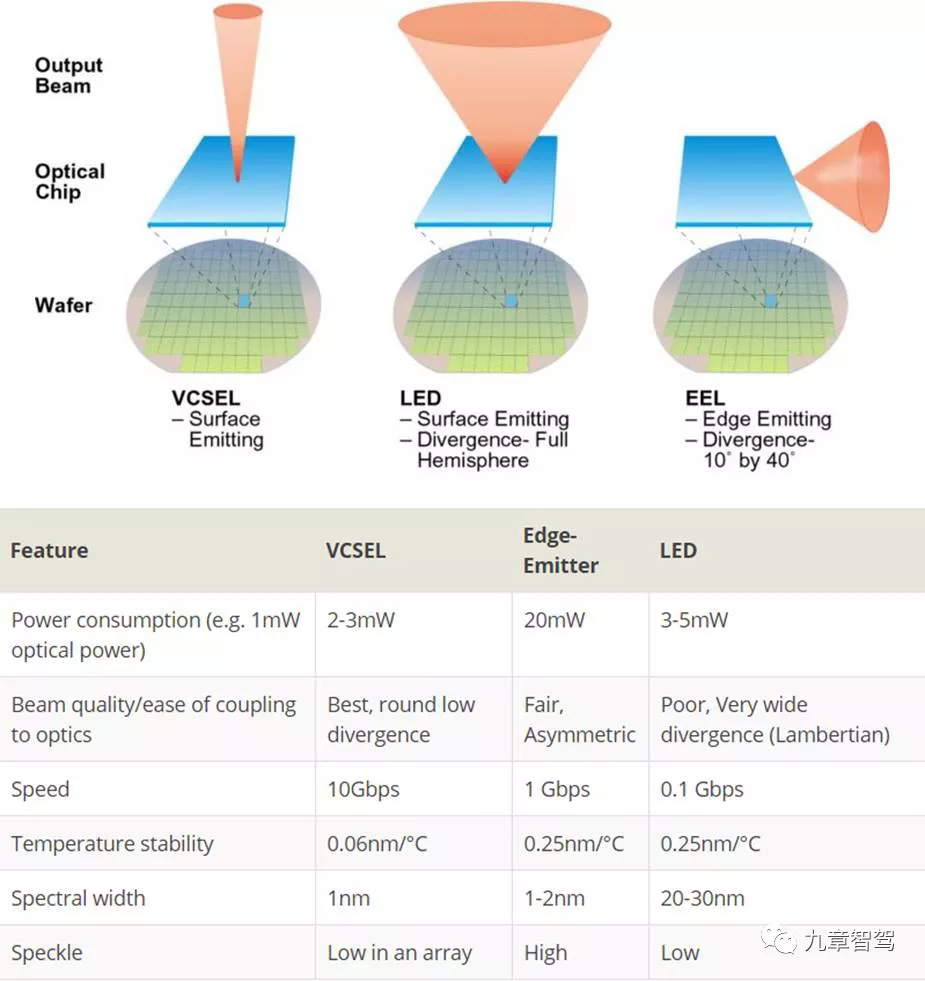

At present, there are three main types of near-infrared light sources that can provide wavelengths of 800-1000 nm: infrared LED, LD-EEL (Edge Emitting Laser Diode) and VCSEL (Vertical Cavity Surface Emitting Laser).

Infrared LEDs have become the mainstream solution thanks to their low cost, large illuminated area, ease of installation, and high industry maturity.

General Motors was one of the first automakers to introduce DMS based on infrared cameras. In 2017, Cadillac launched the Super Cruise system on the CT6. Super Cruise uses an infrared camera on the steering column to track the driver’s head position and gaze and to ensure that the driver’s eyes stay on the road ahead. The system is supplied by Joyson Safety Systems and uses Osram infrared emitters.

Near-infrared DMS is non-contact, low-cost, highly reliable, and highly intelligent. It is therefore becoming the mainstream choice in the market, and most DMS systems developed by automakers and suppliers use this technology.

Although infrared LEDs are inexpensive, their light is relatively diffuse. Automakers and suppliers are therefore exploring new light sources, such as VCSEL emitters.

VCSEL (Vertical Cavity Surface Emitting Laser) is based on gallium arsenide semiconductor materials. Compared with other light sources such as LEDs (light-emitting diodes) and LDs (laser diodes), it has several advantages:

- small size;
- a circular output spot;
- single-longitudinal-mode output;
- low threshold current;
- low price;
- easy integration into large-area arrays.

It is widely used in optical communication, optical interconnection, optical storage, and other fields.

Infrared LEDs are cheap, but they have no resonant cavity, so their beam diverges. They need more drive current to overcome losses, which raises power consumption. LEDs also cannot be modulated quickly, which limits resolution. Compared with infrared LEDs and EELs, VCSELs produce a more concentrated, symmetrical beam. They also perform better in temperature drift and cavity reflectivity, and they can be built into two-dimensional arrays.

VCSELs have advantages in electro-optical conversion efficiency, light uniformity, and temperature drift, which make them technically outstanding for facial recognition. Light source suppliers are currently developing automotive-grade VCSEL technology.

Besides improvements in infrared light sources, detection sensors are also changing. Conventional infrared cameras provide only 2D images, whereas 3D sensors such as ToF sensors are already widely used in consumer electronics.

ToF sensors first came to public attention through Apple. The iPhone X introduced VCSEL light sources and 3D sensing with Face ID, making facial recognition a standard smartphone feature. Face ID’s face mapping itself is based on structured light rather than ToF.

Time of flight (ToF) is the time an object, particle, or wave takes to travel a given distance. A ToF sensor uses a tiny laser to emit infrared light, which bounces off objects and returns to the sensor. From the time between emission and the return of the reflected light, the sensor calculates the distance between itself and the object.
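The distance relation takes only a few lines. The light travels to the object and back, so the one-way distance is half the round-trip path: d = c·Δt/2. This is a minimal sketch of direct (pulsed) ToF. Many ToF cameras instead infer the delay from the phase shift of modulated light, which this example does not model.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance from the round-trip travel time of a light pulse."""
    # The pulse covers the sensor-to-object distance twice, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_s(distance_m: float) -> float:
    """Inverse relation: round-trip time for an object at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


# A face about 0.6 m from the sensor returns light after roughly 4 nanoseconds.
print(f"{round_trip_s(0.6) * 1e9:.2f} ns")
```

The nanosecond scale shows why ToF sensors need very fast timing electronics.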


The biggest advantage of ToF sensors is that they can construct a 3D “depth map” of the driver’s head, providing precise analysis of the driver’s unique facial features, thereby improving the accuracy of facial recognition, gesture recognition, and occupancy/positioning algorithms.
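A depth map becomes 3D geometry through back-projection. The sketch below assumes an ideal pinhole camera with hypothetical intrinsics: fx and fy (focal lengths in pixels) and cx and cy (principal point). It also assumes each depth value is the distance along the optical axis (Z). Sensors that report radial distance would need a conversion first.

```python
import numpy as np


def depth_to_points(depth_m: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project an H x W depth map (metres, Z along the optical axis) to H x W x 3 camera-frame points."""
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # u: column index, v: row index, both H x W
    z = depth_m.astype(float)
    x = (u - cx) * z / fx                            # pinhole model: u = fx * X / Z + cx
    y = (v - cy) * z / fy                            # pinhole model: v = fy * Y / Z + cy
    return np.stack([x, y, z], axis=-1)


# Tiny synthetic example: a flat surface 0.6 m away seen by a 4 x 4 sensor.
depth = np.full((4, 4), 0.6)
points = depth_to_points(depth, fx=500.0, fy=500.0, cx=1.5, cy=1.5)
print(points.shape)
```

Once the face is available as 3D points, head position and pose can be estimated geometrically instead of being inferred from a flat image.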

Compared with 2D vision sensor solutions, ToF solutions are more expensive and have slightly lower recognition resolution, but they provide rich 3D perception.

ToF technology is widely used in consumer electronics, especially smartphones. Its industrial and manufacturing maturity is therefore high, and its cost is falling rapidly.

However, ToF technology still has three shortcomings. Its detection range is short, its cost is currently high, and its reliability must pass automotive-grade certification. Companies in China and abroad are already working on that certification; Judo Technology, for example, already has a project in mass production.

According to Yole, DMS with 3D perception will gradually appear in cars from 2022, and adoption of 3D cameras will take off in 2024. The number of DMS units installed in new cars is estimated to rise from 4.5 million in 2020 to 57.6 million in 2025. Of these, 80% will use 2D camera solutions and 20% will use 3D camera solutions.
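The Yole forecast implies a steep growth rate. The check below uses only the figures quoted in the paragraph above.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns start into end over the given number of years."""
    return (end / start) ** (1.0 / years) - 1.0


units_2020 = 4.5e6   # DMS units in new cars, 2020
units_2025 = 57.6e6  # forecast for 2025
growth = cagr(units_2020, units_2025, years=5)

units_2d = units_2025 * 0.8  # 80% 2D camera solutions
units_3d = units_2025 * 0.2  # 20% 3D camera solutions

print(f"implied CAGR: {growth:.1%}")
print(f"2025: {units_2d / 1e6:.2f}M 2D, {units_3d / 1e6:.2f}M 3D")
```

This works out to roughly 66.5% growth per year. The 2025 split is about 46.1 million 2D units and 11.5 million 3D units.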

Besides ToF, some companies are trying to apply millimeter-wave radar to in-cabin sensing. Millimeter-wave radar is unaffected by lighting conditions and does not need to capture occupants’ faces, which protects privacy.

Traditional millimeter-wave radar companies such as Bosch and Continental plan to bring millimeter-wave radar into the cabin. Chinese companies are active too. At the 2021 Shanghai Auto Show, Chuhang Technology launched a 60 GHz automotive-grade millimeter-wave radar that can detect the driver’s heartbeat and breathing. This helps prevent dangerous driving caused by sudden physical discomfort. The radar can also detect rear-seat passengers or pets, helping prevent children and pets from being left behind in the car.
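The article does not describe how such radars extract heartbeat and breathing. One common approach treats the chest displacement recovered from the radar signal as a time series. It then picks the strongest frequency within a physiological band. The band limits and the synthetic signal below are hypothetical illustration values.

```python
import numpy as np


def dominant_rate_per_min(signal, fs_hz: float, band_hz: tuple[float, float]) -> float:
    """Estimate a periodic rate (cycles per minute) from the strongest FFT peak within band_hz."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                                 # remove the static (DC) offset
    spectrum = np.abs(np.fft.rfft(x))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs_hz)
    lo, hi = band_hz
    in_band = (freqs >= lo) & (freqs <= hi)
    peak_hz = freqs[in_band][np.argmax(spectrum[in_band])]
    return float(peak_hz * 60.0)


# Synthetic 60 s chest-displacement trace at 20 Hz:
# breathing at 0.25 Hz plus a weaker heartbeat component at 1.2 Hz.
fs = 20.0
t = np.arange(int(60 * fs)) / fs
trace = 1.0 * np.sin(2 * np.pi * 0.25 * t) + 0.1 * np.sin(2 * np.pi * 1.2 * t)

print(dominant_rate_per_min(trace, fs, (0.1, 0.5)))  # breathing, breaths per minute
print(dominant_rate_per_min(trace, fs, (0.8, 2.0)))  # heartbeat, beats per minute
```

A 60-second window gives a frequency resolution of 1/60 Hz, or one cycle per minute. Shorter windows react faster but estimate rates more coarsely.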

4D millimeter-wave radar is also evolving and is expected to be applied in the cabin. In March 2021, PathPartner, a product development and engineering company based outside China, announced that it had developed DMS and OMS (occupant monitoring system) solutions. These solutions fuse 4D imaging radar with cameras.

Because of its detection principle, millimeter-wave radar cannot detect the driver’s facial expressions or eye movements, which makes it hard to detect fatigue and distraction. Its rising cost also limits its adoption in the cabin. Millimeter-wave radar can therefore serve as a supplement to vision-based solutions.

Compared with the other approaches, vision-based DMS is considered the best solution for detecting distraction, in terms of both cost and functionality. Apart from vision, almost no other technology can achieve eye tracking and distraction detection.

Challenges of DMS Technology

Mandatory DMS regulations set a minimum level of functionality, which settles whether a vehicle has DMS at all. The usability, user-friendliness, and accuracy of those functions, however, still require continuous investment from manufacturers.

As an “active safety technology,” DMS faces its biggest challenge in accurately detecting the mental state of human drivers. False positives and false negatives pull against each other. A system tuned to miss fewer real events raises more false alarms, and users who are repeatedly disturbed by false detections may simply switch the function off.
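Sweeping an alert threshold over detector scores makes this trade-off concrete. The sketch below uses made-up scores and labels; a real evaluation would use many hours of labeled driving data.

```python
def error_rates(scores: list[float], labels: list[bool], threshold: float) -> tuple[float, float]:
    """Return (false-alarm rate, miss rate) for an alert that fires when score >= threshold."""
    # labels[i] is True when the driver really was drowsy or distracted in sample i.
    false_alarms = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    misses = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    negatives = labels.count(False)
    positives = labels.count(True)
    return false_alarms / negatives, misses / positives


# Hypothetical detector scores for four alert samples and four impaired samples.
scores = [0.10, 0.30, 0.35, 0.60, 0.40, 0.70, 0.80, 0.90]
labels = [False, False, False, False, True, True, True, True]

for threshold in (0.3, 0.5, 0.75):
    fa, miss = error_rates(scores, labels, threshold)
    print(f"threshold {threshold}: false alarms {fa:.0%}, misses {miss:.0%}")
```

Lowering the threshold catches every impaired sample but raises false alarms to 75%. Raising it removes false alarms but misses half of the real events.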

DMS is currently based mainly on facial recognition with visual sensors, which are strongly affected by the environment. In addition, insufficient chip computing power can make processing too slow for real-time use.

Lighting varies with the weather and time of day, which changes the brightness of the image. It can also cause the “yin-yang face” phenomenon, where one side of the face is brightly lit and the other is in shadow. Occlusion and jitter, together with shifts in signal-to-noise ratio and contrast, add further difficulty. All of this challenges the robustness of the system.

DMS also places high demands on chips. First, they must be automotive-grade and pass reliability certification under specified temperatures and other operating conditions. Second, they need high computing power.

DMS receives and transmits data through a single control unit. That unit needs substantial computing power to process and analyze the input and then send the results to the display. DMS must also be integrated with the vehicle’s electrical/electronic architecture, ADAS functions, intelligent cabin, and displays.

For example, accurately recognizing the three-dimensional, curved surface of the face requires higher resolution, added 3D information, and a model optimized after data collection. Keeping the system from stalling also requires a higher frame rate. Together, these greatly increase the computing power required.

Apart from the physical limitations of sensors, our understanding of the mechanisms behind human physiological states directly affects the accuracy of perception models. However, current human knowledge of fatigue and drowsiness is still insufficient.

The core of DMS is to detect the driver’s mental state, especially whether they are fatigued, distracted, or sleepy. So how can fatigue and distraction be measured, and how can their degree be quantified?

Physiological research on human fatigue shows that fatigue is related to body temperature, skin resistance, eye movement, respiratory rate, heart rate, and brain activity. Physiologically, the most effective method is to measure pulse and heart rate variability (HRV) directly with biosensors. However, installing large numbers of biosensors is not feasible in a car.
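The sketch below shows two basic quantities derived from the intervals between heartbeats (RR intervals): mean heart rate and RMSSD. RMSSD is one common time-domain HRV metric. The article does not say which HRV metric a DMS would use, and the sample intervals are made up.

```python
import math


def mean_heart_rate_bpm(rr_ms: list[float]) -> float:
    """Mean heart rate from RR intervals given in milliseconds."""
    return 60_000.0 / (sum(rr_ms) / len(rr_ms))


def rmssd_ms(rr_ms: list[float]) -> float:
    """RMSSD: root mean square of successive differences between RR intervals (ms)."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


rr = [800, 810, 790, 805]  # made-up RR intervals (ms)
print(f"{mean_heart_rate_bpm(rr):.1f} bpm, RMSSD {rmssd_ms(rr):.1f} ms")
```

No fatigue threshold is implied here. Mapping such values to fatigue levels would need the kind of calibration data discussed later in this section.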

Visual cameras can only extract facial information, such as:

- eye state: eye opening/closing time, frequency, and angle;
- eyeball state: gaze direction, eyeball rotation rate, and dwell time;
- mouth state: yawning, mouth opening degree, and mouth opening frequency;
- head state: head pose, nodding frequency, and nodding duration.

Algorithms extract and quantify these key physiological features. For example, facial landmark detection locates 68 key points on the face, from which important measurements of the face, eyes, and mouth can be obtained.
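The article does not say which quantities vendors derive from the landmarks. One widely used option, swapped in here for illustration, is the eye aspect ratio (EAR). EAR compares the vertical eyelid distances with the eye width, so it drops toward zero as the eye closes. The sketch assumes six landmarks per eye, ordered p1 to p6 with p1 and p4 at the corners. It also assumes the common 0-based 68-point layout, in which the eyes occupy indices 36 to 41 and 42 to 47.

```python
import math

Point = tuple[float, float]

# Eye landmark indices in the common 68-point layout (0-based).
RIGHT_EYE = range(36, 42)
LEFT_EYE = range(42, 48)


def eye_aspect_ratio(eye: list[Point]) -> float:
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 * |p1 - p4|) for six eye landmarks p1..p6."""
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two eyelid-to-eyelid distances
    horizontal = math.dist(p1, p4)                    # corner-to-corner eye width
    return vertical / (2.0 * horizontal)


def both_eyes_ear(landmarks: list[Point]) -> float:
    """Average EAR of both eyes from a full 68-point landmark list."""
    right = [landmarks[i] for i in RIGHT_EYE]
    left = [landmarks[i] for i in LEFT_EYE]
    return (eye_aspect_ratio(right) + eye_aspect_ratio(left)) / 2.0


open_eye = [(0.0, 0.0), (1.0, 1.0), (2.0, 1.0), (3.0, 0.0), (2.0, -1.0), (1.0, -1.0)]
closed_eye = [(0.0, 0.0), (1.0, 0.1), (2.0, 0.1), (3.0, 0.0), (2.0, -0.1), (1.0, -0.1)]
print(round(eye_aspect_ratio(open_eye), 3), round(eye_aspect_ratio(closed_eye), 3))
```

EAR is a ratio, so it does not depend on how large the face appears in the image. That helps when the driver moves closer to or farther from the camera.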

Algorithm engineers need to build physiological models of fatigue, distraction, and drowsiness from these facial indicators and parameters. They then set physiological thresholds and confidence levels and define trigger conditions.
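One simple way to turn a per-frame indicator into a trigger condition is to require it to stay past a threshold for a minimum number of consecutive frames. Frames the vision model is unsure about are skipped. The class below is a hypothetical sketch; its threshold, frame count, and confidence cut-off are illustrative values, not values from the article.

```python
class SustainedThresholdTrigger:
    """Fires when a metric stays past a threshold for a minimum number of consecutive frames."""

    def __init__(self, threshold: float, min_frames: int, below: bool = True):
        self.threshold = threshold    # hypothetical physiological threshold
        self.min_frames = min_frames  # required duration expressed in frames at the camera's fps
        self.below = below            # True: trigger when the metric falls below the threshold
        self._count = 0

    def update(self, value: float, confidence: float = 1.0, min_confidence: float = 0.5) -> bool:
        # A low-confidence frame (e.g. occluded eyes) resets the run instead of counting toward it.
        if confidence < min_confidence:
            self._count = 0
            return False
        past = value < self.threshold if self.below else value > self.threshold
        self._count = self._count + 1 if past else 0
        return self._count >= self.min_frames


# Example: alert when an eye-openness score stays below 0.2 for 3 consecutive frames.
trigger = SustainedThresholdTrigger(threshold=0.2, min_frames=3)
print([trigger.update(v) for v in [0.1, 0.1, 0.1, 0.3, 0.1]])
```

Requiring several consecutive frames filters out single-frame glitches and normal blinks. The cost is a short delay before a genuine event raises an alert.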

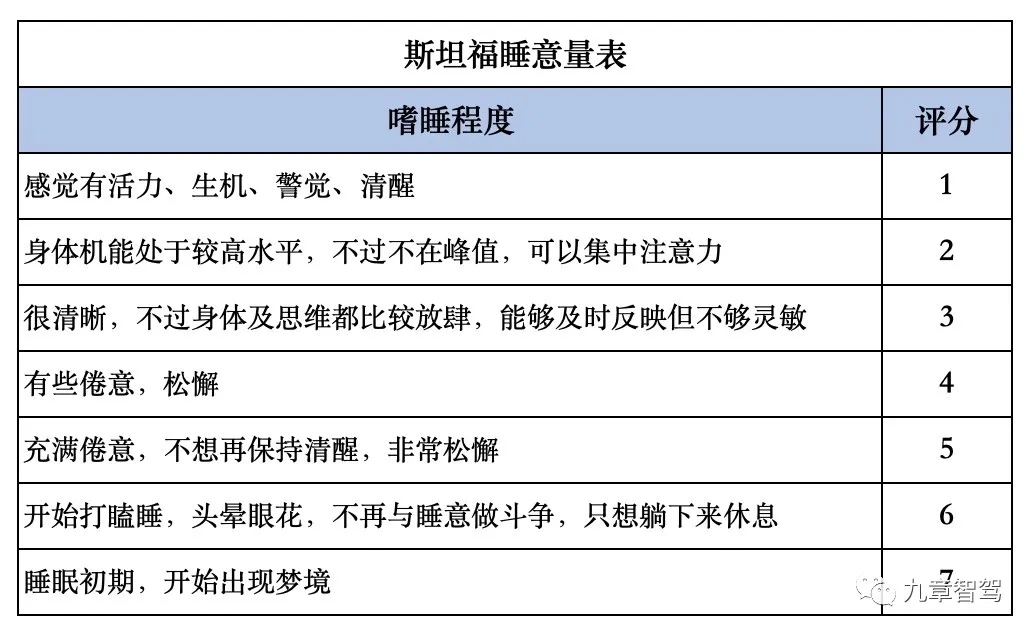

To judge whether someone is fatigued or drowsy and to quantify the degree of drowsiness, the seven-level Stanford Sleepiness Scale is generally used.

Even with the Stanford scale’s seven levels, assessing fatigue in practice still involves some subjective judgment. R&D engineers perform statistical analysis of key physiological indicators, using accumulated databases and simulation experiments with human subjects. From this analysis they determine the boundaries between alertness and slight, moderate, and deep fatigue, which they use to optimize the model’s structural parameters.

Among publicly available algorithms, DMS products often use or draw on PERCLOS P80 to judge the driver’s level of fatigue. PERCLOS P80 is an evaluation method from the US National Highway Traffic Safety Administration (NHTSA).

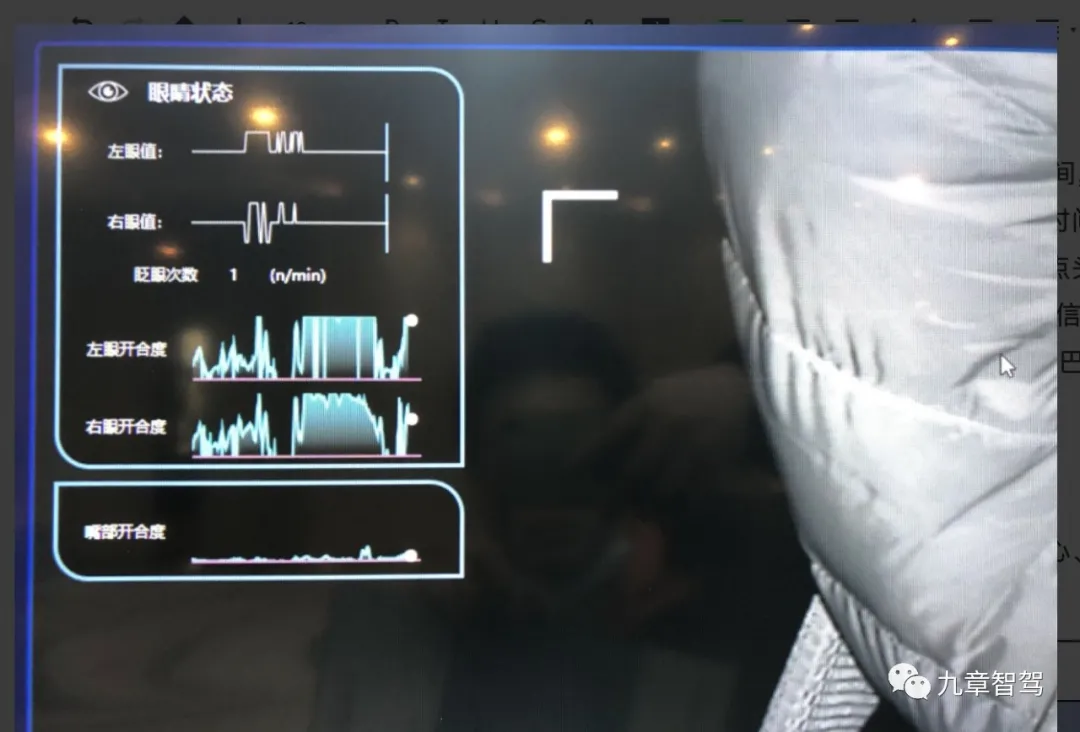

PERCLOS (Percent Eye Closure) is the percentage of time within a given period during which the eyes are closed. In P80, the eye counts as closed when the eyelid covers more than 80% of the pupil. PERCLOS P80 is widely regarded as the indicator that best reflects a person’s level of fatigue.
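Over a time window, PERCLOS P80 reduces to counting frames. The sketch below assumes an upstream eye-state model supplies a per-frame eyelid-closure ratio (0 = fully open, 1 = fully closed); that input is hypothetical. The article does not give a window length or fatigue threshold, so none is assumed here.

```python
def perclos(closure_ratios: list[float], closed_above: float = 0.8) -> float:
    """Fraction of frames in the window where the eyelid covers more than closed_above of the pupil."""
    if not closure_ratios:
        raise ValueError("empty window")
    closed = sum(1 for c in closure_ratios if c > closed_above)
    return closed / len(closure_ratios)


# Hypothetical per-frame eyelid-closure ratios (0 = fully open, 1 = fully closed).
window = [0.0, 0.9, 0.85, 0.5, 1.0]
print(f"PERCLOS P80 = {perclos(window):.0%}")
```

In practice the window slides forward frame by frame. The resulting value is then compared with a calibrated fatigue threshold.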

The calculation uses four times, all measured from the moment the eyes are fully open. t1 is when the eyes are 20% closed, and t2 is when they are 80% closed. As the eyes reopen, t3 is when they are next 20% open, and t4 is when they are next 80% open.

From the measured values of t1 to t4, the PERCLOS value f is calculated as f = (t3 - t2) / (t4 - t1) × 100%. The fatigue state is then judged against a preset threshold. The PERCLOS method offers high detection accuracy, low cost, and little interference, and it has gradually become the mainstream method in the industry.
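The per-blink calculation is sketched below, assuming the commonly cited form f = (t3 - t2) / (t4 - t1). The numerator is the time the eyes stay at least 80% closed. The denominator is the whole span from 20% closed until the eyes are 80% open again. The timings are made up.

```python
def perclos_f(t1: float, t2: float, t3: float, t4: float) -> float:
    """Per-blink PERCLOS value f = (t3 - t2) / (t4 - t1), returned as a fraction."""
    # t2..t3: eyes at least 80% closed; t1..t4: from 20% closed until reopened to 80% open.
    if not (t1 < t2 <= t3 < t4):
        raise ValueError("expected t1 < t2 <= t3 < t4")
    return (t3 - t2) / (t4 - t1)


# Hypothetical blink timings in milliseconds, measured from the fully open state.
print(f"f = {perclos_f(0, 50, 250, 300):.0%}")
```

A long closed phase relative to the whole blink, as in this example, pushes f up. That is the slow, heavy-lidded blinking associated with drowsiness.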

In addition to detecting fatigue by opening and closing the eyes, it is also necessary to detect whether the driver is distracted and whether the driver’s line of sight is off the road, such as turning to observe the rearview mirror or looking at the central control panel. This requires a high-resolution camera.

Algorithms and models also need to track the eyeballs and extract the direction, angle, and duration of the driver’s gaze more accurately in order to make more reliable judgments.
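Gaze-based distraction checks measure how long the gaze stays outside a "road" region. The sketch below converts gaze vectors to yaw and pitch angles and reports the longest continuous off-road stretch. Several simplifications are hypothetical: the gaze vectors are in a frame with z pointing straight down the road, and the road region is a fixed ±20° yaw by ±10° pitch box. Real systems calibrate the camera-to-road geometry per vehicle.

```python
import math


def gaze_angles_deg(gx: float, gy: float, gz: float) -> tuple[float, float]:
    """Yaw and pitch (degrees) of a gaze vector in a frame where z points down the road."""
    yaw = math.degrees(math.atan2(gx, gz))
    pitch = math.degrees(math.atan2(gy, math.hypot(gx, gz)))
    return yaw, pitch


def off_road_seconds(gaze_vectors, fps: float, yaw_limit: float = 20.0, pitch_limit: float = 10.0) -> float:
    """Longest continuous time (s) the gaze falls outside a hypothetical road region."""
    longest = current = 0
    for g in gaze_vectors:
        yaw, pitch = gaze_angles_deg(*g)
        if abs(yaw) > yaw_limit or abs(pitch) > pitch_limit:
            current += 1
            longest = max(longest, current)
        else:
            current = 0
    return longest / fps


# Hypothetical 10 fps sequence: 5 frames looking ahead, 12 glancing 45° to the side, 3 ahead again.
ahead, side = (0.0, 0.0, 1.0), (1.0, 0.0, 1.0)
frames = [ahead] * 5 + [side] * 12 + [ahead] * 3
print(off_road_seconds(frames, fps=10))
```

Measuring the longest continuous stretch, rather than the total time off-road, separates one long glance away from several brief mirror checks.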

Gaze tracking generally first locates the eyes and the contours of the eyeballs, then locates the pupils. With an IR camera, LED illumination produces corneal reflections, so algorithm and camera companies need to compensate for this effect.

Besides physiological fatigue and drowsiness, emotional state also affects driving safety. People in negative emotional states such as low mood or anger, for example, are prone to more dangerous driving. If a DMS algorithm can recognize emotional states, it can issue reminders. This requires more accurate judgment of the relationship between facial expressions and emotions.

Beyond the difficulty of quantifying fatigue, face detection faces other challenges. These include in-plane and out-of-plane rotation of the face, makeup, glasses covering the eyes, changing facial expressions, and masks. Masks covering the mouth and glasses covering the eyes, in particular, can reduce the recognition rate of visual cues.

Beyond the limits imposed by our understanding of physiology, neural networks have limitations of their own. A major one is that a model’s effectiveness depends on training with large amounts of data, which makes data a critical factor. How to obtain real-world data at low cost has therefore become a focus for major algorithm vendors.

Algorithm vendors spend considerable manpower and money collecting footage of faces simulating fatigue, drowsiness, and distraction to build their training libraries.

However, obtaining real data on fatigue and drowsiness while driving poses great difficulty. Although some data and training sets can be obtained through simulated human experiments, there are still some differences compared to real driving scenarios.

Organizing people solely to produce training data is time-consuming and laborious. The most economical and efficient approach is to upload real detection data from mass-produced products through the cloud.

Therefore, if the product can be put into use as early as possible and its data can be transmitted back to the algorithm provider, the algorithm manufacturer can quickly iterate its algorithms.

Collecting real facial data from production cars inevitably raises privacy issues.

On the one hand, automakers strictly control user data. Algorithm suppliers must persuade automakers or reach agreements with them before they can obtain real in-car data and upload it to the cloud for training.

On the other hand, users are extremely sensitive about facial data because it involves personal identity information. They are reluctant to upload such highly sensitive data to the cloud.

To obtain user data, automakers need to make commitments to users. They can promise to keep the data in the car, or to obtain the user’s consent before uploading it to the cloud. Automakers also need technical measures, such as de-identifying facial and identity information and uploading only the de-identified data, to prevent identity information from leaking.
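The sketch below illustrates one such measure. It strips fields that could identify the driver and replaces the vehicle identifier with a keyed pseudonym before upload. The record schema and field names are hypothetical. Pseudonymization alone is not full anonymization, because derived features can still count as personal data under some regulations.

```python
import hashlib
import hmac

# Fields that could identify the driver or vehicle; a hypothetical schema for illustration.
IDENTIFYING_FIELDS = {"face_image", "face_embedding", "vin", "gps_track"}


def deidentify_event(event: dict, secret_key: bytes) -> dict:
    """Drop identifying fields and replace the VIN with a keyed pseudonym before upload."""
    cleaned = {k: v for k, v in event.items() if k not in IDENTIFYING_FIELDS}
    if "vin" in event:
        # HMAC-SHA256: the pseudonym is stable for one key but cannot be recomputed without it.
        cleaned["vehicle_pseudonym"] = hmac.new(
            secret_key, event["vin"].encode("utf-8"), hashlib.sha256
        ).hexdigest()
    return cleaned


event = {"vin": "TESTVIN0000000001", "face_image": b"\x00", "perclos": 0.31, "alert": "drowsy"}
print(sorted(deidentify_event(event, b"example-key")))
```

A keyed hash rather than a plain hash matters here. VINs follow a known format, so an unkeyed hash could be reversed by hashing candidate VINs.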

In May of this year, Tesla enabled the in-cabin driver monitoring camera through an OTA update. When FSD is turned on, the camera monitors the driver’s state. Tesla had previously activated the in-car camera without seeking owners’ consent, which offended and angered owners.

This time, Tesla promised that the camera data would be saved in the car and the system would not transmit the data back to the company unless the user enabled data sharing. At the same time, Tesla emphasized that users can change their data sharing settings by entering “Controls-Safety & Security”.

The issue of lawful acquisition and use of data will be a problem that DMS must face.

DMS Industry Pattern

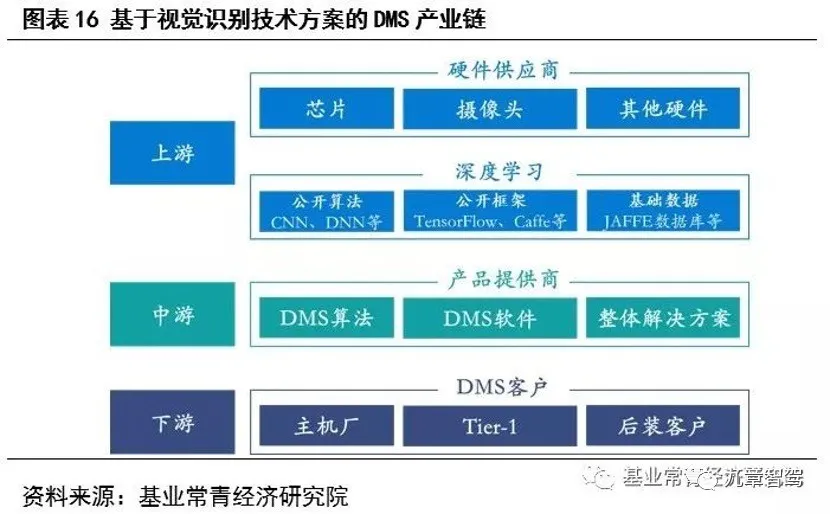

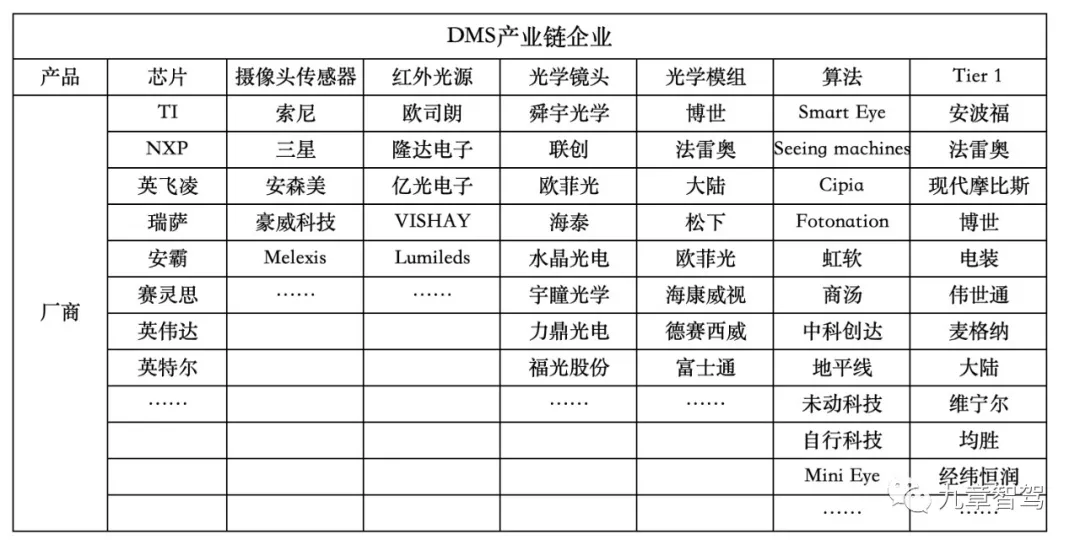

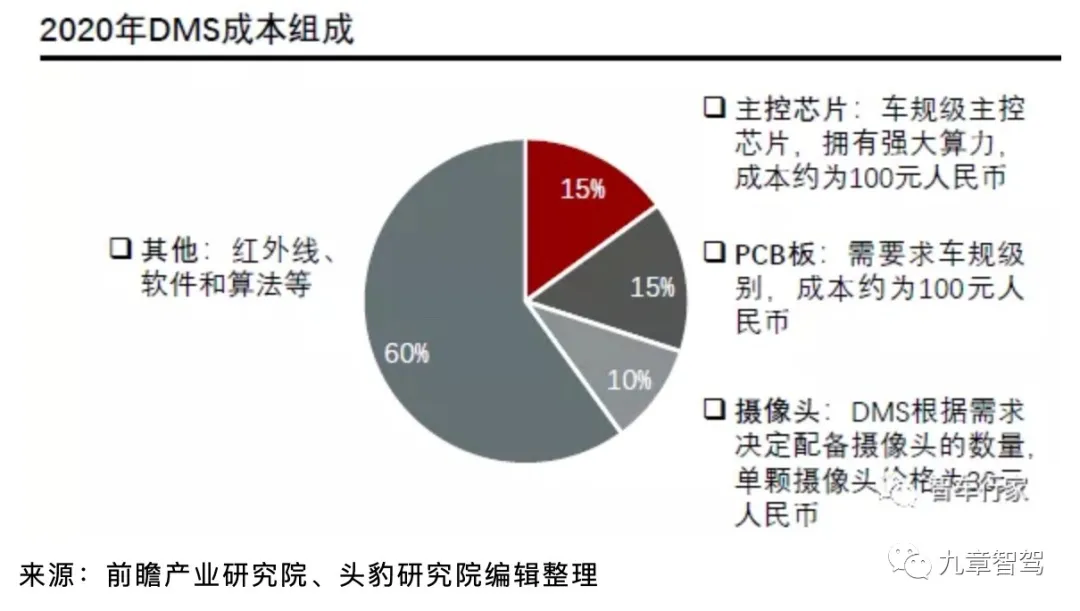

The key technologies involved in the DMS industry chain are: chips, sensors (cameras), DMS algorithms, and solution integration.

In the future, DMS will be factory-installed (front-loaded), and as an in-vehicle product it must meet regulatory requirements. Hardware suppliers are therefore mostly companies already in the automotive supply chain.


Upstream, chip technology has high barriers to entry, and market concentration is high. Traditional chip giants such as NXP, TI, Xilinx, Renesas, Intel, and NVIDIA hold most of the market, and these manufacturers have relatively mature computing platform solutions.

Examples include:

- NXP’s S32V and i.MX8 series;
- Ambarella’s H22 and H32;
- Intel’s Atom;
- OmniVision’s OA8000;
- Xilinx’s Zynq-7000;
- TI’s TDA2P/TDA3x/DRA726;
- Renesas’ R-Car E3/M3/H3/V2H/M2;
- MediaTek’s Autus I20/T10.

Because these chips are automotive-grade and must deliver high computing power, they are relatively expensive, typically costing more than 40 yuan. High-performance main control chips can cost more than 100 yuan.

Infrared light source manufacturers include Osram, Lumileds, Taiwan EpiStar and other electronic manufacturers.

The camera’s core component, the CMOS image sensor (CIS), is dominated by companies such as Sony of Japan and Samsung of South Korea. In the automotive image sensor market, however, ON Semiconductor leads, followed by OmniVision. Although Sony dominates the global CIS market, it lags behind these two in automotive sensors.

In the optical component market, Chinese companies such as Sunny Optical, Crystal-Optech, OFILM, and Hi-Tech have great development potential. They are competing with high-end overseas companies to localize the automotive optics supply chain.

By one estimate, a single camera costs about 30 yuan in hardware, excluding algorithm costs. A complete camera with an infrared light source can cost up to 130 yuan. Of the camera cost, the CMOS image sensor accounts for about 52%, the lens for about 26%, and module packaging for about 20%.

The midstream DMS algorithm has become the most competitive area at present.

On the product side, algorithm suppliers compete mainly on the accuracy and efficiency of their algorithms.

From a technical point of view, algorithm suppliers have two ways to improve efficiency. The first is the choice and design of the chip architecture, where solutions with low power consumption and good cost-effectiveness have a clear advantage. The second is to tune and optimize algorithms on top of open neural network models and open-source deep learning frameworks. This requires collecting large amounts of training material and iterating quickly to improve accuracy.

Algorithm vendors therefore need to study the various chips on the market. Once they understand chip makers’ hardware, they can develop products in which chip and algorithm are well matched. Chip makers that can also develop their own algorithms will be highly competitive.

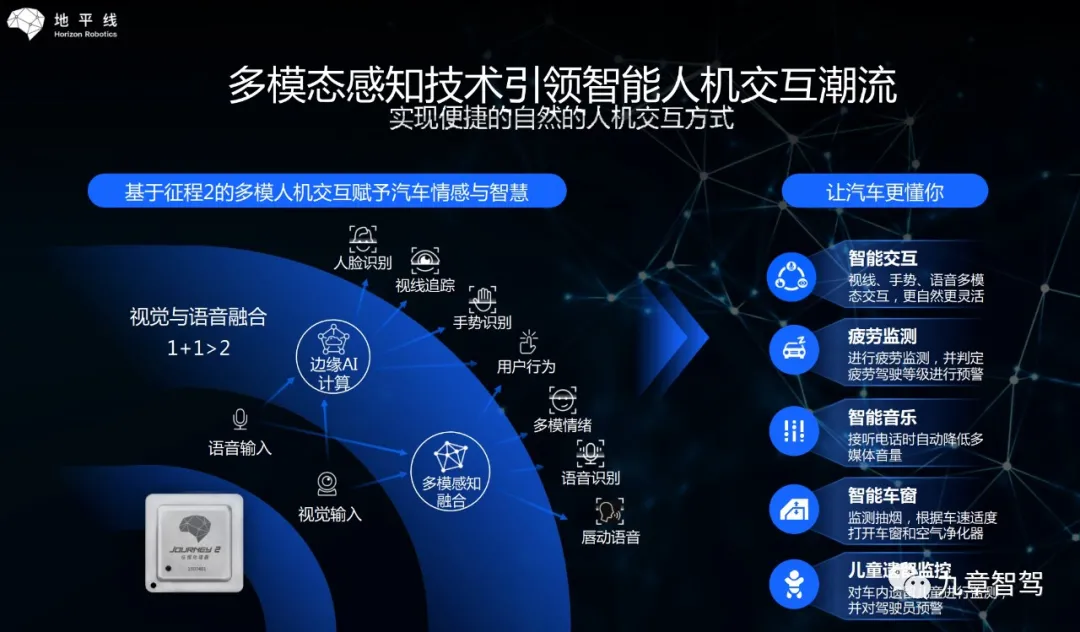

For example, AI chip company Horizon Robotics offers its Journey series chips for multimodal perception, which includes a DMS driver monitoring system. This perception covers the front and rear rows, the inside and outside of the car, and both vision and speech.

Currently, most DMS companies optimize algorithms based on open source algorithms and combine them with product functions and application scenarios to provide overall solutions.

These vendors include algorithm companies with AI backgrounds, startups focused on DMS, and manufacturers that have expanded from ADAS into DMS. Globally, more than 15 suppliers focus on vision-based DMS algorithms alone.

In the competitive landscape, foreign DMS companies have used their first-mover advantage to win early business. Examples include Seeing Machines, Smart Eye, Cipia (formerly EyeSight), and Fotonation.

Australian computer vision developer Seeing Machines develops its own DMS algorithms as well as its own DMS chip, FOVIO. Its DMS products have been installed in 2018 Cadillac CT6 vehicles. Its 2018 revenue reached AUD 30.72 million, of which automotive contributed more than AUD 8 million.

Smart Eye from Sweden is another major player. According to Smart Eye’s financial data, the company’s revenue increased to USD 7.85 million in 2020, but it still lost approximately USD 6.40 million. Smart Eye’s latest valuation is approximately USD 537 million.

Many companies with computer vision backgrounds have also entered the DMS field. Fotonation, for example, was founded in 1997 and built its position in consumer-electronics face recognition on iris recognition technology. It formally entered the DMS field in 2015 and became a DMS software supplier for Denso in China.

Domestic algorithm suppliers include SenseTime, Megvii, Hikvision, and iFlytek, as well as startups such as Umdap and companies that supply both ADAS and DMS products such as Shenzhen Ziyan Technology and Minieye.

AI companies have abundant financial resources and can also collect face data in other fields to help train algorithms.

Sensing Solutions for Intelligent Cockpits by SenseTime

At the 2021 auto show, SenseTime announced a one-stop smart-cockpit solution. It includes DMS (Driver Monitoring System), OMS (Occupant Monitoring System), touchless door unlocking, a virtual driving companion, and in-car AR entertainment.

Vision-based Products for the Automotive Industry by Hikvision

Hikvision provides DMS, ADAS, and BSD products, focusing on vision-related features for the automotive industry. In 2019, its intelligent driving algorithm business grew quickly, with operating income of 16.0566 million yuan, up sharply from 2.2021 million yuan the previous year. In the first quarter of 2020, this business generated 17.0076 million yuan in operating income, exceeding its full-year revenue for 2019.

Comprehensive In-Cabin and Exterior Perception Solution by Minieye

At the 2021 Shanghai Auto Show, Minieye also introduced an all-round perception solution covering both the cabin and the vehicle exterior. Beyond traditional monitoring functions, its in-cabin DMS and OMS products focus on interactive features for the cockpit.

A growing number of players have entered the field, and the market has become crowded. The early technology dividend is fading, and gross margins continue to decline. A complete DMS, including the software algorithms, now sells for 500 to 1,000 yuan, and costs will keep falling as competition intensifies.

Moreover, DMS is a small segment with low technical barriers. As AI companies and self-driving companies with deeper pockets move in, DMS start-ups will struggle to survive, and consolidation of this niche segment is becoming increasingly apparent.

In May 2021, Smart Eye, a Swedish DMS solution provider, announced a deal to acquire all of Affectiva’s business for 73.5 million US dollars to accelerate its development in the in-car perception market. Affectiva, spun out of the MIT Media Lab in 2009, focuses on emotion AI perception technology. According to Smart Eye’s head, in addition to existing business orders, the deal will help the company secure 84 mass-production contracts with 12 of the world’s largest OEMs, including BMW, GM, Mercedes-Benz, Audi, Jaguar Land Rover, and Porsche.

Further mergers among start-ups, and further entry of large AI companies into the field, can be expected.

Downstream, DMS reaches vehicles mainly through Tier 1 integrators, including foreign companies such as Continental Automotive, Valeo, Bosch, Ampere, Visteon, Denso, and Faurecia, and domestic companies such as Junsheng Safety, Jinwei Hengrun, Huayang, and Desay SV Automotive.

These suppliers generally take part in the DMS industry chain either by co-developing technology with DMS companies or by buying algorithm IP outright. For example, Shenzhen Road-Ex Technology, a domestic ADAS manufacturer, became a software supplier for Bosch China’s driver monitoring system.

These traditional tier 1 suppliers have outstanding advantages in terms of system integration capabilities, automotive-grade verification, customer relations, manufacturing and service capabilities.

Competition between suppliers is a contest of overall product strength: chip design and selection, power consumption, matching algorithms to the available computing power, camera integration and design, system integration and engineering capabilities, and, most importantly, cost competitiveness.

Because OEMs and Tier 1s are closely bound to each other, customer relationships are also an important competitive advantage. OEMs often choose the Tier 1 with which they have the closest relationship and the smoothest cooperation, and these big-name Tier 1 suppliers also carry higher costs and profits.

On this front, foreign traditional Tier 1 suppliers have strong advantages, but domestic suppliers such as Junsheng and Desay SV Automotive have their own strengths in cost control and customer service. As long as product performance and engineering issues are resolved, domestic Tier 1 suppliers have a great opportunity to take market share from foreign suppliers, especially in intelligent technologies.

Future Trends of DMS

DMS was originally designed to detect the driver’s state and warn of dangerous driving behavior. The introduction of vision technology, however, has broadened its range of applications: beyond fatigue and distraction, DMS can also monitor smoking, drinking, drunk driving, and drugged driving.

With the rise of automated driving and intelligent cockpits, DMS has become an important “interface” for human-machine interaction between the driver and the vehicle.

Automated driving is the first major application scenario.

For a long time to come, Level 2 to Level 4 automated driving will dominate the automobile market, which raises the problems of “human-machine co-driving” and “manual takeover”. As the penetration of advanced automated driving rises, advanced driver assistance such as navigation-assisted driving is becoming standard on new cars. Under constant promotion and “temptation” from the automotive industry, drivers are increasingly relying on autonomous driving features, leading to more situations where their hands leave the steering wheel and their eyes leave the road.

DMS can ensure that drivers keep their hands on the steering wheel and their eyes on the road while using autonomous driving features, helping them adapt to “co-driving” and “manual takeover” scenarios.

In certain “Level 3” autonomous driving scenarios, drivers are allowed to take their hands off the steering wheel and their eyes off the road. Even then, DMS can warn the driver in advance so that they are ready to take over in an emergency or when the system disengages.

Fusing Advanced Driver Assistance Systems (ADAS) data with DMS data has become an important development direction for DMS. By combining DMS detection data with analysis of the vehicle’s motion and control status, the ADAS system can determine whether the driver is fatigued or has even disengaged from driving.

For example, by analyzing steering wheel movements, the vehicle’s trajectory, accelerator pedal inputs, and camera images, the system can determine whether the driver has become sleepy, fatigued, or distracted after ADAS has been engaged.
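The kind of fusion described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any supplier’s method: the signal names (such as PERCLOS, the share of time the eyes are closed), the weights, and every threshold are hypothetical values chosen only to show how camera cues and vehicle-control cues might be combined into one driver-state label.

```python
from dataclasses import dataclass


@dataclass
class DmsSignals:
    perclos: float          # fraction of a recent window with eyes closed (0..1)
    gaze_off_road_s: float  # seconds the gaze has been continuously off the road


@dataclass
class VehicleSignals:
    adas_engaged: bool
    hands_on_wheel: bool               # e.g. from a steering torque or capacitive sensor
    steering_reversals_per_min: float  # small corrective steering reversals
    lane_offset_std_m: float           # std. dev. of lateral position in the lane (m)


def _norm(value: float, reference: float) -> float:
    """Scale a cue to 0..1; `reference` is a hypothetical 'clearly impaired' level."""
    return max(0.0, min(1.0, value / reference))


def fatigue_score(dms: DmsSignals, veh: VehicleSignals) -> float:
    """Weighted fusion of camera and vehicle cues (weights are illustrative)."""
    eye = _norm(dms.perclos, 0.30)
    if veh.adas_engaged:
        # While ADAS is steering, the driver's own steering and lane-keeping
        # say little about alertness, so only the camera cue is used.
        return eye
    steer = _norm(veh.steering_reversals_per_min, 20.0)
    lane = _norm(veh.lane_offset_std_m, 0.40)
    return 0.6 * eye + 0.2 * steer + 0.2 * lane


def driver_state(dms: DmsSignals, veh: VehicleSignals) -> str:
    # Gaze off the road for too long, or hands off while ADAS is on, is treated
    # as disengagement from the driving task (thresholds are hypothetical).
    if dms.gaze_off_road_s >= 2.0 or (veh.adas_engaged and not veh.hands_on_wheel):
        return "disengaged"
    score = fatigue_score(dms, veh)
    if score >= 0.7:
        return "drowsy"
    if score >= 0.4:
        return "fatigued"
    return "alert"


if __name__ == "__main__":
    dms = DmsSignals(perclos=0.25, gaze_off_road_s=0.5)
    print(driver_state(dms, VehicleSignals(True, True, 3, 0.1)))   # drowsy
    print(driver_state(dms, VehicleSignals(False, True, 3, 0.1)))  # fatigued
```

The same eye-closure reading yields different labels depending on whether ADAS is steering, which mirrors the point in the text that DMS data is interpreted together with the vehicle’s control status.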

In addition, based on the DMS’s analysis of the driver’s state and emotions, different thresholds can be set for activating and using ADAS functions.

For instance, when fatigue or sleepiness scores are high, distraction is frequent, or the driver shows negative emotions such as passivity or anger, the ADAS or autonomous driving system can adjust its driving style, operating more conservatively and prompting the driver more actively. If necessary, the system can disengage and require the driver’s full attention.
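A minimal sketch of such a state-dependent policy, again in Python and again hypothetical: the mode names, the time-gap and prompt-interval settings, the emotion labels, and all thresholds are illustrative assumptions, not values from any production ADAS.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class AdasMode(Enum):
    NORMAL = "normal"
    CONSERVATIVE = "conservative"
    TAKEOVER = "request_takeover"  # disengage and ask the driver to drive


# Hypothetical labels from an emotion classifier that count as "negative".
NEGATIVE_EMOTIONS = {"passive", "angry"}


@dataclass
class DriverState:
    fatigue: float               # 0..1, from the DMS
    sleepiness: float            # 0..1, from the DMS
    distractions_per_min: float  # how often the gaze leaves the road
    emotion: str                 # label from a hypothetical emotion classifier


@dataclass
class AdasPolicy:
    mode: AdasMode
    time_gap_s: float                   # following gap to the vehicle ahead
    prompt_interval_s: Optional[float]  # None = no routine attention prompts


def select_policy(s: DriverState) -> AdasPolicy:
    # Most severe condition first: hand control back to the driver.
    if s.sleepiness >= 0.8 or s.distractions_per_min >= 6:
        return AdasPolicy(AdasMode.TAKEOVER, time_gap_s=3.0, prompt_interval_s=5.0)
    # Elevated risk: drive more conservatively and prompt more often.
    if (s.fatigue >= 0.5 or s.sleepiness >= 0.5
            or s.distractions_per_min >= 2 or s.emotion in NEGATIVE_EMOTIONS):
        return AdasPolicy(AdasMode.CONSERVATIVE, time_gap_s=2.5, prompt_interval_s=30.0)
    return AdasPolicy(AdasMode.NORMAL, time_gap_s=1.8, prompt_interval_s=None)


if __name__ == "__main__":
    print(select_policy(DriverState(0.2, 0.1, 0.5, "angry")).mode.value)  # conservative
```

Checking the most severe condition first keeps the rules easy to audit: any single strong signal is enough to request a takeover, while weaker signals only make the system more cautious.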

The fusion of DMS and autonomous driving systems will also promote the fusion of hardware, such as integrating DMS algorithms and chips into the autonomous driving control domain.

Mobileye is about to mass-produce its next-generation EyeQ6 computing platform, which may come with embedded vision-based DMS algorithms preinstalled. The algorithm supplier is speculated to be Cipia.

In addition to the integration with the autonomous driving domain, the intelligent cabin is another major area for DMS function expansion and integration.

Beyond monitoring driver behavior, DMS can perform identity recognition (face recognition, age and gender estimation), posture recognition (eye gaze, seatbelt detection, seating posture and position), state analysis (such as emotional state), and object recognition (such as detecting items left behind, cabin anomalies, and children). As algorithms perceive facial expressions ever more accurately, they can offer more human, emotionally aware perception and provide personalized services and interactions based on the recognized identity, state, and posture.

For example, Veoneer’s HMEye is a DMS based on gaze measurement. Besides checking whether the driver’s hands and gaze are engaged in driving, it lets the driver change radio stations, adjust the temperature, and start navigation with eye movements, making human-machine interaction safer while driving.

By reading the driver’s facial expressions to detect changes in mood, the intelligent cockpit can even adjust the interior ambience, the type of music, and the status of autonomous driving.

For example, Valeo’s driver monitoring system uses artificial intelligence, combining convolutional neural network algorithms with emotion recognition, to personalize settings such as music playback and interior lighting through facial recognition.

Beyond the driver, DMS can also be extended to OMS (Occupant Monitoring System), expanding detection to everyone inside the vehicle.

Consumer-oriented OMS applications include in-vehicle video calls and an OMS selfie mode that photographs passengers in response to a simple voice command. These functions have become headline features in automakers’ marketing.

However, there are also some basic technical differences between DMS and OMS.

The first difference is the camera. OMS needs a wider field of view and must still resolve occupants clearly from close range out across that wide field. In addition, with video calls and selfies added to the feature list, cameras have evolved from infrared (IR) cameras to RGB cameras that capture color images.

Furthermore, OMS requires a wider monitoring range. 3D ToF solutions can provide a field of view (FOV) of up to 110°, which ordinary 2D cameras struggle to match, so 3D ToF may find wider use in OMS.
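To see why field of view matters, a simple pinhole-camera approximation gives the width $w$ covered at distance $d$ for a horizontal FOV $\theta$. The 1 m distance and the 60° comparison lens below are assumed example values, not figures from the report.

$$
w = 2d\tan\left(\frac{\theta}{2}\right)
$$

$$
\theta = 110^\circ:\ w = 2 \times 1\,\text{m} \times \tan 55^\circ \approx 2.86\,\text{m}, \qquad \theta = 60^\circ:\ w = 2 \times 1\,\text{m} \times \tan 30^\circ \approx 1.15\,\text{m}
$$

At the same mounting distance, the wider lens covers roughly 2.5 times the width, which is the kind of margin needed to keep occupants across the cabin in frame.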

As multimodal interaction gains ground in intelligent cockpits, DMS can go beyond traditional buttons and touch screens, using facial expressions, speech, AR-HUD, and gestures to enrich the ways occupants interact with the cockpit.

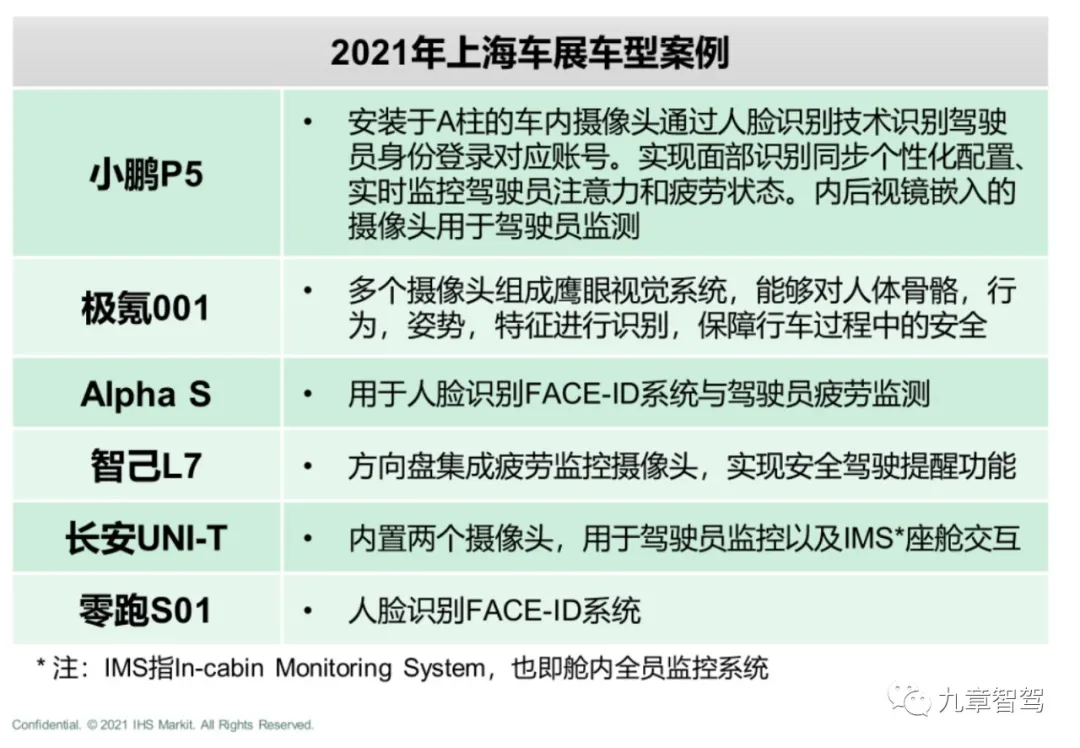

At the 2021 Shanghai Auto Show, DMS was standard equipment on many new cars, setting a benchmark for the development of DMS technology. Some models have already combined DMS with OMS, and the cockpit, once centered on monitoring the driver, is evolving toward monitoring everyone in the cabin.

The two built-in cameras in the Changan UNI-T also monitor the status of the front passenger and rear passengers. The Zeekr 001 uses eagle-eye cameras for skeleton tracking to identify occupants’ behaviors, actions, and postures. In the future, rear entertainment screens may also carry cameras for Face ID and emotion recognition.

DMS and the intelligent cockpit are becoming increasingly integrated, from software to hardware. The computing power DMS requires may move from a separate ECU to the SoC in the cockpit domain controller, where it shares compute with other cockpit functions. This makes it easier to fuse data from different sensors and lets a single domain controller handle multiple functions.

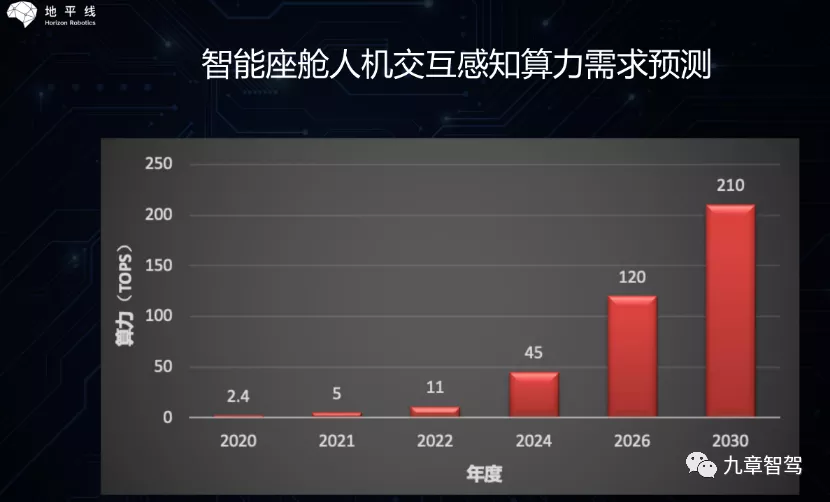

As functions keep multiplying, demand for computing power will certainly keep rising, and its growth is expected to become exponential.

Among the latest models released by carmakers, the XPeng P5, WEY Mocha, NIO ET7, and Leapmotor C11 use the Qualcomm 8155 system-on-chip, with up to 4.2 TOPS of computing power. Audi has chosen Samsung’s in-house Exynos Auto V9 chip, with 2 TOPS. Tesla’s latest Model S Plaid uses an AMD semi-custom chip, and in-cabin infotainment chips are now being benchmarked against the PS5 game console.

Chip manufacturers have also launched more powerful chips. Huawei has launched the Kirin 990A intelligent cockpit chip, which drives five screens from a single chip and offers 3.5 TOPS of computing power.

At the 2021 Shanghai Auto Show, Horizon released the intelligent cockpit system Horizon Halo™ 3, based on the Journey 3 chip with 5 TOPS of AI computing power. It supports up to six camera inputs and additional microphone inputs, enabling full-range perception and interaction across the front and rear rows, inside and outside the car, through both vision and speech.

According to Horizon, the Journey 5, whose chip design has been completed, already integrates human-vehicle interaction and automated driving on a single central on-board computing platform, delivering both intelligent driving and intelligent cockpit functions.

Horizon estimates that, as the various interaction modalities are integrated and developed, the computing power needed for human-computer interaction and perception in intelligent cockpits will grow exponentially and is expected to exceed 100 TOPS by 2026.
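As a rough check on what “exponential” means here, suppose (an assumption for illustration only) that the 5 TOPS of the Journey 3-based Halo 3 in 2021 is the baseline and that 100 TOPS is reached in 2026. The implied compound annual growth rate $r$ over those five years is:

$$
r = \left(\frac{100}{5}\right)^{1/5} - 1 = 20^{1/5} - 1 \approx 0.82
$$

That is roughly 82% more computing power every year.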

Because DMS serves both autonomous driving and the intelligent cockpit, the industry is divided over which domain DMS should be fused into. Some manufacturers choose to integrate it with autonomous driving, while others integrate it with the intelligent cockpit.

For example, in 2020, Seeing Machines announced a partnership with Qualcomm to provide an embedded integration system solution for the Qualcomm Snapdragon™ Automotive Platform that fully supports and integrates driver monitoring system kits.

According to public disclosures, the kit supports integrating DMS solutions into infotainment or centralized ADAS systems and includes an optimized reference-design camera, an ADP interface board, and software algorithms.

Perhaps this divide is only temporary. Automotive electrical and electronic architecture will shift from distributed domain controllers to a unified central-computer architecture. By then, there will be no need to choose a domain, and the technological form of DMS will also change fundamentally.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.