Introduction

Autonomous driving is set to bring revolutionary changes to travel in the next decade. Currently, autonomous driving applications are undergoing testing in various use cases, including passenger vehicles, robot taxis, automated commercial delivery trucks, intelligent forklifts, and automatic tractors for agriculture.

Autonomous driving requires a computer vision perception module to understand and navigate the environment. The role of the perception module includes:

- Detecting lane lines

- Detecting other objects such as vehicles, people, and animals in the environment

- Tracking the detected objects

- Predicting their possible movements

A good perception system should do all of this in real time under varied driving conditions: day and night, summer and winter, rain and snow. In this blog post, we focus on a real-time model for lane detection, vehicle detection, and alert generation.

Training a Real-Time Lane Detection Model

Lane detection problems are typically defined as semantic or instance segmentation problems, with the aim of identifying pixels belonging to the lane category.

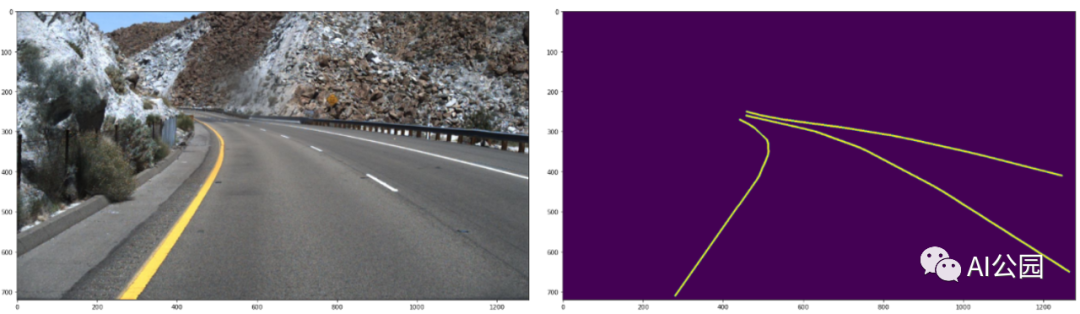

TUSimple is a commonly used dataset for lane detection tasks. The dataset contains 3,626 annotated video clips of road scenes, each with 20 frames. The data was captured using a camera installed on a vehicle. The following is an example image and its annotation from the dataset:

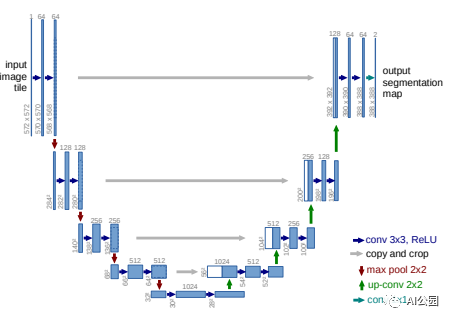
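To make the annotation format concrete, here is a minimal sketch of reading one label record. It assumes the JSON-lines layout of the public TUSimple release as I understand it: each record has a `lanes` list of x coordinates, a shared `h_samples` list of y coordinates, and a `raw_file` path, with a negative x marking rows where a lane is not visible. The helper name `lane_points` and the sample record are made up for illustration.

```python
import json

def lane_points(label_line):
    """Convert one TUSimple-style JSON label line into per-lane (x, y) points."""
    record = json.loads(label_line)
    ys = record["h_samples"]
    lanes = []
    for xs in record["lanes"]:
        # A negative x (assumed convention: -2) means "no lane at this row".
        points = [(x, y) for x, y in zip(xs, ys) if x >= 0]
        if points:
            lanes.append(points)
    return lanes

# Hand-made record for illustration only; these are not real dataset values.
example = json.dumps({
    "lanes": [[-2, 500, 480, 455], [-2, -2, 700, 760]],
    "h_samples": [240, 250, 260, 270],
    "raw_file": "clips/example/20.jpg",
})
lanes = lane_points(example)
print(lanes)
```

Drawing these points as thick polylines on a blank image is one way to obtain the binary lane mask that a segmentation model is trained against.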

We can train a semantic segmentation model on this dataset to segment out pixels belonging to the lane category. The U-Net model is particularly suitable for this task as it is a lightweight model with real-time inference speed. U-Net is an encoder-decoder model with skip connections. The model architecture is shown below:

However, the loss function needs to be changed to the Dice loss. Lane line segmentation is an extremely imbalanced problem: most pixels in an image belong to the background class. The Dice loss, based on the Sørensen-Dice coefficient, measures the overlap between the predicted and ground-truth lane pixels and places similar importance on false positives and false negatives, so it typically copes better with imbalanced data than a plain per-pixel loss such as cross-entropy. Because it rewards matching the ground-truth lane pixels directly, it also encourages predictions with clear boundaries.

LaneNet Model
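The Dice loss described above can be sketched as follows. This is a plain-Python illustration of the soft Dice formula, 1 - 2|A∩B| / (|A| + |B|), on flattened masks, not the training code used for this post; the function name `dice_loss` and the smoothing term `eps` are my own choices, and a real training loop would compute the same quantity with the deep learning framework's tensor operations.

```python
def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss for a binary mask.

    pred:   predicted lane probabilities in [0, 1], one per pixel
    target: ground-truth labels (1 = lane, 0 = background), one per pixel
    """
    pred = list(pred)
    target = list(target)
    # Overlap between prediction and ground truth (soft intersection).
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    # eps keeps the ratio defined when both masks are empty.
    dice = (2.0 * intersection + eps) / (total + eps)
    return 1.0 - dice

# 100 pixels, only 4 of them lane: an imbalanced toy mask.
target = [1] * 4 + [0] * 96
all_background = [0.0] * 100
print(dice_loss(target, target))          # perfect overlap, loss near 0
print(dice_loss(all_background, target))  # misses every lane pixel, loss near 1
```

In this toy case, predicting background everywhere is 96% accurate per pixel, yet its Dice loss is close to 1, which is why the loss suits such imbalanced masks.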

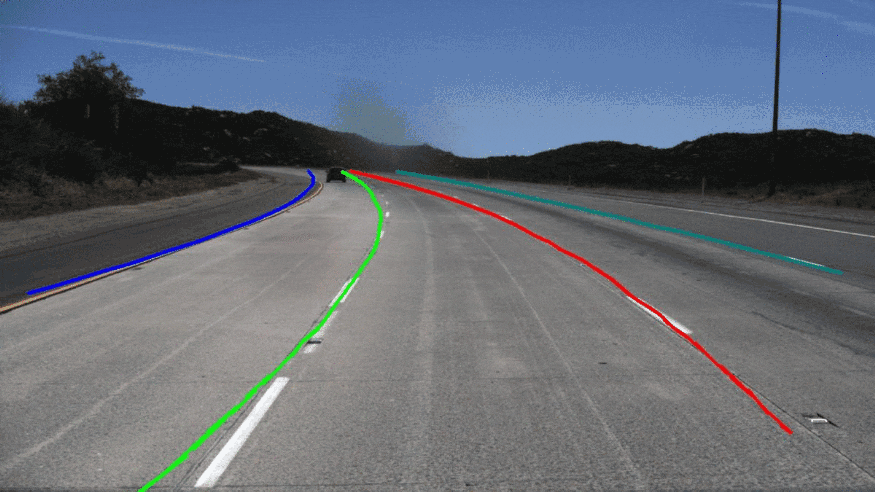

Here, I used the LaneNet model to generate lane lines. LaneNet is a two-stage lane line predictor. The first stage is an encoder-decoder network that produces a segmentation mask of the lane lines. The second stage is a lane localization network that takes the lane points extracted from the mask as input and uses an LSTM to learn a quadratic function that predicts the lane line points.
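To show what "a quadratic function for a lane line" means, the sketch below fits x = a*y² + b*y + c to a handful of lane points. Note that this is a stand-in: it uses an ordinary least-squares fit rather than the LSTM that LaneNet learns, and the names `fit_quadratic` and `det3` are hypothetical. Exact fractions are used only to avoid rounding problems with large pixel coordinates.

```python
from fractions import Fraction

def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def fit_quadratic(points):
    """Least-squares fit of x = a*y**2 + b*y + c to (x, y) lane points."""
    if len(points) < 3:
        raise ValueError("need at least three points")
    xs = [Fraction(x) for x, _ in points]
    ys = [Fraction(y) for _, y in points]
    s = [sum(y ** k for y in ys) for k in range(5)]                  # sums of y^k
    t = [sum(x * y ** k for x, y in zip(xs, ys)) for k in range(3)]  # sums of x*y^k
    # Normal equations of the least-squares problem: A @ [a, b, c] = rhs.
    A = [[s[4], s[3], s[2]],
         [s[3], s[2], s[1]],
         [s[2], s[1], s[0]]]
    rhs = [t[2], t[1], t[0]]
    d = det3(A)
    if d == 0:
        raise ValueError("points do not determine a unique quadratic")
    coeffs = []
    for col in range(3):
        # Cramer's rule: swap one column for the right-hand side.
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]
        coeffs.append(float(det3(m) / d))
    return tuple(coeffs)

# Illustrative points lying on x = 0.001*y**2 - 0.5*y + 500.
points = [(440, 300), (460, 400), (500, 500), (560, 600), (640, 700)]
a, b, c = fit_quadratic(points)
print(a, b, c)
```

Fitting x as a function of y (rather than y as a function of x) is the usual choice for lanes because they run mostly vertically in the image. With NumPy available, `numpy.polyfit(ys, xs, 2)` performs the same fit.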

The following image shows the operation of both stages. The left image is the original image, the middle image is the lane line mask output of stage 1, and the right image is the final output of stage 2.

Generating Intelligent Alerts

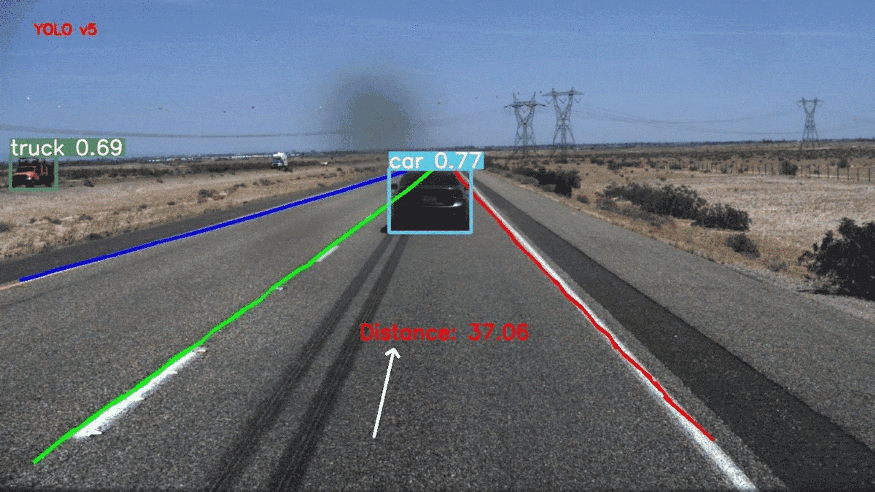

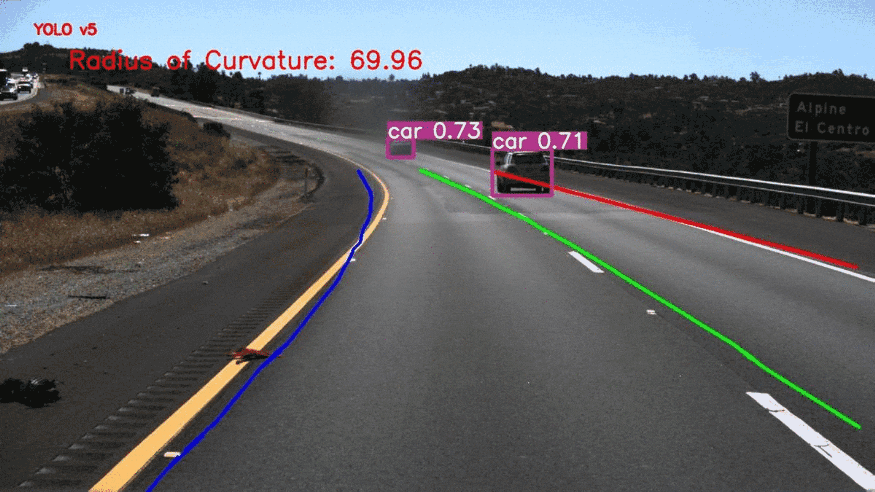

I combined lane line prediction with object detection to generate intelligent alerts. These intelligent alerts may involve:

- Detecting whether other vehicles are in the lane and measuring their distance

- Detecting the presence of vehicles in neighboring lanes

- Understanding the turning radius of curved roads

Here, I used YOLOv5 to detect cars and people on the road. YOLOv5 detects other vehicles on the road well, and its inference time is short.
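Once the detector returns bounding boxes, each vehicle can be placed relative to our own lane. The sketch below is one simple way to do that, not necessarily the logic used for the results in this post. It assumes boxes in `(x1, y1, x2, y2)` pixel format and lane boundaries given as quadratic coefficients of x = a*y² + b*y + c; `lane_x` and `assign_lane` are hypothetical helper names, and the lane lines and boxes are made-up values.

```python
def lane_x(coeffs, y):
    """x position of a lane line x = a*y**2 + b*y + c at image row y."""
    a, b, c = coeffs
    return a * y * y + b * y + c

def assign_lane(box, left, right):
    """Classify a detection as 'ego', 'left', or 'right' of our own lane.

    box:         (x1, y1, x2, y2) bounding box in pixels
    left, right: quadratic coefficients of our lane's two boundary lines
    """
    x1, y1, x2, y2 = box
    # The bottom-centre of the box approximates where the vehicle meets the road.
    cx, by = (x1 + x2) / 2.0, y2
    if cx < lane_x(left, by):
        return "left"
    if cx > lane_x(right, by):
        return "right"
    return "ego"

# Made-up straight lane lines that narrow towards the top of the image.
left_line = (0.0, -1.0, 1000.0)
right_line = (0.0, 1.0, 280.0)
boxes = [(600, 380, 680, 430), (300, 400, 420, 480), (900, 420, 1040, 520)]
labels = [assign_lane(box, left_line, right_line) for box in boxes]
print(labels)
```

A box labelled "ego" can feed the in-lane distance alert, while "left" and "right" cover the neighboring-lane alert.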

Below, we measure the distance between our own car and the closest car in front using the YOLOv5 detections. The measured distance is in pixels and can be converted to meters using the camera parameters. Because the camera parameters for the TUSimple dataset are unknown, I estimated the pixel-to-meter conversion from the standard width of the lane line.
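The conversion can be sketched as below. This is a rough illustration under loud assumptions, not the exact calculation behind the results here: it uses a single scale for the whole image, which only holds after the image has been rectified to a top-down view (in the raw perspective image the scale changes with distance), and it takes a 3.7 m lane width as the reference length, which is my assumed value. The function names are hypothetical.

```python
def meters_per_pixel(reference_px, reference_m=3.7):
    """Scale factor from a reference of known size.

    reference_px: measured size of the reference in pixels
    reference_m:  its assumed real-world size (3.7 m lane width is an assumption)
    """
    if reference_px <= 0:
        raise ValueError("reference_px must be positive")
    return reference_m / reference_px

def distance_to_closest(boxes, image_height, scale):
    """Distance in meters from the bottom image edge to the nearest box.

    boxes: (x1, y1, x2, y2) boxes of vehicles already known to be in our lane
    """
    if not boxes:
        return None
    # The box whose bottom edge is lowest in the image is the closest vehicle.
    gap_px = min(image_height - y2 for (_, _, _, y2) in boxes)
    return max(gap_px, 0) * scale

# Made-up numbers: the lane is 74 px wide in a 720 px tall top-down view.
scale = meters_per_pixel(74)
in_lane = [(600, 380, 680, 430), (610, 300, 670, 340)]
distance = distance_to_closest(in_lane, 720, scale)
print(round(distance, 2), "m")
```

With real camera parameters, the scale would come from calibration instead of a reference length.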

We can similarly calculate the curvature radius of the lane and use it for the car’s steering module.
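For a lane line described by x = a*y² + b*y + c, the radius of curvature at row y follows from the standard formula R = (1 + (2ay + b)²)^(3/2) / |2a|. The sketch below applies it; `curvature_radius` is a hypothetical name, and the result is in whatever units the coefficients were fitted in (pixels unless the points were converted to meters first).

```python
import math

def curvature_radius(coeffs, y):
    """Radius of curvature of x = a*y**2 + b*y + c at row y."""
    a, b, _ = coeffs
    if a == 0:
        return math.inf  # a straight line has no finite turning radius
    slope = 2 * a * y + b  # dx/dy at row y
    return (1 + slope ** 2) ** 1.5 / abs(2 * a)

# Made-up coefficients for a gently curving lane, evaluated near the car.
radius = curvature_radius((0.001, -0.5, 500.0), 700)
print(radius)
```

A small radius signals a sharp bend, so a steering module or alert could act when the value drops below a chosen threshold.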

Conclusion

In this blog post, we explored the problem of accurately and quickly detecting lane lines in autonomous driving. Then, we used YOLOv5 to build an understanding of other objects on the road. This can be used to generate intelligent alerts.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.