Author: Michelin

If you had to pick the most emotional part of a steel machine like a car, the cabin would surely get many votes.

The cabin is the intelligent product that interacts most directly with users in a car, so it is hard to simply label it “good” or “bad” and give it a score. That is both the difficulty and the charm of intelligent cabin design.

This charm led Geekcar to launch the column “Wonderful Car Intelligence Bureau” two years ago. Starting with each car’s infotainment system, the column has tracked the changes in the still-young intelligent cabin and tried to outline its future.

No one can predict the future precisely, but we can infer its general shape from technology trends and user needs, because no product develops without the push of technology and the real needs of users.

Recently, IHS Markit released the “White Paper on the Market and Technological Development Trends of Intelligent Cabins” (hereinafter “the White Paper”). Let’s explore which technologies are making cabins more intelligent and, based on those trends, guess what the future intelligent cabin will look like.

### Multi-modal Interaction Enables Cabins to Learn How to Read Emotions

In the “Intelligence Bureau” evaluations of dozens of cars over the past two years, we found that although voice interaction is not yet perfect, in-vehicle voice systems are clearly becoming more intelligent.

Besides improvements in technical indicators, a key reason is that voice is now treated as a platform rather than just a product. In the 2020 evaluation, Bozhong and the XPeng P7, both of which performed well in voice interaction, treat voice as a platform that integrates the electrical and electronic architecture, vehicle information security, vehicle data, and more to jointly improve voice interaction.

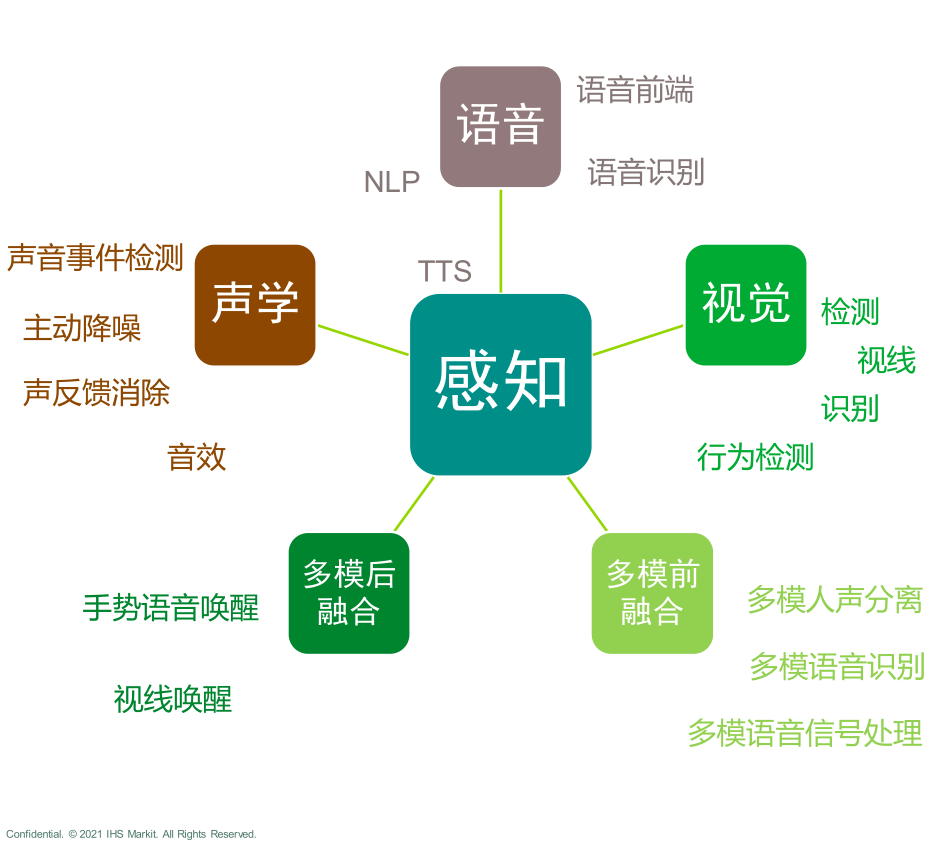

In the White Paper, we see that in 2021 multi-modal interaction is becoming the trend for intelligent cabins as the platform of intelligent cars. Built on perception inside and outside the car, it integrates voice with touch screens, emotion recognition, gesture recognition, face recognition, location tracking, and other technologies.

No matter when fully autonomous driving arrives, people and cars face a long period of co-driving. Highly automated driving can free up more of the driver’s and passengers’ energy during a trip, and a well-designed cabin can help them enjoy that time. With the amount of information in the intelligent cabin exploding, the key to improving the driving and interaction experience is making full use of voice, expressions, and other body movements that occupy less of the driver’s attention.

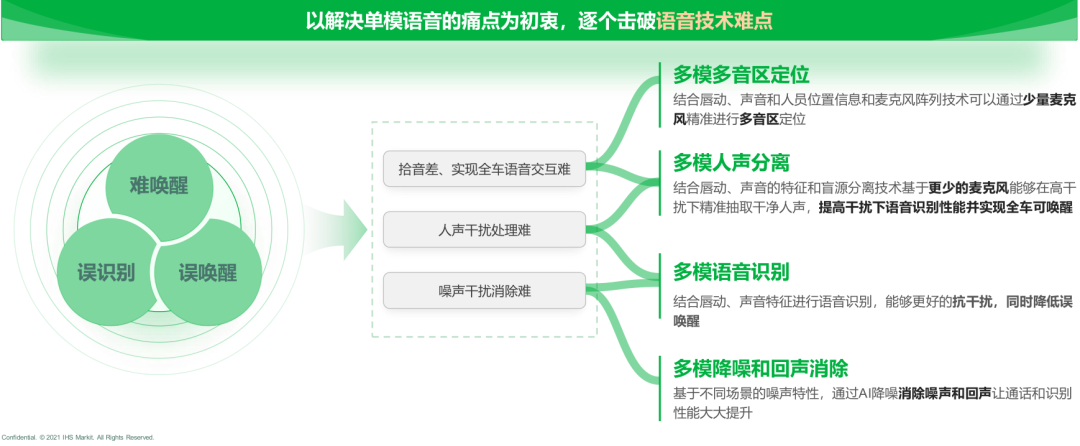

Voice interaction on its own will inevitably run into noise, echo, unclear recognition, and even requests that are awkward to say aloud in the “semi-public space” of the cabin. Just as people need all five senses to stay aware of their surroundings, a cabin that relies on voice alone is still a “machine” that only does what it is told, far from truly “intelligent”. Only by combining voice with other inputs such as gestures, gaze, expressions, and even emotions can interaction reach its full effect.

For example, by combining voice with lip-reading technology, the system can still pick up a command accurately by reading the user’s lip movements, even when the cabin is too noisy for it to hear the instruction fully.

In our evaluation, the Changan UNI-T, the industry’s first mass-produced vehicle equipped with a multi-modal voice interaction solution, uses the Horizon Halo in-car intelligent interaction solution from Horizon Robotics. By combining multi-modal AI technologies such as voice and lip movement, it accurately locates the source of a voice command and avoids false wake-ups. The cabin can also automatically lower the music volume during calls.

In the future, this multi-modal voice interaction will make the cabin more proactive and better at understanding users. For example, by fusing voice with vision and other senses, when the user says “bigger” while looking at the window, the window will open a little wider; when the user says the same thing while looking at the air conditioner, the command becomes turning up the air conditioner; and when the user is sad, saying “play music” will lead the cabin to play a soothing song to comfort them…
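The “bigger” example can be sketched in a few lines of Python. This is only an illustration of the idea, not how any production cabin works: the `CabinContext` type, the `resolve_command` function, and all the action names are hypothetical, and the sketch assumes that gaze tracking and emotion recognition have already produced simple labels.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CabinContext:
    """Hypothetical perception results supplied by cameras and other sensors."""
    gaze_target: Optional[str]  # e.g. "window" or "air_conditioner"; None if unknown
    emotion: Optional[str]      # e.g. "sad" or "neutral"; None if unknown


def resolve_command(utterance: str, ctx: CabinContext) -> str:
    """Map an ambiguous spoken phrase to an action using non-voice context."""
    text = utterance.strip().lower()

    if text == "bigger":
        # The same word means different things depending on where the user looks.
        if ctx.gaze_target == "window":
            return "open_window_wider"
        if ctx.gaze_target == "air_conditioner":
            return "increase_air_conditioner"
        # Without a usable gaze target, fall back to asking the user.
        return "ask_user_to_clarify"

    if text == "play music":
        # Emotion only changes which music is chosen, not whether it plays.
        if ctx.emotion == "sad":
            return "play_soothing_music"
        return "play_default_music"

    return "unknown_command"


print(resolve_command("bigger", CabinContext("window", "neutral")))
print(resolve_command("bigger", CabinContext("air_conditioner", "neutral")))
print(resolve_command("play music", CabinContext(None, "sad")))
```

The point of the sketch is that voice supplies the intent while the other modalities supply the missing object or mood; when that context is absent, the safest fallback is to ask rather than guess.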

Of course, our imagination of where multi-modal voice fusion will go is still limited, but technology exists to open up possibilities for us.

### Perception System Upgrades: More and More Sensors in the Cabin

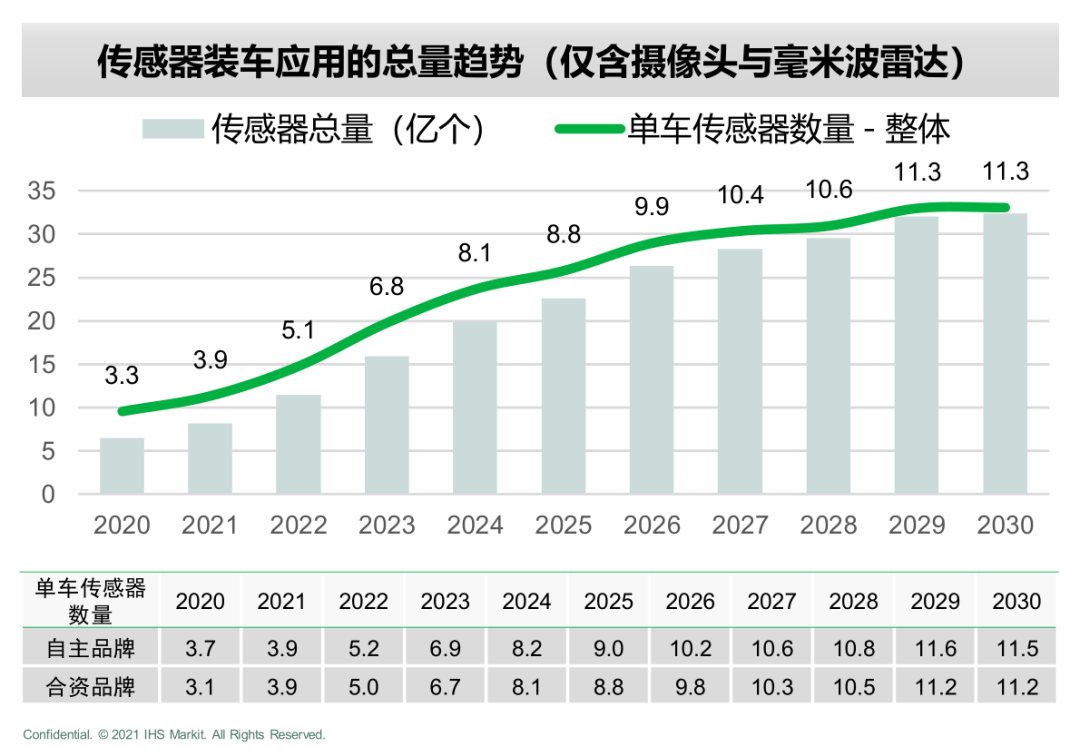

Multi-modal voice interaction has brought us convenience and turned the cabin from a machine that can only follow commands into one that can interact and communicate emotionally. The premise, however, is that the perception system inside the car is accurate enough, which depends on the sensors in the cabin.

According to statistics from IHS Markit, the average number of sensors per car in 2020 was 3.3, including in-vehicle cameras, millimeter-wave radars, vital-sign sensors, and so on. By 2030, the figure will reach double digits, with an average of 11.3 sensors per vehicle. The most commonly used sensors in the cabin are in-vehicle cameras and microphones.
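To put the two IHS Markit figures in perspective, the snippet below works out the average yearly growth they imply. It is a back-of-the-envelope calculation that assumes smooth compound growth between 2020 and 2030, which the White Paper does not claim; the function name is ours.

```python
def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that takes `start` to `end` in `years` years."""
    return (end / start) ** (1 / years) - 1


# 3.3 sensors per car in 2020, 11.3 forecast for 2030 (figures quoted above).
rate = implied_annual_growth(start=3.3, end=11.3, years=2030 - 2020)
print(f"Implied average growth: {rate:.1%} per year")
```

Under that assumption the sensor count grows by roughly 13% a year, which is enough to more than triple it over the decade.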

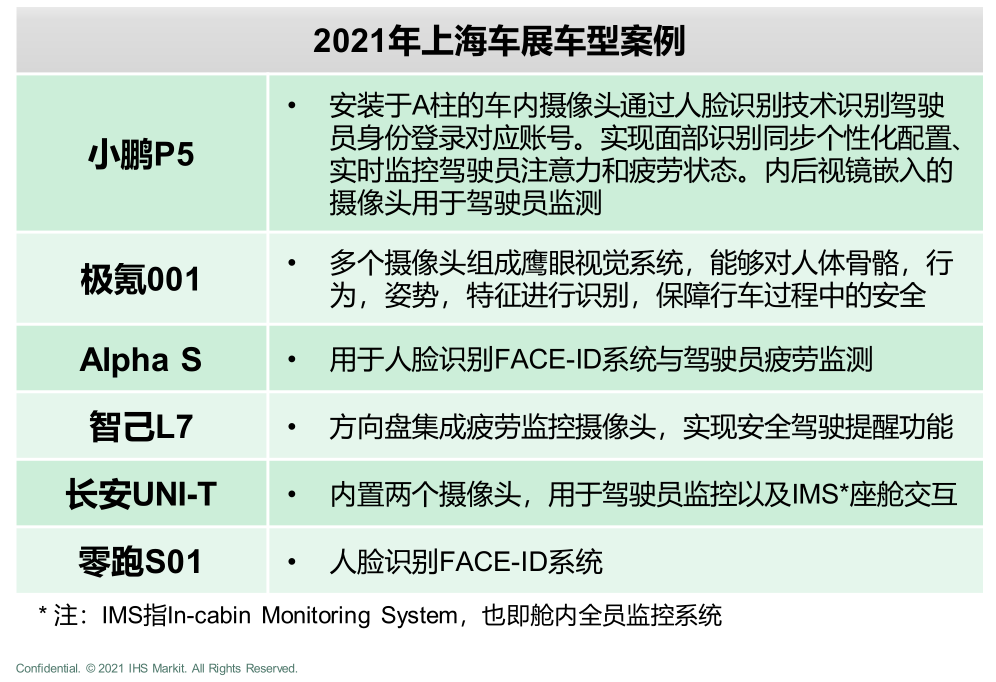

At the 2021 Shanghai Auto Show, new models such as the XPeng P5, Zeekr 001, IM L7, and Alpha S were equipped with more than one camera inside the cabin, mainly for FACE-ID face recognition and driver fatigue monitoring.

It is not only cameras: the number of microphones inside the cabin has also grown. In just one year, from 2020 to 2021, the average number of microphones per new car increased by 0.5. The White Paper predicts that this number will rise to 8.7 by 2030, meaning that each new car will have 8 to 9 groups of microphones on average.

The increase in cameras and microphones shows not only in their number but also in their location and function. If the traditional cabin is centered on the driver, the intelligent cabin is gradually becoming centered on all occupants, and cabin monitoring is evolving from a DMS (driver monitoring system) to an IMS (all-occupant monitoring system).

In-vehicle cameras not only monitor the driver’s behavior and facial expressions but also check on the front passenger and rear passengers, as the two built-in cameras in the Changan UNI-T do. The Zeekr 001 also uses its “eagle-eye” camera for skeleton monitoring to identify a person’s actions, movements, and posture. In the future, cameras may also be used to identify passengers’ faces and emotions at the rear entertainment screen. Microphone arrays have likewise expanded from the driver’s side to the front passenger and rear seats, allowing rear passengers to issue voice commands such as opening and closing windows or adjusting the air conditioner temperature.

### High-Performance AI Chips Becoming the Core of Intelligence in Cars

As the automotive industry’s electrical and electronic architecture moves toward centralization, chips are gradually taking over the functions of dozens or even hundreds of ECUs. As the number of cockpit sensors grows and interaction modes are upgraded, even the components we see most directly, such as the instrument cluster, central control screen, and HUD, rely on the capability of the cockpit system chip.

Over the past year, the battle over cockpit computing power among carmakers has become increasingly evident: the Wey Mocha, NIO ET7, and Leapmotor C11 have chosen the Qualcomm 8155 system chip with a computing power of up to 4.2 TOPS; Audi has chosen Samsung’s Auto V9 chip, which offers 2 TOPS. Tesla’s recently released Model S Plaid uses a semi-custom AMD chip and was shown running the game Cyberpunk 2077, putting in-car entertainment performance on par with the PS5 game console.

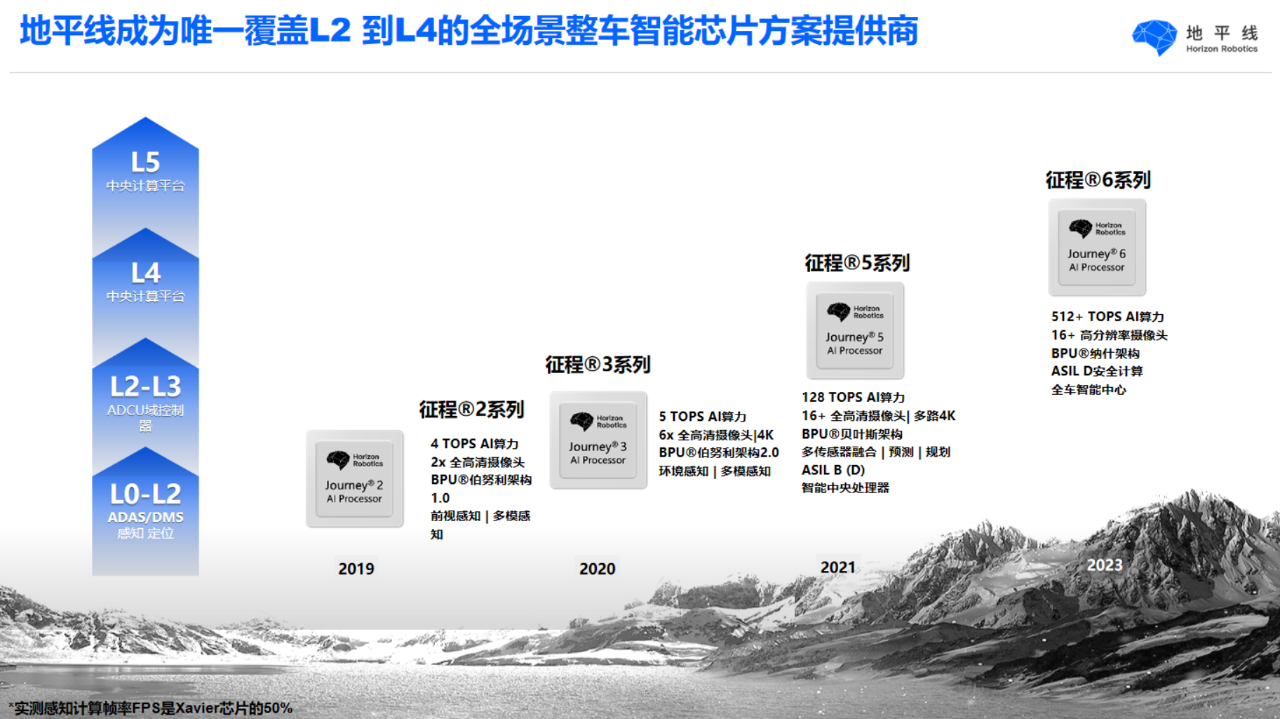

Domestic chip suppliers are not falling behind either: at the Shanghai Auto Show, Huawei launched the Kirin 990A intelligent cockpit chip, which can drive five screens simultaneously and offers 3.5 TOPS of computing power; the Horizon Journey 2 chip, with 4 TOPS of AI processing power, is already used in two models of Changan’s UNI series. According to Horizon, the Journey 5, which integrates human-machine interaction and autonomous driving, has already completed chip production, so that a single central computing platform in the car can handle both intelligent driving and intelligent cabin functions.

The computing power race is not simply a numbers game. The AI SoC is the brain that controls the cockpit’s software and hardware functions, and it is subject to a “butterfly effect”: even small changes in hardware, software, or functions, such as more cameras, more microphones, higher resolution, or higher frame rates, compound with one another. According to research by IHS Markit, at least 22 factors affect computing power, and each one can directly affect the functions we use.

For example, if we want to add FACE-ID, we need to detect the key points of the face, which requires adding a dedicated camera to capture facial images; to accurately identify the three-dimensional shape of the face, we need to increase the resolution, introduce 3D information, and optimize the captured model; and to keep the system running smoothly, we also need to increase the frame rate… Each of these factors on its own raises the computing power requirement only slightly, but together they can increase the demand significantly.

In fact, from 2021 to 2024, the demand for NPU computing power will double each year at the perception level alone, while the demand for CPU computing power will increase sixfold.
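The compounding described above is easy to see with a toy calculation. The sketch below uses raw pixel throughput (cameras × resolution × frame rate) as a crude stand-in for perception workload; that proxy, the `CameraSetup` type, and every number in it are illustrative assumptions, not figures from IHS Markit or any carmaker.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraSetup:
    """Hypothetical in-cabin camera configuration."""
    cameras: int
    width: int
    height: int
    fps: int

    def pixels_per_second(self) -> int:
        # Crude workload proxy: every pixel of every frame must be processed.
        return self.cameras * self.width * self.height * self.fps


def load_ratio(before: CameraSetup, after: CameraSetup) -> float:
    """How many times more pixels per second `after` produces than `before`."""
    return after.pixels_per_second() / before.pixels_per_second()


# Made-up example: one extra camera, a modest resolution bump, a higher frame rate.
baseline = CameraSetup(cameras=2, width=1280, height=720, fps=20)
upgraded = CameraSetup(cameras=3, width=1600, height=900, fps=30)

ratio = load_ratio(baseline, upgraded)
print(f"Workload proxy grows {ratio:.2f}x")
```

In this made-up case each individual change is about 1.5x, yet the combined pixel load is more than 3.5 times the baseline, because the factors multiply rather than add.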

This strong demand for computing power has also made chip and algorithm companies core participants in the intelligent cabin ecosystem. Chip companies such as Horizon, NXP, and Ambarella and algorithm companies such as SenseTime and iFlytek all take part in visual and speech perception.

The future cockpit will undoubtedly revolve around these “hardcore” smart technologies to create differentiated functions.

### Finally

What kind of future smart cockpit do you want? The question may be too abstract to answer concretely for now. However, driven by technological innovation, our expectations of the cockpit will gradually change.

Ten years ago, safety was the primary requirement for a car cockpit, and being able to listen to the radio and music was sufficient. Five years ago, accurate navigation and voice control became everyone’s main demands. Today, the intelligent configuration of the cockpit has begun to affect people’s car-buying decisions. The cockpit not only needs to hear and understand users’ instructions but also needs to perceive users’ unspoken needs.

From the initial buttons and touch screens to voice and gesture interaction, and on to future multi-modal and emotional interaction, smart cockpits are becoming more intelligent and proactive. This unobtrusive, considerate service depends on breakthroughs in interaction technology and, behind them, on the development and spread of hardware sensors and chip computing power.

In the future, whether the cockpit has several large screens or physical buttons, and whatever kind of HMI it uses, these are just different carriers. Only functions that meet users’ real needs and win users over will stand the test of time. These functions rely on perception systems, algorithms, AI chips, and other technologies, especially the development and spread of high-computing-power chips, which bring new possibilities to the user experience.

The intelligent interaction of the cockpit will usher in the intelligent interaction of the robot era, and the car will become a proactive, personalized, and emotionally intelligent robot.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.