Author: Aimee

Intelligent applications in the automotive industry are currently associated mostly with autonomous driving. In fact, vehicle intelligence is developing in three major directions: automated driving, the intelligent cabin, and connected intelligence. Achieving true autonomous driving requires not only connected-vehicle technology to free people from the dynamic driving task, but also the digitalization, informatization, and multifunctionality of the cabin. In other words, moving from automated driving to unmanned driving requires the ECUs within the infotainment domain to be integrated and high-speed communication with the automated driving domain to be established. The intelligent cabin will visualize the external environment and perceive the state and emotions of the people inside it. At the same time, in-vehicle human-machine interaction systems will gradually be integrated into an “electronic cabin domain” with a layered system structure.

All three directions are key to implementing unmanned driving. The real experience of unmanned driving is not only about driving itself; it depends even more on the intelligence of the cabin once automated driving has been achieved. The biggest difference between the digital cabin and intelligent driving is that the former focuses on human-machine interaction and information services and carries lower safety risk, whereas the latter controls the vehicle and bears greater responsibility, which makes it much harder to implement. With the help of the new E/E architecture, the cabin domain and the ADAS/AD domain will eventually be integrated, enabling intelligent control of the vehicle. The goal of automated driving is to fully understand the driver’s intentions and create a “mobile office and lounge” that combines commuting, work, leisure, and entertainment. At that point, automated driving will no longer rely on traditional driving controls (the steering wheel, brake, and accelerator; even the dashboard will be completely virtualized). This equipment can become fully intelligent and foldable, and seats can be moved and laid out freely to give passengers a more comfortable and flexible space.

The development of the cabin domain can be divided into the following four stages:

①. Local entertainment and navigation stage

This stage consists mainly of the original head-unit interaction system, in which each interaction unit runs independently.

②. Intelligent Internet-connected in-vehicle system stage

Distributed edge computing begins to be centralized, and some component suppliers develop mature, production-ready cabin domain controller solutions.

③. Autonomous driving cabin stage

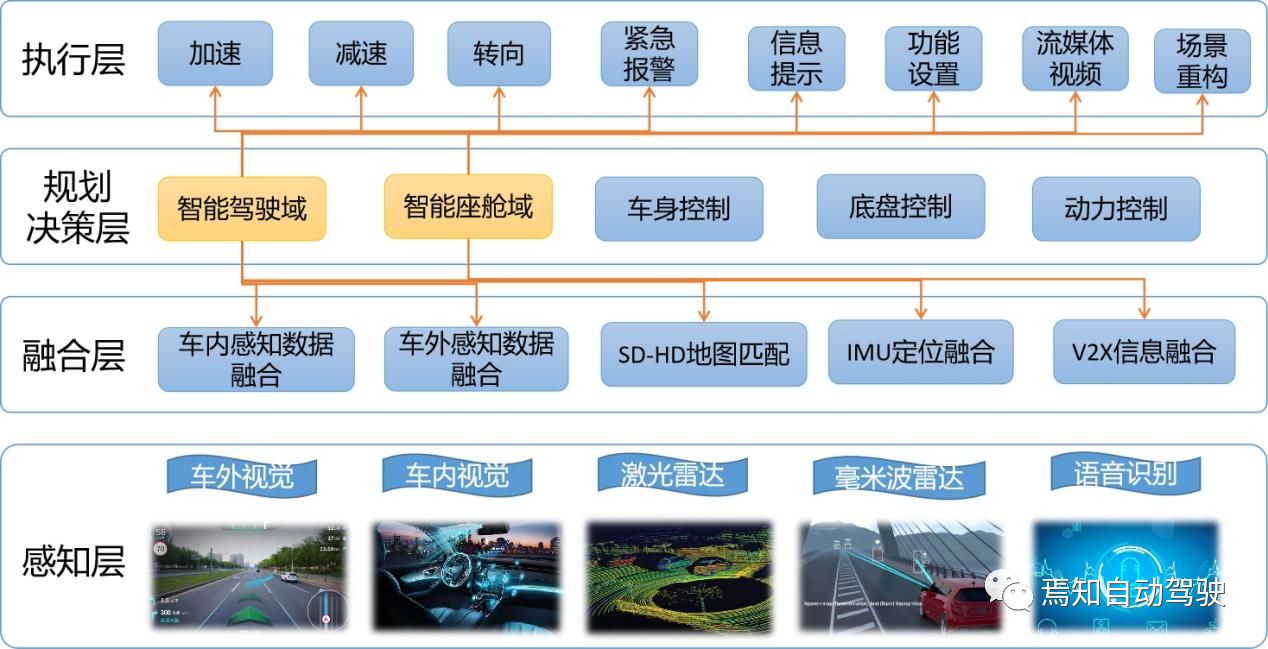

The intelligent driving and intelligent cabin domains begin to merge. A single AI chip handles edge computing for exterior perception, interior perception, and their fusion, forming an “independent perception layer”. The in-vehicle operating system establishes an intermediate-level ecosystem.

④. Unmanned driving cabin stage

In-vehicle central computers take shape, and the integration of computing chips becomes the trend. The in-vehicle operating system establishes an application-level ecosystem.

As self-driving technology evolves, the intelligent cockpit is also entering the self-driving phase. In this stage, driving-related interaction between humans and the vehicle disappears, and the car itself becomes a service carrier. The relationships between road and car, and between cars, no longer affect the relationship between humans and cars. The design of the self-driving cockpit will shift from driver-centric to passenger-centric, with highly intelligent, interactive virtual avatars at the core of this stage.

To meet the future multifunctional and multitasking needs of the intelligent cockpit, the current cockpit domain needs a comprehensive upgrade. This mainly involves integrated system solutions, including multiple perception systems in the cockpit domain, decision-making and control systems, cockpit domain execution terminals, and AR-HUD entry points. In cockpit layout, the emphasis is on in-cabin perception systems, cameras, and biometric recognition. For display, the cockpit uses ambient lights, streaming media rearview mirrors, augmented reality, and head-up displays. This requires the infotainment system to be integrated, digitized, and display-centric, so that once intelligent driving reaches a sufficient level, cockpit facilities such as seats, steering wheels, and center consoles can become electronic, foldable, and movable.

Intelligent Cockpit Perception Capability

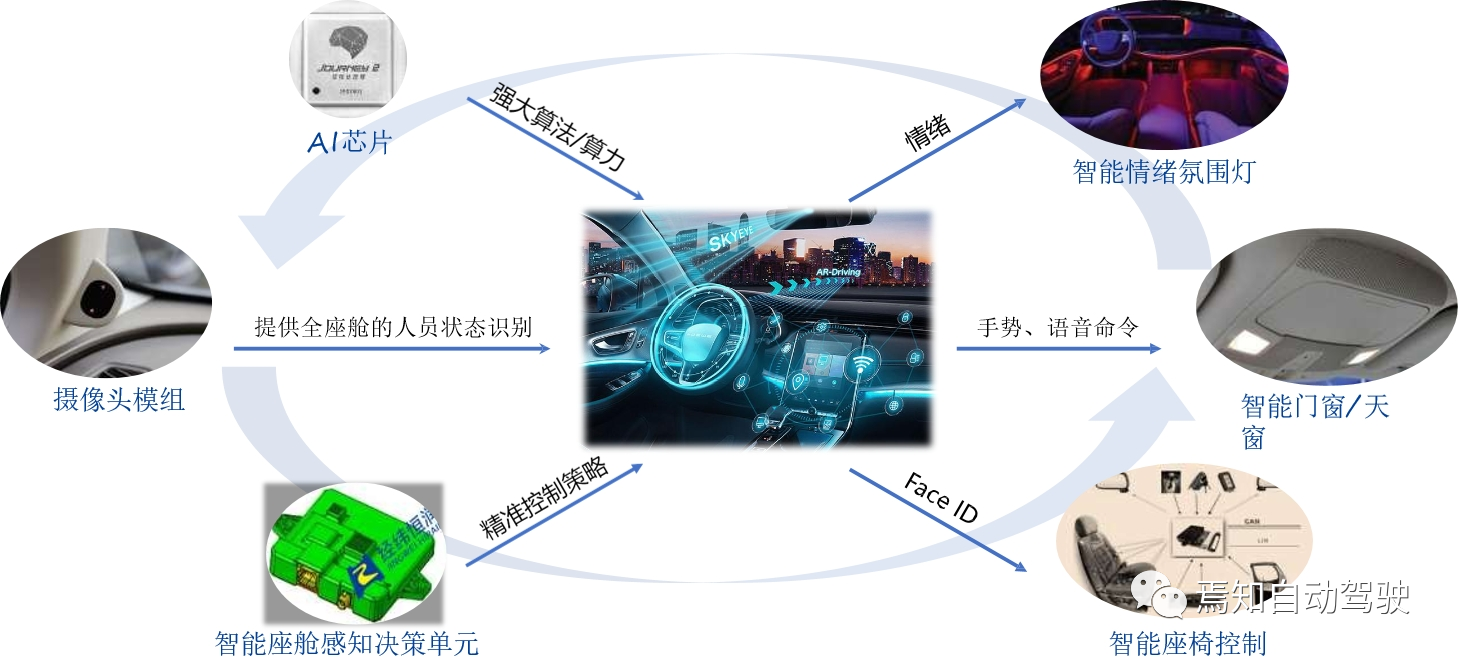

As with intelligent driving, the overall capabilities of the intelligent cockpit comprise perception, presentation, and processing. The perception system mainly includes in-cabin cameras and biometric detection; the presentation capability mainly includes ambient lights, streaming media rearview mirrors, augmented reality head-up displays, and intelligent seats; and the processing capability mainly involves cockpit domain controllers (or sub-domain controllers).

The following sections introduce the functions of the intelligent cockpit in detail and analyze design solutions for some of its major functions in intelligent driving.

The independent perception layer of the intelligent cockpit system enables the vehicle to “perceive” and “understand” humans. This layer can obtain sufficient perception data, including in-cabin vision (optical), voice (acoustic), and chassis and body data such as steering wheel, brake pedal, accelerator pedal, gear position, and seat belt status. Using biometric identification technology (in-cabin face and voice recognition), it comprehensively assesses the physiological state (appearance, facial features, etc.) and behavioral state (driving behavior, voice, posture, etc.) of the driver and other passengers, so that the car can fully “understand” its occupants. Computing resources will also be centralized for edge-side perception, with a single powerful AI perception chip handling exterior vision, interior vision, speech recognition, and other perception tasks that require AI acceleration. This hardware-level change will ultimately lead to an “independent perception layer” in the system software.

A mature intelligent cockpit perception capability relies mainly on a well-developed, high-performance camera module for in-cabin visual monitoring (generally meaning the driver monitoring system, DMS). In addition, high-performance millimeter-wave radar is used to detect living occupants, assisting the camera in determining whether the driver or passengers are present.
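
The camera and radar cooperation described above can be illustrated with a small fusion sketch. It assumes each sensor already outputs a per-seat presence confidence between 0 and 1 and combines the two with a noisy-OR rule, which treats them as independent evidence. The class names, seat labels, and the 0.8 threshold are hypothetical and not taken from any production cabin monitoring system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SeatObservation:
    seat: str
    camera_conf: float  # presence confidence from the in-cabin camera, 0..1
    radar_conf: float   # presence confidence from mmWave vital-sign detection, 0..1


def fuse_presence(obs: SeatObservation) -> float:
    """Noisy-OR fusion: the seat counts as empty only if both sensors 'miss'."""
    return 1.0 - (1.0 - obs.camera_conf) * (1.0 - obs.radar_conf)


def occupied_seats(observations: List[SeatObservation], threshold: float = 0.8) -> List[str]:
    """Seats whose fused presence confidence reaches the (hypothetical) threshold."""
    return [o.seat for o in observations if fuse_presence(o) >= threshold]


if __name__ == "__main__":
    frame = [
        SeatObservation("driver", camera_conf=0.97, radar_conf=0.90),
        # Occluded rear occupant: camera unsure, radar confident.
        SeatObservation("rear_left", camera_conf=0.30, radar_conf=0.85),
        SeatObservation("rear_right", camera_conf=0.05, radar_conf=0.10),
    ]
    print(occupied_seats(frame))  # ['driver', 'rear_left']
```

Noisy-OR is used here only because it is simple: an occupant hidden from the camera (for example, under a blanket) can still be flagged when the radar is confident. A real system would also need temporal filtering and calibrated confidences.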

L3 autonomous driving is restricted to its operational design domain (ODD) and requires the driver to take over when requested. Because a fatigued or distracted driver may be unable to take over the car effectively, the car needs in-cabin perception to track the driver’s state. An intelligent cockpit system with in-cabin visual and voice perception can detect the driver’s state effectively, helping to improve the decision accuracy and safety of the autonomous driving system.
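
A minimal sketch of how in-cabin perception might gate an L3 takeover request is shown below. It uses a PERCLOS-style metric (the fraction of frames in a time window in which the eyes are closed) together with the longest continuous interval of gaze off the road. The frame rate, the 0.15 PERCLOS limit, and the 2-second off-road limit are illustrative assumptions, not values from a standard or from the original report.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class DriverSample:
    eyes_closed: bool   # eyelids judged closed in this camera frame
    gaze_on_road: bool  # gaze estimate falls inside the forward road region


def perclos(samples: Sequence[DriverSample]) -> float:
    """Fraction of frames in the window with eyes closed."""
    return sum(s.eyes_closed for s in samples) / len(samples)


def longest_off_road_frames(samples: Sequence[DriverSample]) -> int:
    """Length of the longest run of consecutive frames with gaze off the road."""
    longest = current = 0
    for s in samples:
        current = 0 if s.gaze_on_road else current + 1
        longest = max(longest, current)
    return longest


def takeover_readiness(samples: Sequence[DriverSample], fps: float = 10.0,
                       perclos_limit: float = 0.15, max_off_road_s: float = 2.0) -> str:
    """Classify the driver as 'unknown', 'fatigued', 'distracted' or 'ready'."""
    if not samples:
        return "unknown"  # no camera data: do not assume the driver can take over
    if perclos(samples) > perclos_limit:
        return "fatigued"
    if longest_off_road_frames(samples) / fps > max_off_road_s:
        return "distracted"
    return "ready"


if __name__ == "__main__":
    window = [DriverSample(eyes_closed=(i % 4 == 0), gaze_on_road=True) for i in range(40)]
    print(takeover_readiness(window))
```

If the result is anything other than `ready`, the system would need to escalate alerts before handing over control; how it escalates is outside the scope of this sketch.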

Truly high-performance perception-based interaction can also proactively push interaction requests for specific scenarios, such as offering news and information, reporting vehicle status, and initiating interaction between the car and the driver, which reduces the driver’s interaction burden and improves the interaction experience.

Developing intelligent cockpit cameras mainly requires the following capabilities:

- Mature camera module development experience and control system integration capability;

- Professional whole-vehicle layout support;

- Complete active alignment (AA) assembly, calibration, and end-of-line (EOL) production and manufacturing processes.

In addition, biometric recognition based on high-resolution, high-performance in-cabin radar has driven the iteration of driver monitoring systems and effectively enhanced the vehicle’s perception capability.

At the same time, breakthroughs in voice and gesture control, together with multimodal perception fusion, make perception more accurate and proactive. In-car visual perception can effectively support diverse cockpit functions and enable a personalized in-car experience; it can also support autonomous driving functions and help ensure decision accuracy.

System Architecture of Intelligent Cockpit

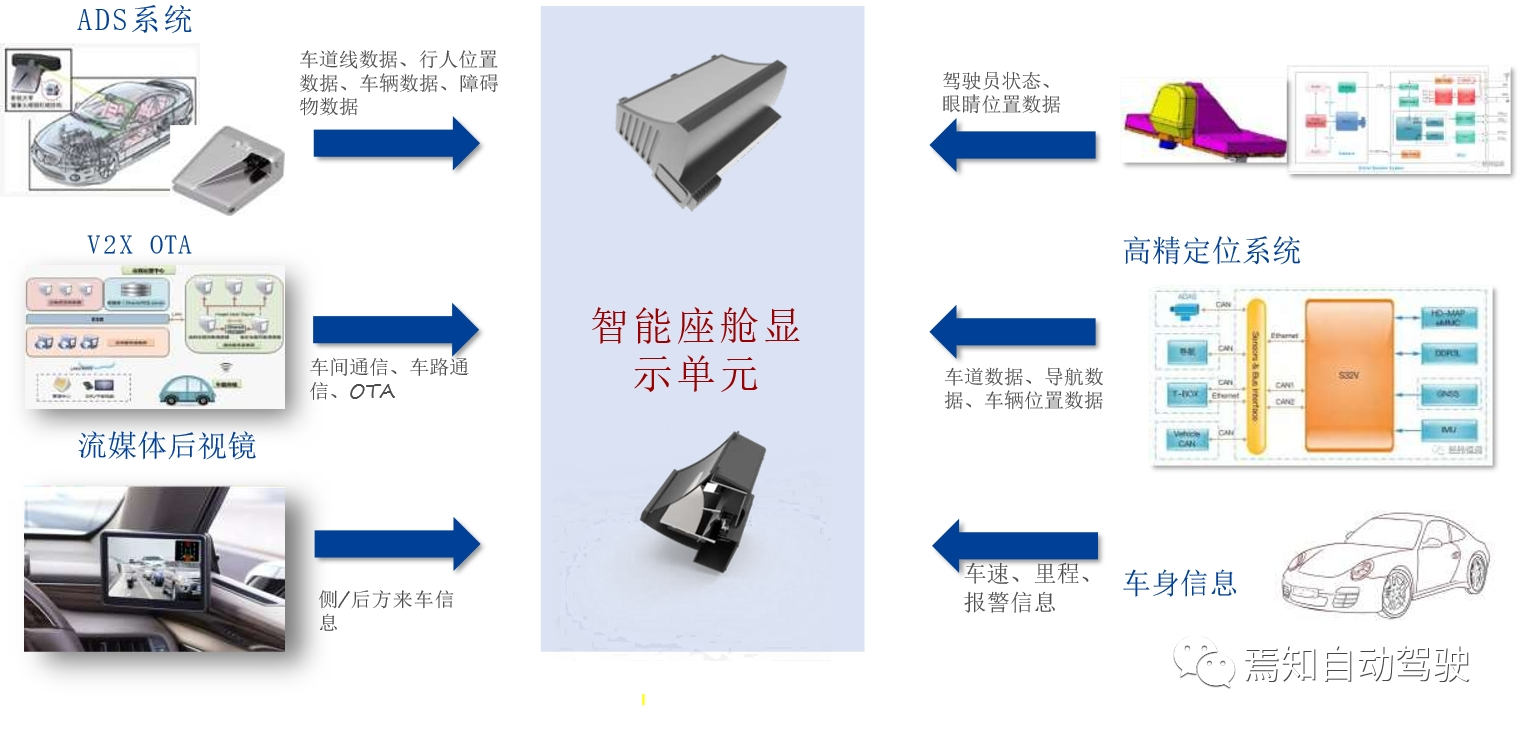

Currently, the presentation capability of the intelligent cockpit system is divided mainly between two control domains: the safety domain, which is closely tied to the intelligent driving system, and the infotainment domain, which is tied to the intelligent interaction terminals. The relationship between the two domains is shown in the following figure:

The intelligent driving system and the intelligent cockpit system are two independent information processing units. The intelligent driving system must make efficient use of the intelligent cockpit domain, using the instrument cluster and the AR-HUD to display driving information and the central control screen for driving control, settings, and interaction prompts. The upgraded intelligent cockpit domain controller needs to connect to cloud service platforms, mobile communication and Ethernet gateway units, power amplifiers, and wireless units, and to drive the occupant interaction displays: the central multimedia display, the driver monitoring display, passenger monitoring, and so on.
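
The division of labor above, in which the driving domain produces information and the cockpit domain owns the displays, can be sketched as a simple publish-and-route mechanism. The message kinds (`lane_guidance`, `adas_settings`) and display names (`cluster`, `ar_hud`, `center_screen`) are hypothetical; in a vehicle this role would typically be played by in-vehicle middleware over Ethernet rather than in-process callbacks.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class DrivingMessage:
    kind: str                                    # e.g. "lane_guidance" (hypothetical)
    payload: dict = field(default_factory=dict)


class CockpitDisplayRouter:
    """Routes driving-domain messages to the cockpit display units subscribed to them."""

    def __init__(self) -> None:
        self._routes: Dict[str, List[str]] = defaultdict(list)
        self._sinks: Dict[str, Callable[[DrivingMessage], None]] = {}

    def register_display(self, name: str, sink: Callable[[DrivingMessage], None]) -> None:
        self._sinks[name] = sink

    def route(self, kind: str, *displays: str) -> None:
        self._routes[kind].extend(displays)

    def publish(self, msg: DrivingMessage) -> List[str]:
        """Deliver msg to every registered display routed for its kind; return their names."""
        delivered = []
        for display in self._routes.get(msg.kind, []):
            sink = self._sinks.get(display)
            if sink is not None:
                sink(msg)
                delivered.append(display)
        return delivered


if __name__ == "__main__":
    router = CockpitDisplayRouter()
    for name in ("cluster", "ar_hud", "center_screen"):
        router.register_display(name, lambda m, n=name: print(f"{n}: {m.kind} {m.payload}"))
    # Driving information goes to the cluster and AR-HUD; settings go to the central screen.
    router.route("lane_guidance", "cluster", "ar_hud")
    router.route("adas_settings", "center_screen")
    router.publish(DrivingMessage("lane_guidance", {"next_lane": 2}))
```

Keeping the routing table in the cockpit domain means the driving domain does not need to know which screens exist, which fits the separation of the two processing units described above.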

The overall system architecture of the intelligent cockpit domain is similar to that of the intelligent driving domain, and some parts can be reused between the two. For example, the perception results of the intelligent driving domain may be reused as perception input for the intelligent cockpit domain. In general, the cockpit domain leans toward displaying video information, while the intelligent driving domain leans toward semantic recognition and processing of the environment. Therefore, if the raw perception input is processed by the intelligent driving domain controller, that controller must include suitable encoders and decoders and an image processor such as a GPU to encode and decode the raw video and pass it to the central processing unit.

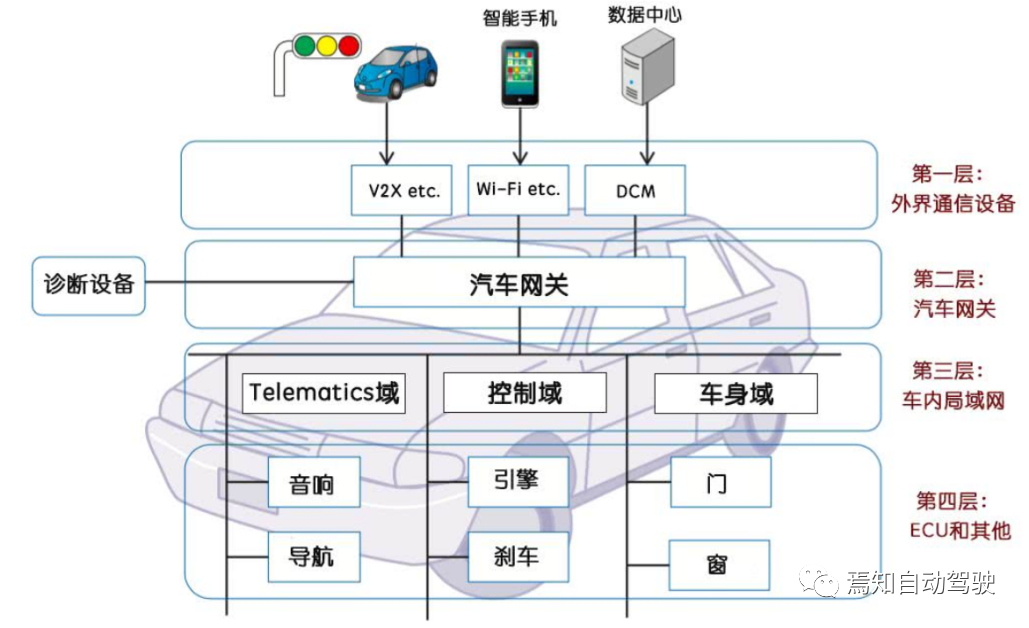

As the electronic architecture transitions from electronic control units (ECUs) to domain controllers (DCUs), the computing power of the underlying hardware for in-vehicle audio and video entertainment is increasing rapidly, making it possible for one chip to drive multiple screens. The rich functions of advanced driver assistance systems (ADAS) make information processing more demanding for the driver, and the need for real-time information processing calls for more intelligent interaction and display. As AI engines mature, they greatly enhance the intelligent experience.

Distributed cockpit ECUs struggle to cooperate with the safety domain (ADAS/AD) controller and cannot present real-time environmental information from GPS, radar, LiDAR, and cameras to users. The cockpit domain control platform therefore consolidates multiple cockpit ECUs onto one controller, integrating the information resources of the IVI, HUD, driving information, multifunction information display, ADAS, and vehicle networking into a highly integrated hardware platform. This reduces overall vehicle cost, optimizes interior space, and lowers power consumption; it also allows the operating system to be integrated, achieves software-hardware decoupling, and takes the first step toward the “software-defined car”.

Following this trend, the cabin, powertrain, and chassis domains will converge into a multi-domain integration, driving the development of new in-car interaction technology. The cabin domain controller will take part in controlling the intelligent driving, powertrain, and chassis domains, and will support safety-critical operations such as controlling intelligent driving by voice. As the autonomous driving system continues to integrate with the powertrain and chassis domains and service-oriented architecture (SOA) becomes widespread in vehicles, complex driving behaviors may be abstracted as driving services. Once its functional safety level has been upgraded, the cabin domain controller can directly call the driving services of the autonomous driving domain to control the vehicle, creating a new form of human-machine co-driving. After the cabin domain’s system architecture, hardware, and software are upgraded, the intelligent vehicle architecture will become a service-oriented system and hardware architecture built around the whole vehicle and oriented toward driving services.
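
The idea that the cabin domain controller may call driving services only after its functional safety level is upgraded can be sketched as a service registry that compares the caller's ASIL before dispatching. The ASIL levels (QM, then A to D) come from ISO 26262; everything else here, including the registry, the `lane_change` service, and the requirement that callers be rated ASIL B, is a hypothetical simplification, since a real safety case involves far more than a rating comparison.

```python
from enum import IntEnum
from typing import Any, Callable, Dict, Tuple


class Asil(IntEnum):
    """ISO 26262 integrity levels, ordered so comparisons work (QM lowest, D highest)."""
    QM = 0
    A = 1
    B = 2
    C = 3
    D = 4


class ServiceDenied(Exception):
    pass


class DrivingServiceRegistry:
    """Hypothetical SOA-style registry exposed by the autonomous driving domain."""

    def __init__(self) -> None:
        self._services: Dict[str, Tuple[Asil, Callable[..., Any]]] = {}

    def offer(self, name: str, required: Asil, handler: Callable[..., Any]) -> None:
        self._services[name] = (required, handler)

    def call(self, name: str, caller_level: Asil, **kwargs: Any) -> Any:
        required, handler = self._services[name]
        if caller_level < required:
            raise ServiceDenied(f"{name} needs ASIL {required.name}, caller is {caller_level.name}")
        return handler(**kwargs)


if __name__ == "__main__":
    ad_domain = DrivingServiceRegistry()
    ad_domain.offer("lane_change", Asil.B, lambda direction: f"lane change {direction} accepted")

    # A voice command in the cabin is translated into a service call.
    try:
        ad_domain.call("lane_change", Asil.QM, direction="left")
    except ServiceDenied as err:
        print("rejected:", err)
    print(ad_domain.call("lane_change", Asil.B, direction="left"))
```

The point of the sketch is the ordering: the same voice-triggered request is rejected from a QM-rated caller and accepted once the caller meets the service's required level.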

Intelligent Cabin System Display Capability

Generally, the display capability of the intelligent cabin domain mainly includes the instrument cluster, the central control display, and the intelligent AR-HUD system. By integrating the T-Box, DMS, ADAS, high-precision positioning system, and, in the future, V2X, the intelligent cabin can fully demonstrate its advantages in autonomous driving. For example, the intelligent cabin system feeds navigation information into the intelligent driving domain controller, where it is matched through the SD-HD map (standard-definition to high-definition map), enabling lane-level, high-precision navigation and positioning.
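
The lane-level matching step can be illustrated with a deliberately simplified one-dimensional sketch: the vehicle's lateral offset from the road's left edge (assumed to come from high-precision positioning) is matched against lane widths from an HD map, and an SD-map maneuver such as a right-hand exit is turned into a signed number of lane changes. The function names, maneuver labels, and lane widths are hypothetical; real map matching also fuses lane-line perception and positioning uncertainty.

```python
from bisect import bisect_right
from itertools import accumulate
from typing import Optional, Sequence


def match_lane(lateral_offset_m: float, lane_widths_m: Sequence[float]) -> Optional[int]:
    """0-based lane index (0 = leftmost) for an offset measured from the road's left edge.

    Returns None when the offset lies outside the mapped carriageway.
    """
    right_edges = list(accumulate(lane_widths_m))  # cumulative right edge of each lane
    if not right_edges or lateral_offset_m < 0 or lateral_offset_m >= right_edges[-1]:
        return None
    return bisect_right(right_edges, lateral_offset_m)


def lane_changes_for(maneuver: str, current_lane: int, lane_count: int) -> int:
    """Signed number of lane changes (positive = to the right) for an SD-map maneuver."""
    target = {"exit_right": lane_count - 1, "exit_left": 0}.get(maneuver, current_lane)
    return target - current_lane


if __name__ == "__main__":
    widths = [3.5, 3.5, 3.75]       # three lanes from an HD-map road segment (assumed)
    lane = match_lane(5.2, widths)  # positioning reports 5.2 m from the left edge
    print(lane, lane_changes_for("exit_right", lane, len(widths)))  # 1 1
```

A point exactly on a lane boundary is assigned to the lane on its right by `bisect_right`; any consistent tie-breaking rule would do for this illustration.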

The intelligent cabin upgrades ambient lighting beyond traditional single-color and multi-color LED schemes. Its capabilities include support for 4,000+ RGB LEDs on a single channel, smaller LED packages for flexible layout, large-scale LED deployment, and more design freedom for functional and effective designs. New interactive features such as dynamic music following and fusion with emotion recognition are also supported.
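
Dynamic music following can be sketched as mapping the loudness of each audio frame to LED brightness. The example below computes the frame's RMS level, converts it to dBFS, and scales a base RGB color linearly between a -60 dB floor and full scale. The floor value, the theme color, and the function names are assumptions for illustration; the report does not describe the actual mapping.

```python
import math
from typing import Sequence, Tuple


def frame_rms(samples: Sequence[float]) -> float:
    """Root-mean-square level of one audio frame, samples normalised to [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def brightness_from_level(rms: float, floor_db: float = -60.0) -> float:
    """Map an RMS level to 0..1 brightness on a dB scale: floor_db -> 0, 0 dBFS -> 1."""
    if rms <= 0.0:
        return 0.0
    level_db = 20.0 * math.log10(rms)
    return min(1.0, max(0.0, (level_db - floor_db) / -floor_db))


def scale_color(rgb: Tuple[int, int, int], brightness: float) -> Tuple[int, int, int]:
    """Dim an 8-bit RGB color by the given brightness factor."""
    r, g, b = rgb
    return (round(r * brightness), round(g * brightness), round(b * brightness))


if __name__ == "__main__":
    base_color = (255, 80, 200)  # hypothetical theme color
    quiet = [0.01 * math.sin(i / 5) for i in range(480)]
    loud = [0.8 * math.sin(i / 5) for i in range(480)]
    for frame in (quiet, loud):
        print(scale_color(base_color, brightness_from_level(frame_rms(frame))))
```

A logarithmic (dB) mapping is used because perceived loudness is closer to logarithmic than linear; with a linear mapping, quiet passages would leave the lights almost dark.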

The streaming media rearview mirror in the intelligent cabin also continues to evolve, with ongoing upgrades such as blind-spot indication, collision warning, lane change warning, rain and fog penetration, and night vision enhancement. Through functional expansion and integration, the streaming media rearview mirror can be combined with dashcams, ADAS, streaming media side mirrors, reversing cameras, cabin monitoring systems, and other systems to provide services to the intelligent driving domain.
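
The lane change warning function can be sketched with a time-to-collision (TTC) check on a vehicle approaching from behind in the adjacent lane, where TTC is the gap divided by the closing speed. The 3 m blind-zone length and 3.5 s TTC limit are illustrative assumptions, and the gap and closing speed are assumed to be supplied by the rear camera or radar.

```python
import math
from typing import Optional


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until a following vehicle closes the gap; infinity if it is not closing in."""
    if closing_speed_mps <= 0.0:
        return math.inf
    return gap_m / closing_speed_mps


def lane_change_warning(gap_m: float, closing_speed_mps: float,
                        blind_zone_m: float = 3.0, ttc_limit_s: float = 3.5) -> Optional[str]:
    """Return a warning type for the adjacent-lane target, or None if a lane change looks clear."""
    if gap_m <= blind_zone_m:
        return "blind_zone"    # vehicle alongside or just behind (blind-zone indication)
    if time_to_collision(gap_m, closing_speed_mps) < ttc_limit_s:
        return "closing_fast"  # vehicle approaching quickly (lane change warning)
    return None


if __name__ == "__main__":
    for gap, closing in [(2.0, 0.0), (30.0, 10.0), (30.0, -2.0)]:
        print(gap, closing, lane_change_warning(gap, closing))
```

Checking the blind zone first means a vehicle alongside triggers a warning even when it is not closing in, which is the case a TTC check alone would miss.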

The intelligent cabin system can also improve comfort through intelligent seats: seats and rearview mirrors can be adjusted precisely, quickly, and conveniently; comfortable driving and riding positions can be stored and easily recalled through a memory function; and seat ventilation and heating further improve comfort. Automatic functions such as the welcome mode and automatic downward tilting of the rearview mirror also enhance the overall quality of the vehicle.
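
The seat memory function can be sketched as storing a few positions and, on recall, computing how far each actuator must move. The axes (fore-aft travel, height, recline, mirror tilt), the three-slot limit, and the class names are hypothetical; a real implementation would drive the motors through the body controller with end-stop and pinch protection.

```python
from dataclasses import asdict, dataclass
from typing import Dict


@dataclass
class SeatPosition:
    fore_aft_mm: int
    height_mm: int
    recline_deg: float
    mirror_tilt_deg: float


class SeatMemory:
    """Stores a small number of seat/mirror positions and computes moves to recall them."""

    def __init__(self, slots: int = 3) -> None:
        self._max_slots = slots
        self._saved: Dict[int, SeatPosition] = {}

    def save(self, slot: int, position: SeatPosition) -> None:
        if not 1 <= slot <= self._max_slots:
            raise ValueError(f"slot must be between 1 and {self._max_slots}")
        self._saved[slot] = position

    def recall(self, slot: int, current: SeatPosition) -> Dict[str, float]:
        """Per-axis movement (target minus current) needed to reach the stored position."""
        target, now = asdict(self._saved[slot]), asdict(current)
        return {axis: target[axis] - now[axis] for axis in target}


if __name__ == "__main__":
    memory = SeatMemory()
    memory.save(1, SeatPosition(fore_aft_mm=120, height_mm=40, recline_deg=25.0, mirror_tilt_deg=-2.0))
    print(memory.recall(1, SeatPosition(100, 40, 20.0, 0.0)))
```

Returning deltas rather than absolute targets keeps the sketch independent of how each actuator is commanded; the slots could equally be keyed by a driver identity from in-cabin face recognition.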

Conclusion

Compared with intelligent driving, intelligent cockpit technology is less difficult to implement and delivers results that users can perceive, which helps rapidly strengthen a product’s competitive differentiation. Autonomous driving frees the driver’s hands and feet, which requires cockpit functions to be intelligently transformed along five dimensions (interaction, environment, control, space, and data) to improve the overall experience.

If fully realized (L5, with the steering wheel and pedals removed; here ADAS refers to L0-L2 and AD autonomous driving to L3-L4), the concept of an intelligent “cockpit” would no longer exist, because the autonomous vehicle would physically be reduced to a “mobile chassis” and the “cockpit” would truly become a “mobile lounge”. As the technology matures, hardware architecture may become even more centralized, accelerating the integration of the driving and cockpit domains and ultimately producing an in-vehicle central computer.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.