Article by Feng Yuchen

This article covers Black Sesame Intelligence’s chip architecture and core competitive strengths: its AI chip research and development, its self-developed core IP, its autonomous driving computing platform, and its toolchain.

Founded in 2016, Black Sesame Intelligence specializes in computing chips for autonomous driving at L2+ and above. The core team comes from chip companies, so its strengths lie in hardware and IP. As a startup, Black Sesame has developed its own core IP and done in-depth work on automotive-grade qualification and reliability to meet customers’ development needs. It also provides supporting toolchains and software algorithms.

The evolution from traditional cars to autonomous driving is transforming society’s core productivity

The transformation of the car industry brings both challenges and opportunities. As vehicles become intelligent and connected, core chips, electronic and electrical architectures, and artificial intelligence algorithms are moving into the mainstream. Ultimately, the development of big data and AI will have a profound impact on society.

Counting from Waymo, the commercial application of autonomous driving has been developing for ten years. The industry has gradually recognized that it cannot be achieved in one step, nor by simply crossing from one level to the next in the SAE classification. Instead, it requires coordination across the industry and the exploration of different implementation paths. Currently, L3 is the dividing line, and there are two main implementation paths.

One is the bottom-up approach, from L1 to L3, aimed at commercializing passenger cars and commercial vehicles. Suppliers provide L1, L2, or L3 intelligent modules to automakers, who deploy them in their vehicles. Feedback is then collected from consumers to drive product innovation and optimization. This is the main implementation direction for the autonomous driving industry.

The other approach is represented by high-tech companies such as Waymo and Didi, which start by operating self-driving vehicles in restricted areas. In other words, they begin at Level 4 and work downward, with applications such as Robotaxi, unmanned buses, and unmanned logistics.

Although the two approaches are different, the core is still the integration of the entire industry, the development of artificial intelligence, and the advancement of vehicle-road cooperation technology.

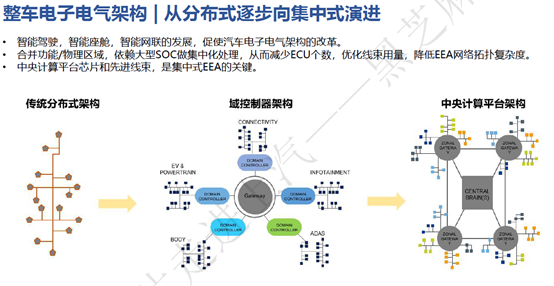

The evolution of the vehicle’s electronic and electrical architecture from distributed to centralized drives the development of autonomous driving supercomputing platforms

The Electronic and Electrical Architecture (EEA) has evolved from distributed to centralized: first to a domain controller architecture, and then to a central computing platform architecture. Overall, this means consolidating functions and reducing the number of wiring harnesses. The consequence is that the demands on a single chip increase significantly, including computing power, data throughput, and multistage connections.

Currently, whether for AEB, ACC, or APA, ADAS domain controllers time-multiplex their low-speed and high-speed functions on chips with relatively low computing power, approximately ≤10 TOPS. Such chips can run lightweight neural networks or artificial intelligence algorithms, and their overall functional safety level is relatively low, mostly ASIL-B.

Current hardware and chips cannot meet the requirements of future central computing platforms. These platforms call for a higher hardware standard: chips that deliver high computing power, high reliability, and low latency.

Black Sesame Intelligence’s Vehicle-Grade High Computing Power Chip

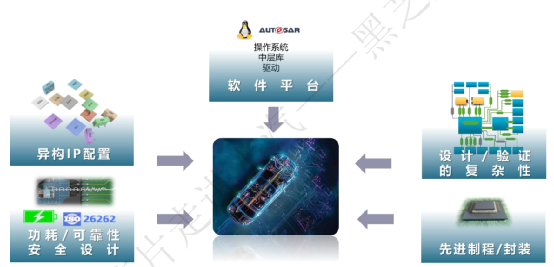

Well-known SoC manufacturers in the industry include NVIDIA, Qualcomm, and Mobileye, each with its own expertise in specific areas. Chip making is a very complex process that requires accumulated time and experience in both architecture and process technology. Many companies currently obtain their chip IP as general-purpose third-party IP. Black Sesame, by contrast, stresses the complexity and importance of the overall IP configuration: while pursuing computing power, it treats utilization as even more important.

SoCs are mostly heterogeneous designs that combine different compute IP blocks such as GPU, NPU, and CPU. The configuration of these heterogeneous IP blocks is therefore critical in chip design. Configuring IP is not just about maximizing computing power: the overall design and verification must also account for bandwidth, peripherals, and memory. In addition, data flows through the chip in stages, so during design and verification each processing step must be optimized for that data flow and its throughput.

Black Sesame’s IP design is demand-driven. The IP configuration is determined by the expected application scenario, the number of sensors to be processed, and the complexity of the algorithms and functions in that scenario. Power and safety design follow. From the very beginning, Black Sesame has applied automotive-grade and functional safety requirements throughout the design process, ensuring that the chip itself meets stringent vehicle-grade standards.

In Black Sesame’s overall IP design, advanced process technologies are combined with power-saving modes. In design and verification, Black Sesame accounts for peripheral bandwidth and memory configuration, verifies data transfer and throughput, and optimizes the configuration accordingly. By prioritizing vehicle-grade and functional safety standards, Black Sesame lays a solid foundation for subsequent product iterations.

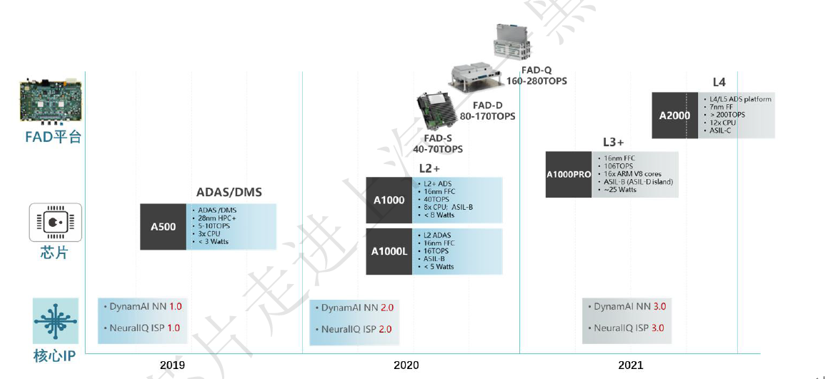

Black Sesame’s current chip is the A1000, built on a 16nm process. The chip as a whole is rated ASIL-B, and its safety island can reach ASIL-D. The AI computing part is based on Black Sesame’s entirely self-developed NPU, which delivers 40 TOPS of computing power.

At this year’s Shanghai Auto Show, Black Sesame released the new-generation A1000 Pro, which is also built on a 16nm process but reaches 106 TOPS of computing power. Its functional safety design and safety island follow the A1000 design, and engineering samples are expected in Q3 this year.

The table below compares Black Sesame’s SoC with products from NVIDIA, Mobileye, and others. Although a start-up, Black Sesame has its own self-developed IP, and its NPU is designed entirely around neural networks. In terms of energy efficiency, it is superior to NVIDIA.

Black Sesame Intelligence’s Two Core Competitive Advantages: NeuralIQ ISP and DynamAI NN

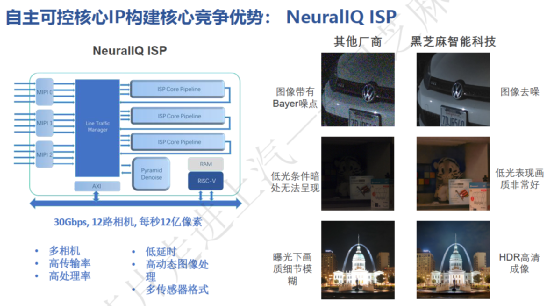

The first self-developed core IP that builds core competitiveness: NeuralIQ ISP

The ISP is one of Black Sesame’s two core IPs, and it matters because image quality determines everything downstream. As Ms. Wu Yu explained, if the image data is not brought to its best state before being fed to the neural network, backend detection and processing alone can hardly meet the requirements. Black Sesame has therefore invested heavily in the ISP and has reached a leading position domestically. As shown in the figure, each MIPI block corresponds to a pipeline, and each pipeline has clearly defined ISP stages, including noise reduction, high-dynamic-range (HDR) processing, and white balance. Each stage has a corresponding software team responsible for tuning and invoking it. NeuralIQ ISP gives the camera an advantage in high-definition imaging in rain or dim light.
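To make the idea of a staged ISP pipeline concrete, here is a minimal sketch in Python. It is not NeuralIQ ISP code: the function names `gray_world_white_balance` and `run_pipeline` are hypothetical, and the gray-world method is a simple textbook white balance algorithm chosen only for illustration. Noise reduction and HDR stages would slot into the same stage list.

```python
def gray_world_white_balance(pixels):
    """Scale R, G, B so their channel means match (gray-world assumption).

    pixels: list of (r, g, b) tuples with values in 0..255.
    This is a textbook method, not the algorithm used by any specific ISP.
    """
    n = len(pixels)
    if n == 0:
        return []
    # Mean of each color channel over the whole image.
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3.0
    # Per-channel gain; a channel with zero mean is left unchanged.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [
        tuple(min(255, max(0, round(p[c] * gains[c]))) for c in range(3))
        for p in pixels
    ]


def run_pipeline(pixels, stages):
    """Apply ISP stages in order; each stage maps a pixel list to a pixel list."""
    for stage in stages:
        pixels = stage(pixels)
    return pixels


# Hypothetical usage: a strongly red-tinted two-pixel "image".
balanced = run_pipeline([(200, 100, 50), (100, 50, 25)],
                        [gray_world_white_balance])
```

Modeling each stage as a function from image to image keeps the stages independently tunable, which mirrors the article's point that each part of the pipeline is optimized separately.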

The second self-developed core IP that builds core competitiveness: DynamAI NN engine

As a high-performance deep neural network platform, the DynamAI NN engine relies on four 4K convolution arrays to execute the typical convolution layers of neural networks. All neural networks can be handled by a separate NPU, whose hardware acceleration and IP design Black Sesame bases on the latest software and algorithms. The benefit is more flexibility for customers in configuring their algorithms. Combined with the ISP, the NPU can achieve optimal results across image processing and neural network processing.
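The link between convolution arrays and utilization can be illustrated with some simple arithmetic. The sketch below counts the multiply-accumulate (MAC) operations in one convolution layer and the minimum number of cycles an accelerator would need if every MAC unit were busy on every cycle. Reading "four 4K arrays" as 4 × 4096 MAC units is an assumption made here for illustration; the article does not specify the array organization, and the layer dimensions are hypothetical.

```python
def conv_macs(h_out, w_out, c_in, c_out, k):
    """Multiply-accumulate count for one k x k convolution layer.

    Each output element (h_out * w_out * c_out of them) needs
    k * k * c_in multiply-accumulates.
    """
    return h_out * w_out * c_out * k * k * c_in


def min_cycles(macs, mac_units):
    """Lower bound on cycles, assuming 100% utilization of all MAC units."""
    return (macs + mac_units - 1) // mac_units  # integer ceiling division


# Hypothetical layer: 3x3 convolution, 64 -> 64 channels, 56x56 output.
layer_macs = conv_macs(56, 56, 64, 64, 3)

# ASSUMPTION: 4 arrays x 4096 MAC units each; not confirmed by the source.
assumed_units = 4 * 4096
ideal_cycles = min_cycles(layer_macs, assumed_units)
```

Real cycle counts are higher than this bound whenever memory bandwidth or layer shapes leave MAC units idle, which is why utilization matters alongside peak computing power.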

Autonomous Driving Computing Platform and Complete AI Development Toolchain

Black Sesame provides an open FAD autonomous driving computing platform. Depending on their application and algorithm requirements, customers can choose a configuration that cascades two or four A1000 chips, which makes it easier to verify algorithms and applications.

In addition, Black Sesame also provides supporting DSP and SDK. Furthermore, Black Sesame provides a complete AI algorithm development toolchain – the “Shanhai” artificial intelligence tool platform. The Shanhai AI tool platform is divided into four parts:

The first part provides 50 neural network reference models based on different visual applications, such as target recognition, small object recognition, and semantic segmentation.

The second part is an artificial intelligence training framework. If customers previously trained their neural networks with TensorFlow, PyTorch, or Caffe, Black Sesame’s tools can convert between frameworks, which simplifies subsequent development and operations.

The third part comprises specific tools, including quantization, graph partitioning, and pruning, among others. It also supports customers’ own toolchains. The output of these operations is a set of parameters and executable code that can be deployed on embedded systems.

The fourth part covers deployment targets, including chips, single boards, and the FAD development platform.
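The quantization step mentioned in the third part can be sketched as follows. This is a generic symmetric per-tensor int8 scheme, shown only to illustrate what a quantization tool produces (integer parameters plus a scale). It is not the Shanhai platform's API, and the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to the int8 range.

    Returns (q, scale) where each q[i] is an integer in [-127, 127]
    and weights[i] is approximately q[i] * scale.
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    # An all-zero (or empty) tensor gets scale 1.0 to avoid division by zero.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [v * scale for v in q]


# Hypothetical usage with a tiny weight tensor.
weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
```

With this scheme the rounding error per weight is at most half of `scale`, so tensors with a few large outliers lose more precision. That trade-off is one reason production toolchains usually offer finer-grained or calibrated variants.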

The development of the autonomous driving industry relies on high computing power SoCs as its core foundation. Only by continuously improving hardware computing power can the industry keep up with growing sensor configurations and iterating software algorithms. Building on its self-developed core IP, the ISP and the NPU, Black Sesame uses the most advanced neural networks and software algorithms to drive chip optimization and innovation. With a complete toolchain, Black Sesame also helps customers quickly verify algorithms and deploy applications. It is reported that several vehicle models equipped with Black Sesame’s high computing power automotive chip will enter mass production this year, giving customers an automotive-grade, high-safety chip on which to bring their products to mass production.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.