Author: EatElephant

As an autonomous driving algorithm engineer, I have always believed that multi-sensor fusion is the only way to achieve autonomous driving. However, Tesla Autopilot and FSD seem to challenge this view. Elon Musk and Tesla’s engineers are clearly among the most outstanding talents in the industry; they surely understand the importance of multi-sensor fusion and would not persist in an obviously wrong decision out of stubbornness. So why did Tesla choose the pure vision route and abandon LiDAR and millimeter wave radar? Perhaps we won’t know the reason with certainty until this year’s AI Day, but Elon has shared some thoughts on this decision on Twitter, and here I will try to analyze and understand them.

According to Mr. Musk’s explanation, the biggest obstacle to fusing camera and millimeter wave radar data is that the radar’s signal-to-noise ratio is very low; in other words, it produces a large number of false detections. When the visual perception results and the radar results are inconsistent, the usual approach is to trust vision and ignore the radar detection.

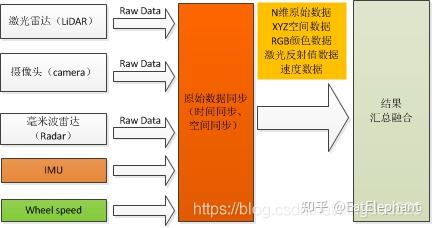

To explain the challenges of fusing vision with millimeter wave radar, let’s first introduce the common multi-sensor fusion strategies. They fall into two categories: pre-fusion (tight coupling) and post-fusion (loose coupling), where “pre” and “post” refer to whether fusion happens before or after each sensor’s data is processed by the algorithm. The following schematic diagrams are from Multi-Sensor Fusion Technology (Basic Concepts, Differences between Pre-Fusion and Post-Fusion) – Beyond What We See – cnblog.

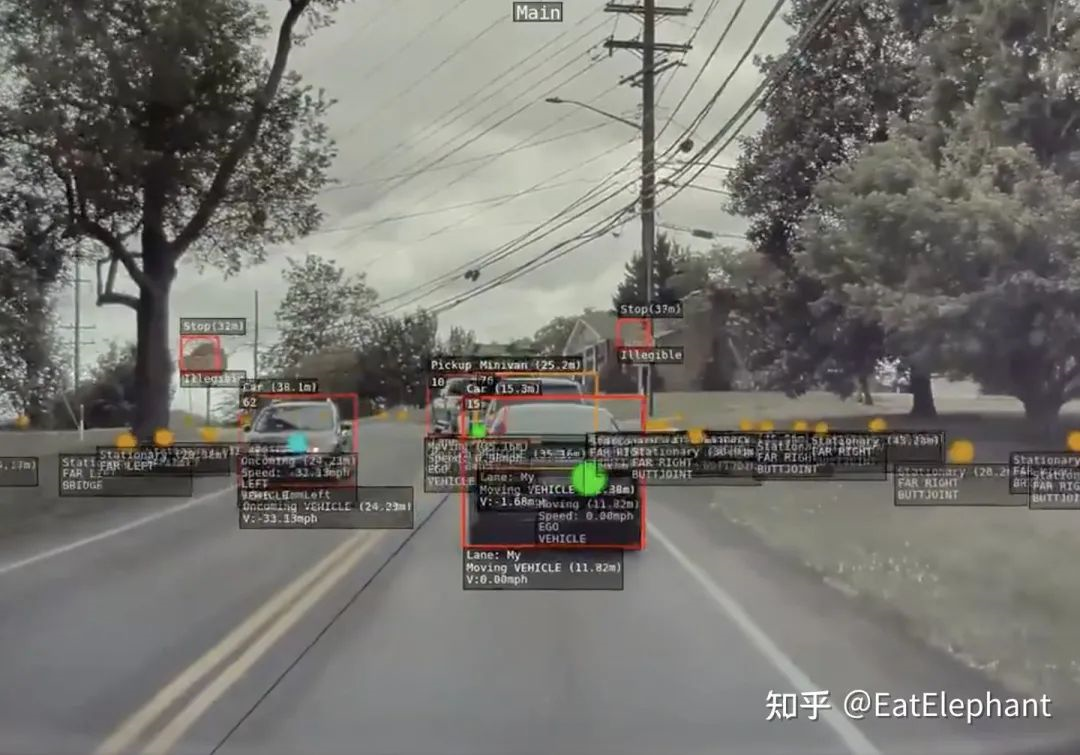

In theory, pre-fusion gives better results because it feeds the raw information from multiple sensors into the algorithm together and thus preserves as much of each sensor’s information as possible. However, camera data is structured, high-resolution image data, while millimeter wave radar raw data is a sparse point cloud with low angular resolution and no elevation information. It is difficult to combine these two types of raw data into a single input for a neural network model, so most current solutions use a post-fusion (loose coupling) strategy.

The drawback of loose coupling is that each sensor inevitably loses information while processing its own data, and the fusion step then works only with those already-degraded outputs. In theory, the result is not as good as tight coupling.

The image below shows the Tesla main camera image overlaid with the millimeter wave radar detections (blue, green, and yellow dots). Because the radar does not resolve height, detections of road signs, vehicles, and road edges are all drawn at the same height. Moreover, because the radar’s angular resolution is poor, it is difficult to correctly match the visual detections (red boxes) with the radar detections (dots) by horizontal position alone.
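The matching problem described above can be sketched in a few lines of Python. This is only an illustrative sketch of post-fusion under simplifying assumptions, not Tesla’s method: the names `VisionDet`, `RadarDet`, `FusedObject`, `post_fuse`, and the `gate_deg` parameter are all hypothetical, each detection is reduced to a single horizontal bearing, and the sample values are made up. It follows the “trust vision” rule from earlier in the article: radar returns that match no camera box are simply dropped.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class VisionDet:
    label: str
    azimuth_deg: float  # horizontal bearing of the box center


@dataclass
class RadarDet:
    azimuth_deg: float  # horizontal bearing only; no elevation
    range_m: float


@dataclass
class FusedObject:
    label: str
    range_m: Optional[float]  # None when no radar return fell inside the gate
    n_candidates: int         # more than 1 means the match is ambiguous


def post_fuse(vision: List[VisionDet], radar: List[RadarDet],
              gate_deg: float) -> List[FusedObject]:
    """Attach the nearest-in-azimuth radar return to each vision box.

    Radar returns that match no vision box are ignored (vision is trusted).
    """
    fused = []
    for v in vision:
        candidates = [r for r in radar
                      if abs(r.azimuth_deg - v.azimuth_deg) <= gate_deg]
        best = min(candidates,
                   key=lambda r: abs(r.azimuth_deg - v.azimuth_deg),
                   default=None)
        fused.append(FusedObject(v.label,
                                 best.range_m if best else None,
                                 len(candidates)))
    return fused


# Made-up scene: one car seen by the camera, three radar returns
# (the car, a road sign just beside it, and a guardrail far to the left).
vision = [VisionDet("car", 2.0)]
radar = [RadarDet(1.5, 40.0), RadarDet(3.0, 45.0), RadarDet(-6.0, 30.0)]

wide_gate = post_fuse(vision, radar, gate_deg=2.0)    # coarse angular resolution
narrow_gate = post_fuse(vision, radar, gate_deg=0.7)  # finer angular resolution
```

With the wide gate, both the car and the neighboring road sign fall inside the association window, so the match is ambiguous; with the narrow gate only one return remains. The gate has to be at least as wide as the radar’s angular uncertainty, which is why poor angular resolution makes this matching unreliable.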

The low signal-to-noise ratio mentioned by Musk comes from inherent limitations of millimeter wave radar, such as its lack of height information and its low angular resolution. Because the radar has no height information, it cannot distinguish static objects that do not affect driving, such as manhole covers and gantry frames, from static obstacles that can cause collisions, such as stationary white trucks. If the system relies too much on the radar, it brakes unnecessarily when passing gantry frames and manhole covers. If it discounts the radar’s detections too heavily, it can cause accidents like those of the past two years, in which Tesla’s Autopilot, despite being equipped with millimeter wave radar, still failed to handle stationary white trucks in many cases (so when using Autopilot, the driver must pay attention to the road!).

Because millimeter wave radar cannot distinguish real obstacles from manhole covers or gantry frames, autonomous driving systems usually give little weight to the radar’s static detections, which has resulted in accidents involving stationary white trucks.
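The dilemma can be made concrete with a small Python sketch. It is a toy model under stated assumptions, not any production logic: the names `RadarTarget`, `is_stationary`, `brake_candidates`, and the `trust_static` flag are hypothetical, the numbers are made up, and the stationary test relies on the standard observation that a stationary object’s measured radial speed is roughly the negative of the ego speed projected onto the line of sight.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class RadarTarget:
    name: str                # ground truth, for illustration only; radar does not report it
    azimuth_deg: float
    radial_speed_mps: float  # relative to the ego vehicle, negative = closing


def is_stationary(t: RadarTarget, ego_speed_mps: float, tol: float = 0.5) -> bool:
    """A stationary object closes at about ego_speed * cos(azimuth)."""
    expected = -ego_speed_mps * math.cos(math.radians(t.azimuth_deg))
    return abs(t.radial_speed_mps - expected) <= tol


def brake_candidates(targets: List[RadarTarget], ego_speed_mps: float,
                     trust_static: bool) -> List[str]:
    """Return the targets the planner would consider braking for."""
    kept = []
    for t in targets:
        if is_stationary(t, ego_speed_mps) and not trust_static:
            continue  # static radar detections are discarded
        kept.append(t.name)
    return kept


# Made-up scene at 25 m/s: without elevation, the first three targets
# produce identical radar measurements.
targets = [
    RadarTarget("manhole cover", 0.0, -25.0),
    RadarTarget("gantry frame", 0.0, -25.0),
    RadarTarget("stopped truck", 0.0, -25.0),
    RadarTarget("lead car", 0.0, -2.0),
]

trusting = brake_candidates(targets, 25.0, trust_static=True)
ignoring = brake_candidates(targets, 25.0, trust_static=False)
```

Trusting static detections keeps all four targets, so the vehicle would brake for the manhole cover and the gantry frame. Ignoring them keeps only the lead car, so the stopped truck is missed. Neither setting is safe, because the radar measurements for the three static objects are indistinguishable.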

It was once rumored that Tesla would adopt 4D millimeter wave radar to avoid the problems of current millimeter wave radar. 4D millimeter wave radar has finer angular resolution and, in theory, would help autonomous driving solve many cases of ghost braking and missed static obstacles. I also had high expectations for Tesla to make this improvement, but it now seems that Tesla is ready to give up millimeter wave radar completely. This may be due to the immaturity, high cost, and insufficient production capacity of 4D millimeter wave radar products, as well as the difficulty of finding a suitable way to perform pre-fusion between radar and camera even with 4D millimeter wave radar.

From Tesla’s perspective, FSD Beta’s bird’s-eye view (BEV) perception, built on multi-camera fusion, has already matched or even surpassed LiDAR and millimeter wave radar in terms of functionality. It may still fall short in stability and generalization, so Tesla’s top priority is to keep iterating on the existing visual fusion solution and improve the generalization of BEV detection. Dropping millimeter wave radar, which in most cases performs worse than the cameras, removes the difficulties of multi-sensor fusion while saving costs. If safety can be ensured through algorithmic improvement, this may be a reasonable approach. However, I remain skeptical about whether this solution can ultimately replace LiDAR and millimeter wave radar. We can only wait and see what the future brings.

The perception results that Tesla FSD Beta obtains in bird’s-eye view through multi-camera fusion often rival or even surpass those of LiDAR and millimeter wave radar.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.