*Author: HYZY*

Autonomous Driving Simulation Test Objects

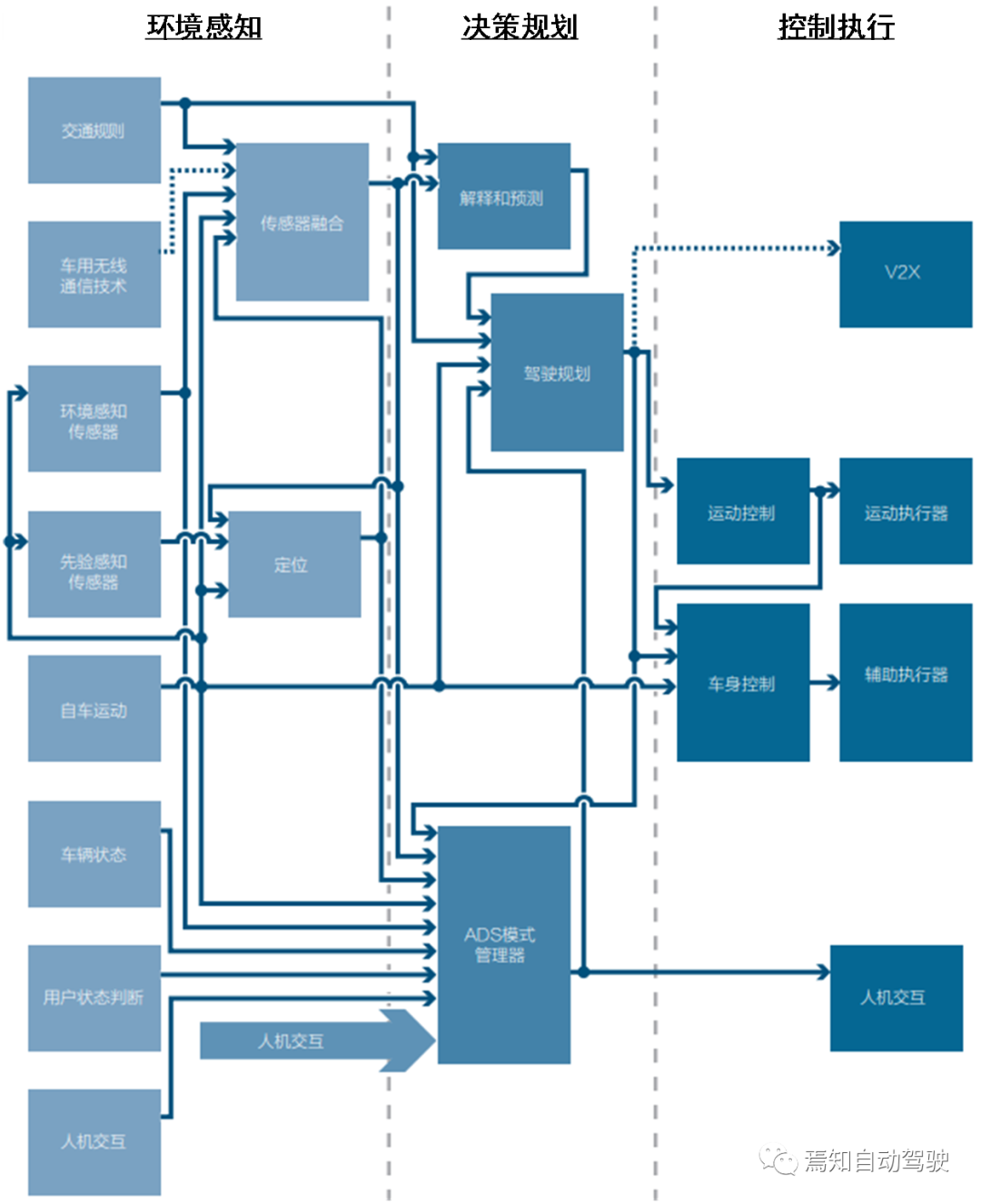

The autonomous driving system consists of three subsystems: environmental perception, decision planning, and control execution. These are built from sensor models, decision models, control object models, and the corresponding software and hardware.
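To make the division into subsystems concrete, here is a minimal Python sketch of a perception, planning, and control chain, written so that each stage is a separate, testable unit. The function names, the 2 s time-gap rule, and the proportional speed controller are illustrative assumptions, not the structure of any particular system.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DetectedObject:
    """Hypothetical output of the perception subsystem."""
    distance_m: float  # longitudinal gap to the object ahead
    speed_mps: float   # object's own speed


def perceive(raw_ranges_m: List[float], object_speed_mps: float) -> DetectedObject:
    # Environmental perception: fuse several noisy range readings into one estimate.
    return DetectedObject(sum(raw_ranges_m) / len(raw_ranges_m), object_speed_mps)


def plan(obj: DetectedObject, ego_speed_mps: float, time_gap_s: float = 2.0) -> float:
    # Decision planning: request a target speed that keeps an assumed time gap.
    safe_gap_m = ego_speed_mps * time_gap_s
    if obj.distance_m < safe_gap_m:
        return min(ego_speed_mps, obj.speed_mps)
    return ego_speed_mps


def control(target_speed_mps: float, ego_speed_mps: float,
            kp: float = 0.5, max_accel: float = 3.0) -> float:
    # Control execution: proportional controller with a bounded acceleration command.
    accel = kp * (target_speed_mps - ego_speed_mps)
    return max(-max_accel, min(max_accel, accel))


def step(raw_ranges_m: List[float], object_speed_mps: float, ego_speed_mps: float) -> float:
    # One pass through the whole chain: sensor data in, acceleration command out.
    obj = perceive(raw_ranges_m, object_speed_mps)
    target = plan(obj, ego_speed_mps)
    return control(target, ego_speed_mps)
```

Because each stage is an ordinary function with explicit inputs and outputs, a test can call `plan` or `control` on its own with synthetic data, which mirrors how the subsystems become separate test objects.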

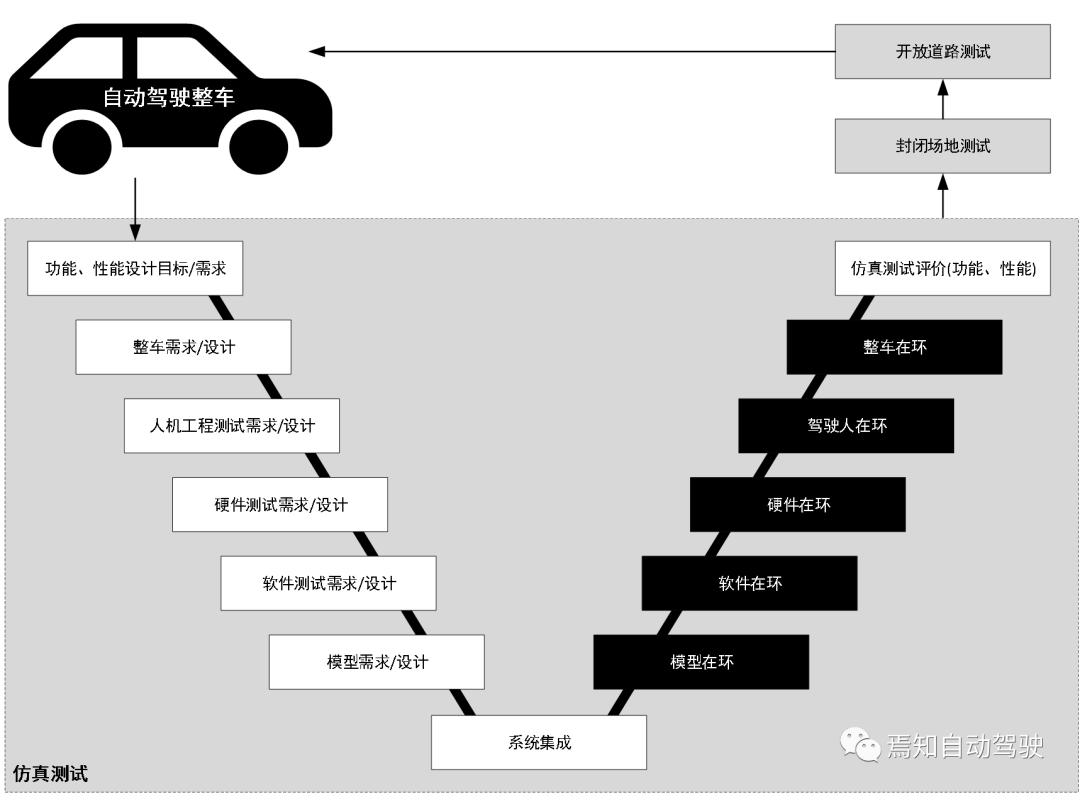

From the perspective of the V-model, a complete test of the autonomous driving system requires testing each layer in turn, from algorithms and software to hardware, subsystems, and the entire vehicle, so as to form a complete test chain.

In terms of testing methods, simulation testing, site testing, and road testing together constitute the “three pillars” of autonomous driving testing.

Site testing and road testing only work at the vehicle level, and the scenarios and operating conditions they cover are limited. Long-tail scenarios in particular are difficult to test with real vehicles.

Simulation testing can effectively make up for these shortcomings of real-vehicle testing. Besides broader scenario coverage, it can test objects at every level, including autonomous driving algorithms, software, hardware, subsystems, and the entire vehicle, forming a complete test chain.

Autonomous Driving Simulation Test Process

Depending on the characteristics of the test objects at each level, different simulation testing environments can be selected. Generally, model-in-the-loop (MIL), software-in-the-loop (SIL), and hardware-in-the-loop (HIL) testing are carried out in turn on the system's model algorithms, computing platforms, domain controllers, and so on. After that, driver-in-the-loop (DIL) and vehicle-in-the-loop (VIL) testing are carried out on the entire vehicle. The specific simulation testing process is shown in the figure below.
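The ordering described above can be read as a gated campaign in which a later, more expensive loop only runs once the earlier ones pass. A minimal sketch, assuming each stage is wrapped in a hypothetical callable that returns pass/fail; running DIL strictly before VIL is also an assumption, since the text only says both follow HIL at the whole-vehicle level.

```python
from enum import IntEnum
from typing import Callable, Dict, List


class Stage(IntEnum):
    # Component-level loops first, whole-vehicle loops last.
    MIL = 1  # model-in-the-loop
    SIL = 2  # software-in-the-loop
    HIL = 3  # hardware-in-the-loop
    DIL = 4  # driver-in-the-loop
    VIL = 5  # vehicle-in-the-loop


def run_campaign(runners: Dict[Stage, Callable[[], bool]]) -> List[Stage]:
    """Run the stages in order and stop at the first failure.

    `runners` maps each stage to a hypothetical callable that executes that
    stage's tests and returns True on pass. Returns the stages that passed.
    """
    passed: List[Stage] = []
    for stage in Stage:  # IntEnum iterates in definition order
        if not runners[stage]():
            break  # later loops cost more; fix the failure before moving on
        passed.append(stage)
    return passed
```

Stopping at the first failure is a design choice for this sketch: a defect found in MIL is cheaper to fix there than to rediscover on a HIL bench or a real vehicle.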

Autonomous Driving Simulation Test Execution Steps

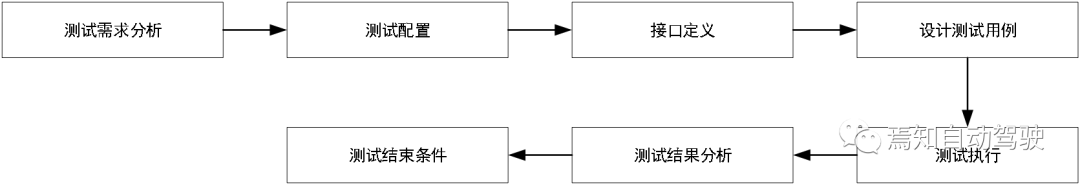

Typical execution steps for autonomous driving simulation testing include: test requirement analysis, test configuration, interface definition, design of test cases, test execution, test result analysis, and test termination criteria.

1. Test Requirement Analysis

Simulation testing requirements usually include the functional and performance requirements of the autonomous driving system under test, the output requirements for simulation results, and the requirements on the simulation testing platform itself.

- Functional and performance requirements of the autonomous driving system under test: functional specifications, performance indicators, architecture diagrams, design operating ranges (operational design domain), test ranges, etc.;
- Output requirements for simulation results: data format and content, output data frequency, and result analysis;
- Requirements on the simulation testing platform itself: synchronicity, real-time performance, stability, etc.

2. Test Configuration

Test configuration means setting the parameters of the simulation testing platform according to the test project and its requirements, including:

- Vehicle model configuration: mainly setting the aerodynamics, powertrain, braking system, steering system, suspension system, tires, etc.
- Static scene configuration: mainly setting road and environment parameters, including roads, lane markings, signs, guardrails, vegetation, streetlights, weather, etc.
- Dynamic scene configuration: mainly defining the target models, including vehicles, pedestrians, animals, and their dynamic relationships.
- Sensor simulation configuration: modeling cameras, millimeter-wave radars, LiDAR, and ultrasonic radars according to their physical characteristics.
- Controller configuration: mainly setting the power supply voltage, interfaces, and protocols.
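The configuration items listed above map naturally onto a structured configuration object. The sketch below, with hypothetical field names, default values, and a deliberately simple `validate` check, shows one way to group vehicle, static scene, dynamic scene, sensor, and controller settings so that a platform can reject an incomplete configuration before a run.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VehicleModel:
    mass_kg: float = 1800.0            # all defaults are illustrative assumptions
    drag_coefficient: float = 0.30
    max_brake_decel_mps2: float = 8.0
    max_steer_angle_deg: float = 35.0


@dataclass
class StaticScene:
    lane_count: int = 3
    lane_width_m: float = 3.5
    weather: str = "clear"


@dataclass
class Actor:
    kind: str         # "vehicle", "pedestrian", "animal", ...
    start_s_m: float  # start position along the road
    speed_mps: float


@dataclass
class Sensor:
    kind: str         # "camera", "mmwave_radar", "lidar", "ultrasonic"
    range_m: float
    update_hz: float


@dataclass
class ControllerConfig:
    supply_voltage_v: float = 12.0
    bus_protocol: str = "CAN"


@dataclass
class TestConfig:
    vehicle: VehicleModel = field(default_factory=VehicleModel)
    static_scene: StaticScene = field(default_factory=StaticScene)
    dynamic_scene: List[Actor] = field(default_factory=list)
    sensors: List[Sensor] = field(default_factory=list)
    controller: ControllerConfig = field(default_factory=ControllerConfig)

    def validate(self) -> List[str]:
        """Return a list of configuration problems (empty if none were found)."""
        problems = []
        if self.vehicle.mass_kg <= 0:
            problems.append("vehicle mass must be positive")
        if not self.sensors:
            problems.append("at least one sensor model is required")
        for s in self.sensors:
            if s.range_m <= 0 or s.update_hz <= 0:
                problems.append(f"invalid {s.kind} parameters")
        return problems
```

For example, `TestConfig(sensors=[Sensor("lidar", 120.0, 10.0)])` passes validation, while a default `TestConfig()` is flagged for having no sensor model.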

3. Interface Definition

Interface definition covers data format interfaces, communication interfaces, interfaces between actuators and controllers, and special interfaces.
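A data format interface is essentially an agreement on byte layout between the simulator and the system under test. As an illustration only, the sketch below defines a hypothetical little-endian object-list message with Python's standard `struct` module; the field set and sizes are assumptions, and real platforms typically use their own protocol definitions.

```python
import struct
from typing import List, Tuple

# Hypothetical wire format for an object-list message from the simulator
# to the system under test. "<" means little-endian with no padding.
HEADER = struct.Struct("<IdH")   # frame_id (uint32), timestamp_s (float64), object count (uint16)
OBJECT = struct.Struct("<Hfff")  # object id (uint16), x_m, y_m, vx_mps (float32 each)

Obj = Tuple[int, float, float, float]


def encode(frame_id: int, timestamp_s: float, objects: List[Obj]) -> bytes:
    """Serialize one frame: header followed by a fixed-size record per object."""
    payload = HEADER.pack(frame_id, timestamp_s, len(objects))
    for obj in objects:
        payload += OBJECT.pack(*obj)
    return payload


def decode(data: bytes) -> Tuple[int, float, List[Obj]]:
    """Parse one frame, rejecting messages whose length does not match the header."""
    frame_id, timestamp_s, count = HEADER.unpack_from(data, 0)
    expected = HEADER.size + count * OBJECT.size
    if len(data) != expected:
        raise ValueError(f"expected {expected} bytes, got {len(data)}")
    objects = [OBJECT.unpack_from(data, HEADER.size + i * OBJECT.size)
               for i in range(count)]
    return frame_id, timestamp_s, objects
```

Checking the length against the header's object count is a cheap way to catch truncated or misaligned frames at the interface boundary instead of deep inside the system under test.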

4. Designing Test Cases

Test case design should balance the principles of adequacy and efficiency, and the description, construction, and execution of autonomous driving test tasks should be repeatable.

Test cases mainly describe the function under test, static scenes, dynamic scenes, expected test results, and pass criteria.
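The elements a test case describes can be captured in a single record, with pass criteria expressed as checks on measured metrics. A minimal sketch follows; the AEB scenario, metric names, and thresholds are invented for illustration, and the fixed `seed` field is one assumed way to support the repeatability requirement.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class TestCase:
    case_id: str
    function: str         # feature under test
    static_scene: str     # reference to a road/environment description
    dynamic_scene: str    # reference to actors and their behavior
    expected_result: str  # human-readable expectation
    pass_criteria: Dict[str, Callable[[float], bool]]  # metric name -> check
    seed: int = 0         # fixed seed so the run can be repeated exactly


def evaluate(case: TestCase, metrics: Dict[str, float]) -> Dict[str, bool]:
    """Apply each pass criterion to the recorded metric of the same name.

    A criterion whose metric was not recorded counts as failed.
    """
    return {name: (name in metrics and check(metrics[name]))
            for name, check in case.pass_criteria.items()}


aeb_case = TestCase(
    case_id="AEB-001",
    function="automatic emergency braking",
    static_scene="straight two-lane urban road, dry asphalt",
    dynamic_scene="ego at 50 km/h approaches a stationary car",
    expected_result="ego stops without collision",
    pass_criteria={
        "min_gap_m": lambda v: v > 0.0,        # no contact with the target
        "max_decel_mps2": lambda v: v <= 10.0,  # illustrative comfort/physics bound
    },
)
```

Treating a missing metric as a failure keeps a broken data pipeline from silently turning into a passed test.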

5. Test Execution

Test execution is the process of carrying out concrete simulation scenario tests against the test requirements of the system under test: a test outline is drawn up, and the software is run so that the simulation platform obtains the system's response data to the input signals.

Specifically, test execution includes setting the initial state, operating the test vehicle, adding target vehicles, test vehicle decision-making, monitoring and automating the test process, storing data, etc.
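The execution activities listed above can be seen together in a toy closed-loop run. The sketch below assumes a single-lane scenario with simple point-mass kinematics and a hypothetical braking rule standing in for the system under test; comments mark which listed activity each part plays. It is not a model of any real simulator.

```python
import csv
import io
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

Row = Tuple[float, float, float, Optional[float]]


@dataclass
class State:
    ego_s: float                      # ego position along the lane (m)
    ego_v: float                      # ego speed (m/s)
    target_s: Optional[float] = None  # target position (m); None until added


def decide(ego_v: float, gap_m: float, target_v: float) -> float:
    """Hypothetical decision rule: brake at 6 m/s^2 if the time gap is under 2 s."""
    if gap_m < 2.0 * ego_v and ego_v > target_v:
        return -6.0
    return 0.0


def run(ego_v0: float = 20.0, target_v: float = 10.0, target_gap0: float = 60.0,
        add_at_step: int = 10, dt: float = 0.1, steps: int = 100) -> Tuple[float, List[Row]]:
    state = State(ego_s=0.0, ego_v=ego_v0)              # initial state setting
    log: List[Row] = []
    min_gap = float("inf")
    for k in range(steps):
        if k == add_at_step:                              # target vehicle addition
            state.target_s = state.ego_s + target_gap0
        accel = 0.0
        if state.target_s is not None:
            gap = state.target_s - state.ego_s
            min_gap = min(min_gap, gap)                   # test process monitoring
            accel = decide(state.ego_v, gap, target_v)    # test vehicle decision-making
            state.target_s += target_v * dt
        state.ego_v = max(0.0, state.ego_v + accel * dt)  # test vehicle operation
        state.ego_s += state.ego_v * dt
        log.append(((k + 1) * dt, state.ego_s, state.ego_v, state.target_s))
    return min_gap, log


def to_csv(log: List[Row]) -> str:
    """Data storage: serialize the recorded trajectory as CSV text."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["t", "ego_s", "ego_v", "target_s"])
    writer.writerows(log)
    return buf.getvalue()


def sweep(gaps=(30.0, 60.0, 90.0)) -> Dict[float, float]:
    """Test process automation: rerun the same scenario over several initial gaps."""
    return {g: run(target_gap0=g)[0] for g in gaps}
```

Calling `sweep()` reruns the scenario for several initial gaps and returns the minimum gap observed in each, while `to_csv(run()[1])` produces the stored trajectory for later analysis.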

6. Test Result Analysis

Simulation results should be processed, including data classification, statistics, filtering, and visualization.
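The first three processing steps can be sketched with the standard library alone. The record layout, category names, and the 1 m threshold below are assumptions for illustration; visualization is left out and would normally be done with a plotting library on the resulting summary.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, TypedDict


class RunResult(TypedDict):
    case_id: str
    category: str     # e.g. "cut-in", "pedestrian", "car-following"
    passed: bool
    min_gap_m: float


def summarize(results: List[RunResult]) -> Dict[str, Dict[str, float]]:
    """Classify results by scenario category and compute per-category statistics."""
    groups: Dict[str, List[RunResult]] = defaultdict(list)
    for r in results:                       # classification
        groups[r["category"]].append(r)
    summary = {}
    for category, runs in groups.items():   # statistics
        summary[category] = {
            "runs": len(runs),
            "pass_rate": sum(r["passed"] for r in runs) / len(runs),
            "mean_min_gap_m": mean(r["min_gap_m"] for r in runs),
        }
    return summary


def failures(results: List[RunResult], gap_threshold_m: float = 1.0) -> List[str]:
    """Filtering: IDs of runs that failed or came closer than the threshold."""
    return [r["case_id"] for r in results
            if not r["passed"] or r["min_gap_m"] < gap_threshold_m]
```

The filter deliberately also catches near-misses that technically passed, since those are often the most useful runs to inspect.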

7. Test Termination Criteria

Termination criteria are mainly used to judge whether the system simulation testing meets the required specifications. They usually include:

- The scheduled system testing tasks have been completed as required.
- The actual testing process followed the predetermined test plan.
- The testing process and all problems discovered during testing have been recorded objectively and completely.
- The entire testing process was under control from start to finish.
- All anomalies found during testing have a reasonable explanation or have been handled correctly and effectively.
- All test cases, test software, and test configurations have been completed, and the data has been recorded.
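Some of these criteria can be tracked as counters and checked automatically. The sketch below covers only the quantifiable ones; whether the process was under control from start to finish still needs human review. All field names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CampaignStatus:
    planned_cases: int
    executed_cases: int
    deviations_from_plan: int   # steps run outside the predetermined plan
    recorded_issues: int        # issues written to the test log
    discovered_issues: int      # issues actually observed
    unexplained_anomalies: int  # anomalies with no explanation or handling
    data_recorded: bool


def unmet_criteria(s: CampaignStatus) -> List[str]:
    """Return the termination criteria not yet satisfied (empty list = may stop)."""
    unmet = []
    if s.executed_cases < s.planned_cases:
        unmet.append("scheduled test tasks not completed")
    if s.deviations_from_plan > 0:
        unmet.append("testing deviated from the predetermined plan")
    if s.recorded_issues < s.discovered_issues:
        unmet.append("not all discovered problems are recorded")
    if s.unexplained_anomalies > 0:
        unmet.append("anomalies lack an explanation or handling")
    if not s.data_recorded:
        unmet.append("test data not recorded")
    return unmet
```

Returning the list of unmet criteria, rather than a single boolean, tells the test team exactly what still blocks sign-off.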

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.