*Author: She Xiaoli*

Recently, our self-driving vehicles have made their debut and ADS is no longer a mysterious organization. From the not-so-impressive demos a few years ago to the mature products of today, it is safe to say that every aspect of the field, from concept to design, has undergone revolutionary changes and reconstructions numerous times. Looking back, this is the painful yet necessary process of forging an innovative product.

The field of safety is no exception.

This process has forced us to set aside all standards and regulations, and question ourselves: fundamentally, where does the safety risk of autonomous driving come from?

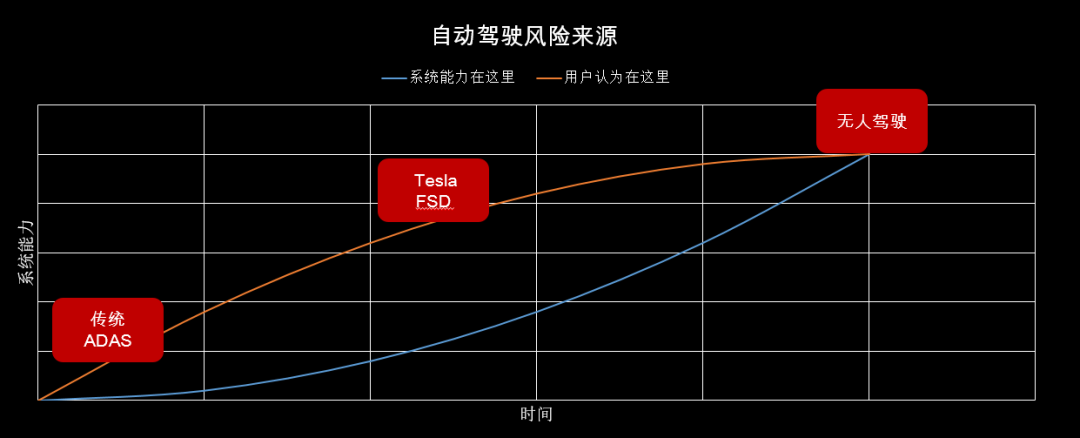

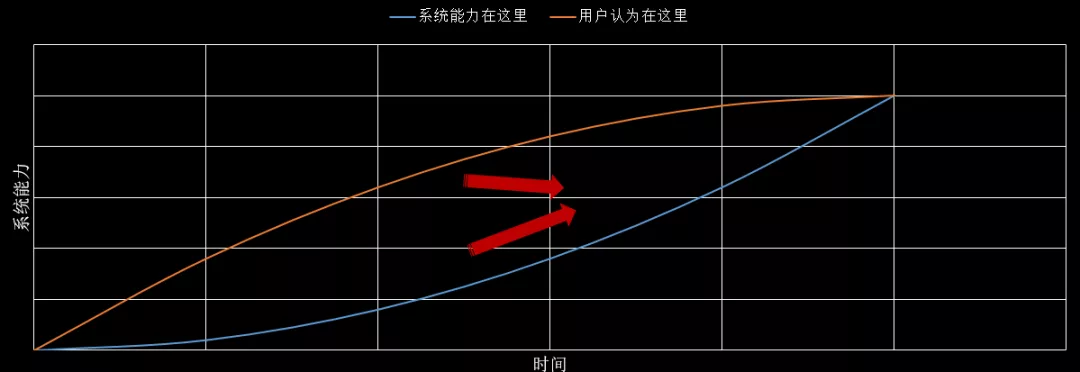

I believe this graph roughly illustrates our understanding:

This graph intuitively expresses the source of safety risks: deviation between user expectations and system capabilities.

With low-level traditional ADAS, users quickly realize they can only occasionally take their foot off the accelerator, and won’t have any misconceptions that it has more advanced features. With low system capabilities and low user expectations, safety is not a problem. The problem is that such products are useless.

With fully mature self-driving technology, both system capabilities and user expectations are high, so safety is not a problem either. The problem is that such technology does not yet exist.

The difficulty lies in the process from the lowest point to the highest point. All self-driving systems on the market are at this stage, striving for a more seamless user experience and trying to raise user expectations. Risks accumulate during this climbing process.

Users are easily pleased by a system’s single points of capability and short-term performance. When the system successfully avoids a small donkey, users will assume the system can do anything. When the system does not require human intervention for a week, drivers’ trust will dramatically increase, and they may start using their phones or even fall asleep. User expectations are bound to increase much faster than system capabilities.
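As a purely illustrative sketch of this argument (not a model from the original report), the risk can be treated as the amount by which user expectation exceeds system capability. The 0 to 1 scale, the starting value, the growth rates, and the names `risk_gap` and `simulate` are all assumptions chosen for illustration.

```python
def risk_gap(expectation: float, capability: float) -> float:
    """Risk proxy: how far user expectation exceeds system capability (never negative)."""
    return max(0.0, expectation - capability)


def simulate(weeks: int, capability_growth: float = 0.01,
             expectation_growth: float = 0.05) -> list[float]:
    """Toy model: both values start at 0.2 on a 0..1 scale and grow linearly, capped at 1.0.

    The growth rates are hypothetical; they only encode the claim that
    expectation rises faster than capability.
    """
    capability = expectation = 0.2
    gaps = []
    for _ in range(weeks):
        capability = min(1.0, capability + capability_growth)
        expectation = min(1.0, expectation + expectation_growth)
        gaps.append(risk_gap(expectation, capability))
    return gaps


if __name__ == "__main__":
    for week, gap in enumerate(simulate(8), start=1):
        print(f"week {week}: gap = {gap:.2f}")
```

Under these assumed rates the gap widens every week of trouble-free driving, which is the accumulation of risk described above.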

Our considerations and starting point are all aimed at bridging this gap.

## Traditional functional safety design is essential

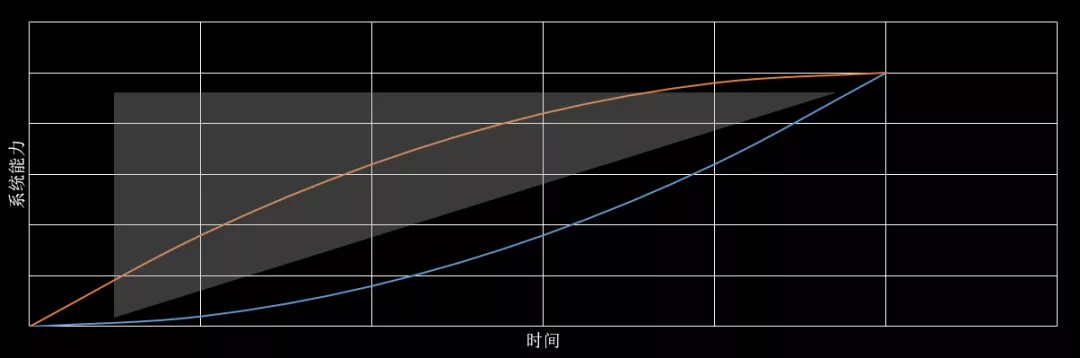

Fundamentally, traditional functional safety design ensures that risks outside the system capabilities (gray area) can be covered by human intervention.

Specifically, it ensures two things. First, the driver can take over at any time. Second, the vehicle does not cause unavoidable harm to public safety: everyone has expectations of how traffic behaves, and when one’s own car suddenly violates those expectations, it is difficult for people to react and take evasive action in time.

Although the goal is the same, there are significant differences between the design of high-level self-driving technology and traditional ADAS. But it is nothing more than the application of functional safety concepts to this new species of ADS. I believe this will gradually become a mature area of research in the industry, so I won’t go into too much detail.

## Just Right Redundancy

The term “fail-operational” commonly used in the industry originates from the definition of Level 3 (L3) automation, which requires that, in the event of a failure, the system leave the driver enough time to take over (usually 10 seconds). The paradox of this definition has been discussed enough in the industry and is not the focus here. The focus is that, because SAE defines L3 so stringently, fail-operational has become almost equivalent to full redundancy, which has sent redundancy design in entirely the wrong direction.

Interestingly, I attended a discussion on autonomous driving a few days ago and saw the obvious consensus among mainstream industry players: no one is arguing about L2/L3/L4 anymore, and everyone is focusing on urban areas, generalized scenarios, and user experience. So, does this mean redundancy design can be completely disregarded?

The problem still lies in the gap between system capability and user expectation. When the user trusts the system enough, it becomes difficult for them to take over the system in an instant. This is the root of the system redundancy requirement.

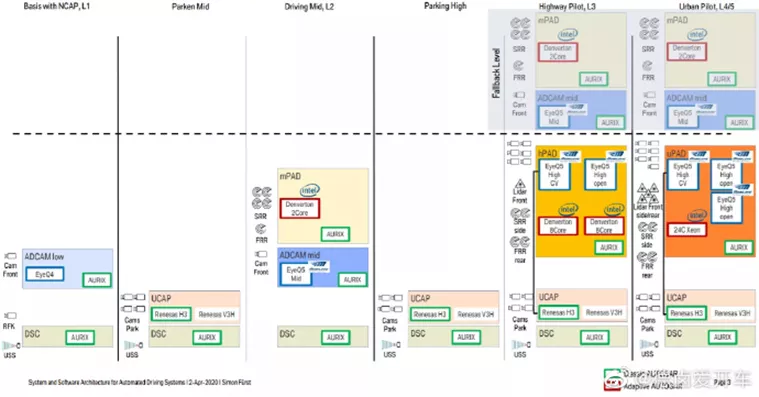

However, redundancy is a double-edged sword. Redundancy means increased costs, a more complex architecture, and numerous pitfalls in the switching process. The accompanying diagram shows one OEM’s redundancy architecture for its autonomous driving system. I am not certain whether this architecture has been implemented, but my judgment is that it is a failed, uncompetitive design.

## Redefining “System Delivery”

In traditional product development, the system’s capabilities, reliability, and safety must reach a high level of maturity at the moment of “SOP” (start of production).

However, for autonomous driving, “SOP” does not mean the end of product development. Much of the work of improving system capability happens after SOP. This upends the traditional “system thinking” of safety design: it is neither practical nor necessary to follow the traditional approach of listing all requirements up front, then developing and delivering them according to the V-model before SOP.

“Data-driven improvement” is a popular concept in the field of autonomous driving and is also the mainstream direction of system evolution unanimously recognized by industry players.

To respond to this trend, safety design must identify the key delivery objectives at each stage, and closing the data loop in a timely manner is critical. This is the problem we aim to solve with our “Data Driven Improvement” program.

Another crucial point that cannot be ignored is the need for reasonable human-machine interaction design to control overall system risk while system capability is still improving.

This leads to the last and most challenging point: using human-machine interaction design to keep user expectations and system capabilities as consistent as possible.

The challenge lies in striking a balance between “user experience” and “user expectations.” From a user experience perspective, we want users to be disturbed as little as possible and to feel completely freed from the driving task. From a product safety perspective, users need to be prepared to take over from the system at any time.

These two goals place very high demands on human-machine interaction design: do not disturb the user unless it is necessary; make reminders as accurate and intuitive as possible; and keep adjusting the human-machine interaction as system capabilities evolve. Few people may realize that the design difficulty and workload in this field are no less than those of algorithm development.
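The three principles above can be sketched as a small prompting policy. This is a minimal illustration under stated assumptions, not the author’s design: the `Situation` fields, the `choose_prompt` function, the 0 to 1 difficulty scale, and the 0.2 safety margin are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    scene_difficulty: float  # 0.0 (trivial) .. 1.0 (very hard); hypothetical scale
    driver_attentive: bool   # assumed to come from a driver-monitoring system


def choose_prompt(situation: Situation, capability: float) -> str:
    """Return 'none', 'attention', or 'takeover'.

    `capability` is the hypothetical difficulty level the current software
    release handles reliably; it is expected to rise with each update.
    """
    margin = capability - situation.scene_difficulty
    if margin < 0:
        # The scene exceeds what the system can handle: always request takeover.
        return "takeover"
    if margin < 0.2 and not situation.driver_attentive:  # 0.2 is an assumed margin
        # Close to the capability limit and the driver is distracted: nudge them.
        return "attention"
    # Comfortably within capability, or the driver is already attentive: stay quiet.
    return "none"


if __name__ == "__main__":
    scene = Situation(scene_difficulty=0.45, driver_attentive=False)
    print(choose_prompt(scene, capability=0.5))  # earlier, less capable release
    print(choose_prompt(scene, capability=0.8))  # later, more capable release
```

In this sketch the same scene triggers an attention reminder on the less capable release and no prompt on the more capable one, so the interaction changes as capability evolves instead of being fixed at launch.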

In recent years, we have often heard the criticism that traditional functional safety methods cannot effectively respond to the development of new technologies. I hope this article offers some new ideas and inspires discussion on the issue.

After all, user experience is the core competitive edge in the autonomous driving race, and safety is what lets you have the last laugh at the end of this marathon.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.