Author: Sun Luyi from Ambarella

At the “First Yan Zhi Intelligent Vehicle Annual Conference”, Sun Luyi, Senior Director of Software Development at Ambarella Shanghai, gave a presentation on the theme of “Application of High-performance Low-power AI Chips in Autonomous Driving and Intelligent Cockpits”, introducing the role and market situation of visual perception in ADAS (Advanced Driver Assistance Systems), autonomous driving, and intelligent cockpit applications, and sharing Ambarella’s corresponding solutions.

Note: At the end of the article is a video introducing Ambarella’s exhibit at the 2021 Shanghai Auto Show. Feel free to click and watch.

Visual perception is crucial to autonomous driving and intelligent cockpits

Sun Luyi stated that modern cars require AI functions such as fault diagnosis and autonomous driving, and that mainstream, practical products all use cameras, often several of them, which is a clear trend. He said: “We do not exclude the various radar technologies, including lidar, millimeter-wave radar, and ultrasonic sensors, all of which can play a useful role. Currently, cameras are the main sensors, and the others are auxiliary sensors.”

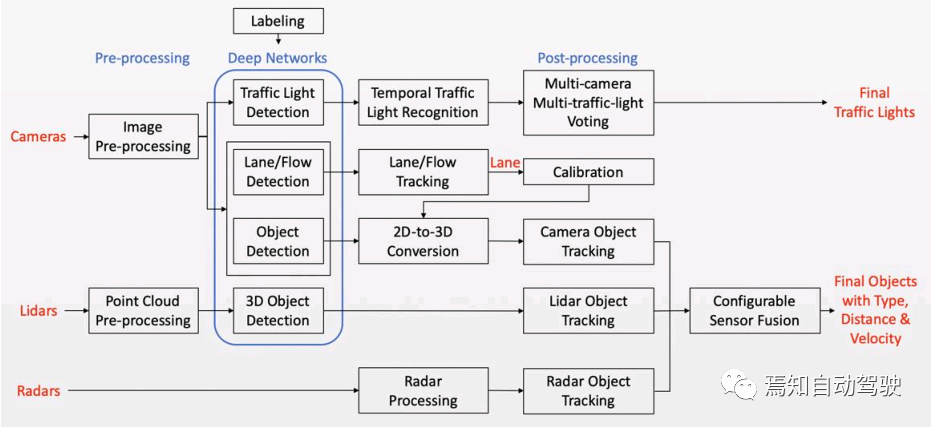

He explained that this can be seen in Baidu Apollo’s multi-layer perception framework, a classic and authoritative open-source base framework for autonomous driving. Its perception tasks, especially traffic light and lane detection, all process images coming from cameras, while lidar and millimeter-wave radar are of little use for them. Most radars currently do not perform well at object detection and classification. Their role is depth sensing and detecting objects that need no fine discrimination, so they are used to detect obstacles.

Visual perception surpasses the human eye

In terms of the fusion of various sensors, visual perception plays a very important role, which is the core value of cameras. Ambarella is dedicated to developing the most relevant chips for visual sensors in autonomous driving.

He used Tesla as an example to explain that the Model 3 represents a typical design concept for vehicles with a certain degree of ADAS or autonomous driving assistance. Two or three years ago, in order to achieve ADAS and autonomous driving, a large number of cameras were needed, some of which were responsible for short distances, some for long distances, and they needed to cover different angles. The viewpoints of different cameras also needed to overlap, in order to see objects from all directions, and with appropriate redundancy to improve reliability.

Traditional cameras can partially replace human eyes. With advances in visual perception and optoelectronic technology, today’s cameras not only see more clearly than human eyes, but can also capture both visible light and thermal (infrared) images. In some respects, visual sensors have already surpassed what the human eye can see. As the technology develops further, more image sensors will be connected to visual processing units to take the place of the human eye.

Perception quality can vary greatly among identical modules

We know that the general construction of camera modules is very similar. There is a lens in the front and an Image Signal Processor (ISP) in the back. After going through the ISP, do modules with the same configuration produce the same image quality? Sometimes there can be a significant difference.

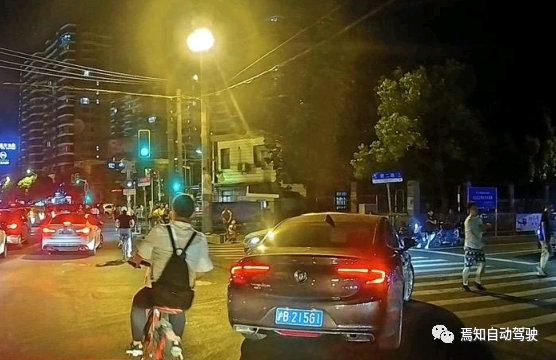

There are many reasons for this. For example, take a night image from a driving recorder that uses the Ambarella solution. It renders dark areas quite clearly, while strong light sources such as the headlights of oncoming cars produce little halo. This seems simple, but many driving recorders that use other solutions cannot achieve the same image quality in the same scene.

To achieve this effect, there is one important condition: High Dynamic Range (HDR) is required. In noon sunlight, a person standing in front of a wall appears dark against a very bright background. This is very similar to the scene when a car passes under a bridge or enters or exits a tunnel. A camera based on a traditional visual perception system will see either a white or a black field, and in direct sunlight the sensor will not be able to see the person.

To solve this problem, it is necessary to use High Dynamic Range technology. The effect of a camera with built-in ISP is vastly different from that of an ISP and image processing algorithm that comes with Ambarella SoC. The latter not only sees more clearly in dimly lit areas, but also better retains details in brightly lit areas. This is one of the advantages of Ambarella image processing.
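As a rough illustration of why HDR helps in the backlit or tunnel scenes described above, the sketch below simulates a sensor with limited bit depth capturing one dark and one bright pixel at two exposures, then merges the two readouts. The `capture` and `fuse` helpers, the 8-bit depth, the 16x exposure ratio, and the scene values are all assumptions made for illustration. This is a generic multi-exposure merge, not Ambarella’s ISP algorithm.

```python
def capture(radiance, gain, bits=8):
    """Simulate a sensor readout: apply exposure gain, clip at full scale, quantize."""
    levels = (1 << bits) - 1
    value = min(1.0, radiance * gain)
    return round(value * levels) / levels


def fuse(short_px, long_px, ratio, saturation=0.98):
    """Prefer the long exposure unless it is clipped; rescale it to short-exposure units."""
    return [l / ratio if l < saturation else s for s, l in zip(short_px, long_px)]


# Hypothetical scene: a person in shadow (0.001) in front of a sunlit wall (0.8).
scene = [0.001, 0.8]
ratio = 16  # assumed long/short exposure ratio

short = [capture(r, 1) for r in scene]      # dark pixel is lost to quantization
long_ = [capture(r, ratio) for r in scene]  # bright pixel is clipped to full scale
fused = fuse(short, long_, ratio)           # both pixels keep usable values

print("short exposure:", short)
print("long exposure: ", long_)
print("fused:         ", fused)
```

Either single exposure loses one end of the scene; the merged result keeps a nonzero value for the shadow and an unclipped value for the wall.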

Need a Strong “Brain” in Addition to “Eyes”

Sun Luyi believes that good visual perception requires a strong “brain” in addition to good “eyes”. It is difficult to imagine how many algorithms autonomous driving and intelligent cabin applications will need. New functions have to be added according to customer requirements, and future needs cannot be met by a single algorithm, so compatibility with a wide variety of algorithms is necessary.

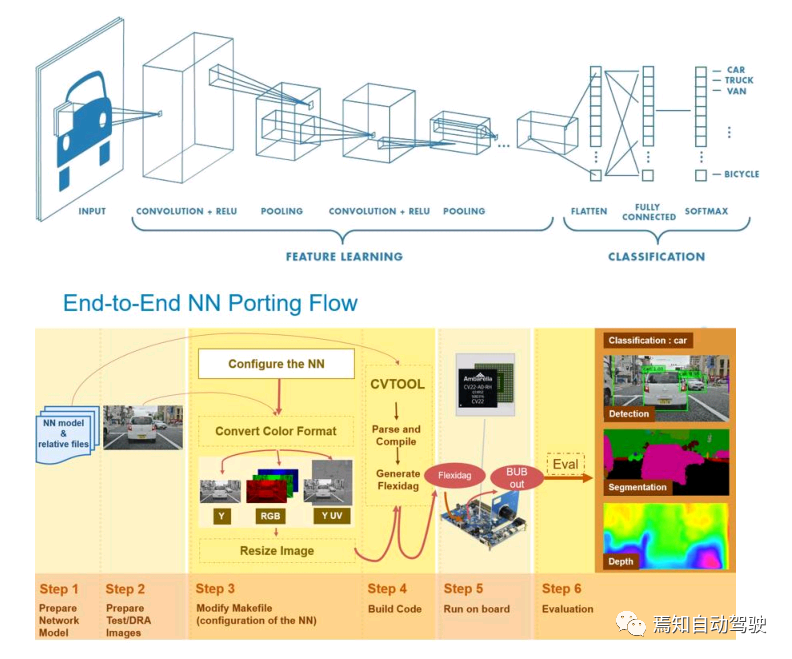

Ambarella’s AI acceleration engine is designed with a universal strategy to help various manufacturers run neural network algorithms well, and the algorithms can be ported and adjusted according to needs. Ambarella’s tools are not only convenient and easy to use, but also enable network optimization. “We have a different approach from other manufacturers. If customers need help, we can help them with network optimization. If customers feel they are particularly strong and do not want to tell us any details, they can do it themselves,” he said.

Ambarella has 7 to 8 years of experience in developing and producing automotive chips. Its Shanghai R&D center focuses mainly on China’s autonomous driving market. It is both a research and development center and a customer support center, and it also supports projects in other regions, including the United States and Europe.

“In the Chinese market, Tier 1 suppliers and OEMs are cooperating closely with us, for example on some intelligent cockpit projects. The definition of new car functions generally comes from the OEM, and when demands arise the Tier 1 generally talks with the main chip suppliers to determine whether the plan is appropriate and how to design it,” he added.

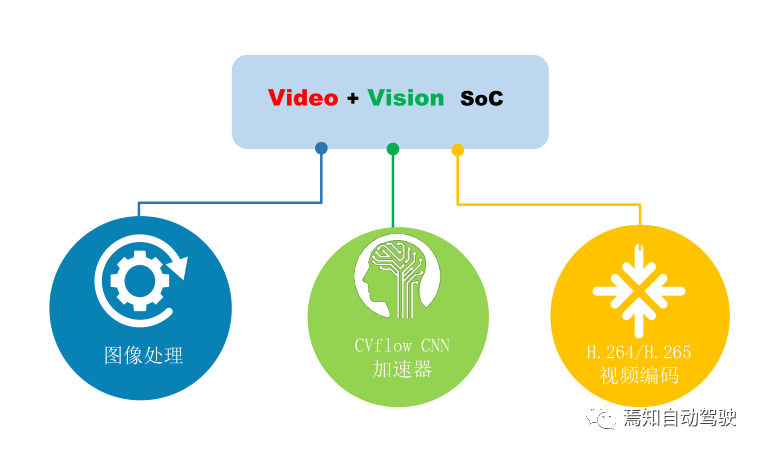

The core technologies cover image processing, video coding, and visual AI algorithms.

According to Sun Luyi, as of 2018, cumulative shipments of high-definition cameras using Ambarella chips had reached the hundreds of millions.

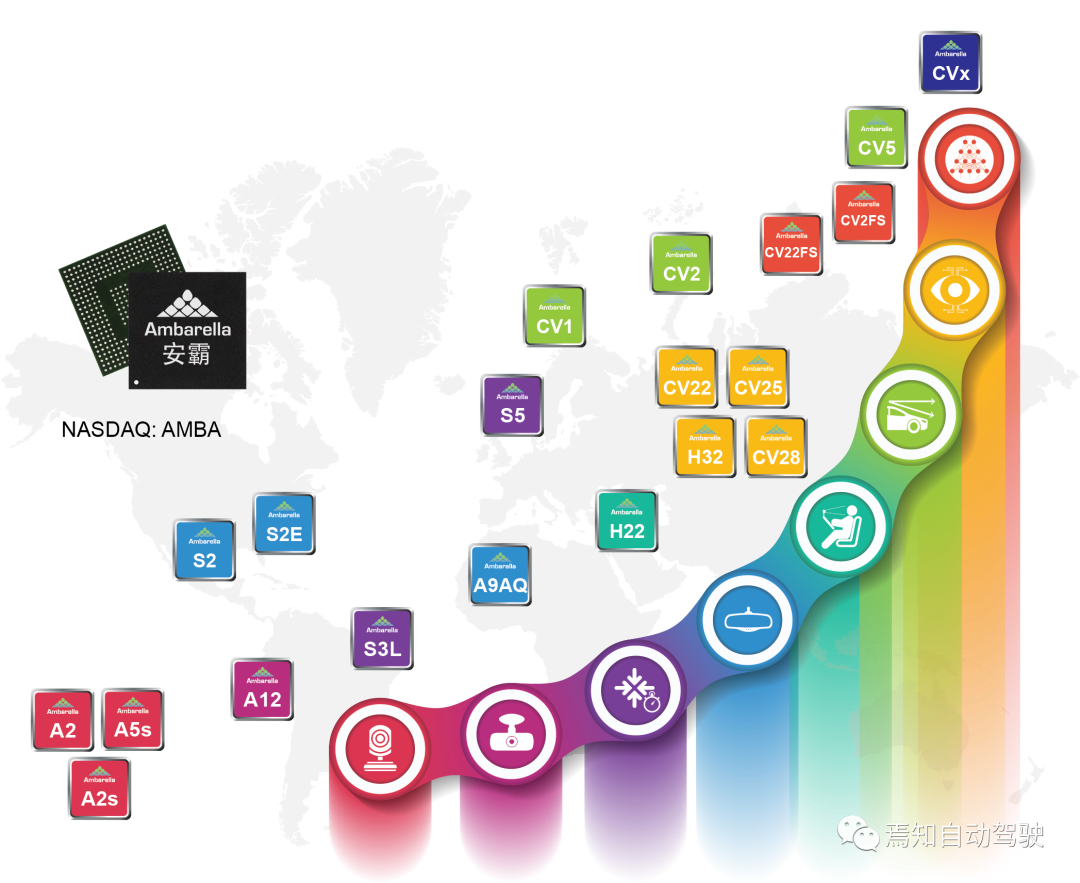

In 2017, Ambarella took the lead in launching the front-end AI acceleration chip CV1, followed by the CV2x series chips in 2018, all of which have been mass-produced, including in factory-installed automotive projects. All CV series chips use 10-nanometer process technology and meet automotive-grade requirements.

Earlier this year, Ambarella released the CV5 AI visual processor that uses the most advanced 5-nanometer technology and A76 architecture, suitable for video security, sports cameras, drones, and multi-channel driving recorders, supporting 8K real-time video recording.

Ambarella’s core technologies include image processing, video coding, and visual AI algorithms:

Image processing: Ambarella has had its own ISP chip since 2005, with many years of development and experience accumulation. Through repeated improvement based on customer demand, there have been many iterations until now. The new generation ISP has very strong performance, and has been specially optimized for automotive applications in recent years.

In 2017, Ambarella launched CV1, its first CVflow computer vision SoC. It uses independent AI hardware units for effective deep learning acceleration at low power consumption. The later CV2x series chips are more mature and have powered the front-end AI computing of millions of mass-produced products.

In the Chinese market, there are currently hundreds of thousands of front-end projects of mass-produced automobiles using CVflow engines. The latest product is the CV25 camera system SoC, which combines advanced image processing, high-resolution video coding, and CVflow computer vision processing in an extremely low-power design. The CVflow architecture provides the necessary deep neural network (DNN) processing for the next generation of cost-effective intelligent home monitoring, professional monitoring, and aftermarket automotive solutions, including Advanced Driver Assistance Systems (ADAS), Driver Monitoring Systems (DMS), Occupant Monitoring Systems (OMS), Electronic Rearview Mirrors (CMS) with blind spot monitoring, and Parking Assistance Systems (APA).

Video Encoding: In recent years, the H.264 and H.265 encoding capabilities of Ambarella chips have become crucial in high-definition video collection for dash cameras. Previously, high-definition video collection and recording were used to record a one-time accident, allowing for the investigation of the cause of the accident after it occurred as a basis for assigning responsibility.

In recent years, high-definition video collection has been used for multiple purposes, including an important purpose that was not previously considered, that of collecting various rare cases for use as richer data for automatic driving.

Visual AI Algorithms: How are AI algorithms in the age of AI created? They are produced through feeding large amounts of effective data. Only by providing a variety of data can there be a sufficiently good scenario coverage, and training of the algorithm can result in higher accuracy. The data must be collected by customers using the same equipment.

Therefore, Ambarella’s AI vision chip’s deep learning engine CVflow provides customers with the operational environment for the algorithm, and Ambarella’s video recording engine helps customers establish a video and image data acquisition system. As a result, customers can collect this data for training, followed by updating the algorithm through OTA to further improve the capability of ADAS.

What are the Capabilities of Ambarella Chips?

Sun Luyi also described the performance advantages of Ambarella ISP chips, computer vision chips, and AI accelerators in detail.

ISP Chips: Ambarella’s ISP supports all CMOS sensors on the market. Given its market positioning, it supports automotive-standard CMOS sensors from the major manufacturers, as well as new sensor types such as RGB-IR. It has high bandwidth and a total processing capacity of 4Kp60, and can support time-division processing and synchronized input of multiple sensors. The chip uses professional noise reduction and wide dynamic range processing algorithms, which produce clear images with accurate colors and rich detail.
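To make the 4Kp60 figure concrete, the following back-of-the-envelope sketch converts it into a pixel rate and estimates how many lower-resolution streams could share it under time-division processing. It assumes that capacity scales linearly with pixels per second and ignores any switching overhead; `pixel_rate` and `max_streams` are hypothetical helper names, and the sensor formats are examples rather than a supported-configuration list.

```python
def pixel_rate(width, height, fps):
    """Pixels per second produced by one sensor stream."""
    return width * height * fps


# Total ISP capacity quoted in the text: one 3840x2160 stream at 60 frames per second.
ISP_BUDGET = pixel_rate(3840, 2160, 60)


def max_streams(width, height, fps):
    """Number of identical streams that fit in the budget (linear-scaling assumption)."""
    return ISP_BUDGET // pixel_rate(width, height, fps)


print("ISP budget (pixels/s):", ISP_BUDGET)
print("1080p30 streams:", max_streams(1920, 1080, 30))
print("4Kp30 streams:  ", max_streams(3840, 2160, 30))
```

A real design would leave headroom below this theoretical limit, in line with the design-margin advice later in the article.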

The ISP also has advanced programmable hardware built in, and with a set of system software and algorithm libraries and image tuning parameters, image experts can improve image quality according to customer project feedback.

Nowadays, several mainstream CMOS manufacturers have RGB-IR sensors, but it is not easy to achieve mass production for systems containing RGB-IR in practice. Among the RGB-IR systems used in the automotive industry’s intelligent cabin field, only the Ambarella solution has been proved to have good image quality for mass production, besides meeting the basic requirements of accurate colors.

The reason is that Ambarella’s RGB-IR mode has two advanced features. One is the ability to use RGB-IR and HDR simultaneously. The other is the ability to run the RGB-IR camera of the OMS (Occupant Monitoring System) alongside the camera of the intelligent cabin DMS (Driver Monitoring System), with patented technology ensuring that the two functions do not interfere with each other. This seems simple to achieve, but it is not easy in reality.

The lighting conditions for these two functions are different, with the lamp of the OMS always on while the lamp of the DMS usually blinks periodically. Only Ambarella has solved the interference problem between the two and applied for a patent.

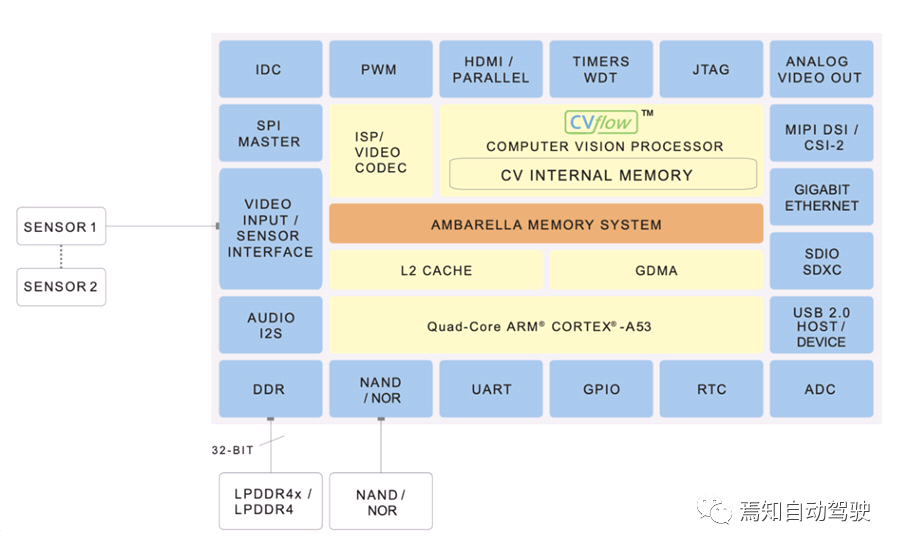

Visual Chips: CV25, CV22, and CV2 computer vision chips, which are specially designed for front-end vision, have now been mass-produced. They were first mass-produced in the security monitoring market and are now also being mass-produced in the automotive market.

The three chips have highly consistent hardware architectures, with only differences in specifications. The lower the code number, the stronger the performance.

No matter which chip a customer chooses for developing a system, they can later switch to other chips in the same series to improve performance or reduce cost. These chips support multi-channel image sensor input, include a high-performance neural network processor and multi-channel encoding, and can also achieve system-level functional safety.

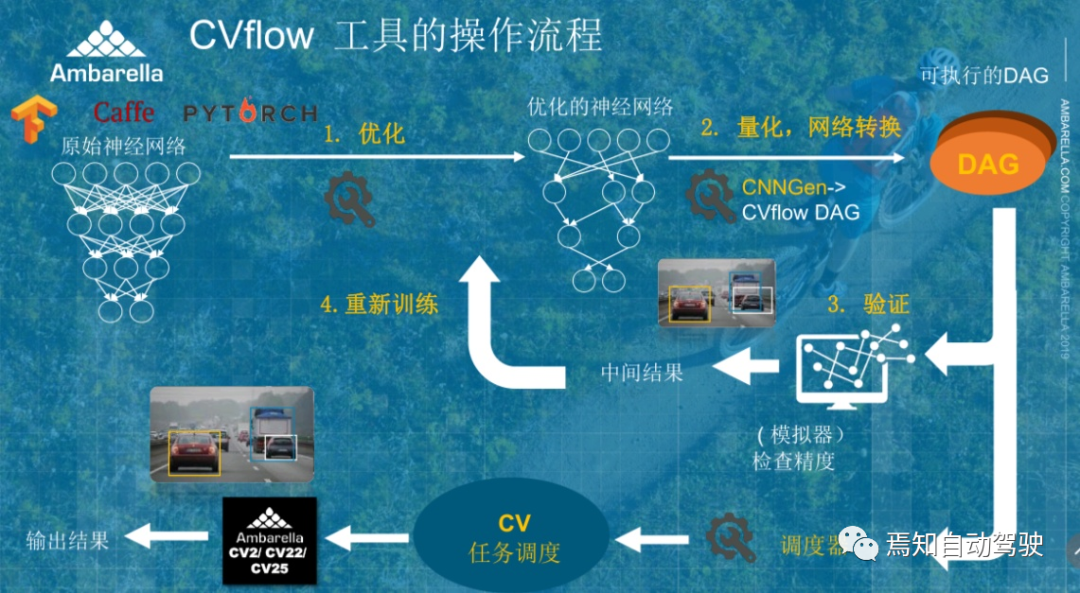

AI Accelerator: Although AI accelerators built by stacking multiple cores may show high peak performance in testing, in practical applications some cores may be very busy while others sit idle. In that case the system does not achieve the performance that multi-core processing promises, because the degree of parallelism is not high enough.

The majority of GPUs and ASP architectures with simple multicore systems need to return the results of their calculations due to cost requirements. This causes repeated storage, increases power consumption, and adds delays. Often, internal cores are not very busy, but are waiting instead. Ambarella solves these problems with CVflow, which can run at low power consumption. The main method is to use hardware designs specifically tailored for deep learning acceleration, reduce unnecessary memory throughput, and develop optimization methods that support intelligent quantized data types.
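The point about busy and idle cores can be illustrated with a simple model: if work is split unevenly, the whole job finishes only when the busiest core does, so the delivered throughput is the peak figure scaled by average utilization. The sketch below uses made-up per-core workloads and a made-up peak rating; `utilization` and `effective_throughput` are hypothetical helpers, not part of any vendor tool.

```python
def utilization(core_loads):
    """Fraction of total core-time doing useful work when all cores wait for the busiest."""
    busiest = max(core_loads)
    return sum(core_loads) / (len(core_loads) * busiest)


def effective_throughput(peak, core_loads):
    """Peak rating scaled by how evenly the work is spread across cores."""
    return peak * utilization(core_loads)


balanced = [4, 4, 4, 4]     # same total work, evenly split
imbalanced = [10, 2, 2, 2]  # one core does most of the work

peak = 10.0  # hypothetical peak rating in arbitrary units
print("balanced:  ", effective_throughput(peak, balanced))
print("imbalanced:", effective_throughput(peak, imbalanced))
```

This model ignores memory stalls, which the text identifies as a second source of idle time, so real utilization can be lower still.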

CVflow is a deep learning training framework. When customers import neural networks, they can obtain results during quantization, conversion, and validation. Although similar methods exist, Ambarella has been developing this tool for three years, supporting most popular neural networks in the market through repeated iterations. Systems developed using this solution are not only highly efficient, but also consume very low power. When running a deep learning algorithm with an 8-megapixel image input, CV22’s power consumption is less than 2W.
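The quantization and validation steps mentioned above can be sketched generically. The example below applies symmetric 8-bit quantization to a handful of weights, converts them back, and checks the error against the float originals. It is a textbook-style illustration under assumed values, not Ambarella’s tool or its actual quantization scheme; the function names and weights are hypothetical.

```python
def quantize(values, bits=8):
    """Symmetric per-tensor quantization to signed integers; returns (ints, scale)."""
    qmax = (1 << (bits - 1)) - 1  # 127 for 8 bits
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid a zero scale
    ints = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return ints, scale


def dequantize(ints, scale):
    """Map quantized integers back to approximate float values."""
    return [q * scale for q in ints]


def max_abs_error(reference, approx):
    """Validation metric: worst-case element-wise deviation from the float reference."""
    return max(abs(r - a) for r, a in zip(reference, approx))


weights = [0.5, -1.27, 0.03, 0.9]  # hypothetical float weights
ints, scale = quantize(weights)
restored = dequantize(ints, scale)

print("quantized:", ints)
print("max error:", max_abs_error(weights, restored))
```

With round-to-nearest, the error per element is bounded by half the scale, which is the kind of check a validation step can automate across a whole network.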

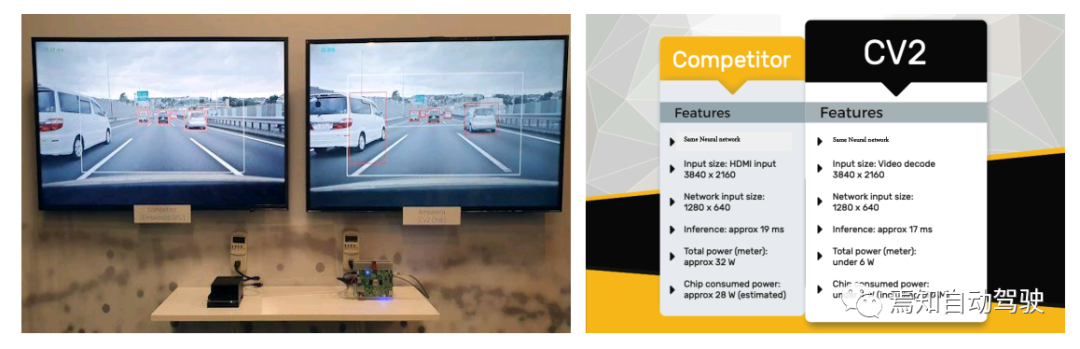

When compared with a competitor’s mainstream “X” platform that claims 30 TOPS, CV2 turns out to be the most powerful of the mass-produced chips currently on the market. Under the same input conditions and running the same neural network, CV2 with 12 TOPS takes 17 milliseconds and the competitor takes 19 milliseconds. 12 TOPS is the performance that customers can actually obtain from CV2.
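The comparison above can be worked through numerically. The sketch below takes the figures quoted in the text (12 TOPS at 17 ms, 30 TOPS at 19 ms) and derives frames per second, throughput per nominal TOPS, and the CV2-equivalent compute the competitor appears to deliver. The last figure assumes that latency is inversely proportional to delivered compute, which is a simplification made here for illustration.

```python
cv2_tops, cv2_ms = 12, 17      # figures quoted in the text
other_tops, other_ms = 30, 19  # competitor's claimed TOPS and measured latency


def fps(latency_ms):
    """Frames per second for a single network run back to back."""
    return 1000.0 / latency_ms


# How much faster CV2 finishes the same network.
speedup = other_ms / cv2_ms

# Throughput obtained per nominal TOPS on each platform.
cv2_fps_per_tops = fps(cv2_ms) / cv2_tops
other_fps_per_tops = fps(other_ms) / other_tops

# Delivered compute of the competitor expressed in CV2-equivalent TOPS
# (assumes latency scales inversely with delivered compute).
other_equivalent_tops = cv2_tops * cv2_ms / other_ms

print(f"speedup: {speedup:.3f}x")
print(f"fps per nominal TOPS: CV2 {cv2_fps_per_tops:.2f}, other {other_fps_per_tops:.2f}")
print(f"competitor delivers about {other_equivalent_tops:.1f} CV2-equivalent TOPS")
```

Under that assumption, the nominal 30 TOPS platform behaves like roughly 10.7 TOPS on this workload, which is the gap between claimed and obtainable performance that the speaker is pointing at.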

CV5 is a new platform launched this year, using a 5 nanometer process, while the original CV2x series used a 10 nanometer process. The CV5 platform can support 14 image sensor inputs with one chip and has an ISP performance of up to 50 Megapixels per second. One chip can encode over 16 1080P channels simultaneously, and this chip has a USB3.2 high-speed interface for data transmission, and the performance of its AI engine is also very strong.

How to Move Towards Mass Production?

Sun Luyi also talked about the challenge of reaching mass production. He said: “Our experience is that when designing a system, we should first adopt a reasonable hardware architecture and consider whether to use a discrete or integrated controller. There is no single answer; we need to consider the requirements of the system.”

When developing intelligent cockpits, some developers considered adding DMS to the car’s system. After calculating the computing power, it seemed feasible, but after adding DMS, they found that other functions were lagging and the actual performance was insufficient. Sometimes DRAM was also inadequate. Therefore, in practical design, it’s important to consider the extensibility of some features for the future, not just for the present.

He pointed out that in the software development process, analysis, design, and review should be carried out based on the software requirements. However, many companies lack review processes, not because their reviews fall short, but because no reviews exist at all. Both unit testing and integration testing are essential, but many teams don’t do unit testing. These problems can lead to complicated and slow software that cannot be mass-produced, with overtime work needed to fix the problems.

He believes that the correct approach is to have a plan from the beginning, including how to implement and carry out the plan, and to focus on details. Finally, configuration management is essential, but many companies haven’t reached the level of professional software companies.

In addition, development and testing should be done simultaneously, starting from the smallest unit test and iterating quickly. Developers should review each other, and there should be an open, challenging atmosphere within the company to identify and solve problems as soon as possible and ultimately achieve mass production.

Finally, comprehensive testing, including real vehicle testing, is necessary. Comprehensive testing covers high system load and complexity issues when multiple functions run together, reliability under long-term operation, additional stress tests, and active error injection.
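Error injection, mentioned above, can be as simple as wrapping a data source so that it deliberately misbehaves, then checking that the consumer keeps running. The sketch below drops every third frame from a toy frame stream and verifies that a toy pipeline tolerates it. The `inject_drops` and `run_pipeline` functions and the doubling stand-in for perception are hypothetical, chosen only to show the pattern.

```python
def inject_drops(frames, every):
    """Yield frames, replacing every `every`-th one with None to simulate a dropped frame."""
    for index, frame in enumerate(frames, start=1):
        yield None if index % every == 0 else frame


def run_pipeline(frames):
    """Toy consumer: skips dropped frames and keeps the last good result."""
    processed, dropped, last_result = 0, 0, None
    for frame in frames:
        if frame is None:
            dropped += 1  # a robust system logs the fault and carries on
            continue
        last_result = frame * 2  # stand-in for real perception work
        processed += 1
    return processed, dropped, last_result


processed, dropped, last_result = run_pipeline(inject_drops(range(1, 11), every=3))
print("processed:", processed, "dropped:", dropped, "last result:", last_result)
```

The same wrapper idea extends to corrupted data, delayed frames, or failed allocations, with each fault type exercised repeatedly in an automated run.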

Sun Luyi said: “Ambarella has considered design margins and the effects of multiple functions, such as total memory bandwidth usage since the beginning of product specification design. If the single function can meet the target when running independently, but when the system is running together, it falls short, we cannot deliver the product to the customer.”
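The situation described in the quote, where each function meets its target alone but the combined system falls short, can be caught early with a budget check that reserves a design margin. The sketch below sums hypothetical per-function DRAM bandwidth demands against a hypothetical capacity; every number and the `check_budget` helper are assumptions for illustration only.

```python
def check_budget(loads, capacity, margin=0.2):
    """Return (total demand, usable limit, fits) with `margin` of capacity held in reserve."""
    total = sum(loads.values())
    limit = capacity * (1 - margin)
    return total, limit, total <= limit


# Hypothetical DRAM bandwidth demands in GB/s for functions sharing one SoC.
bandwidth = {"ADAS": 4.0, "recording": 2.5, "DMS": 2.0}
capacity = 10.0  # hypothetical total DRAM bandwidth in GB/s

# Each function passes when it runs alone...
for name, demand in bandwidth.items():
    print(name, "alone fits:", check_budget({name: demand}, capacity)[2])

# ...but the combined system exceeds the limit once the margin is reserved.
total, limit, fits = check_budget(bandwidth, capacity)
print(f"combined: {total} GB/s against a limit of {limit} GB/s, fits: {fits}")
```

The same check applies to compute and DRAM capacity, the other two resources that the cockpit example earlier in this section ran short of.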

For visual perception systems, various possible testing scenarios, including different lighting, roads, environments, and weather, should be tried. Currently, some domestic companies don’t have a repeatable and automatic testing system, and they can’t just randomly ask two people to test. They need to gradually create their own testing systems and algorithms, and repeatedly test some cases.

The last task is real vehicle testing, which many companies don’t do routinely. For instance, suppose a system crashes, someone applies a fix, and the problem no longer appears. Running tests to confirm that everything seems fine is not a solution. Why? Has the root cause of the problem been explained? If testing can’t detect a problem, it doesn’t mean it’s solved. It may just have become more difficult to detect, which is extremely dangerous.

High Standards of Product Quality

Sun Luyi concluded that the automotive industry has its own special requirements, and some tests require the system to run non-stop, 24 hours a day, 7 days a week. To meet such standards, only tests that go beyond the product release criteria and beyond customer requirements can anticipate every possible problem in advance. The aim is to find the fundamental cause of each problem and avoid failure from the design stage.

It is also because of these high standards of product quality that Ambarella won the Bosch Global Excellent Supplier Award in 2019, standing out among Bosch’s 43,000 suppliers. The honor came after more than a decade of cooperation between Ambarella and Bosch.

2021 Shanghai Auto Show Ambarella Products

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.