Introduction

There has been a lot of discussion lately about Tesla's alleged brake failures, and I won't go in that direction here (for example, how to view Tesla employees re-creating the brake-failure scenario in Haikou, or how to view NHTSA's rejection of the recall petition over Tesla loss-of-control complaints). Turning instead to autonomous driving at the auto show, where every company is pushing hard, a couple of key issues stand out:

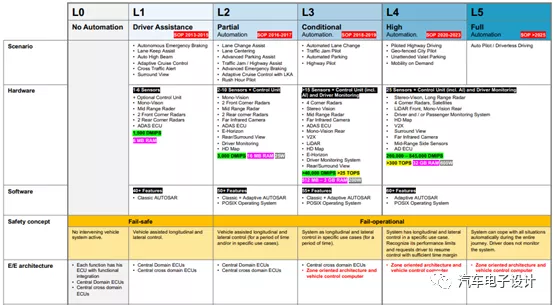

1) Tesla, as the pioneer, faces doubts and challenges both at home and abroad. How it deploys FSD software and lets a large number of users actually try it carries a potential road-safety risk, one that may be borne by innocent bystanders and other vehicles.

2) Can the companies following in Tesla's footsteps design to safety requirements? This has been discussed before. For now, mainstream carmakers tend to design toward L3 but first ship highway driving as an L2+ feature, then gradually upgrade to L3 once legislation is in place and consumers are familiar with it. The tactics of Huawei and Apollo are both interesting, and technology companies entering later, such as Xiaomi and DJI, will surely expand in this direction.

BAIC and Huawei

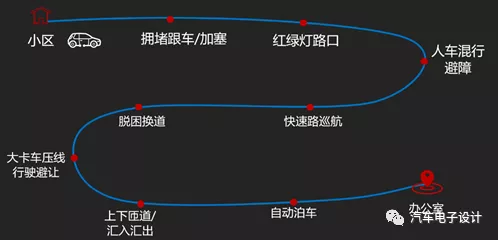

The most eye-catching car at the auto show is still the Alpha S, the first product from Huawei and BAIC to reach the market. It is designed around LiDAR to deliver automated driving on urban roads, covering urban areas, highways, and parking lots for point-to-point travel across all scenarios. In design terms, it is an ambitious attempt to give users a continuous automated driving experience from the garage at home to the garage at work (no small ambition, since it targets L3 on urban roads).

To put it more directly, this car integrates all of Huawei's ICT capabilities in ADS, its advanced full-stack autonomous driving solution. Success in high-end autonomous driving depends on rapid iteration and long-term persistence in three areas: full-stack algorithms, the data lake, and computing and sensor hardware. A weakness in any one of them leads to failure and dropping out of the competition:

1) Algorithms: ADS uses a full-stack algorithm that is billed as the only one bringing Robotaxi-grade high-end autonomous driving to private cars. The system keeps accumulating environmental information and driving habits through self-learning, iterating and optimizing continuously.

2) Data: massive, high-quality data, built on algorithmic capability, drives the constant iteration and optimization of ADS. The ADS super data lake differs fundamentally from raw material: it is derived from the core algorithms and serves the core algorithms, so the value of the data is fully realized. Without that, data is just a pile of raw material that incurs cost.

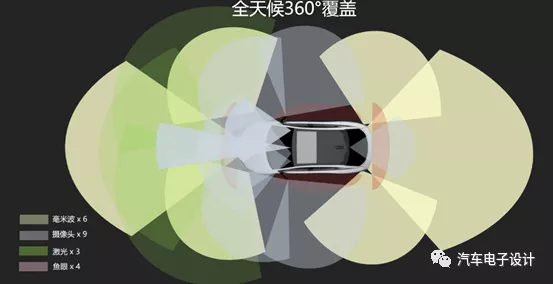

3) Hardware: ADS is equipped with the ADCSC central supercomputer, which supports 400 TOPS and 800 TOPS of computing power in two stages, and its forward-looking design allows further upgrades to computing power later. The sensor layout is optimized for the private car market based on urban scenarios in China.

The Alpha S carries 35 sensors in five categories, including three LiDARs, six millimeter-wave radars, and four fisheye surround-view cameras. Using the long-range high-definition camera and LiDAR, it matches against high-precision maps to accurately extract lane-level traffic signal information.

Note: This hardware makes the price very high.

First, the importance of LiDAR deserves mention. In the complex scenarios of Chinese urban roads, especially unprotected intersections, U-turns, NN intersections, and vehicles merging and cutting in, the side view matters as much as the front view to the automated driving system. That is why two side LiDARs are needed in addition to the front one to achieve full coverage.

Apollo and WM Motor

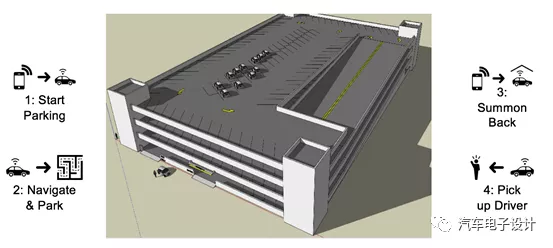

The second interesting point is the L4 low-speed automated valet parking (AVP) that WM Motor and Apollo want to develop, which is split into two scenarios. The first, HAVP, is for private use and mainly targets fixed parking spaces, such as those in residential areas and company parking lots. HAVP does not require self-learning for parking; it can be understood simply as unmanned parking along a fixed route.

As shown below, the user sets an entry route and an exit route between the parking-lot entrance and a chosen parking space. The red line is the entry route, and the yellow line is the exit route. After the W6 AVP system has run the route once, its high-performance computing system automatically memorizes the driving trajectory and the preset parking space location, both locally and in the cloud. From then on, the user only needs to get out at the entrance, send the vehicle in remotely, and later wait at the exit for it to come out.
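The memorize-once, replay-later idea described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `RouteMemory` class, the `"entry"` and `"exit"` route names, and the waypoint coordinates are all hypothetical, and nothing here reflects how the W6 AVP system is actually implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# A waypoint is an (x, y) position in meters in a hypothetical local frame.
Waypoint = Tuple[float, float]


@dataclass
class RouteMemory:
    """Hypothetical store for routes recorded during one supervised run."""
    routes: Dict[str, List[Waypoint]] = field(default_factory=dict)

    def record(self, name: str, trajectory: List[Waypoint]) -> None:
        # A usable route needs at least a start point and an end point.
        if len(trajectory) < 2:
            raise ValueError("a route needs at least a start and an end point")
        self.routes[name] = list(trajectory)

    def replay(self, name: str) -> List[Waypoint]:
        # Return a copy so callers cannot alter the memorized route.
        if name not in self.routes:
            raise KeyError(f"no memorized route named {name!r}")
        return list(self.routes[name])


# Made-up coordinates: the entry route (red line) ends at the parking space,
# and the exit route (yellow line) starts from it.
memory = RouteMemory()
memory.record("entry", [(0.0, 0.0), (10.0, 0.0), (10.0, 5.0)])
memory.record("exit", [(10.0, 5.0), (10.0, 12.0), (0.0, 12.0)])
```

Keeping the entry and exit routes as separate records mirrors the article's description of two distinct lines rather than one path driven in reverse.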

Building on Apollo's high-precision maps, the system can be extended to PAVP, which mainly targets public areas, such as commercial parking lots in shopping malls and underground parking lots of office buildings, for users who do not have a fixed parking space. PAVP enables unmanned parking where the space is not fixed (it finds a space for you). Backed by an AVP system that draws on cloud computing and high-precision maps, PAVP can negotiate obstacles on its own, cruise across multiple floors, and park automatically in specific parking environments. You can also summon the vehicle to drive to you when you need it. The service requires a parking lot that has a high-precision map and a vehicle that can find a suitable space autonomously. Once those conditions are met, the service removes the distance limitation entirely and frees the user.

The environment-perception hardware is specified for low-speed operation and does not need advanced hardware such as LiDAR or a dedicated high-compute chip. It includes:

- 2 intelligent high-definition cameras with a maximum detection distance of over 150 meters;

- 4 high-definition surround-view cameras with a resolution of 1 million pixels and a detection range of 20 meters around the vehicle;

- 5 millimeter-wave radars with a forward detection range of 210 meters and a side detection range of 100 meters;

- 12 ultrasonic sensors with a long-distance detection range of 25-500 cm and a short-distance detection range of 20-250 cm.
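To make the list above easier to compare at a glance, it can be written down as a small data structure. The following is a rough sketch: the counts and ranges come from the list, while the `Sensor` class, its field names, and the helper functions are illustrative assumptions. "Over 150 meters" is stored as 150 as a lower bound, and the ultrasonic entry uses the 500 cm long-distance figure.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Sensor:
    kind: str           # sensor category as named in the article
    count: int          # number of units on the vehicle
    max_range_m: float  # longest detection range quoted, in meters


# Figures copied from the list above; the names are illustrative only.
W6_AVP_SENSORS = [
    Sensor("high-definition camera", 2, 150.0),  # "over 150 meters"
    Sensor("surround-view camera", 4, 20.0),
    Sensor("millimeter-wave radar", 5, 210.0),   # forward range
    Sensor("ultrasonic sensor", 12, 5.0),        # 500 cm long-distance mode
]


def total_sensors(sensors: List[Sensor]) -> int:
    """Sum the unit counts across all sensor categories."""
    return sum(s.count for s in sensors)


def sensors_covering(sensors: List[Sensor], distance_m: float) -> List[str]:
    """List the categories whose quoted range reaches distance_m."""
    return [s.kind for s in sensors if s.max_range_m >= distance_m]
```

Summing the counts gives 2 + 4 + 5 + 12 = 23 sensors, compared with the 35 quoted for the Alpha S, which fits the article's point that the low-speed parking use case gets by with a lighter hardware set.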

Conclusion

In both cases, it is the technology provider that drives the carmaker. In the future, autonomous driving engineers at many car companies can be expected to act mainly as partner managers, working with different technology companies and comparing the performance of their systems. Of course, as Tesla's case shows, these systems may face significant challenges in use.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.