The most thorough understanding of “decoupling of software and hardware” surely belongs to the venerable Jin Yong. In his martial arts world, the top masters carry no magic weapons, and often no weapons at all. The Six Meridian Swords of the Dali family, the Dragon-subduing 18 Palms of Xiao Feng and Hong Qi Gong, the Tai Chi Fist of Zhang Sanfeng, and the Jiuyang Divine Art of Zhang Wuji are all like this. Even in swordsmanship, the ultimate realm is “No Sword Style,” which holds that having no move beats having a move.

Even Abbess Miejue and the Golden Lion King, who wield the Heaven-reliance Sword and the Dragon-slaying Knife respectively, and martial arts that require weapons and fixed moves, such as the Kui Hairpin Scripture, can only be considered second tier. This is decoupling of software and hardware: internal martial arts are the software, and weapons are the hardware. Only a master who has achieved this decoupling can teach Abbess Miejue a lesson within minutes, even empty-handed.

Therefore, when I looked at DJI’s automotive autonomous driving solution, the image that came to mind was Zhang Sanfeng teaching Zhang Wuji Tai Chi Fist. According to DJI, its solution is built mainly on computer vision algorithms; it can use, but does not rely on, lidar, high-precision maps, RTK high-precision positioning, V2X signals, or even high-computing-power chips. The computing platform is adapted to the automaker’s preference. The solution is thus genuinely decoupled from hardware: it is not bound to any particular hardware and focuses on software algorithms.

Mass-producible intelligent driving solutions win cooperation from Wuling and Volkswagen

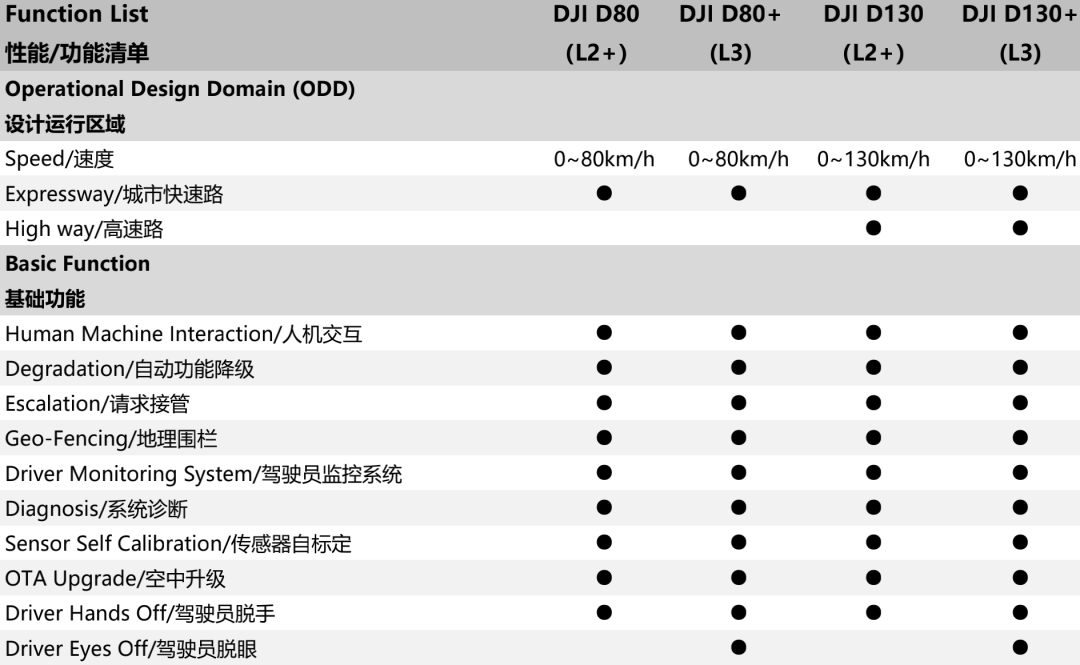

The DJI automotive team was founded in 2016. As of the end of 2020, it had more than 500 core R&D personnel, 87% of whom hold master’s or doctoral degrees, and more than 1,000 patents in intelligent driving and related fields. At this auto show, DJI released the D130 and D130+ intelligent driving solutions for highways, the D80 and D80+ intelligent driving solutions for urban expressways, and the P5 “APA,” P100 “APA+,” and P1000 “AVP” solutions for parking scenarios.

Although they are designed for highways and urban expressways, the D80 series and D130 series also offer urban driving assistance for unstructured road scenes such as city streets, including adaptive cruise control, lane keeping, AEB active braking, and track search through intersections.

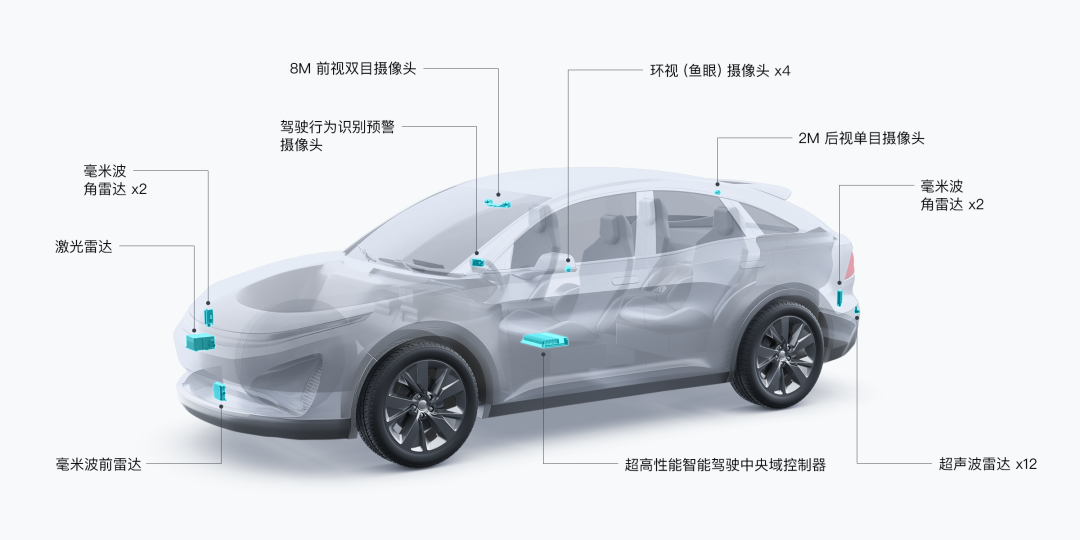

The D80 series differs from the D130 series in the maximum vehicle speed it supports. The D130 series can provide intelligent driving across the 0-130 km/h speed range. The higher the speed, the farther ahead the system needs to detect. The D130 series is therefore equipped with an 8-megapixel high-definition binocular camera, while the D80 series uses a standard 2-megapixel binocular camera.
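To make the link between speed and detection distance concrete, here is a minimal sketch of the textbook stopping-distance estimate (reaction distance plus braking distance at constant deceleration). The 1.0 s reaction time and 6 m/s² deceleration are illustrative assumptions, not DJI figures, and `stopping_distance_m` is a hypothetical helper name.

```python
def stopping_distance_m(speed_kmh: float,
                        reaction_s: float = 1.0,
                        decel_ms2: float = 6.0) -> float:
    """Distance travelled before standstill: v * t_react + v^2 / (2 * a).

    reaction_s and decel_ms2 are assumed illustrative values.
    """
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * reaction_s + v * v / (2.0 * decel_ms2)


d80 = stopping_distance_m(80.0)    # top speed of the D80 series
d130 = stopping_distance_m(130.0)  # top speed of the D130 series

print(f"80 km/h:  {d80:.1f} m")
print(f"130 km/h: {d130:.1f} m")
```

Because the braking term grows with the square of speed, the distance at 130 km/h is more than double that at 80 km/h under these assumptions, which is consistent with the D130 series needing a longer-range camera.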

The difference between D80 and D80+, and between D130 and D130+, lies in the depth of safety redundancy. D80 and D130 are Level 2 assisted driving, while D80+ and D130+ are Level 3 autonomous driving, allowing drivers to briefly relax and take their hands off the steering wheel and their eyes off the road while the system is operating. To achieve this higher level of safety redundancy, both D80+ and D130+ come standard with a lidar and a DMS, along with correspondingly more powerful computing chips. However, according to DJI engineers, thanks to DJI’s efficient algorithms, even the Level 3 D130+ system would not need anything as extreme as hundreds of TOPS of computing power.

The parking solutions, P5 parking assist, P100 memory parking, and P1000 autonomous parking and intelligent summoning, differ in their operational design domain: P5 is activated once the car has arrived at the parking space, P100 allows fully autonomous parking over medium and long distances, and P1000 allows fully autonomous summoning over medium and long distances.

According to DJI engineers on site, DJI’s core strength lies in software algorithms. With binocular cameras, common objects can be accurately recognized and the distance to them estimated, which gives the system depth perception of its environment. Drawing on its accumulated experience in drone vision algorithms, DJI’s binocular perception system can calibrate itself online, which effectively avoids errors caused by environmental factors such as temperature changes and vibration, and it outputs stable and reliable perception information.
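As a rough illustration of how a binocular camera turns image measurements into distance, the sketch below applies the standard pinhole stereo relation Z = f·B/d (depth from focal length in pixels, baseline, and disparity) and its first-order error estimate ΔZ ≈ Z²·Δd/(f·B). All numbers (focal lengths, the 0.2 m baseline, the 0.25 px matching error) are hypothetical and are not DJI specifications; the function names are likewise made up for this example.

```python
def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Pinhole stereo depth: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m: float, focal_px: float, baseline_m: float,
                disparity_err_px: float = 0.25) -> float:
    """First-order depth uncertainty: dZ ~= Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


BASELINE_M = 0.2  # assumed distance between the two cameras

# Assumption: with the same field of view, a sensor with 4x the pixels
# has roughly 2x the focal length when measured in pixels.
low_res_focal_px = 1000.0
high_res_focal_px = 2000.0

z = depth_from_disparity(low_res_focal_px, BASELINE_M, disparity_px=2.0)
err_low = depth_error(100.0, low_res_focal_px, BASELINE_M)
err_high = depth_error(100.0, high_res_focal_px, BASELINE_M)

print(f"depth at 2 px disparity: {z:.1f} m")
print(f"error at 100 m, lower-res sensor:  {err_low:.2f} m")
print(f"error at 100 m, higher-res sensor: {err_high:.2f} m")
```

The error grows with the square of distance and shrinks as the product f·B grows, which is also why small calibration drifts between the two cameras matter so much at long range.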

# DJI Announces a Series of Intelligent Driving Solutions

What impresses me most about DJI’s core algorithm is its powerful generalization ability. Even without lidar, high-precision maps, GNSS and RTK signals, or V2X signals, DJI can still obtain scene depth through visual algorithms and location through visual SLAM, and so deliver a reliable intelligent driving solution. This leaves a great deal of room for reducing the cost of intelligent driving solutions. Even Wuling, the “People’s Car”, has announced cooperation with DJI Automotive to launch “the first intelligent driving car for young people” using DJI’s technology.

Although DJI has its own lidar subsidiary, “Livox”, DJI’s attitude towards lidar is that it is not necessary but also not ruled out. Even when lidar is used, the customer is not obliged to use DJI’s lidar and bear extra cost. The key point, in DJI’s view, is that adding lidar on top of what vision can already do should bring a “qualitative change”: once equipped with lidar and upgraded to the D80+ and D130+ solutions, the D80 and D130 can support human-machine co-driving and move from ADAS to L3 autonomous driving.

This is different from the currently popular Huawei ADS. Huawei’s approach is to use L4-level capability to deliver an L2 experience. Although the system is equipped with three lidars and 400 TOPS of computing power, the driver remains responsible for driving and cannot rest during the journey. The system helps the driver drive more safely in specific scenarios, rather than driving in the driver’s place.

Is vision reliable for advanced driver assistance?

How can point-to-point autonomous driving assistance be achieved? The car must understand and solve three problems: “Where am I?”, “Where do I want to go?”, and “How do I get there?”. Without lidar, high-precision maps, and high-precision localization, the hardest of these to solve is “Where am I?”.

Imagine being dropped unconscious on any street in a city. How would you know where you are? The first step is to open a map and check your location on it. You will know which street you’re on. Step two, enlarge the map and look for landmarks, such as the intersection of XX Street and XX Street, and a KFC located 20 meters from the intersection on one side of the street. Step three, look up to see where the intersection is and where the KFC is, and then compare to determine your precise location: X Side of XX Street, XX City, X meters away from the XX intersection.

Machines have their own world, and high-precision maps belong to that world. At the same time, high-precision maps are a mapping of the real world. Knowing the vehicle’s location on a high-precision map therefore means knowing its position in the real world. Solutions that use high-precision maps, such as XPeng NGP, NIO NOP, and Huawei ADS, combine on-board high-precision maps with RTK high-precision positioning signals to determine the vehicle’s lane-level position. With its lidar, the Huawei ADS system can also match against the positioning layer of the high-precision map to improve positioning accuracy and redundancy.

A car without high-precision maps and positioning is like a person without GPS or mobile navigation: it becomes much harder to answer “Where am I?” through passive navigation, and accuracy suffers. Such a person would first have to be trained to memorize the road features of the entire city, becoming an “active map”, in order to know where they are without relying on navigation and positioning. DJI hopes to let vehicles solve the “Where am I?” problem independently through a combination of vision and inertial navigation, much as humans rely on “memories”.

It is currently unclear whether DJI uses its own vehicle fleet to collect environmental information on highways and urban expressways to supplement the positioning layer of a high-precision map. However, the vehicle will certainly rely on its binocular cameras to perform visual SLAM and store the resulting map information as “memories”. When the vehicle passes the same location again, it can compare what it sees against those memories to obtain its position relative to a reference point; if the reference point carries absolute positioning information, the vehicle indirectly obtains a fairly accurate absolute position. In essence, this can be understood as using binocular visual SLAM together with visual crowdsourcing to solve the “Where am I?” problem. Of course, this sounds rather obsessive. Why not use ready-made centimeter-level positioning signals? DJI’s choice to rely on map-based “memories” rather than on high-precision positioning signals is actually intended to provide redundancy for when RTK signals are lost, and only sufficiently powerful technology can afford not to rely on hardware.
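The step of turning a relative position into an absolute one can be sketched as a 2D pose composition. This is only an illustration of the general idea, not DJI’s implementation: `Pose2D` and `compose` are hypothetical names, and the example assumes the stored reference point has a known absolute pose while visual matching supplies the vehicle’s pose relative to that reference.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float        # metres
    y: float        # metres
    heading: float  # radians, counter-clockwise from the x axis


def compose(reference: Pose2D, relative: Pose2D) -> Pose2D:
    """Express a pose given in the reference frame in the absolute frame."""
    c, s = math.cos(reference.heading), math.sin(reference.heading)
    heading = reference.heading + relative.heading
    # Wrap the heading into [-pi, pi).
    heading = (heading + math.pi) % (2.0 * math.pi) - math.pi
    return Pose2D(
        x=reference.x + c * relative.x - s * relative.y,
        y=reference.y + s * relative.x + c * relative.y,
        heading=heading,
    )


# Hypothetical stored "memory": a reference point with known absolute pose.
reference_abs = Pose2D(x=100.0, y=50.0, heading=math.pi / 2)
# Hypothetical result of visual matching: 5 m ahead of the reference point.
vehicle_rel = Pose2D(x=5.0, y=0.0, heading=0.0)

vehicle_abs = compose(reference_abs, vehicle_rel)
print(vehicle_abs)
```

The accuracy of the result is bounded by both the stored absolute pose of the reference point and the quality of the visual match, which is why the quality of the stored “memories” matters.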

Image from Qianxun Location

Knowing one’s own location is not enough for route planning. The system must also know the location of the destination and understand traffic signs and traffic rules in order to build a cognitive model of the real world. Without formal testing, we cannot determine whether DJI’s algorithm can match the level of systems that combine lidar with high-precision positioning. However, from a logical and technical perspective, it is achievable.

Conclusion

Many car companies claim that lidar is a redundant but essential sensor for autonomous driving. In practice, however, “lidar as redundancy” has become little more than a promotional gimmick. Redundancy means that the task can still be accomplished by alternative measures when the lidar fails. At present, lidar is not redundant; it is the main means of accomplishing specific functions in certain scenarios.

From this perspective, I am in favor of DJI’s intelligent driving solution, in which lidar is positioned as genuine redundancy. Using visual algorithms to reduce autonomous driving’s dependence on hardware presents significant technical challenges, but it is meaningful for advancing autonomous driving.

However, one should stay alert: the more perfect a technical solution seems, the bigger the bubble may be, and the greater the risk of compromises and concessions during practical implementation. Imperfect solutions may be the true face of autonomous driving.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.