What is the most likely cause of a Tesla Model Y accident?

I posted an answer in the “Disassembly Lab” section of @Zhihu Automotive:

It is most likely due to the outdated hardware used by Tesla.

The Model Y and other models in the Tesla fleet use Tesla's third-generation hardware, known as Hardware 3.0, or HW 3.0 for short.

Each generation of Tesla hardware is defined by its main control chip. Hardware 1.0 used a perception chip from Mobileye, the world's leading vision solution provider. Thanks to Mobileye's strong visual perception capability, Tesla introduced AutoPilot 1.0, which brought automated driving assistance into public view. After a serious traffic accident in 2016, however, Tesla quickly shifted the blame onto Mobileye; Mobileye refused to accept it and terminated the partnership. The incident made Tesla aware of the importance of developing its own algorithms. In October 2016, Tesla turned to Nvidia, adopted the Nvidia PX2, and expanded the number of perception cameras from one to eight; this became Hardware 2.0. Tesla later found that Nvidia's chips were expensive and that their computing power could not keep up with demand, so it began developing its own chip. In March 2019, Tesla released its self-driving chip, FSD, which became Hardware 3.0.

Tesla's Hardware 3.0 includes not only the brand-new FSD control chip but also eight cameras around the car body: three cameras under the windshield, one rear-facing camera near the front wheel on each side, one forward-facing camera on each B pillar, and one rear-facing camera under the rear logo. In addition, a millimeter-wave radar is installed inside the front bumper, and eight ultrasonic sensors, also known as parking radars, are installed around the car body.

All eight cameras on the vehicle come from the same supplier, ON Semiconductor, a semiconductor company based in the United States. These cameras were released in 2015 and have a resolution of 1.2 megapixels. The three cameras under the windshield have different focal lengths: the long-focus camera detects targets up to 250 meters away, the medium-focus camera detects targets up to 150 meters away, and the short-focus camera is used mostly for nearby targets and for detecting traffic lights. The two side cameras installed near the front wheels identify vehicles approaching from the side, and the two forward-facing cameras on the B pillars identify targets ahead. The single rear-facing camera detects targets farther behind the car.

Tesla's forward millimeter-wave radar is Continental's fourth-generation radar, the ARS4-B, which was released in 2016, more than four years ago. Millimeter-wave radar can make up for the shortcomings of cameras in measuring distance and speed accurately, and the industry often fuses millimeter-wave radar with camera data to achieve precise target perception. However, because of an inherent design limitation, Continental's fourth-generation radar cannot measure target height. When the car approaches an overpass ahead, the radar reports a stationary target, and it is difficult to tell whether that target is the bridge itself or a car actually parked under the bridge. In addition, millimeter-wave radar suffers from severe multipath effects in tunnels and underground garages, which produces many false detections of stationary targets. As a result, many manufacturers simply filter out all stationary targets during information fusion and use only moving targets. Tesla currently does this too, which led to the tragic accident in which a Tesla collided with a stationary white truck in Chiayi, Taiwan, in June 2020.
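The stationary-target filtering described above can be illustrated with a minimal Python sketch. It is not Tesla's or Continental's actual code: the `RadarTarget` type, the `drop_stationary` function, the 1 m/s threshold, and the simplification that every target lies straight ahead are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class RadarTarget:
    """Hypothetical radar detection, assumed to lie straight ahead."""
    range_m: float         # distance to the target in meters
    range_rate_mps: float  # relative radial speed; negative means closing


def ground_speed(target: RadarTarget, ego_speed_mps: float) -> float:
    # For a target straight ahead, its speed over the ground is roughly
    # the ego speed plus the measured range rate.
    return ego_speed_mps + target.range_rate_mps


def drop_stationary(targets, ego_speed_mps, threshold_mps=1.0):
    # Keep only targets that move over the ground. The threshold is an
    # assumed value, not one taken from any real system.
    return [
        t for t in targets
        if abs(ground_speed(t, ego_speed_mps)) > threshold_mps
    ]


ego_speed = 30.0  # ego vehicle speed in m/s
targets = [
    RadarTarget(range_m=120.0, range_rate_mps=-30.0),  # overpass or stopped truck
    RadarTarget(range_m=60.0, range_rate_mps=-5.0),    # lead car at 25 m/s
]
kept = drop_stationary(targets, ego_speed)
```

In this sketch the first target closes at exactly the ego speed, so its ground speed is zero and it is discarded. Without height information the radar data alone cannot say whether it was a bridge or a stopped truck, which is the trade-off the paragraph above describes.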

As the leader of Tesla, Elon Musk is accustomed to reasoning from "first principles". In his view, since humans can perceive the traffic environment with only their eyes, identifying lane lines, traffic lights, and signs, Tesla must also be able to complete the autonomous driving perception task with only the eight cameras on the car. He even made the startling statement that "those who use lidar are idiots", leaving autonomous driving practitioners around the world speechless.

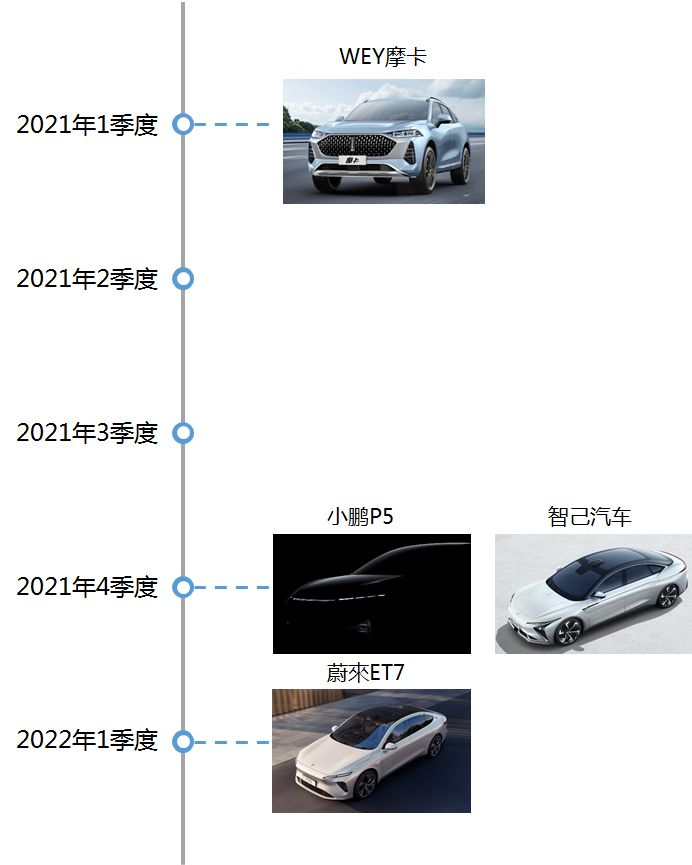

In fact, the Chinese automakers XPeng, NIO, Great Wall, and IM have all put new sensor schemes on their agendas. These schemes all use lidar as the main sensor for autonomous driving.

With its current vision-only, non-redundant approach, the Tesla Model Y will struggle to achieve fully automated driving in urban areas in China. Urban roads contain many occlusion scenarios, and irregular obstacles may suddenly cross the road. If the data set used to train the vision algorithm does not include these types of obstacles, it is difficult to ensure driving safety. A sensor scheme with multiple layers of redundancy is therefore the only way to handle extremely complex scenarios, and domestic manufacturers have already brought the price of lidar down to an acceptable range. So, Musk, shall we install one?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.