Let me provide a bit of insight into Tesla’s assisted driving system.

This “insight” starts with the fact that Tesla uses many sensors that were developed more than five years ago.

The Tesla Model Y and the other models in its lineup use Tesla’s third-generation hardware platform, HW 3.0 (short for Hardware 3.0).

Each generation of Tesla’s hardware has been defined by its main control chip. Hardware 1.0 used the perception chip from Mobileye, the world’s leading supplier of vision solutions. Thanks to Mobileye’s strong visual perception capability, Tesla launched Autopilot 1.0, bringing automated driver assistance into public view. However, Tesla’s good times didn’t last long. After a serious traffic accident in 2016, the blame was placed squarely on Mobileye; unwilling to accept it, Mobileye terminated its cooperation with Tesla. The incident made Tesla realize the importance of developing its own algorithms. In October 2016, Tesla turned to Nvidia and released Hardware 2.0, built on the Nvidia Drive PX 2 platform, increasing the number of perception cameras from 1 to 8. Tesla later concluded that Nvidia’s chips were not only expensive but also could not deliver the computing power it needed, so it began developing its own chips. Hardware 3.0 was released in March 2019 together with Tesla’s self-developed FSD (Full Self-Driving) chip.

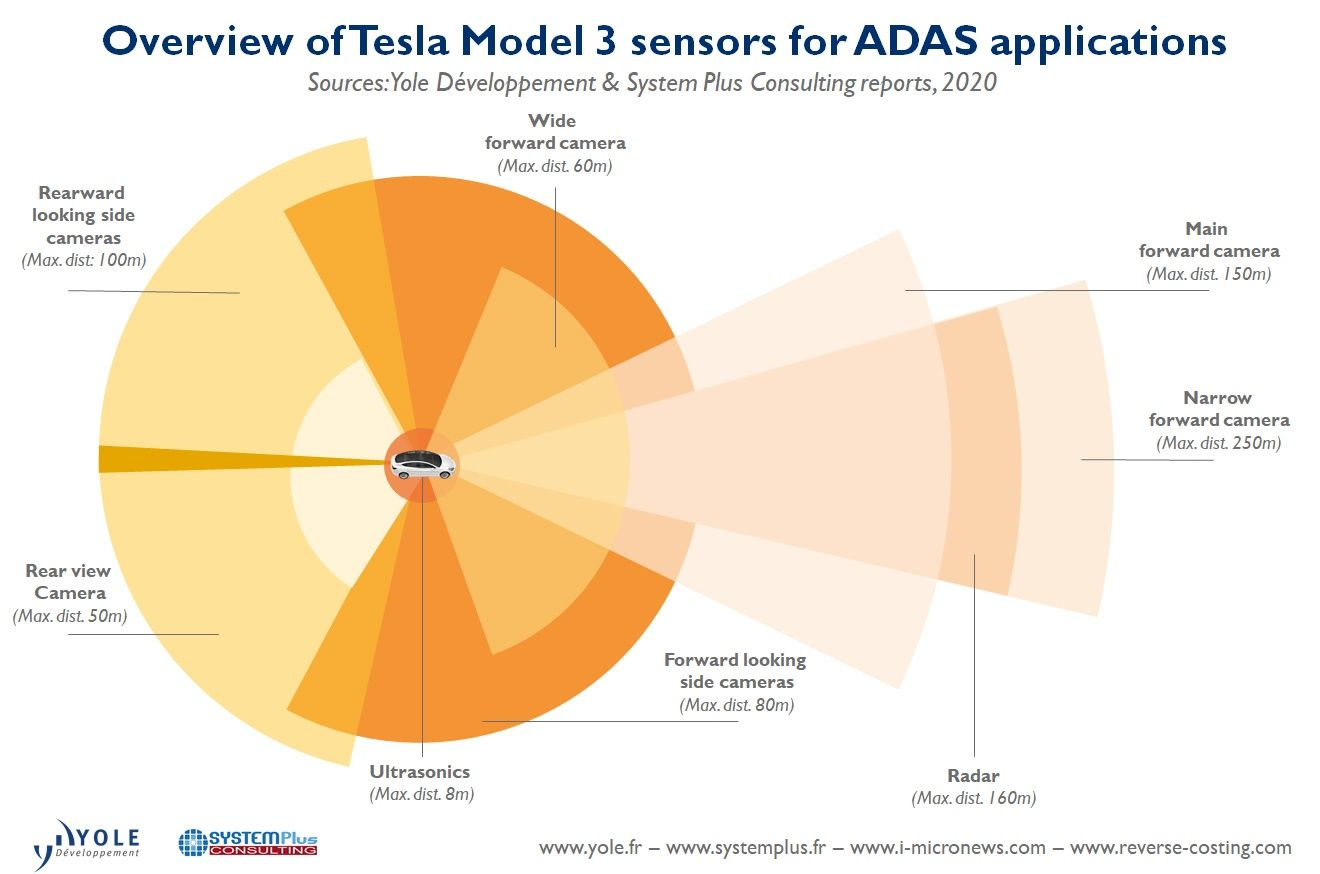

In addition to the new FSD chip, Tesla Hardware 3.0 includes 8 cameras around the car (3 side by side under the windshield, 2 rear-facing cameras on the sides of the car near the front wheels, 2 forward-facing cameras on the left and right B-pillars, and 1 rear-facing camera below the rear badge), 1 millimeter-wave radar mounted behind the front bumper, and 8 ultrasonic sensors, also known as parking sensors, around the car.

Tesla’s 8 cameras all come from the same supplier, ON Semiconductor, a US-based semiconductor company. These cameras were released in 2015 and have a resolution of 1.2 megapixels. Under the windshield sit 3 cameras with different focal lengths: the long-focal camera detects targets up to 250 meters away, the medium-focal camera detects targets up to 150 meters away, and the short-focal camera, though it cannot see far, has a wide field of view and is therefore mainly used to detect nearby targets and traffic lights. The 2 side cameras near the front wheels face rearward and recognize vehicles approaching from behind on either side, the 2 forward-facing cameras on the B-pillars recognize targets ahead on either side, and the rear camera detects targets behind the car.
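To make the overlap between the three windshield cameras concrete, here is a minimal Python sketch that models them as a small lookup table and asks which cameras can nominally see a target at a given distance. The class and function names are hypothetical; the 250 m and 150 m ranges come from the text, while the 60 m range for the short-focal camera is an assumption based on figures Tesla has published, since the article gives no number. Real detection ranges also depend on lighting, weather, and target size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ForwardCamera:
    name: str
    max_range_m: float  # nominal maximum detection distance
    role: str


# 250 m and 150 m are from the text; 60 m for the wide camera is an
# assumption based on Tesla's published figures, not on this article.
FORWARD_CAMERAS = [
    ForwardCamera("long-focal", 250.0, "distant targets"),
    ForwardCamera("medium-focal", 150.0, "general target detection"),
    ForwardCamera("short-focal", 60.0, "nearby targets and traffic lights"),
]


def cameras_covering(distance_m: float) -> list[str]:
    """Return the windshield cameras whose nominal range reaches distance_m."""
    return [c.name for c in FORWARD_CAMERAS if distance_m <= c.max_range_m]


print(cameras_covering(200.0))  # ['long-focal']
print(cameras_covering(40.0))   # ['long-focal', 'medium-focal', 'short-focal']
```

A target at 200 m is covered only by the long-focal camera, while one at 40 m falls inside all three ranges, which is where the wide camera's field of view matters most.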

Tesla’s forward millimeter-wave radar is Continental’s fourth-generation ARS4-B, which was officially released in 2016 and has now been in service for more than 4 years. Millimeter-wave radar compensates for cameras’ weakness in measuring distance and speed accurately, so the industry commonly fuses radar and camera data to achieve precise target perception. However, the Continental fourth-generation radar cannot measure a target’s height. When the car approaches a pedestrian bridge, for example, the radar detects a stationary target, and its data alone cannot tell whether that target is the bridge or a car parked underneath it. In addition, millimeter-wave radar suffers from severe multipath effects in tunnels and underground car parks, producing many stationary false positives. For these reasons, many companies in the industry filter out all stationary targets and fuse only moving ones, and this is currently what Tesla does. As a result, in June 2020 a Tesla in Chiayi, Taiwan, crashed into a stationary, overturned white truck.
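The stationary-target filtering described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not Tesla's or Continental's actual fusion code: each radar return is modeled with only range, azimuth, and Doppler range rate (no elevation, matching the limitation above), all names are hypothetical, and the 1 m/s threshold is an arbitrary choice. A target's speed along the line of sight is estimated by adding the ego vehicle's motion back to the measured range rate; anything near zero is treated as stationary clutter and dropped.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RadarTarget:
    range_m: float         # distance to the target
    azimuth_rad: float     # horizontal angle; 0 = straight ahead
    range_rate_mps: float  # Doppler radial velocity; negative = closing in
    # No elevation field: this radar model cannot measure target height.


def ground_speed(target: RadarTarget, ego_speed_mps: float) -> float:
    """Estimate the target's own speed along the line of sight.

    A stationary object appears to close in at ego_speed * cos(azimuth),
    so adding that ego-motion component back cancels it out.
    """
    return target.range_rate_mps + ego_speed_mps * math.cos(target.azimuth_rad)


def keep_moving_targets(targets, ego_speed_mps, threshold_mps=1.0):
    """Drop targets whose estimated ground speed is below the threshold."""
    return [t for t in targets
            if abs(ground_speed(t, ego_speed_mps)) >= threshold_mps]


ego = 25.0  # ego speed in m/s (about 90 km/h)
targets = [
    RadarTarget(120.0, 0.0, -25.0),  # stopped truck in our lane
    RadarTarget(80.0, 0.0, -25.0),   # overhead pedestrian bridge: same return
    RadarTarget(60.0, 0.0, -5.0),    # lead car travelling at 20 m/s
]
kept = keep_moving_targets(targets, ego)
print([t.range_m for t in kept])  # [60.0]
```

In this model the stopped truck and the overhead bridge produce identical returns, so a filter that discards one necessarily discards the other, which is exactly the trade-off the paragraph describes.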

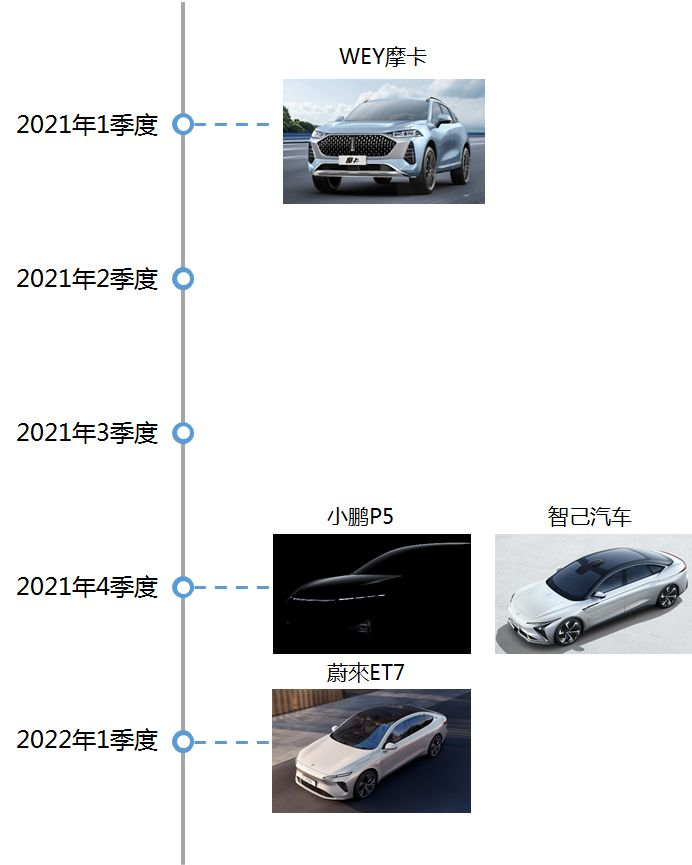

Tesla CEO Elon Musk is accustomed to reasoning from “first principles.” In his view, since humans can perceive the traffic environment, recognizing lane lines, traffic lights, and signs, with nothing but their eyes, Tesla should likewise be able to drive autonomously using only the 8 cameras on the vehicle. He even declared that lidar is “a fool’s errand” and that anyone relying on it is “doomed,” remarks that caused consternation across the global autonomous driving community.

Meanwhile, the Chinese electric vehicle makers XPeng, NIO, Great Wall, and IM (Zhiji) have already put entirely new sensor suites on their agendas, all of which use lidar as a primary sensor for autonomous driving.

Tesla’s current pure-vision, non-redundant solution for the Model Y makes fully autonomous driving in Chinese cities hard to achieve. Urban roads are full of scenes with obstacles. If a rare, unfamiliar obstacle suddenly appears and the visual algorithm’s training data contains no examples of it, driving safety cannot be guaranteed. A multi-redundant sensor suite is therefore the only way to handle extremely complex scenes. Moreover, Chinese manufacturers have brought lidar prices down to an acceptable level. How about we install one, Mr. Musk?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.