Author: Chris Zheng

Around January 1st, 2021, some rare Tesla models were spotted by foreign netizens in Silicon Valley, including Model S, Model Y, and Model X.

The commonalities of the above models can be summarized as follows:

- Equipped with a 360° laser radar perception system
- The license plates are all 63277

63277 is the manufacturer ID assigned to Tesla by the California Department of Motor Vehicles, which means these cars belong to Tesla's internal laser radar test fleet.

So, how should we interpret Elon Musk’s wavering attitude toward laser radar? :)

Ground Truth

Let’s take a closer look at the details of these photos. Thanks to their distinctive styling, the sensors are easy to identify as Hydra series units from Luminar, a laser radar supplier.

Luminar officially defined the Hydra series as a complete toolkit for testing and program development.

The public specification data from Luminar shows that the Hydra series can have a field of view of up to 120°, meaning that three Hydras can cover the entire 360° surroundings of a car.

However, Tesla seems to have a different view on this. Each of their test cars is equipped with four Hydras to better achieve 360° laser perception.
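The coverage arithmetic can be checked in a few lines. This is a minimal sketch that assumes idealized sensors mounted evenly around the car; the function name `min_sensors_for_coverage` and the 20° overlap are hypothetical, chosen only to show how a need for overlap between neighbouring sensors could raise the count from three to four. It is not a statement of Tesla's actual reasoning.

```python
import math

def min_sensors_for_coverage(fov_deg: float,
                             total_deg: float = 360.0,
                             overlap_deg: float = 0.0) -> int:
    """Smallest number of sensors whose fields of view tile `total_deg`.

    Around a full circle each sensor shares one overlap region with its
    neighbour, so it contributes (fov_deg - overlap_deg) of new coverage.
    """
    effective = fov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(total_deg / effective)

# 120 degree field of view, no overlap required
print(min_sensors_for_coverage(120))                  # 3
# Same sensor, but with an assumed 20 degree overlap between neighbours
print(min_sensors_for_coverage(120, overlap_deg=20))  # 4
```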

So, what is Tesla doing with the test laser radars? Does it indicate that Elon Musk will add laser radars to the Autopilot perception system?

According to a former Tesla engineer, Tesla has been using Luminar’s laser radars for depth sensing, taking their point cloud output as the ground truth against which other results are compared.

What is ground truth? On Zhihu, there is a question that goes like this: The term “ground truth” frequently appears in machine learning. Could you explain what it means accurately?

Facebook research scientist Yuanyuan Wang answered below: It means “standard answer”.

From Navigate on Autopilot to the FSD Beta that has been scaled for testing in North America, Tesla is undergoing a transformation from 2D visual recognition to 4D perception based on temporal video streams. In this technological leap, Tesla aims to solve an issue: providing 3D perception capabilities similar to those of lidar based on camera input.

In the process, Tesla compares the perception output of their in-house Vidar (Vision LIDAR) visual lidar algorithm with the “ground truth” output of real lidar Luminar Hydra for quick iteration and improvement.
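To make the idea of a ground truth comparison concrete, here is a minimal sketch that scores camera-predicted depths against laser radar depths. Tesla has not published its evaluation code; the function name `depth_errors`, the two metrics (mean absolute relative error and RMSE, both common in depth estimation work), and the sample numbers are all assumptions for illustration.

```python
import math

def depth_errors(predicted_m, ground_truth_m):
    """Compare predicted depths with ground-truth depths (both in metres).

    Points without a valid ground-truth return (depth <= 0) are skipped.
    Returns (mean absolute relative error, root mean squared error).
    """
    pairs = [(p, g) for p, g in zip(predicted_m, ground_truth_m) if g > 0]
    if not pairs:
        raise ValueError("no valid ground-truth points")
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / len(pairs)
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / len(pairs))
    return abs_rel, rmse

# Made-up sample: 0.0 marks a pixel with no laser return
lidar_depths = [5.0, 10.0, 20.0, 0.0, 40.0]
vision_depths = [5.5, 9.0, 22.0, 30.0, 36.0]

abs_rel, rmse = depth_errors(vision_depths, lidar_depths)
print(f"abs_rel={abs_rel:.3f}  rmse={rmse:.2f} m")
```

Tracking numbers like these across software versions is one way such a comparison could drive quick iteration.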

From a results-oriented perspective, Tesla has made tremendous progress in the field of 3D visual perception.

The following image, demonstrated by Tesla at their Autonomy Day on April 23, 2019, combines millimeter-wave radar with cameras to provide perception through deep neural networks.

From the bird’s-eye view angle on the left, we can see that the deep neural network predicts distance accurately as the SUV in the left lane accelerates away and the pickup truck approaches from the right, but there are deviations in predicting the size and orientation of both vehicle types.

In February 2020, Tesla Senior AI Director Andrej Karpathy presented at Scaled ML 2020, which included a slide showing the latest performance of Tesla’s camera-based depth prediction.

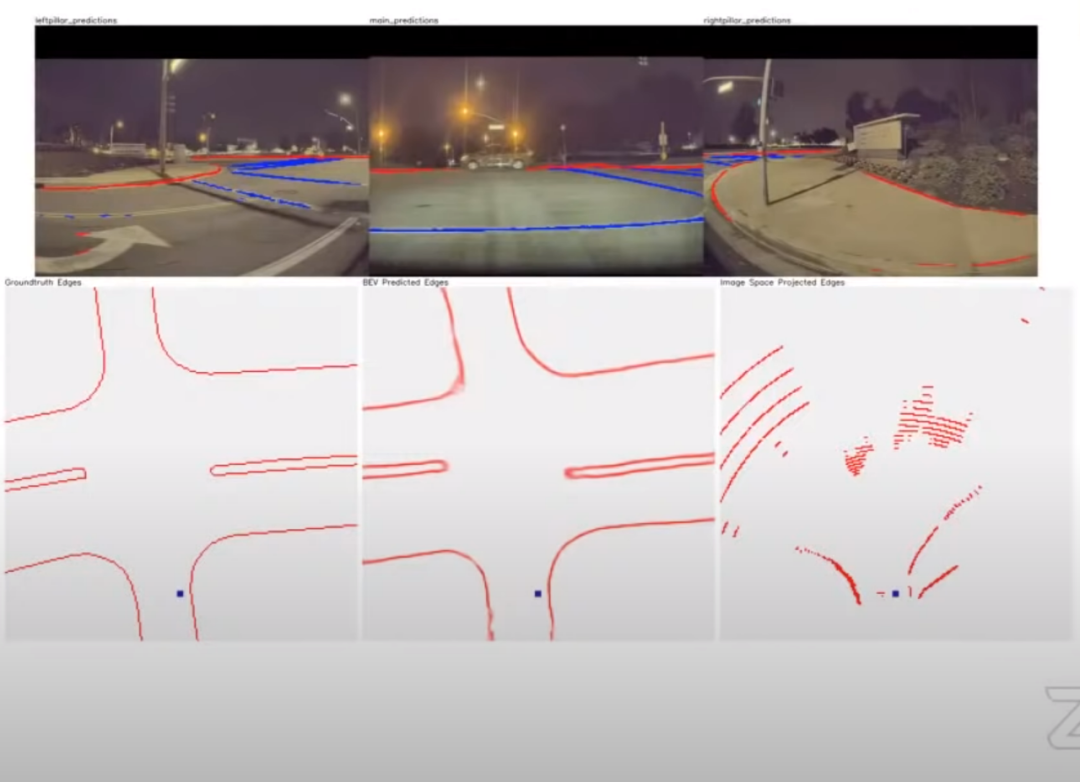

Each of the six small images in the slide has a line of text in its upper left corner. The top three show predictions from the left front camera, main front camera, and right front camera.

The bottom three are labeled Ground Truth Edges, BEV Projected Edges, and ImageSpace Projected Edges.

BEV stands for Bird’s Eye View, which is the sequence-based 360° video perception architecture mentioned by Tesla CEO Elon Musk and is currently the technical basis for Tesla’s FSD Beta North America internal testing.

Therefore, from left to right, they are mapping based on perception of laser point clouds, mapping based on 4D visual perception, and mapping based on 2D image perception.

As shown in the figure, although the BEV mapping in the middle is still not as good as the “standard answer” laser point cloud mapping on the left, it is already very close and has almost no error information in this simple road scenario.

The mapping based on 2D image perception on the right shows a certain degree of accuracy only when the distance to the vehicle (purple dot in the figure) is very close, and the perception at medium and long distances is far from the “standard answer” and is a complete mess.
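The observation that accuracy falls off with distance can be quantified by grouping errors into range bins. The sketch below is illustrative only: the function name `mean_error_by_range`, the 20 m bin size, and the sample points are assumptions, not data from the slide.

```python
from collections import defaultdict

def mean_error_by_range(points, bin_size_m=20.0):
    """Average position error per distance bin.

    `points` is an iterable of (ground_truth_range_m, position_error_m).
    Returns a dict mapping each bin's start distance to its mean error.
    """
    sums = defaultdict(lambda: [0.0, 0])  # bin start -> [error total, count]
    for range_m, error_m in points:
        start = int(range_m // bin_size_m) * bin_size_m
        sums[start][0] += error_m
        sums[start][1] += 1
    return {start: total / count
            for start, (total, count) in sorted(sums.items())}

# Made-up errors that grow with distance, as described for the right panel
samples = [(5, 0.2), (15, 0.4), (35, 2.0), (55, 6.0), (58, 8.0)]
for start, err in mean_error_by_range(samples).items():
    print(f"{start:.0f}-{start + 20:.0f} m: mean error {err:.2f} m")
```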

In summary, Tesla’s purchase of Luminar’s laser radar is to use the depth perception of laser radar as a reference to better tap the potential of visual perception.

Will Tesla install laser radar?

Although Elon Musk has repeatedly stated that Tesla will not install laser radar as a matter of technical direction, a widely voiced opinion in the industry holds that Tesla avoids laser radar mainly because its cost has not yet fallen to the threshold of commercialization.

On the other hand, 2021 will be the first year of mass production and installation of laser radar, and a large number of new models released in 2021, from brands including NIO, XPeng, BMW, BAIC Arcfox, and Lucid Motors, will go on sale equipped with laser radar.

What about Tesla?

On February 19, 2019, Tesla applied for a patent named “Estimating Object Properties Using Visual Image Data”, which was authored by Ashok Elluswamy, Tesla’s Perception & Computer Vision Director for Autopilot software.

In this patent, Tesla describes a method that iterates on 4D visual perception by comparing it against laser point cloud data as ground truth.

An interesting paragraph can be found in the detailed description of this patent:

For example, once the machine learning model has been trained to be able to determine an object distance using images of a camera without a need of a dedicated distance sensor, it may become no longer necessary to include a dedicated distance sensor in an autonomous driving vehicle.

When used in conjunction with a dedicated distance sensor, this machine learning model can be used as a redundant or a secondary distance data source to improve accuracy and / or provide fault tolerance.

In this statement, Tesla publicly asserts for the first time their design of redundant perception for autonomous driving.

As we know, any single sensor can fail or malfunction in autonomous driving perception, and redundancy is an important factor in fault tolerance. In this patent, Tesla indicated for the first time that once the potential of vision has been pushed to its limit, it can serve as an independent redundant or auxiliary source of perception in future Tesla self-driving cars.
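The redundancy idea can be sketched as a simple arbitration between two independent distance sources. The patent text quoted above does not specify any fusion policy, so everything here is an assumption for illustration: the function name `fused_distance`, the 2 m disagreement threshold, and the choice to fall back to the nearer reading when the sources disagree.

```python
from typing import Optional, Tuple

def fused_distance(vision_m: Optional[float],
                   sensor_m: Optional[float],
                   max_disagreement_m: float = 2.0
                   ) -> Tuple[Optional[float], str]:
    """Combine a vision estimate with a dedicated distance sensor reading.

    Either input may be None when that source has failed or has no data.
    Returns (distance in metres or None, status label).
    """
    if vision_m is None and sensor_m is None:
        return None, "no_data"
    if sensor_m is None:
        return vision_m, "vision_only"   # fault tolerance: sensor dropped out
    if vision_m is None:
        return sensor_m, "sensor_only"   # fault tolerance: vision dropped out
    if abs(vision_m - sensor_m) > max_disagreement_m:
        # Assumed conservative policy: trust the nearer obstacle estimate
        return min(vision_m, sensor_m), "disagreement"
    return (vision_m + sensor_m) / 2, "agree"

print(fused_distance(30.0, 31.0))   # both sources healthy and consistent
print(fused_distance(30.0, None))   # dedicated sensor unavailable
print(fused_distance(30.0, 45.0))   # sources disagree beyond the threshold
```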

So, in the next generation of Tesla’s Autopilot hardware architecture HW 4.0, will Tesla equip it with a dedicated distance sensor: laser radar or higher performance millimeter-wave radar?

On this question, Elon made two points when answering netizens on November 12, 2020. First, he clarified once again that Tesla's decision not to use laser radar was not driven by any personal bias: SpaceX itself developed and uses laser radar to dock the Dragon spacecraft with the space station. Second, he reaffirmed his position on autonomous driving sensors beyond cameras: millimeter-wave radar with a wavelength of less than 4 mm is a better choice.

Millimeter-wave (MMW) radar refers to radar with a working wavelength of 1-10 mm, corresponding to a frequency range of 30-300 GHz.

In the automotive industry, mainstream millimeter-wave radars operate at frequencies concentrated around 24 GHz, 77 GHz, and 60 GHz (mainly in Japan), where 24 GHz radar is mainly responsible for short-range perception (SRR), while 60/77 GHz radar is mainly responsible for medium-to-long-range perception (LRR).

In terms of wavelength, the wavelength of 24 GHz is 1.25 cm, the wavelength of 60 GHz is 5 mm, and the wavelength of 77 GHz is 3.9 mm.
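These wavelengths follow from the relation wavelength = c / frequency. The short sketch below reproduces them using the exact speed of light, so the results differ slightly from the rounded figures above (which correspond to c ≈ 3 × 10^8 m/s); the helper name `wavelength_mm` is made up for illustration.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact, by definition of the metre

def wavelength_mm(frequency_ghz: float) -> float:
    """Free-space wavelength in millimetres for a frequency in GHz."""
    return SPEED_OF_LIGHT_M_S / (frequency_ghz * 1e9) * 1e3

for f_ghz in (24, 60, 77):
    wl = wavelength_mm(f_ghz)
    # Flag which bands satisfy the "less than 4 mm" criterion
    print(f"{f_ghz} GHz -> {wl:.2f} mm (under 4 mm: {wl < 4})")
# 24 GHz -> 12.49 mm (under 4 mm: False)
# 60 GHz -> 5.00 mm (under 4 mm: False)
# 77 GHz -> 3.89 mm (under 4 mm: True)
```

Of the three mainstream automotive bands, only 77 GHz falls under the 4 mm wavelength mentioned by Elon.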

Combining the radar frequency bands opened by the transportation departments of various countries, Elon’s statements, and the occasional rumors over the past two years that Tesla is developing its own radar, we can speculate that the next-generation Autopilot HW 4.0 is more likely to be equipped with Tesla’s self-developed 77 GHz imaging millimeter-wave radar to further enhance the redundancy and fault tolerance of the perception system.

After the release of FSD Beta, the Tesla Autopilot department entered a high-speed iteration mode, pushing an update once a week. As of this week, FSD Beta has iterated to version 9. According to Elon, FSD Beta will be pushed to the entire Early Access Program within the next one to two weeks.

Credit for such rapid progress goes to Luminar’s LiDAR. The lengthy prelude finally echoes the title:

Tesla: I endorse LiDAR!

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.