Last week, we published a popular-science article introducing LiDAR to beginners. It received a lot of positive feedback, and many readers asked us to provide more related analyses.

So let’s start with NIO, the most talked-about automaker of late.

At NIO Day on January 9, Li Bin remarked casually, “Today, we take intelligent electric vehicles one step closer, from assisted driving to autonomous driving.” I understood then that NIO was about to make a big move.

New Heights for Perception Hardware in Mass Production Cars

Start with the name. NIO’s current assisted driving system is called NIO Pilot, while the autonomous driving system unveiled at the event is called NAD, short for NIO Autonomous Driving. That NIO puts “autonomous driving” front and center in its marketing shows how much it expects of this system, and that confidence comes largely from NAD’s unprecedented hardware configuration.

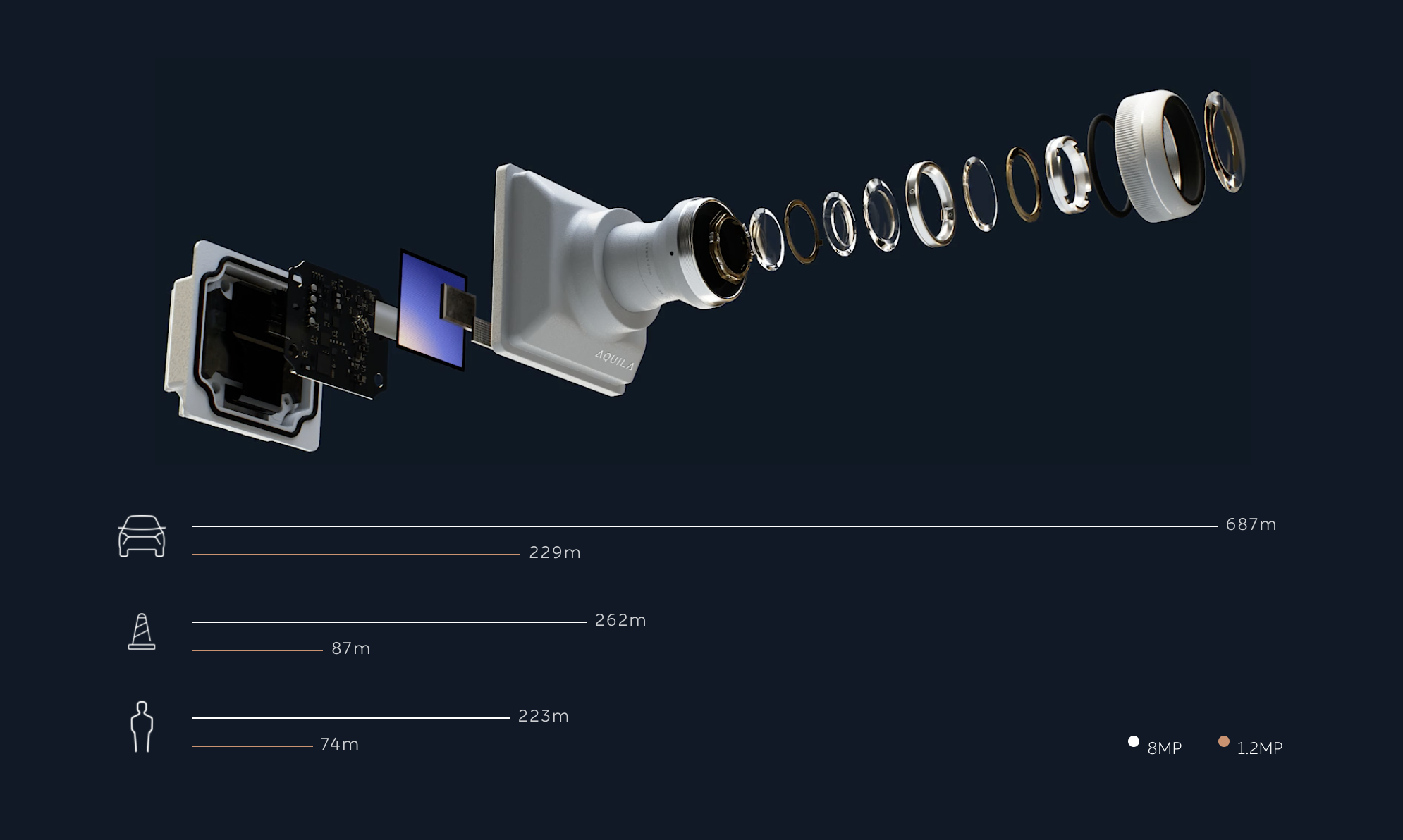

The ET7 is equipped with a large number of smart driving sensors: eleven 8-megapixel high-definition ADAS cameras, five millimeter-wave radars, twelve ultrasonic radars, two positioning units (GPS + IMU), one enhanced driver perception unit, and one V2X vehicle-road collaborative perception unit.

Among them, the 8-megapixel ADAS camera is a global first. NIO claims it offers higher resolution and a longer visual perception distance than the 1.2-megapixel cameras Tesla currently uses; according to the slides shown at the event, its perception distance is nearly three times greater.
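Why would more megapixels mean seeing farther? A rough rule of thumb, sketched below under our own assumptions (same field of view and aspect ratio, a pinhole camera, and a detector that needs a fixed number of pixels on a target), is that range scales with linear resolution, which is the square root of the pixel-count ratio. The function names and the 100 m baseline are hypothetical.

```python
import math


def linear_resolution_gain(new_megapixels: float, old_megapixels: float) -> float:
    """Ratio of pixels per degree between two sensors with the same FOV and aspect ratio."""
    # Pixel count grows with the square of linear resolution, hence the square root.
    return math.sqrt(new_megapixels / old_megapixels)


def scaled_detection_range(base_range_m: float, gain: float) -> float:
    """Range at which a target still covers the same number of pixels."""
    # In a pinhole camera, a target's image size falls off as 1 / distance,
    # so k times more pixels per degree keeps it detectable k times farther away.
    return base_range_m * gain


gain = linear_resolution_gain(8.0, 1.2)
print(f"linear resolution gain: {gain:.2f}")                                 # 2.58
print(f"100 m baseline becomes {scaled_detection_range(100.0, gain):.0f} m")  # 258 m
```

A gain of about 2.6x is consistent with the “nearly three times” on NIO’s slide, but real lenses differ in field of view, so treat this only as an order-of-magnitude check.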

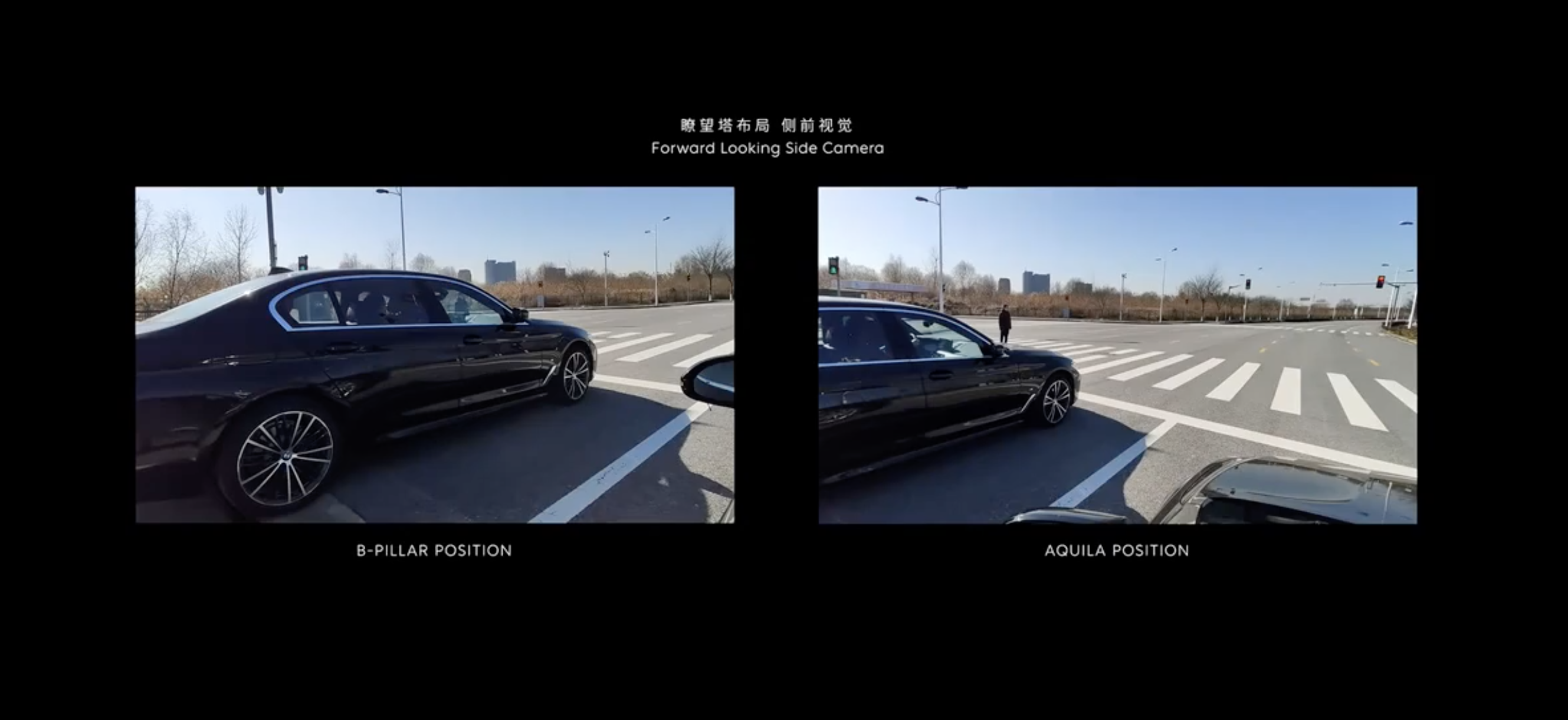

In addition, NIO places the cameras on the roof rather than on the B-pillars, which gives them a higher, wider field of view, reduces blind spots, and improves system safety in scenarios where visibility around the vehicle is poor.

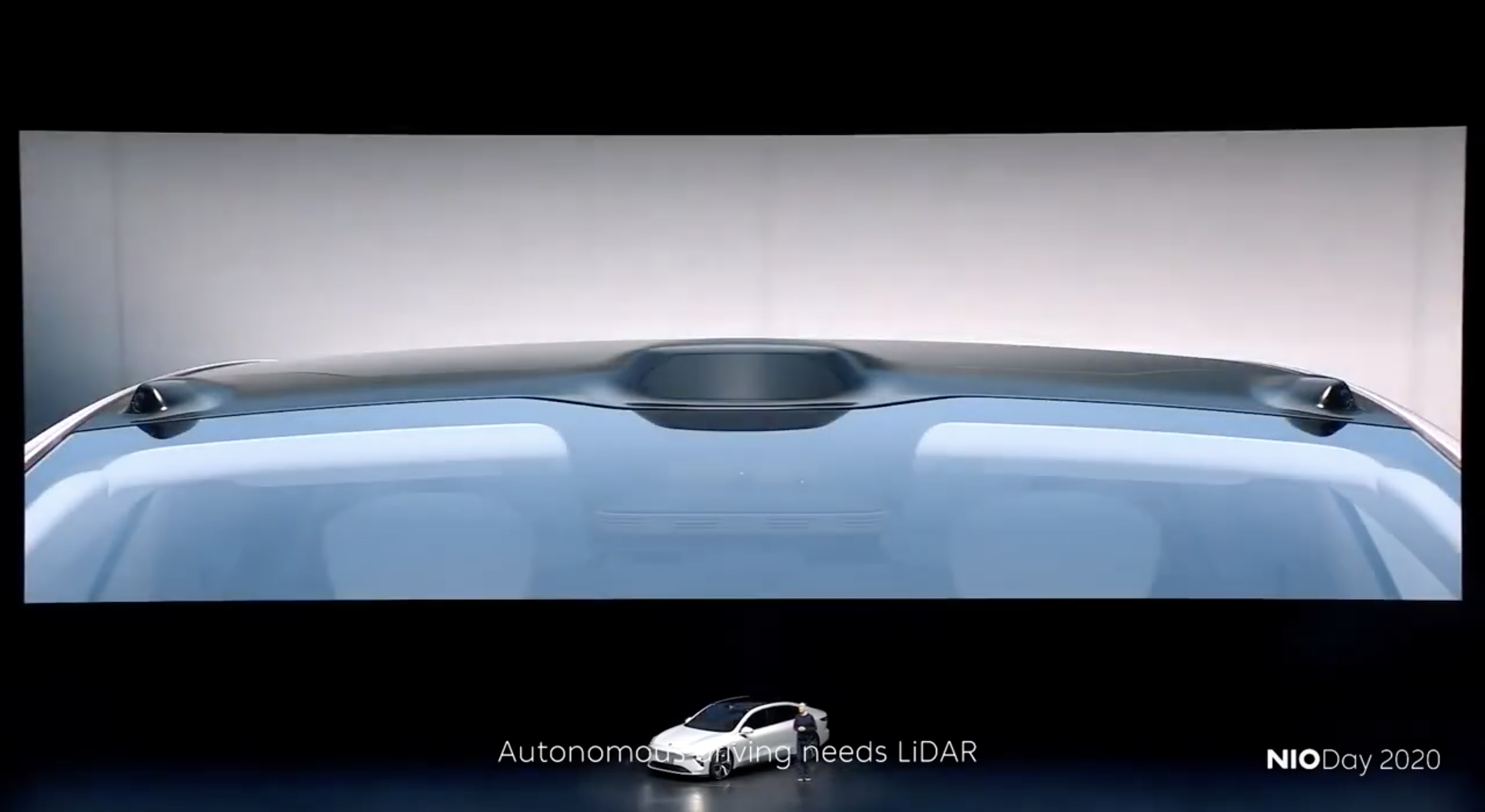

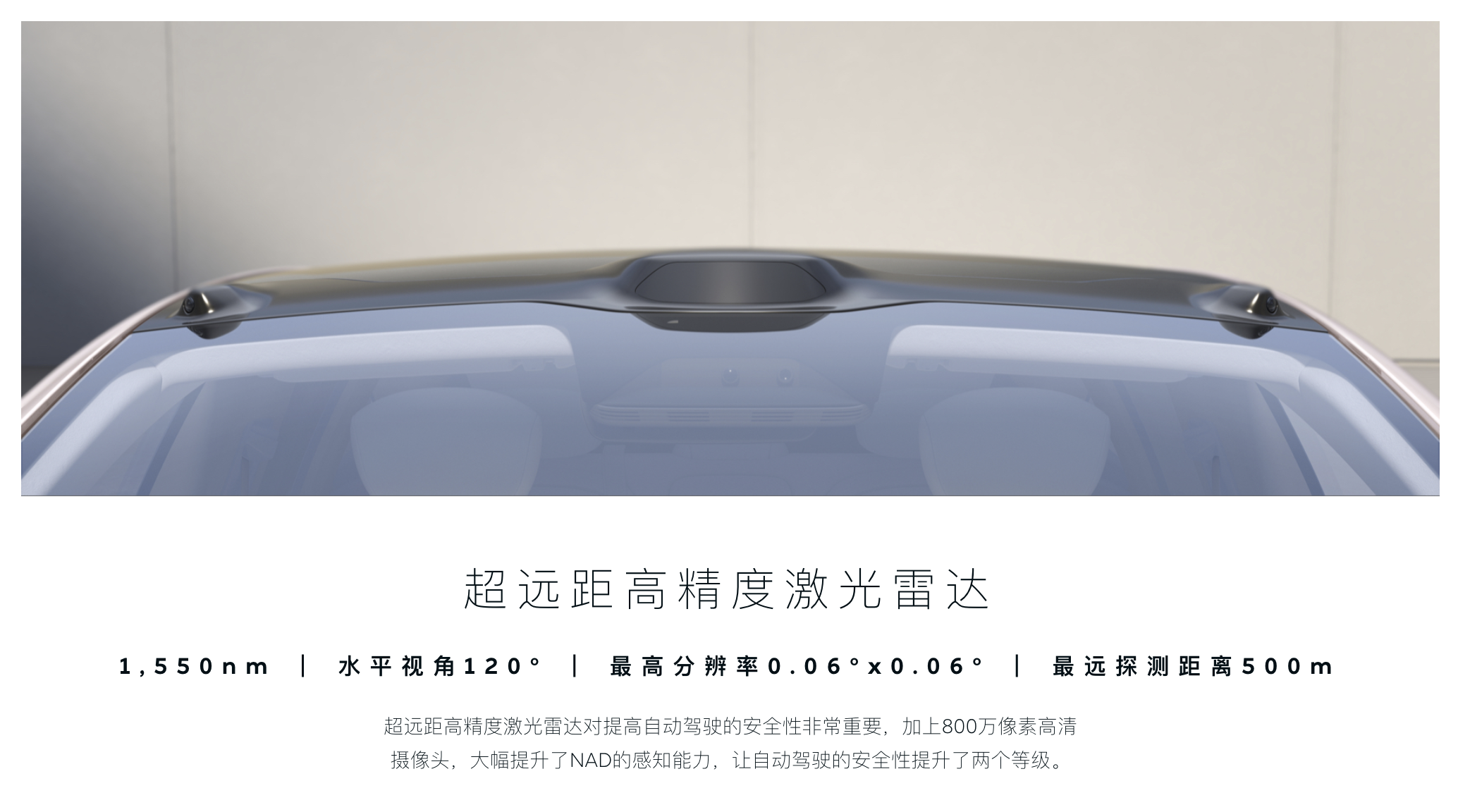

Even with these 32 sensors across six categories, NIO still did not consider it enough. As Li Bin remarked slowly, “autonomous driving needs LiDAR,” the big screen showed a close-up of the ET7’s roof: the NIO ET7 is equipped with LiDAR.

Why Did Li Bin Say “Autonomous Driving Needs LiDAR”?

Currently, the main sensors that intelligent driving systems use to perceive the road environment are cameras and various types of radar. The key reason manufacturers combine different types of sensors is that no single type does everything well.

Cameras: Most Informative, Most Difficult to Measure Distance

Vision is an information-rich perception method: shape, color, object type, and motion status can all be obtained visually. Human driving is also based on visual information.

However, machines differ from humans. Although cameras capture images, extracting higher-level information such as shape, distance, object type, and motion status from them is a complex process that requires extensive image-processing algorithms. Simply put, a machine cannot read the driving environment directly off a camera image.

The hardest information to obtain indirectly through images and algorithms is distance, that is, depth. Because a camera captures only a 2D projection of the scene, distance must be recovered through a series of transformations on the 2D image, which inevitably introduces errors. To measure distance more directly and accurately, vehicles are therefore fitted with radar sensors.
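To see why camera ranging is error-prone, consider the simplest monocular method (our illustrative choice, not necessarily what any automaker uses): if an object’s real height is known, a pinhole camera model gives its distance from how many pixels tall it appears. The focal length and car height below are assumed values. A single pixel of measurement error barely matters up close but grows roughly with the square of distance:

```python
def distance_from_height(focal_px: float, real_height_m: float, image_height_px: float) -> float:
    """Pinhole-camera range estimate: distance = focal * real_height / image_height."""
    return focal_px * real_height_m / image_height_px


def one_pixel_error(true_distance_m: float,
                    focal_px: float = 2000.0,              # hypothetical focal length in pixels
                    real_height_m: float = 1.5) -> float:  # assumed height of a car
    """Distance error caused by under-measuring the target's image height by one pixel."""
    ideal_height_px = focal_px * real_height_m / true_distance_m
    estimate = distance_from_height(focal_px, real_height_m, ideal_height_px - 1.0)
    return estimate - true_distance_m


for d in (20.0, 50.0, 100.0):
    print(f"{d:.0f} m -> {one_pixel_error(d):.2f} m error")
# 20 m -> 0.13 m error
# 50 m -> 0.85 m error
# 100 m -> 3.45 m error
```

In practice the object’s real size is itself uncertain, which adds further error on top of this pixel-quantization effect.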

The Radar Family: Each Has Its Own Strengths and Limitations

The radars currently used in intelligent driving include millimeter-wave radar, ultrasonic radar, and LiDAR. Ultrasonic radar is a mechanical-wave sensor whose waves travel at the speed of sound. Because of its long echo delay and wide beam spread, it struggles with long-distance detection and is commonly used to detect objects within a few meters at low speeds.

Millimeter-wave radar is an electromagnetic-wave sensor whose waves travel at the speed of light. Compared with ultrasonic radar, it offers much greater detection range and accuracy, and it can handle long-range detection at higher speeds and over distances of 100 meters or more. Most millimeter-wave radars also cost only a few hundred yuan, which is why this sensor is commonly used for ranging in assisted driving.
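The gap in wave speed explains much of the difference in range and latency. The sketch below uses a simplified pulsed time-of-flight model (automotive millimeter-wave radars usually use FMCW modulation instead, so this only illustrates propagation delay); the 5 m and 100 m distances and the 60 km/h speed are illustrative values.

```python
SPEED_OF_SOUND_M_S = 343.0           # in air at roughly 20 °C
SPEED_OF_LIGHT_M_S = 299_792_458.0   # in vacuum; close enough for air


def round_trip_time_s(distance_m: float, wave_speed_m_s: float) -> float:
    """Time for a pulse to reach a target and return (simplified pulsed time of flight)."""
    return 2.0 * distance_m / wave_speed_m_s


ultrasonic_s = round_trip_time_s(5.0, SPEED_OF_SOUND_M_S)
radar_s = round_trip_time_s(100.0, SPEED_OF_LIGHT_M_S)

# How far a car at 60 km/h travels while waiting for a single ultrasonic echo.
car_speed_m_s = 60 / 3.6
print(f"ultrasonic echo from 5 m: {ultrasonic_s * 1e3:.1f} ms "
      f"(car moves {car_speed_m_s * ultrasonic_s:.2f} m meanwhile)")
print(f"radar echo from 100 m: {radar_s * 1e9:.0f} ns")
# ultrasonic echo from 5 m: 29.2 ms (car moves 0.49 m meanwhile)
# radar echo from 100 m: 667 ns
```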

However, millimeter-wave radar also has significant shortcomings. Most current millimeter-wave radars detect essentially two kinds of information:

- The horizontal XY coordinates of the object

- The object’s speed along the radar’s line of sight (radial velocity)

In other words, most millimeter-wave radars cannot reliably detect an object’s height (Z-axis) or its lateral speed. If a high-chassis semi-trailer is crossing the road ahead, the radar may therefore fail to provide complete enough information or a timely warning.
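Why can a crossing trailer slip past? Doppler-based radar measures only the radial component of relative velocity, the part pointing straight toward or away from the sensor. The sketch below is our own simplified 2D model with hypothetical names (it ignores angle tracking across frames, which some radars use to infer lateral motion), and it shows that an object crossing directly ahead produces essentially no radial velocity:

```python
import math


def radial_velocity(obj_x: float, obj_y: float, vel_x: float, vel_y: float) -> float:
    """Component of an object's relative velocity along the radar's line of sight.

    The radar sits at the origin; x points forward and y to the left.
    Negative values mean the object is approaching.
    """
    distance = math.hypot(obj_x, obj_y)
    # Dot product with the unit vector pointing from the radar to the object.
    return (vel_x * obj_x + vel_y * obj_y) / distance


# A trailer 50 m straight ahead, crossing from left to right at 10 m/s.
print(radial_velocity(50.0, 0.0, 0.0, -10.0))   # 0.0: the crossing motion is invisible
# A car 50 m straight ahead, closing in at 10 m/s.
print(radial_velocity(50.0, 0.0, -10.0, 0.0))   # -10.0
```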

But LiDAR can.

LiDAR, short for Light Detection and Ranging, measures distance by emitting laser light and receiving its reflections; like millimeter-wave radar, its waves travel at the speed of light. Compared with the previous two types of sensors, LiDAR can provide comprehensive 3D information about the road environment: not only the 3D coordinates of detected objects, but also the normal vectors of reflecting surfaces and the Vx and Vy velocity components of detected objects. In practical terms, LiDAR can identify an object’s 3D position and also obtain its orientation, speed, and heading, all calculated directly from the point cloud data it measures. The data is usable as-is, offering both real-time performance and high precision.
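The sketch below illustrates, under simplifying assumptions of our own, how such quantities can be derived from raw returns: a pulsed time-of-flight range, a spherical-to-Cartesian conversion (x forward, y left, z up), a surface normal from three neighboring points, and a velocity estimate from the shift of an object’s point-cluster centroid between two frames. All function names are hypothetical, and production systems use more robust methods (plane fitting over many points, multi-frame object tracking) rather than these bare formulas.

```python
import math
from dataclasses import dataclass

SPEED_OF_LIGHT_M_S = 299_792_458.0


@dataclass
class Point3D:
    x: float  # forward, meters
    y: float  # left, meters
    z: float  # up, meters


def lidar_return_to_point(tof_s: float, azimuth_deg: float, elevation_deg: float) -> Point3D:
    """Convert one laser return (round-trip time plus beam angles) into a 3D point."""
    r = SPEED_OF_LIGHT_M_S * tof_s / 2.0   # half the round-trip path is the range
    az, el = math.radians(azimuth_deg), math.radians(elevation_deg)
    return Point3D(r * math.cos(el) * math.cos(az),
                   r * math.cos(el) * math.sin(az),
                   r * math.sin(el))


def surface_normal(a: Point3D, b: Point3D, c: Point3D) -> tuple[float, float, float]:
    """Unit normal of the plane through three neighboring points (sign depends on point order)."""
    u = (b.x - a.x, b.y - a.y, b.z - a.z)
    v = (c.x - a.x, c.y - a.y, c.z - a.z)
    # Cross product u x v is perpendicular to the local surface patch.
    n = (u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0])
    length = math.sqrt(sum(k * k for k in n))
    return (n[0] / length, n[1] / length, n[2] / length)


def centroid_velocity(prev: list[Point3D], curr: list[Point3D], dt_s: float) -> tuple[float, float]:
    """Estimate (Vx, Vy) of one object from its point cluster in two consecutive frames."""
    def centroid(points: list[Point3D]) -> tuple[float, float]:
        n = len(points)
        return sum(p.x for p in points) / n, sum(p.y for p in points) / n

    (x0, y0), (x1, y1) = centroid(prev), centroid(curr)
    return (x1 - x0) / dt_s, (y1 - y0) / dt_s


# A return that took the round-trip time for 100 m, straight ahead.
p = lidar_return_to_point(2 * 100.0 / SPEED_OF_LIGHT_M_S, 0.0, 0.0)
print(round(p.x, 6), round(p.y, 6), round(p.z, 6))   # 100.0 0.0 0.0
```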

LiDAR is therefore well suited to high-precision 3D measurement, and it is undoubtedly a powerful complement to visual perception, which struggles to capture 3D information. This is why Li Bin said “autonomous driving needs LiDAR.”

The Innovusion LiDAR on the NIO ET7, announced at NIO Day, is equivalent to a 300-line unit, with a 120-degree horizontal FOV, a maximum angular resolution of 0.06 degrees (horizontal) x 0.06 degrees (vertical), and a maximum detection distance of 500 m, all fairly strong figures. It also uses the more eye-friendly 1,550 nm wavelength, which is absorbed before it reaches the retina, so the LiDAR can operate at high power without endangering pedestrians’ eyes.
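Angular resolution determines how densely an object is sampled at range. Assuming the quoted 0.06° best-case resolution applies in both axes at the point of interest (scanners often reach their finest resolution only within part of the field of view), the spacing between neighboring points grows linearly with distance. The function names are hypothetical:

```python
import math


def point_spacing_m(distance_m: float, angular_resolution_deg: float) -> float:
    """Gap between adjacent laser beams at a given range."""
    return distance_m * math.tan(math.radians(angular_resolution_deg))


def points_across(target_size_m: float, distance_m: float, angular_resolution_deg: float) -> float:
    """Approximate number of beams that land across a target of the given size."""
    return target_size_m / point_spacing_m(distance_m, angular_resolution_deg)


for d in (50.0, 200.0, 500.0):
    print(f"{d:.0f} m: spacing {point_spacing_m(d, 0.06):.3f} m")
# 50 m: spacing 0.052 m
# 200 m: spacing 0.209 m
# 500 m: spacing 0.524 m
```

At 200 m, a pedestrian about 0.5 m wide and 1.7 m tall (illustrative dimensions) would receive only about 2 beams across and 8 rows high, which hints at why detection range for small objects is quoted separately from the maximum range.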

I later checked Innovusion’s official website. The announced specifications do not match either of its two listed products, the Jaguar 65 and Jaguar 100, both marked “coming soon.” What caught my attention was the more highly integrated Falcon LiDAR. The official press release explicitly states that the ET7 will be equipped with this LiDAR and that, in operation, it can detect small objects ahead of the car, such as pedestrians and road obstacles, at up to 200 m.

However, objectively speaking, powerful as LiDAR is, it is not omnipotent. One glaring problem is that although it provides comprehensive and accurate 3D spatial information, its point cloud shows only object contours and lacks color and texture, let alone surface gloss. Whether directly or indirectly, it is therefore difficult for LiDAR to know “what is being scanned.”

Without this kind of information, the system clearly cannot make proper driving decisions. In the usual fusion approach, vision is mainly responsible for identifying roads and the various objects in the environment, while a ranging sensor handles spatial position and object speed, and the two streams are fused and matched. The difference is that most other companies use millimeter-wave radar in this role, whereas NIO uses LiDAR, which offers higher accuracy and richer information.
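One common way to combine the two is “late fusion”: take the object classes the vision model finds in the image and attach them to the LiDAR point clusters that project into the same image region. The sketch below is our own simplified illustration, not NIO’s actual pipeline. It assumes the LiDAR points have already been transformed into the camera’s coordinate frame (extrinsic calibration done) and uses hypothetical pinhole intrinsics fx, fy, cx, cy:

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class CameraDetection:
    label: str     # class predicted by the vision model, e.g. "pedestrian"
    u_min: float   # bounding box in pixel coordinates
    v_min: float
    u_max: float
    v_max: float


def project(point: tuple[float, float, float],
            fx: float, fy: float, cx: float, cy: float) -> Optional[tuple[float, float]]:
    """Pinhole projection of a point in camera coordinates (x right, y down, z forward)."""
    x, y, z = point
    if z <= 0:
        return None  # behind the camera, cannot appear in the image
    return fx * x / z + cx, fy * y / z + cy


def label_cluster(cluster: list[tuple[float, float, float]],
                  detections: list[CameraDetection],
                  fx: float, fy: float, cx: float, cy: float) -> str:
    """Give a LiDAR point cluster the camera label whose box contains most of its points."""
    votes = Counter()
    for point in cluster:
        uv = project(point, fx, fy, cx, cy)
        if uv is None:
            continue
        u, v = uv
        for det in detections:
            if det.u_min <= u <= det.u_max and det.v_min <= v <= det.v_max:
                votes[det.label] += 1
    return votes.most_common(1)[0][0] if votes else "unknown"


# A small cluster of LiDAR points 20 m ahead, already in the camera frame.
cluster = [(x, y, 20.0) for x in (-0.2, 0.0, 0.2) for y in (-0.5, 0.0, 0.5)]
detections = [CameraDetection("pedestrian", 940, 500, 980, 600),
              CameraDetection("car", 1200, 400, 1500, 700)]
print(label_cluster(cluster, detections, fx=1000, fy=1000, cx=960, cy=540))  # pedestrian
```

Here the LiDAR side contributes the cluster’s precise 3D position, and the camera side contributes the label “pedestrian,” which the point cloud alone cannot supply.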

Beyond that, NIO NAD has another ace up its sleeve.

Image Processing: Are 4 N Cards Enough?

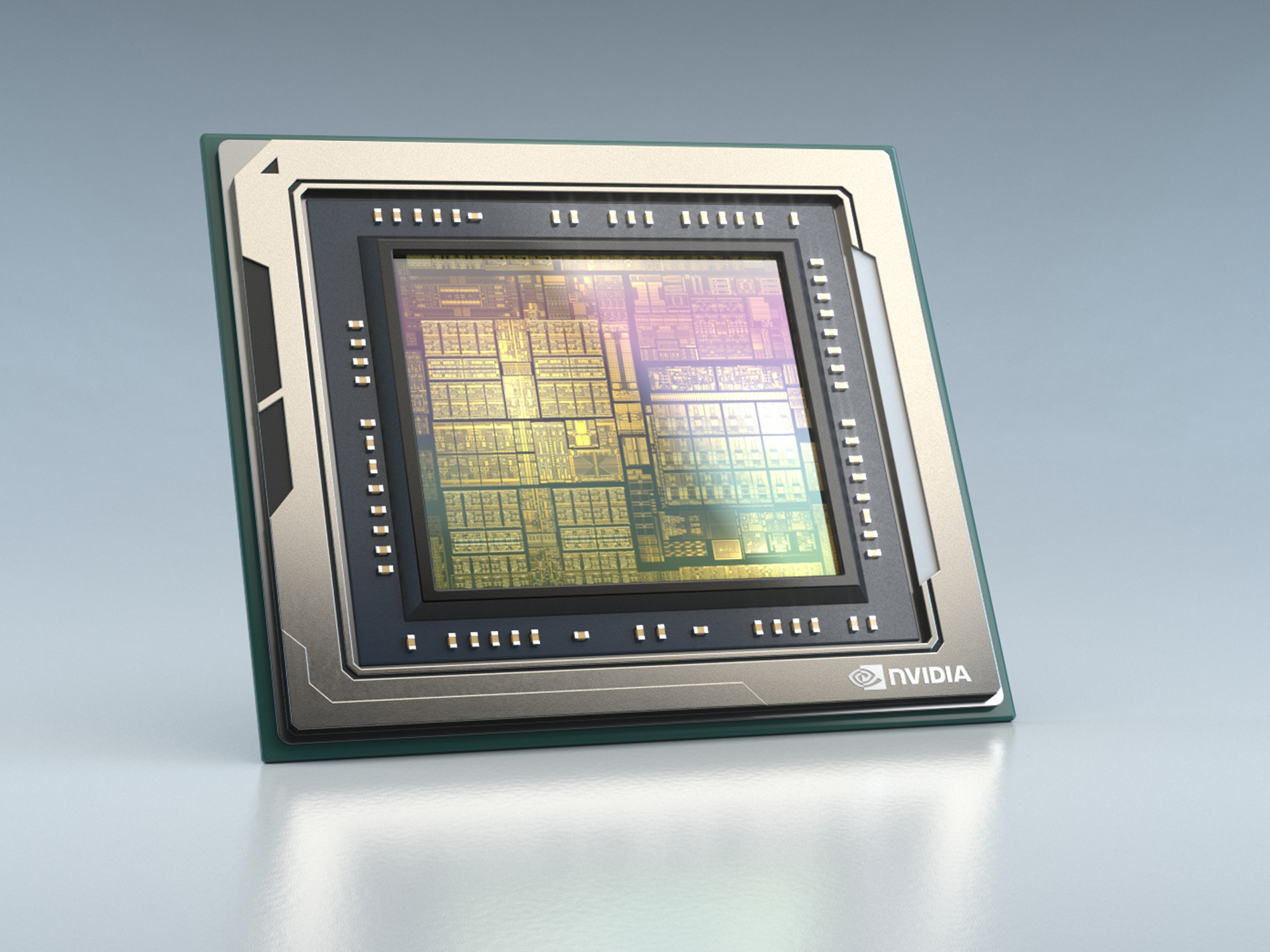

NIO NAD’s rich sensing layer gives the vehicle diverse and comprehensive perception information for autonomous driving, but it also generates image data at a rate of 8 GB/s, so processing that data requires powerful hardware.

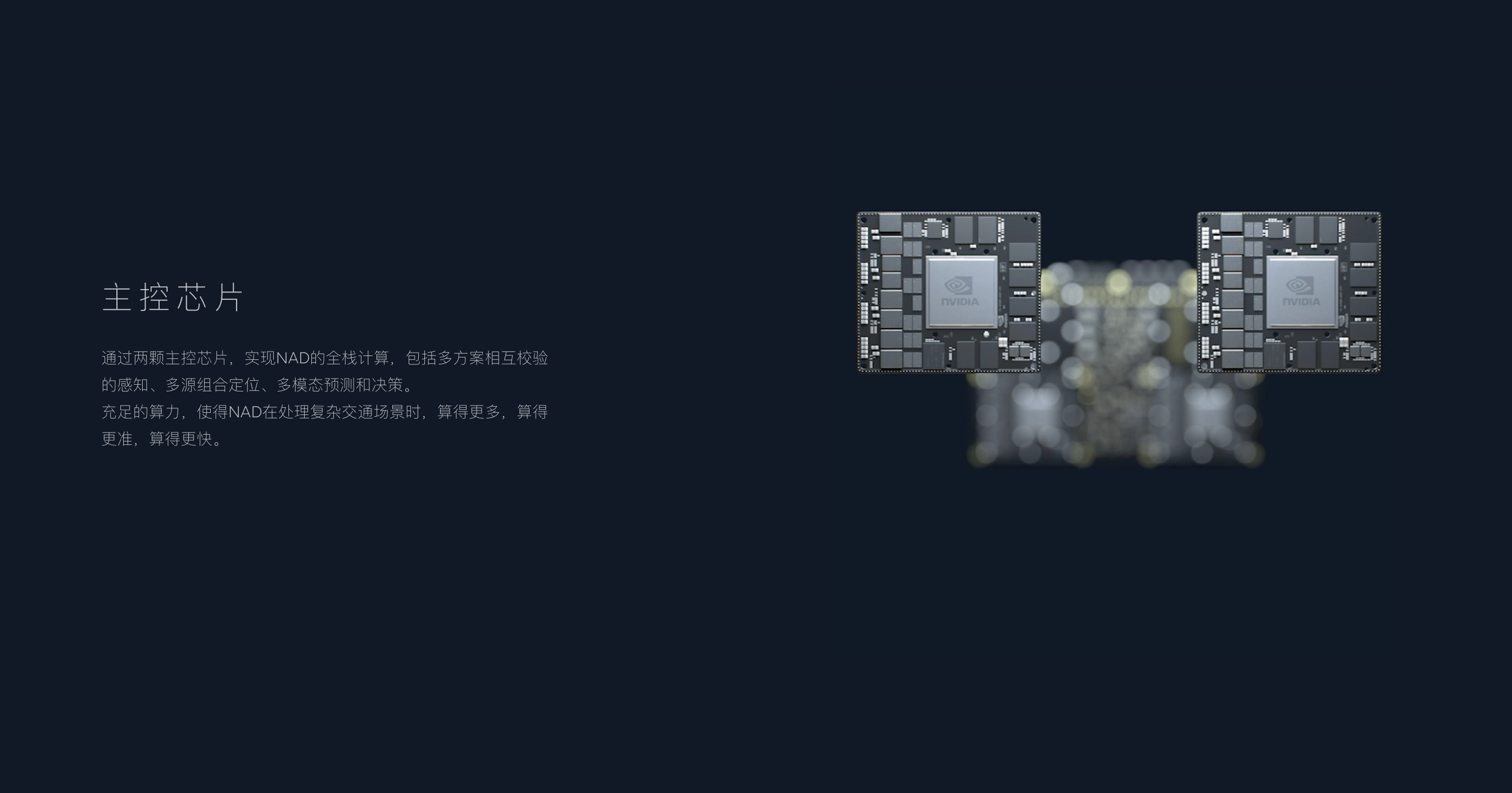

NIO’s answer is four “N cards” (NVIDIA chips): the ADAM computing platform combines four NVIDIA Orin chips for a total computing power of 1,016 TOPS. By comparison, NVIDIA’s current Xavier chip delivers 30 TOPS, and the most powerful setup currently on the market is the pair of HW 3.0 chips in Tesla’s cars, with a combined 144 TOPS.

The Triple Use of ADAM’s Four Chips

In terms of computing power allocation, one of Tesla’s HW 3.0 chips contains two 36 TOPS neural processors. Each of these neural processors receives one of two copies of the processed sensor data and computes a driving decision from it. The system compares the two results and outputs a driving decision only when they agree closely enough.
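The cross-check described above can be sketched as follows. The decision fields, the tolerances, and the choice to return nothing on disagreement are our own hypothetical simplifications; this is not Tesla’s actual code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DrivingDecision:
    steering_deg: float   # requested steering-wheel angle
    accel_m_s2: float     # requested acceleration (negative = braking)


def cross_check(a: DrivingDecision, b: DrivingDecision,
                max_steering_diff_deg: float = 0.5,
                max_accel_diff_m_s2: float = 0.2) -> Optional[DrivingDecision]:
    """Release a decision only when two independently computed results agree."""
    consistent = (abs(a.steering_deg - b.steering_deg) <= max_steering_diff_deg
                  and abs(a.accel_m_s2 - b.accel_m_s2) <= max_accel_diff_m_s2)
    # On disagreement, return None so the caller can fall back to a safe behavior.
    return a if consistent else None


print(cross_check(DrivingDecision(2.0, 0.5), DrivingDecision(2.3, 0.6)))
print(cross_check(DrivingDecision(2.0, 0.5), DrivingDecision(8.0, 0.5)))  # None
```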

Tesla uses the other HW 3.0 chip for several purposes. It serves as a redundant backup in case of chip failure, and Tesla also uses it to run beta software in “shadow mode,” where the software’s outputs are computed but never executed. When those outputs deviate significantly from what actually happens on the road, the system flags the data for that road segment and sends it back to the cloud.

In NIO’s ADAM platform, two of the four Orin chips are main control chips responsible for NAD’s full-stack computing, including multimodal prediction and decision-making, multi-source fused positioning, and cross-verification of multiple solutions. Of the remaining two Orin chips, one provides redundant safety backup and the other is dedicated to group intelligence and personalized training for autonomous driving.
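The shadow-mode idea in the Tesla description above boils down to comparing what the software would have done with what actually happened, and uploading only the interesting cases. A minimal sketch, assuming a single steering-angle comparison and a hypothetical 10-degree threshold (the real criteria are not described in the source):

```python
from dataclasses import dataclass


@dataclass
class ShadowSample:
    segment_id: str              # identifier of the road segment (hypothetical)
    driver_steering_deg: float   # what the human driver actually did
    shadow_steering_deg: float   # what the beta software would have done (not executed)


def segments_to_upload(samples: list[ShadowSample], threshold_deg: float = 10.0) -> list[str]:
    """Return road segments where shadow-mode output deviates strongly from the driver."""
    flagged: list[str] = []
    for s in samples:
        deviation = abs(s.shadow_steering_deg - s.driver_steering_deg)
        if deviation > threshold_deg and s.segment_id not in flagged:
            flagged.append(s.segment_id)  # keep first-seen order, no duplicates
    return flagged


samples = [ShadowSample("A", 0.0, 1.0),
           ShadowSample("B", -15.0, 5.0),
           ShadowSample("B", -14.0, 4.0),
           ShadowSample("C", 3.0, 2.0)]
print(segments_to_upload(samples))  # ['B']
```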

It’s not hard to see that the ADAM platform’s design resembles Tesla’s: both have units for computation and cross-verification, backup and redundancy units, and units for AI training. The difference is that ADAM offers more than 7 times Tesla’s computing power, both in total (1,016 vs. 144 TOPS) and per unit (one 254 TOPS Orin vs. one 36 TOPS neural processor).
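The arithmetic behind these ratios, using only the figures quoted in this article:

```python
ORIN_TOPS = 254          # one NVIDIA Orin chip
TESLA_NPU_TOPS = 36      # one neural processor on a Tesla HW 3.0 chip
NPUS_PER_TESLA_CHIP = 2

adam_total = 4 * ORIN_TOPS                                  # four Orin chips
tesla_total = 2 * NPUS_PER_TESLA_CHIP * TESLA_NPU_TOPS      # two HW 3.0 chips

print(f"ADAM total: {adam_total} TOPS, Tesla HW 3.0 total: {tesla_total} TOPS")
print(f"total ratio: {adam_total / tesla_total:.2f}x")         # 7.06x
print(f"per-unit ratio: {ORIN_TOPS / TESLA_NPU_TOPS:.2f}x")    # 7.06x
```

Both ratios come out at about 7.06 because 1,016 = 4 × 254 and 144 = 4 × 36. Note that the per-unit comparison pits a whole Orin chip against a single neural processor; chip against chip (254 vs. 72 TOPS), the ratio would be about 3.5.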

Seeing the staggering computing power of four Orin chips, some may dismiss it as NIO’s usual “spec-stacking” strategy. However, autonomous driving’s demand for computing power is growing exponentially, so in this industry “too much performance” is a false premise. High performance also means a longer product life cycle, which benefits both users and NIO, a company that has always emphasized putting users first.

NIO’s approach also accelerates the industry’s development, pushing iterative autonomous driving technologies into mass production, with consumers as the beneficiaries.

Moreover, measured against Tesla’s approach, 1,016 TOPS is not overkill.

Each of the two neural processors on a Tesla HW 3.0 chip has 36 TOPS and processes image data from eight 1.2-megapixel cameras, while the NIO ET7 has eleven 8-megapixel cameras.

Because image algorithms operate on pixels, at the same frame rate the ET7’s cameras generate more than nine times as much data as Tesla’s in the same amount of time. The two main Orin chips in ADAM likewise perform dual-verification computing, and each has 254 TOPS, just over seven times a single neural processor on the HW 3.0 chip.
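A quick check of this argument, assuming (as the article does) equal frame rates and that each main processor handles the full camera feed: compare the pixels per frame, then the computing power available per megapixel.

```python
TESLA_CAMERAS, TESLA_MEGAPIXELS = 8, 1.2
NIO_CAMERAS, NIO_MEGAPIXELS = 11, 8.0
TESLA_NPU_TOPS, ORIN_TOPS = 36, 254

tesla_mp_per_frame = TESLA_CAMERAS * TESLA_MEGAPIXELS   # 9.6 MP across all cameras
nio_mp_per_frame = NIO_CAMERAS * NIO_MEGAPIXELS         # 88 MP across all cameras

print(f"pixel ratio: {nio_mp_per_frame / tesla_mp_per_frame:.2f}x")        # 9.17x
print(f"Tesla: {TESLA_NPU_TOPS / tesla_mp_per_frame:.2f} TOPS per MP")     # 3.75
print(f"NIO:   {ORIN_TOPS / nio_mp_per_frame:.2f} TOPS per MP")            # 2.89
```

Under these assumptions, each main Orin actually has somewhat less computing power per megapixel than a Tesla neural processor, which supports the point that 1,016 TOPS is not excessive for this camera setup.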

In comparison, NIO’s configuration is reasonable.

Is NIO on Solid Ground This Time?

So, with vision, LiDAR, and a high-performance computing platform combined, is NIO’s NAD on solid ground?

In my opinion, NIO has made a good start and has laid a solid foundation in the key hardware: perception and the computing platform. Beyond computing power, NIO has also built redundancy into other key elements of autonomous driving: a redundant power supply for the core controller, redundant steering, redundant braking, and power-system redundancy from the dual motors. As Li Bin said, “every improvement in safety is worth the effort.”

However, software and algorithms remain. For the ET7, with its excellent “physical aptitude,” to achieve autonomous driving, it must learn how to recognize the world and how to drive. Judging by the Orin chip that ADAM dedicates to group intelligence and personalized training, NIO seems well prepared.

But having switched from Mobileye to NVIDIA, NIO’s autonomous driving R&D center in San Jose, in Silicon Valley, has a busy year ahead before the new cars are delivered.

By the way, NIO NAD also comes with a very “NIO” pricing model: the hardware is standard, and the features are offered as a subscription at 680 yuan per month. Once you spend 64,000 yuan on Tesla FSD, there is no taking it back; but if NIO NAD disappoints, users can simply cancel the subscription the following month. With the hardware already fitted as standard, this approach takes real courage, and it also demonstrates NIO’s determination to perfect NAD.
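For a sense of scale, here is the break-even point between the two pricing models, using the prices quoted above and ignoring price changes, discounts, and the time value of money:

```python
FSD_PRICE_YUAN = 64_000    # one-time Tesla FSD price quoted above
NAD_MONTHLY_YUAN = 680     # NIO NAD subscription price quoted above


def break_even_months(one_time_price: float, monthly_fee: float) -> float:
    """Months of subscription that add up to the one-time purchase price."""
    return one_time_price / monthly_fee


months = break_even_months(FSD_PRICE_YUAN, NAD_MONTHLY_YUAN)
print(f"{months:.1f} months, about {months / 12:.1f} years")  # 94.1 months, about 7.8 years
```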

Finally, I cannot say NIO NAD will be the strongest autonomous driving system when it launches, but with one LiDAR and four N cards, the ET7’s autonomous driving capability is on solid footing and its potential is enormous. At 680 yuan per month, it is also likely to be the most affordable and most subscribed autonomous driving system.

On these two points, NIO is indeed at the forefront of the industry, and I believe this should not be the end of surprises.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.